This article will classify the different ways in which Crypto+AI may intersect and explore the prospects and challenges of each classification.

Author: Vitalik Buterin

Translation: Karen, Foresight News

Special thanks to the feedback and discussions from the Worldcoin and Modulus Labs teams, Xinyuan Sun, Martin Koeppelmann, and Illia Polosukhin.

For many years, people have asked me a question: "Where is the most effective intersection between cryptocurrency and AI?" This is a reasonable question: cryptocurrency and AI are the two main deep (software) technology trends of the past decade, and it feels like there must be some connection between the two.

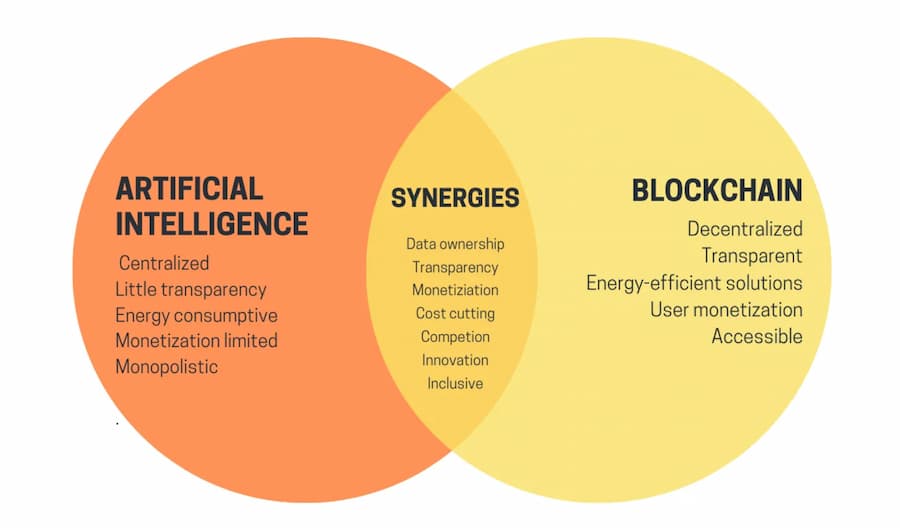

At first glance, it is easy to find synergies between the two: the decentralization of cryptocurrency can balance the centralization of AI; AI is opaque, and cryptocurrency can bring transparency; AI needs data, and blockchains are good at storing and tracking data. But over the years, when people asked me to dig deeper and name specific applications, my answer has been somewhat disappointing: "Yes, there are indeed some applications worth exploring, but not many."

In the past three years, with the rise of much more powerful AI in the form of modern LLMs (large language models), and of much more powerful cryptographic technology in the form of not only blockchain scaling solutions but also zero-knowledge proofs, fully homomorphic encryption, and (two-party and multi-party) secure computation, I have begun to see this change. There are indeed some promising applications of AI within the blockchain ecosystem, or of AI combined with cryptography, although caution is needed in how AI is applied. A particular challenge is that in cryptography, openness is the only way to make something truly secure, but in AI, making a model (or even its training data) open greatly increases its vulnerability to adversarial machine learning attacks. This article will classify the different ways in which Crypto+AI may intersect and explore the prospects and challenges of each classification.

Summary of the intersection points of Crypto+AI from the uETH blog article. But how can these synergies be truly realized in specific applications?

Four Major Intersection Points of Crypto+AI

AI is a very broad concept: you can view AI as a set of algorithms that are created not by specifying them explicitly, but by stirring a large computational soup and applying some optimization pressure that nudges the soup toward producing algorithms with the properties you want.

This description should not be underestimated, as it includes the process that created us humans! But it also means that AI algorithms share some common features: they are extremely capable, while our ability to know or understand what is going on inside them is limited.

There are many ways to classify artificial intelligence. For the interactions between AI and blockchains discussed in this article (following the "game" framing of Virgil Griffith's article "Ethereum is game-changing technology, literally"), I will classify them as follows:

- AI as a participant in the game (highest feasibility): In the mechanism where AI participates, the ultimate source of incentives comes from human input into the protocol.

- AI as a game interface (great potential, but with risks): AI helps users understand the surrounding crypto world and ensures that their actions (such as signed messages and transactions) align with their intentions to avoid deception or fraud.

- AI as game rules (requires extreme caution): Blockchains, DAOs, and similar mechanisms directly invoke AI. For example, an "AI judge."

- AI as a game goal (long-term and interesting): Designing blockchains, DAOs, and similar mechanisms with the goal of building and maintaining an AI that can be used for other purposes, using cryptographic techniques either to better incentivize training or to prevent the AI from leaking private data or being abused.

Let's review each one by one.

AI as a Game Participant

In fact, this category has existed for almost a decade, at least since on-chain decentralized exchanges (DEXes) came into widespread use. Whenever there is an exchange, there is an opportunity to make money through arbitrage, and bots can arbitrage better than humans.

This use case has been around for a long time, even with much simpler AI than we have today, but it is a real intersection of AI and cryptocurrency. Recently, we have often seen MEV (Maximal Extractable Value) arbitrage bots exploiting each other. Any blockchain application involving auctions or trades will have arbitrage bots.

However, AI arbitrage bots are just the first example of a much larger category, which I expect will soon include many other applications. Let's take a look at AIOmen, a demo of a prediction market in which AIs are participants:
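To make the arbitrage idea concrete, here is a minimal sketch of a bot comparing two exchanges. It assumes both are constant-product pools (x * y = k) with a 0.3% fee; the `Pool` class, `arbitrage_profit`, and `best_trade` are hypothetical names for illustration, and real bots also have to account for gas costs and competition from other bots.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """Hypothetical constant-product pool holding reserves of tokens X and Y."""
    x: float
    y: float
    fee: float = 0.003  # assumed 0.3% swap fee

    def buy_x(self, dy: float) -> float:
        """Pay dy of Y into the pool and receive X out of it."""
        dy_eff = dy * (1 - self.fee)
        dx = self.x * dy_eff / (self.y + dy_eff)
        self.x -= dx
        self.y += dy
        return dx

    def sell_x(self, dx: float) -> float:
        """Pay dx of X into the pool and receive Y out of it."""
        dx_eff = dx * (1 - self.fee)
        dy = self.y * dx_eff / (self.x + dx_eff)
        self.x += dx
        self.y -= dy
        return dy


def arbitrage_profit(cheap: Pool, dear: Pool, dy_in: float) -> float:
    """Profit in Y from buying X in `cheap` and selling it in `dear`.

    Works on copies, so the caller's pools are left untouched.
    """
    a = Pool(cheap.x, cheap.y, cheap.fee)
    b = Pool(dear.x, dear.y, dear.fee)
    return b.sell_x(a.buy_x(dy_in)) - dy_in


def best_trade(cheap: Pool, dear: Pool, max_in: float = 200.0, steps: int = 200):
    """Crude grid search for the most profitable trade size."""
    sizes = [max_in * i / steps for i in range(1, steps + 1)]
    best = max(sizes, key=lambda dy: arbitrage_profit(cheap, dear, dy))
    return best, arbitrage_profit(cheap, dear, best)
```

With X priced at 1.0 Y in one pool and 1.1 Y in the other, a small trade is profitable; between two identical pools the same trade loses money to fees, which is why such bots only act when prices diverge.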

Prediction markets have long been the holy grail of epistemics technology. As early as 2014, I was excited about using prediction markets as inputs for governance ("futarchy"). But so far, prediction markets have not made much progress in practice, for many reasons: the biggest participants are often irrational, people with correct knowledge are unwilling to spend time and bet unless there is a lot of money involved, markets are usually not active enough, and so on.

One response to this is to point out the user experience improvements being made by Polymarket or other new prediction markets, and hope that they can continuously improve and succeed where previous iterations have failed. People are willing to bet hundreds of billions of dollars on sports, so why don't people invest enough money to bet on the US election or LK99, and get serious players involved? But this argument must face a fact that previous versions have not achieved this scale (at least compared to the dreams of its supporters), so it seems that some new elements are needed to make prediction markets successful. Therefore, another response is to point out a specific feature of the prediction market ecosystem, which we can expect to see in the 2020s, but did not see in the 2010s: the widespread involvement of AI.

AIs are willing and able to work for less than $1 per hour and have encyclopedic knowledge. If that's not enough, they can even be integrated with real-time web search. If you create a market and provide a $50 liquidity subsidy, humans won't care enough to bid, but thousands of AIs will flock in and make their best guesses.
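One way to picture a "$50 liquidity subsidy" is an automated market maker whose worst-case loss is capped at the subsidy. The sketch below assumes a logarithmic market scoring rule (LMSR), which the article does not name; `LMSRMarket` is a hypothetical class, and the liquidity parameter `b` is chosen so that the maximum loss `b * ln(outcomes)` equals the subsidy.

```python
import math


class LMSRMarket:
    """Toy LMSR market maker (an assumed mechanism, not one named in the article)."""

    def __init__(self, subsidy: float, outcomes: int = 2):
        # Worst-case loss of an LMSR maker is b * ln(outcomes), so this
        # choice of b caps the sponsor's loss at exactly `subsidy`.
        self.b = subsidy / math.log(outcomes)
        self.q = [0.0] * outcomes  # shares sold so far, per outcome

    def _cost(self, q) -> float:
        # C(q) = b * ln(sum_i exp(q_i / b)), computed stably via log-sum-exp.
        m = max(q)
        return m + self.b * math.log(sum(math.exp((x - m) / self.b) for x in q))

    def price(self, i: int) -> float:
        """Current price of outcome i, readable as the market's probability."""
        m = max(self.q)
        weights = [math.exp((x - m) / self.b) for x in self.q]
        return weights[i] / sum(weights)

    def buy(self, i: int, shares: float) -> float:
        """Buy `shares` of outcome i (each pays 1 if i occurs); returns the cost."""
        new_q = list(self.q)
        new_q[i] += shares
        paid = self._cost(new_q) - self._cost(self.q)
        self.q = new_q
        return paid
```

A bot that believes outcome 0 is underpriced buys until `price(0)` matches its estimate. However much is bought, the sponsor's loss stays bounded by the subsidy, which is what makes many tiny markets affordable.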

The incentive to do a good job on any one question may be small, but the incentive to build an AI that makes good predictions in general may be in the millions. Note that you don't even need humans to adjudicate most questions: you can use a multi-round dispute system similar to Augur or Kleros, where AIs also participate in the early rounds. Humans only need to respond in the few cases where a series of escalations has occurred and both sides have committed a lot of money.
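The escalation logic can be sketched as a simple state machine. This is an illustrative toy, not the actual Augur or Kleros protocol: the doubling bond schedule, the `human_threshold` parameter, and the `Dispute` class are all assumptions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Dispute:
    """Toy escalation game: each challenge must double the previous bond."""
    initial_bond: float = 1.0
    human_threshold: float = 1000.0  # assumed total stake that triggers human review
    outcome: bool = True             # answer currently considered winning
    bonds: List[float] = field(default_factory=list)

    def next_bond(self) -> float:
        """Minimum bond required for the next challenge."""
        return self.initial_bond * 2 ** len(self.bonds)

    def challenge(self, bond: float) -> None:
        """Flip the tentative outcome by posting a large enough bond."""
        if bond < self.next_bond():
            raise ValueError("bond too small for this round")
        self.bonds.append(bond)
        self.outcome = not self.outcome

    def needs_humans(self) -> bool:
        """Humans step in only once enough money is at stake."""
        return sum(self.bonds) >= self.human_threshold
```

Under these assumed parameters, nine rounds of bot-versus-bot challenges stake 511 units in total and never reach a human; the tenth round pushes the total to 1023 and triggers human review.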

This is a powerful primitive, because once "prediction markets" can function at such a micro scale, you can repeat the "prediction market" primitive for many other types of problems, such as:

- According to the terms of use, is this social media post acceptable?

- What will happen to the price of stock X (e.g., see Numerai)?

- Is the account currently messaging me really Elon Musk?

- Is the work submitted to an online task marketplace acceptable?

- Is the DApp on https://examplefinance.network a scam?

- Is 0x1b54….98c3 the ERC20 token address for "Casinu Inu"?

You may notice that many of these ideas are moving towards what I referred to earlier as "info defense." In a broad sense, the question is: how do we help users distinguish between true and false information and identify fraudulent behavior without giving a centralized authority the power to decide right from wrong, to prevent abuse of that power? At a micro level, the answer can be "AI."

But at a macro level, the question is: who builds the AI? AI is a reflection of its creation process, and therefore inevitably carries biases. A higher-level game is needed to judge the performance of different AIs, allowing AI to participate as players in the game.

This use of AI, where AIs participate in a mechanism and are ultimately rewarded or penalized (probabilistically) by humans through an on-chain mechanism, is something I believe is worth researching. Now is the right time to look more deeply into such use cases, as blockchain scaling is finally succeeding, making "micro" versions of things that were often infeasible on-chain now feasible.

A related class of applications is moving towards highly autonomous agents, using blockchain to cooperate better, whether through payments or making trustworthy commitments using smart contracts.

AI as a Game Interface

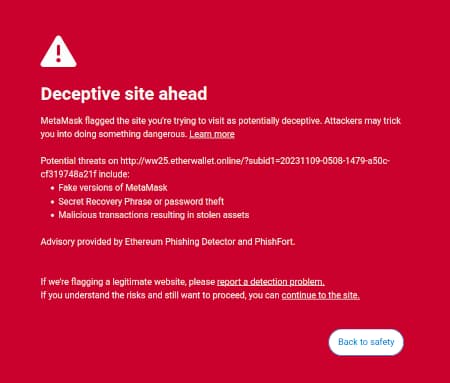

An idea I proposed in "My techno-optimism" is that there is a market opportunity for user-facing software that can protect users' interests by explaining and identifying dangers in the online world they are browsing. MetaMask's scam detection feature is an existing example.

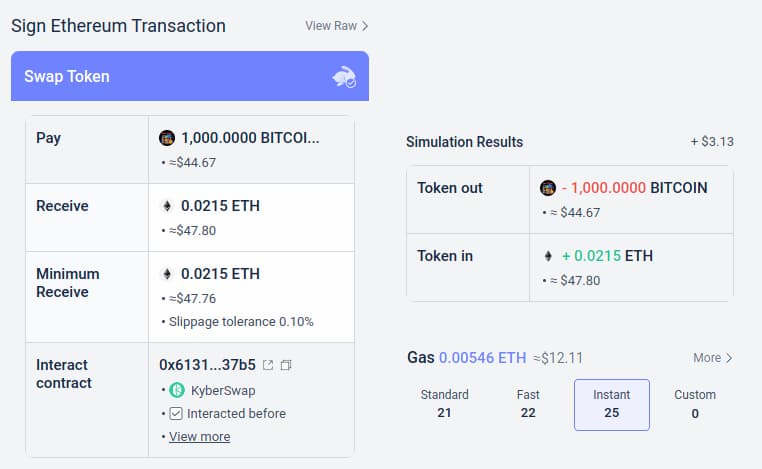

Another example is the simulation feature of the Rabby wallet, which shows users the expected outcome of the transactions they are about to sign.

These tools have the potential to be enhanced by AI. AI can provide richer, more human-understandable explanations of what kind of DApp you are engaging with, the consequences of more complex operations you are about to sign, whether a specific token is genuine (e.g., BITCOIN is not just a string of characters, it is the name of a real cryptocurrency, it is not an ERC20 token, and its price is far higher than $0.045), and more. Some projects are going all the way in this direction (e.g., the LangChain wallet, which uses AI as its primary interface). In my opinion, pure AI interfaces probably carry too much risk at the moment, as they increase the risk of other kinds of errors, but AI complementing a more traditional interface is becoming very feasible.

There is a specific risk worth mentioning. I will discuss this in more detail in the "AI as a Game Rule" section below, but the overall issue is adversarial machine learning: if a user has an AI assistant in an open-source wallet, then bad actors also have access to that AI assistant, giving them unlimited opportunities to optimize their scams so that they bypass the wallet's defenses. All modern AIs have bugs somewhere, and it is not too hard for a training process, even one with only limited access to the model, to find them.

This is where "AI participating in on-chain micro markets" works better: each individual AI faces the same risks, but you are intentionally creating an open ecosystem in which dozens of people continually iterate on and improve them.

Furthermore, each individual AI is closed: the security of the system comes from the openness of the game rules, not from the internal operations of each participant.

In summary: AI can help users understand what is happening in plain language, act as a real-time tutor, and protect users from mistakes, but caution is needed when using it directly against malicious deceivers and scammers.

AI as a Game Rule

Now, let's discuss the application that many people are excited about, but that I believe is the riskiest and where we need to tread most carefully: what I call AI as part of the game rules. This is related to the excitement among mainstream political elites about "AI judges" (e.g., as seen on the World Government Summit website), and similar desires exist in blockchain applications. If a blockchain-based smart contract or DAO needs to make a subjective decision, can an AI simply become part of the contract or DAO to help enforce those rules?

This is where adversarial machine learning will be an extremely challenging issue. Here is a simple argument:

- If a key AI model in a mechanism is closed, you cannot verify its internal operations, so it is no better than centralized applications.

- If the AI model is open, attackers can download and simulate it locally, design highly optimized attacks to deceive the model, and then replay those attacks on the live network.

Example of adversarial machine learning. Source: researchgate.net
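A minimal sketch of why an open model is easy to attack: with the weights in hand, an attacker can compute exactly which direction to nudge each input feature. The example uses a tiny logistic "scam detector" with made-up weights and a fast-gradient-sign style perturbation; the model, its features, and the numbers are all hypothetical.

```python
import math
from typing import List


def predict(w: List[float], b: float, x: List[float]) -> float:
    """Probability that input x is flagged, under a logistic model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def evade(w: List[float], x: List[float], eps: float) -> List[float]:
    """White-box attack: move each feature by eps against the gradient.

    For a logistic model the gradient of the logit with respect to x is
    just w, so knowing w tells the attacker the best direction exactly.
    """
    return [xi - eps * sign(wi) for xi, wi in zip(x, w)]


# Hypothetical published weights and a malicious input that is flagged.
W = [2.0, -1.5, 0.5]
B = -0.2
x_scam = [0.6, 0.2, 0.4]
x_adv = evade(W, x_scam, eps=0.3)
```

Here `predict(W, B, x_scam)` is about 0.71 (flagged), while `predict(W, B, x_adv)` is about 0.43 (passes), even though no feature moved by more than 0.3. Real models are far larger, but the attacker's advantage from seeing the weights is of the same kind.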

Now, frequent readers of this blog (or natives of the crypto world) may already be getting ahead of me and thinking: but wait!

We have advanced zero-knowledge proofs and other very cool forms of cryptography. Surely we can perform some cryptographic magic to hide the internal operations of the model so that attackers cannot optimize attacks, while also proving that the model is executing correctly and was built by a reasonable training process on a reasonable base dataset.

In general, this is exactly the mindset I advocate in this blog and other articles. But there are two major objections when it comes to AI computation:

- Cryptographic overhead: Performing a task inside a SNARK (or MPC, etc.) is much less efficient than executing it in plaintext. Given that AI is already very computationally demanding, is it feasible to run AI computations inside cryptographic black boxes?

- Black-box adversarial machine learning: There are ways to optimize attacks against AI models even without knowing much about the model's internal workings. And if you hide too much, you risk making it too easy for whoever chooses the training data to corrupt the model through poisoning attacks.

Both of these are complex rabbit holes that require in-depth exploration.

Cryptographic Overhead

Cryptographic tools, especially general-purpose tools like ZK-SNARK and MPC, incur high overhead. Client-side verification of Ethereum blocks takes several hundred milliseconds, but generating a ZK-SNARK to prove the correctness of such blocks may take several hours. The overhead of other cryptographic tools (e.g., MPC) may be even greater.

AI computation itself is already very expensive: the most powerful language models output words only slightly faster than humans can read them, not to mention that training these models typically costs millions of dollars in computation. There is a significant quality difference between top models and those that try to save on training costs or parameter count. At first glance, this is a good reason to be skeptical of the whole project of adding guarantees to AI by wrapping it in cryptography.

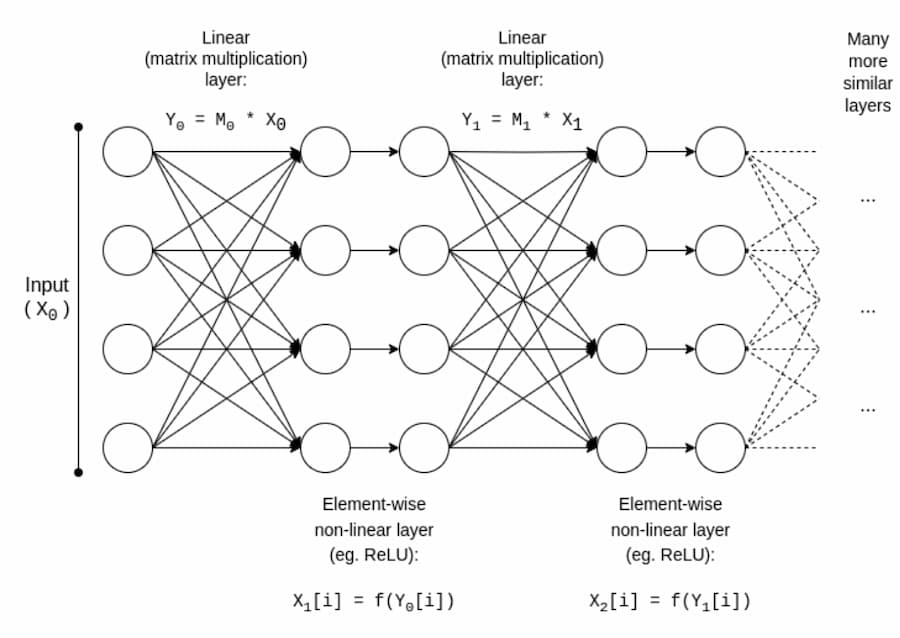

However, fortunately, AI is a very special type of computation, which allows for various optimizations that more "unstructured" computation types like ZK-EVM cannot benefit from. Let's take a look at the basic structure of AI models:

Typically, AI models consist mainly of a series of matrix multiplications, interspersed with per-element non-linear operations such as the ReLU function (y = max(x, 0)). Asymptotically, matrix multiplication accounts for most of the work. This is convenient for cryptography, as many forms of cryptography can perform linear operations almost "for free" (at least if you encrypt the model but not its inputs).
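The structure described above can be written down in a few lines. The sketch below is a plain-Python forward pass for a small fully connected network, plus a helper that counts how much work falls in the linear versus the non-linear part; the layer widths used in the note below are arbitrary illustrative numbers.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Layer = Tuple[Matrix, List[float]]  # (weights, bias)


def matvec(W: Matrix, x: Sequence[float]) -> List[float]:
    """Linear part: one multiply-add per weight."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v: Sequence[float]) -> List[float]:
    """Non-linear part: y = max(x, 0), applied to each element."""
    return [max(a, 0.0) for a in v]


def forward(layers: Sequence[Layer], x: Sequence[float]) -> List[float]:
    """Alternate matrix multiplications with ReLU (no ReLU after the last layer)."""
    out = list(x)
    for i, (W, b) in enumerate(layers):
        out = [z + bi for z, bi in zip(matvec(W, out), b)]
        if i < len(layers) - 1:
            out = relu(out)
    return out


def op_counts(widths: Sequence[int]) -> Tuple[int, int]:
    """Return (multiplications in linear layers, ReLU evaluations)."""
    linear = sum(a * b for a, b in zip(widths, widths[1:]))
    nonlinear = sum(widths[1:-1])
    return linear, nonlinear
```

For widths `[512, 512, 512, 10]`, `op_counts` gives 529,408 multiplications against 1,024 ReLU evaluations. The linear work grows with the square of the layer width while the non-linear work grows only linearly, which is the asymmetry the cryptographic optimizations exploit.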

If you are a cryptographer, you may have heard of a similar phenomenon in homomorphic encryption: performing additions on ciphertexts is very easy, but multiplications were very difficult, and it was not until 2009 that we found a way to perform multiplications of unlimited depth.
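To illustrate how cheap linear operations on encrypted data can be, here is a toy additively homomorphic scheme in the style of Paillier. The choice of Paillier is an assumption made for illustration (the article names no scheme), the primes are far too small to be secure, and `pow(x, -1, n)` needs Python 3.8 or later. Multiplying ciphertexts adds plaintexts, and raising a ciphertext to a public power multiplies its plaintext by that constant, so a dot product between encrypted weights and plaintext inputs needs no decryption.

```python
import math
import secrets
from typing import List, Tuple


def keygen(p: int = 1009, q: int = 1013) -> Tuple[int, Tuple[int, int]]:
    """Toy key generation with tiny, insecure demo primes."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because the generator is g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Enc(m) = (1 + m*n) * r^n mod n^2 for a random r coprime to n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return ((1 + m * n) % n2) * pow(r, n, n2) % n2


def decrypt(n: int, priv: Tuple[int, int], c: int) -> int:
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n


def encrypted_dot(n: int, enc_weights: List[int], x: List[int]) -> int:
    """Ciphertext of sum(w_i * x_i), given encrypted w_i and plaintext x_i >= 0."""
    n2 = n * n
    acc = 1  # 1 is a valid (trivial) encryption of 0
    for c, xi in zip(enc_weights, x):
        acc = acc * pow(c, xi, n2) % n2
    return acc
```

For example, encrypting the weights `[3, 5, 2]`, calling `encrypted_dot` with inputs `[4, 1, 7]`, and decrypting the result yields 31. Multiplying two encrypted values together is not possible in this scheme, which is the gap that fully homomorphic encryption later closed.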

For ZK-SNARKs, the equivalent is protocols like one from 2013, which have less than 4x overhead for proving matrix multiplications. Unfortunately, the overhead of non-linear layers is still significant, with the best practical implementations showing an overhead of about 200x.
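For intuition on why checking a matrix multiplication can be much cheaper than redoing it, consider Freivalds' algorithm, a classical randomized check. To be clear, this is not the 2013 protocol mentioned above and it is not a SNARK (it is neither succinct nor zero-knowledge); it only illustrates the kind of algebraic shortcut that matrix products allow. The function names are illustrative.

```python
import random
from typing import List

IntMatrix = List[List[int]]


def matvec(M: IntMatrix, v: List[int]) -> List[int]:
    return [sum(a * b for a, b in zip(row, v)) for row in M]


def freivalds(A: IntMatrix, B: IntMatrix, C: IntMatrix,
              rounds: int = 20, rng: random.Random = None) -> bool:
    """Probabilistically check that A @ B == C without computing A @ B.

    Each round multiplies by a random 0/1 vector r and compares A(Br) with Cr,
    using only matrix-vector products. A wrong C survives a single round with
    probability at most 1/2, so it survives `rounds` rounds with probability
    at most 2**-rounds.
    """
    rng = rng or random.Random()
    cols = len(B[0])
    for _ in range(rounds):
        r = [rng.randint(0, 1) for _ in range(cols)]
        if matvec(A, matvec(B, r)) != matvec(C, r):
            return False
    return True
```

For n-by-n matrices each round costs on the order of n^2 operations, versus n^3 for naive recomputation, so the verifier does far less work than the prover who computed the product.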

However, through further research, there is hope for significantly reducing this overhead. You can refer to Ryan Cao's demonstration, which introduces a new method based on GKR, and my own simplified explanation of the main components of GKR.

For many applications, we not only want to prove that an AI output was computed correctly, but also want to hide the model. There are some naive ways to do this: you can split up the model so that a different set of servers redundantly stores each layer, and hope that some of the servers leaking some of the layers does not leak too much data. But there are also surprisingly effective forms of specialized multi-party computation.
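One simple way to see how a linear layer can be evaluated without any single server holding the weights is additive secret sharing. This is a generic textbook technique used here as an assumption, not the specific protocols the article alludes to, and it only covers the linear part; the modulus `P` and the function names are illustrative choices.

```python
import secrets
from typing import List

P = 2**61 - 1  # a Mersenne prime used as the modulus for shares

IntMatrix = List[List[int]]


def share_matrix(W: IntMatrix, n_servers: int = 3) -> List[IntMatrix]:
    """Split integer matrix W into random shares that sum to W modulo P."""
    shares = [[[secrets.randbelow(P) for _ in row] for row in W]
              for _ in range(n_servers - 1)]
    last = [[(w - sum(s[i][j] for s in shares)) % P
             for j, w in enumerate(row)]
            for i, row in enumerate(W)]
    return shares + [last]


def server_matvec(W_share: IntMatrix, x: List[int]) -> List[int]:
    """Each server multiplies its own share by the (public) input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) % P for row in W_share]


def combine(partials: List[List[int]]) -> List[int]:
    """Add the servers' partial results and map back to signed integers."""
    total = [sum(col) % P for col in zip(*partials)]
    return [t - P if t > P // 2 else t for t in total]
```

Because matrix-vector multiplication is linear, the sum of the servers' partial results equals `W @ x`, while any subset of fewer than all servers sees only uniformly random matrices. Non-linear layers cannot be handled this simply, which is consistent with them being the bottleneck.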

In both cases, the moral of the story is the same: the main part of AI computation is matrix multiplication, and very efficient ZK-SNARKs, MPCs (or even FHE) can be designed for matrix multiplication, making the overall overhead of putting AI into a cryptographic framework unexpectedly low. Non-linear layers are typically the biggest bottleneck, despite their smaller size. Perhaps new technologies like lookup arguments can provide assistance.

Black Box Adversarial Machine Learning

Now, let's discuss another important issue: even if the content of the model remains private and you can only access the model through an "API," there are still types of attacks that can be carried out. Quoting a paper from 2016:

Many machine learning models are susceptible to adversarial examples: specially crafted inputs that cause machine learning models to produce incorrect outputs. Adversarial examples that affect one model often affect another model, even if the two models have different architectures or are trained on different datasets, as long as both models are trained to perform the same task. Therefore, attackers can train their own substitute model, craft adversarial examples against the substitute model, and transfer them to the victim model with little information about the victim.

Potentially, you can even create attacks knowing only the training data, even if you have very limited or no access to the model you want to attack. As of 2023, these types of attacks are still a major problem.

To effectively curb such black box attacks, we need to do two things:

- Truly limit who or what can query the model and the number of queries. A black box with unrestricted API access is insecure; a black box with very limited API access may be secure.

- Ensure the integrity of the training data creation process while hiding the training data.

Regarding the former, the project that has done the most in this regard is likely Worldcoin, an early version of which (along with other protocols) I have analyzed at length. Worldcoin uses AI models extensively at the protocol level to (i) convert iris scans into short "iris codes" that are easy to compare for similarity, and (ii) verify that the scanned object is actually human.

The main defense that Worldcoin relies on is not allowing anyone to simply call the AI model: instead, it uses trusted hardware to ensure that the model only accepts input digitally signed by the orb camera.
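The two defenses discussed here, authenticated inputs and a hard cap on queries, can be sketched as a thin gateway in front of the model. This is not Worldcoin's implementation: `ModelGateway` and `sign` are hypothetical, and an HMAC with a per-device shared key stands in for the hardware-backed digital signature a real system would use.

```python
import hashlib
import hmac
from collections import defaultdict
from typing import Callable, Dict


def sign(key: bytes, payload: bytes) -> bytes:
    """Stand-in for a device signature (HMAC-SHA256 with a shared key)."""
    return hmac.new(key, payload, hashlib.sha256).digest()


class ModelGateway:
    """Only forwards authenticated inputs, and only up to a per-device quota."""

    def __init__(self, model: Callable[[bytes], object],
                 device_keys: Dict[str, bytes], max_queries: int):
        self._model = model
        self._keys = device_keys
        self._max = max_queries
        self._used = defaultdict(int)

    def query(self, device_id: str, payload: bytes, tag: bytes):
        key = self._keys.get(device_id)
        if key is None:
            raise PermissionError("unknown device")
        # Constant-time comparison avoids leaking tag bytes through timing.
        if not hmac.compare_digest(sign(key, payload), tag):
            raise PermissionError("input not signed by a trusted device")
        if self._used[device_id] >= self._max:
            raise PermissionError("query quota exhausted")
        self._used[device_id] += 1
        return self._model(payload)
```

An attacker who cannot produce valid tags cannot probe the model at all, and even a compromised device gets only `max_queries` attempts. That makes it much harder to optimize an adversarial input by trial and error, though it does nothing against physical attacks on what the device sees.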

This approach is not guaranteed to work: it turns out that adversarial attacks on biometric recognition AI can be carried out with physical patches or jewelry worn on the face.

Wearing something extra on the forehead can evade detection or impersonate someone. Source: https://arxiv.org/pdf/2109.09320.pdf

But our hope is that if all defense measures are combined, including hiding the AI model itself, strictly limiting the number of queries, and requiring each query to be authenticated in some way, adversarial attacks will become very difficult, making the system more secure.

This leads to the second question: how do we hide the training data? This is where "DAOs democratically governing AI" can actually make sense: we can create an on-chain DAO that governs who is allowed to submit training data (and what attestations are required on the data itself), who is allowed to make queries and how many, and use cryptographic techniques like MPC to encrypt the entire pipeline of creating and running the AI, from each individual user's training input to the final output of each query. This DAO could also fulfill the widely popular goal of compensating those who submit data.

It needs to be reiterated that this plan is very ambitious, and there are many ways in which it could prove impractical:

- For this completely black-box architecture, the cryptographic overhead may still be too high to compete with traditional closed "trust me" approaches.

- It may turn out that there is no good way to decentralize the training data submission process while still preventing poisoning attacks.

- Multi-party computation setups may lose their security or privacy guarantees if participants collude: after all, this has happened repeatedly with cross-chain bridges.

One reason I did not open this section with more big red warning labels saying "don't build AI judges, that's dystopian" is that our society is already heavily reliant on unaccountable centralized AI judges: the algorithms that decide which kinds of posts and political views are boosted, suppressed, or even censored on social media.

I do believe that further expanding this trend at this stage is a pretty bad idea, but I don't think the blockchain community experimenting more with AI is the main reason things are getting worse.

In fact, there are some very basic, low-risk ways in which cryptographic technology can improve even existing centralized systems, and I am quite confident in them. One simple technique is verifiable AI with delayed release: when a social media site uses AI-based post ranking, it can publish a ZK-SNARK proving the hash of the model that generated the ranking. The site can commit to publicly releasing its AI model after a certain delay (e.g., one year).

Once the model is released, users can check the hash to verify if the correct model was released, and the community can test the model to verify its fairness. The delayed release will ensure that the model is outdated by the time it is released.
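The hash-checking step is the simplest piece to sketch. The code below covers only the commitment and the later check, assuming the model is serialized to bytes and committed with SHA-256; the ZK-SNARK that ties each day's ranking to the committed hash is out of scope, and the function names are hypothetical.

```python
import hashlib
import hmac


def commit_to_model(model_bytes: bytes) -> str:
    """Published immediately: a SHA-256 commitment to the serialized model."""
    return hashlib.sha256(model_bytes).hexdigest()


def verify_release(commitment: str, released_bytes: bytes) -> bool:
    """Run by anyone after the delay: does the released model match the commitment?"""
    return hmac.compare_digest(commitment, commit_to_model(released_bytes))


# Illustrative flow with placeholder bytes standing in for real weights.
weights_2024 = b"\x00\x01serialized-ranking-model-weights"
published_hash = commit_to_model(weights_2024)
```

A year later, `verify_release(published_hash, weights_2024)` returns `True`, while any substituted or retouched model fails the check. Only after that check passes does it make sense for the community to test the released model for fairness.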

So, the question is not whether we can do better than the centralized world, but how much better. However, caution is needed in the decentralized world: if someone builds a prediction market or stablecoin that uses an AI oracle, and someone then finds that the oracle can be attacked, a large amount of funds could disappear in an instant.

AI as a Game Goal

If the technology used above to create scalable decentralized private AI (whose content is a black box unknown to anyone) can actually work, it can also be used to create AI with practicality beyond blockchain. The NEAR Protocol team is making this a core goal of their work.

There are two reasons for doing this:

- If a "trusted black-box AI" can be created by running the training and inference processes through some combination of blockchain and multi-party computation, many users concerned about biased or deceptive applications of the system can benefit. Many have expressed a desire for democratic governance of the AI we rely on; cryptographic and blockchain-based technologies may be a way to achieve this goal.

- From an AI safety perspective, this would be a way to create decentralized AI that has a natural emergency stop switch and that can limit queries attempting to use the AI for malicious behavior.

It is worth noting that "using cryptographic incentives to encourage the creation of better AI" can be achieved without going all the way down the rabbit hole of using cryptography to encrypt everything: approaches like BitTensor fall into this category.

Conclusion

As blockchains and AI both become more powerful, the number of use cases at the intersection of the two fields is growing, although some of these use cases are much more meaningful and robust than others.

Overall, use cases where the underlying mechanism remains designed roughly as before, but the individual participants become AIs, allowing the mechanism to operate effectively at a much more micro scale, are the most immediately promising and the easiest to get right.

The most challenging are those trying to create "singletons" using blockchain and cryptographic technologies: decentralized trusted AI that certain applications rely on for a specific purpose.

These applications have the potential to improve both functionality and AI safety while avoiding centralization risks.

However, the underlying assumptions could also fail in many ways. Therefore, caution is needed, especially when deploying these applications in high-value and high-risk environments.

I look forward to seeing more constructive attempts at AI use cases in all of these areas, so we can see which use cases are truly feasible at scale.
