Source: Synced

Image Source: Generated by Wujie AI

LeCun said that the vast majority of academic peers strongly support open AI development, but there are still opponents.

Opinions on AI risk are also divided among the field's leading figures. Some took the lead in signing an open letter calling on AI labs to immediately pause research. Deep learning pioneers such as Geoffrey Hinton and Yoshua Bengio support this view.

Just in the past few days, Bengio, Hinton, and others issued another open letter, "Managing AI Risks in an Era of Rapid Progress," calling for urgent governance measures to be adopted before AI systems are developed, for safety and ethical practices to be prioritized, and for governments to act to manage the risks of AI.

The letter lists several urgent governance measures, such as involving national institutions to prevent the misuse of AI. To regulate effectively, governments need a thorough understanding of how AI is developing. Regulators should require model registration, protect whistleblowers effectively, and monitor model development and supercomputer usage. They also need access to advanced AI systems before deployment so that they can assess dangerous capabilities.

Going back a bit further, in May of this year the U.S. non-profit Center for AI Safety issued a statement warning that AI should be treated as a risk to humanity comparable to a pandemic. Supporters of the statement include Hinton, Bengio, and others.

Also in May of this year, Hinton resigned from Google so that he could speak freely about the risks of AI. In an interview with The New York Times, he said, "Most people think this (AI harm) is still far away. I used to think it was far away, maybe 30 to 50 years or even longer. But obviously, I don't think so now."

Clearly, for AI pioneers like Hinton, managing the risks of AI is an urgent matter.

However, Yann LeCun, one of the three giants of deep learning, holds a very optimistic attitude towards AI development. He is basically opposed to signing joint letters about AI risks, believing that the development of artificial intelligence does not yet pose a threat to humanity.

Just now, in an exchange with a user on X, LeCun responded to questions about AI risks.

The user asked for LeCun's views on the article "‘This is his climate change’: The experts helping Rishi Sunak seal his legacy." According to the article, the open letters from Hinton, Bengio, and others have shifted public perception of AI from a helpful assistant to a potential threat. It adds that in recent months, observers in the UK have noticed an increasingly apocalyptic mood around AI. In March of this year, the UK government released a white paper promising not to stifle innovation in AI. Just two months later, however, the UK began discussing guardrails for AI and urged the U.S. to accept its plan for global AI rules.

Article link: https://www.telegraph.co.uk/business/2023/09/23/artificial-intelligence-safety-summit-sunak-ai-experts/

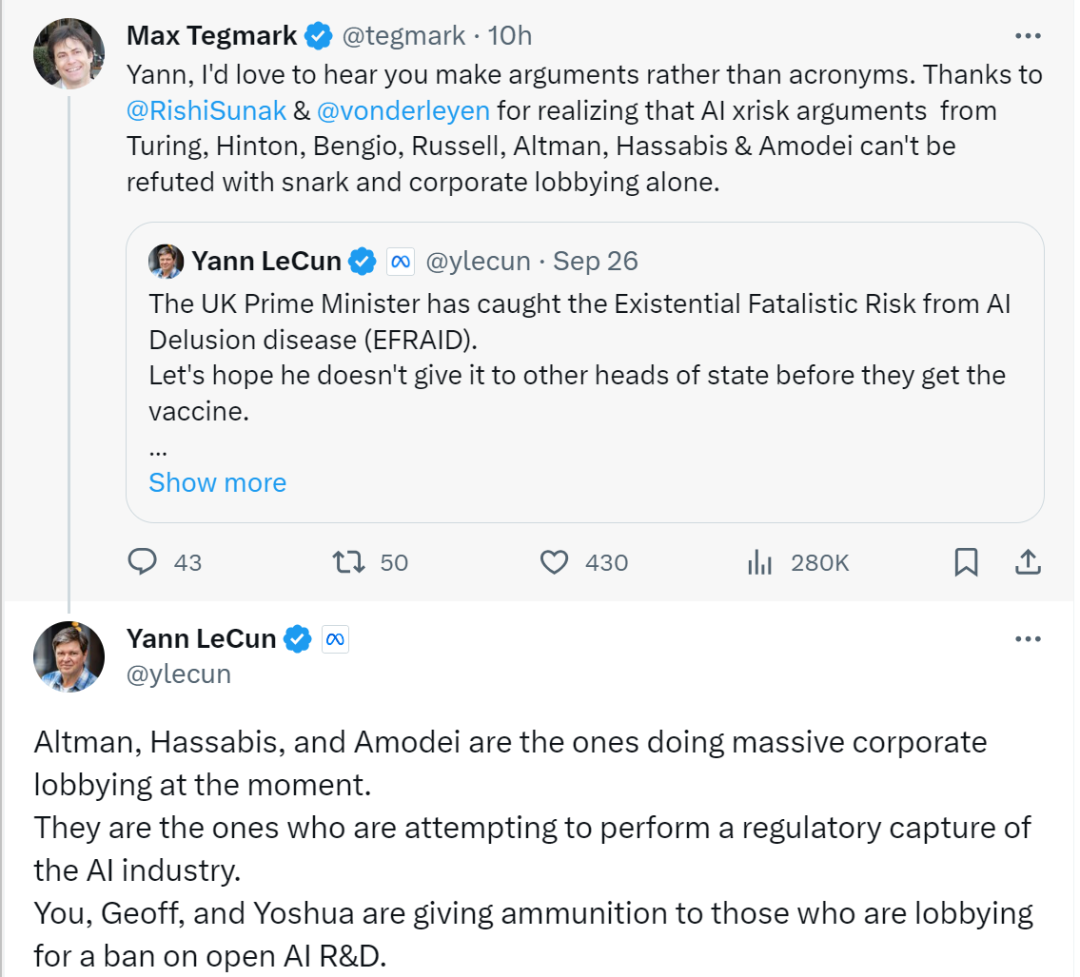

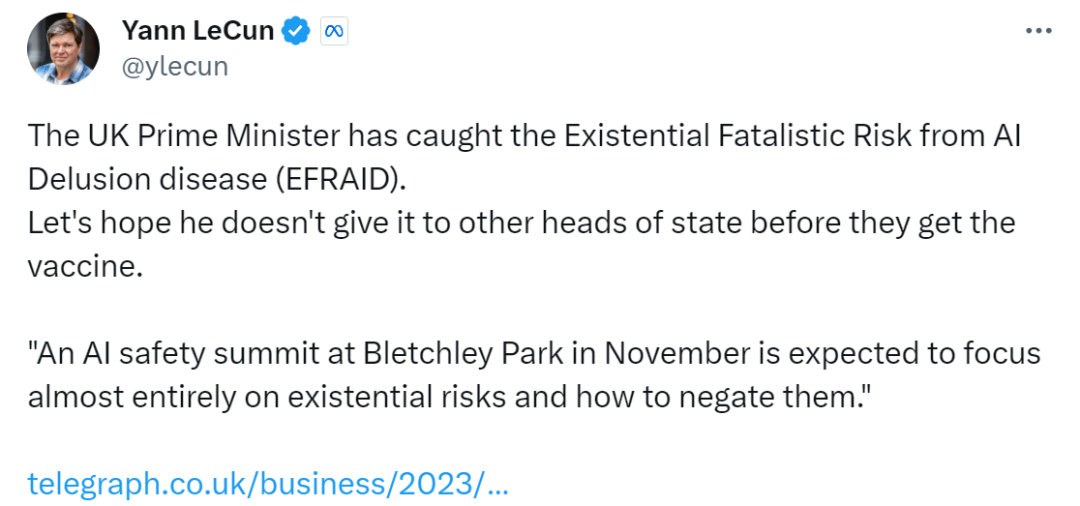

LeCun's reaction to this article was that he did not want the UK's concerns about the existential risks of AI to spread to other countries.

This led to the aforementioned exchange between LeCun and the X user. Below is LeCun's full response:

"Altman, Hassabis, and Amodei are engaged in large-scale corporate lobbying. They are attempting regulatory capture of the AI industry. And you, Geoff, and Yoshua are providing 'ammunition' to those who lobby to ban open AI research.

If your fear-mongering campaign is successful, it will inevitably lead to what you and I both consider a disaster: a few companies will control AI.

The vast majority of academic peers strongly support open AI development. Few believe in the doomsday scenario you are advocating. You, Yoshua, Geoff, and Stuart are the only exceptions.

Like many others, I strongly support open AI platforms because I believe in the combination of various forces: human creativity, democracy, market forces, and product regulations. I also know that it is possible to produce AI systems that are safe and under our control. I have made specific proposals for this. All of this will encourage people to do the right thing.

You write as if AI simply happens on its own, like something beyond our control. But that's not the case. It progresses because of individual people whom you and I know. We and they are all capable of building 'the right things.' Demanding regulation of R&D (as opposed to product deployment) implicitly assumes that these people and the organizations they work for are incompetent, reckless, self-destructive, or evil. But that's not the case.

I have presented many arguments to show that the doomsday scenario you fear is absurd. I won't go into that again here. But the main point is that if powerful AI systems are driven by goals (including guardrails), then they will be safe and controllable because we set those guardrails and goals. (Current autoregressive LLMs are not goal-driven, so we shouldn't extrapolate from the weaknesses of autoregressive LLMs.)

As for open source, your campaign will have the opposite effect of what you are pursuing. In the future, AI systems will become a repository of all human knowledge and culture, and what we need is an open and free platform so that everyone can contribute to it. Openness is the only way for AI platforms to reflect all human knowledge and culture. This requires contributions to these platforms to be crowdsourced, similar to Wikipedia. Unless the platform is open, this won't work.

If open-source AI platforms are regulated, the alternative scenario will inevitably occur: a few companies will control AI platforms, and through them people's entire digital diet. What does this mean for democracy? What does this mean for cultural diversity? This is what keeps me awake at night."

In the replies to LeCun's lengthy post, many people voiced support for his views.

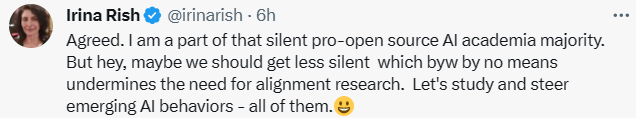

Irina Rish, a professor in the Department of Computer Science and Operations Research at the University of Montreal and a core member of Mila - Quebec AI Research Institute, suggested that researchers who support open-source AI should no longer remain silent and should lead the development of emerging AI.

Amazon founder Jeff Bezos also commented in the discussion, saying that comments like these are important and are what people need.

One commenter said that discussing safety issues can initially enrich everyone's imagination about future technology, but that sensational science fiction should not lead to monopolistic policies.

Podcast host Lex Fridman has high hopes for this debate.

The debate over AI risk will also shape the future development of AI. When a single viewpoint dominates, people tend to follow it blindly. Only through continued rational discussion can we see the "true face" of AI.
