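Original title: [DappLearning] Vitalik Buterin Chinese-Language Interview

Original author: DappLearning

On April 7, 2025, Vitalik and Hsiao-Wei (小薇) appeared together at the Pop-X HK Research House event, co-hosted by DappLearning, ETHDimsum, Panta Rhei, and UETH.

During a break in the event, Yan, founder of the DappLearning community, interviewed Vitalik. The interview covered Ethereum PoS, Layer 2, cryptography, AI, and other topics. The conversation was conducted in Chinese, which Vitalik spoke fluently.

The interview follows (the original has been edited and condensed for readability):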

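01 Views on the PoS Upgrade

Yan:

Hello Vitalik, I'm Yan from the DappLearning community. It's a great honor to interview you here.

I started following Ethereum in 2017. I remember that in 2018 and 2019 there was fierce debate over PoW versus PoS, and that debate will probably continue.

Looking at it now, (ETH) PoS has been running stably for more than four years, with over a million validators in the consensus network. At the same time, the ETH/BTC exchange rate has fallen steadily. So there are positives here, and also some challenges.

So, standing at this point in time, how do you view Ethereum's PoS upgrade?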

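Vitalik:

I think the prices of BTC and ETH have nothing at all to do with PoW and PoS.

There are many different voices within the BTC and ETH communities, and the two communities do completely different things and think in completely different ways.

On the ETH price, I think there is one issue: ETH has many possible futures, and in (many of) those futures there are lots of successful applications on Ethereum, but those successful applications may not bring enough value to ETH.

This is something many people in the community worry about, but it is actually quite normal. Take Google: the company builds many products and does many interesting things, yet more than 90% of its revenue still comes from its Search-related business.

The relationship between Ethereum ecosystem applications and ETH (the price) is similar. Some applications pay a lot in transaction fees and consume a lot of ETH, while many others may be quite successful without contributing a proportionate amount to ETH's success.

So this is a problem we need to think about and keep optimizing. We need to give more support to applications that have long-term value for Ethereum holders and for ETH.

So I think ETH's future success may come from these areas. I don't think it has much to do with improvements to the consensus algorithm.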

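02 The PBS Architecture and Centralization Concerns

Yan:

Right. The prosperity of the ETH ecosystem is also an important reason why we developers are willing to build on it.

OK, so how do you see the PBS (Proposer-Builder Separation) architecture of ETH 2.0? It is a good direction: in the future anyone could use a phone as a light node to verify (ZK) proofs, and anyone could stake 1 ether to become a validator.

But builders may become more centralized. A builder has to handle MEV resistance and also generate ZK proofs, and if based rollups are adopted, builders may have even more to do, such as acting as sequencers.

In that case, won't builders be too centralized? Validators may be sufficiently decentralized, but this is a chain, and a failure in any one link affects the operation of the whole system. How do we solve the censorship-resistance problem here?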

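Vitalik:

Yes, I think this is a very important philosophical question.

In the early days of Bitcoin and Ethereum there was what you could call a subconscious assumption:

Building a block and verifying a block are the same operation.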

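Suppose you are building a block. If your block contains 100 transactions, your own node has to run that much gas (for the 100 transactions). Once you have built the block and broadcast it to the world, every node in the world has to do the same amount of work (spend the same gas). So if we set the gas limit so that every laptop or MacBook, or a server of some given size, can build blocks, then nodes with a comparable configuration are needed to verify those blocks.

That was the earlier technology. Now we have ZK, DAS, and many other new technologies, as well as statelessness (stateless verification).

Before these technologies, block building and block verification had to be symmetric. Now they can become asymmetric: building a block may become very hard, while verifying a block may become very easy.

Take stateless clients as an example. If we adopt statelessness and raise the gas limit tenfold, the computing power needed to build a block becomes enormous, and an ordinary computer may no longer be able to do it. You might need a particularly high-performance Mac Studio or an even more powerful server.

But the cost of verification becomes lower, because verification needs essentially no storage and depends only on bandwidth and CPU. Add ZK, and the CPU cost of verification can be removed as well. Add DAS on top, and the cost of verification becomes very, very low. So the cost of building a block goes up, while the cost of verifying one goes down a lot.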
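
The asymmetry described above can be illustrated with a toy Merkle witness. This is a minimal sketch, not Ethereum's actual state format: it assumes a plain binary SHA-256 tree with a power-of-two number of leaves, and the names `build_tree`, `make_witness`, and `verify` are hypothetical. The builder needs the whole state to produce a witness, while the verifier needs only the root, one leaf, and a few sibling hashes.

```python
import hashlib
from typing import List


def h(data: bytes) -> bytes:
    """SHA-256 digest, used for both leaves and inner nodes in this toy tree."""
    return hashlib.sha256(data).digest()


def build_tree(leaves: List[bytes]) -> List[List[bytes]]:
    """Builder side: requires every leaf. Returns all levels, leaf hashes first."""
    assert leaves and len(leaves) & (len(leaves) - 1) == 0, "need a power-of-two leaf count"
    levels = [[h(leaf) for leaf in leaves]]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        levels.append([h(prev[i] + prev[i + 1]) for i in range(0, len(prev), 2)])
    return levels


def make_witness(levels: List[List[bytes]], index: int) -> List[bytes]:
    """Builder side: collect the sibling hash at each level on the path to the root."""
    witness = []
    for level in levels[:-1]:  # the root level has no sibling
        witness.append(level[index ^ 1])
        index //= 2
    return witness


def verify(root: bytes, leaf: bytes, index: int, witness: List[bytes]) -> bool:
    """Verifier side: no state stored, only hashing along one path."""
    node = h(leaf)
    for sibling in witness:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


# Hypothetical 8-account state held only by the builder.
state = [f"account-{i}".encode() for i in range(8)]
levels = build_tree(state)
root = levels[-1][0]
witness = make_witness(levels, 5)

print(verify(root, state[5], 5, witness))   # genuine leaf
print(verify(root, b"forged", 5, witness))  # tampered leaf
```

The witness grows with the logarithm of the state size (three hashes for eight leaves here), which is why the verifier's cost stays small even if the builder's state becomes very large.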

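So is this better than the current situation?

The question is fairly complicated. The way I think about it is this: suppose the Ethereum network has some super nodes, that is, some nodes with more computing power that we need for high-performance computation.

How do we stop them from doing harm? There are several kinds of attack to consider:

First: mounting a 51% attack.

Second: a censorship attack. If they refuse to include some users' transactions, how do we reduce that risk?

Third: MEV-related behavior. How can we reduce those risks?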

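On the 51% attack: verification is done by attesters, so attester nodes need to verify DAS, ZK proofs, and stateless clients. The cost of that verification is very low, so the barrier to running a consensus node stays fairly low.

Suppose some super nodes build blocks, and it turns out that 90% of those nodes are you, 5% are him, and 5% are other people. If you flatly refuse to include certain transactions, it is actually not such a terrible thing. Why? Because you have no way of interfering with the consensus process as a whole.

So you cannot carry out a 51% attack. The only thing you can do is censor certain users' transactions.

Those users may just need to wait ten or twenty blocks for someone else to include their transaction in a block. That is the first point.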
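
The "ten or twenty blocks" estimate is consistent with simple arithmetic. A rough sketch, assuming each block is independently built by the censoring builder with probability equal to its 90% share (a simplifying assumption, not a model from the interview; the function names are hypothetical):

```python
def prob_still_excluded(censor_share: float, blocks: int) -> float:
    """Chance that all of the next `blocks` blocks come from the censoring builder."""
    return censor_share ** blocks


def expected_wait_blocks(censor_share: float) -> float:
    """Mean number of blocks until a non-censoring builder wins one (geometric distribution)."""
    return 1.0 / (1.0 - censor_share)


share = 0.90  # the 90% builder share used in the example above
wait = expected_wait_blocks(share)
after_10 = prob_still_excluded(share, 10)
after_20 = prob_still_excluded(share, 20)

print(f"expected wait: {wait:.1f} blocks")
print(f"still excluded after 10 blocks: {after_10:.1%}")
print(f"still excluded after 20 blocks: {after_20:.1%}")
```

Under these assumptions the average wait is about ten blocks, and roughly 12% of censored transactions would still be waiting after twenty.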

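The second point is that we have the concept of FOCIL. What does FOCIL do?

FOCIL separates the role of choosing transactions from the role of execution (executing transactions). That way, the role of choosing which transactions go into the next block can be made more decentralized. With FOCIL, small nodes have the ability to independently choose transactions for inclusion in the next block, whereas if you are a large node, your power here is actually very limited [1].

This approach is more complex than before. We used to imagine every node as a personal laptop. But look at Bitcoin: it is now a fairly hybrid architecture too, because Bitcoin miners all run mining data centers.

So in PoS it works roughly like this: some nodes need more computing power and more resources, but the power of those nodes is limited, while the other nodes can be very dispersed and very decentralized, and so they can guarantee the network's security and decentralization. But this approach is more complex, so it is also a challenge for us.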

Yan:

A very good line of thinking. Centralization is not necessarily a bad thing, as long as we can limit its ability to do harm.

Vitalik:

Right.

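03 Problems Between Layer 1 and Layer 2, and Future Directions

Yan:

Thank you for clearing up something that has puzzled me for years. Let's move on to the second part. As someone who has witnessed Ethereum's journey, I'd say Layer 2 has been very successful. The TPS problem has indeed been solved, unlike the ICO days (when people rushed to get transactions in) and the network was hopelessly congested.

Personally I find today's L2s quite usable. But on the problem of L2 liquidity fragmentation, many people are now proposing all kinds of solutions. How do you see the relationship between Layer 1 and Layer 2? Is Ethereum mainnet currently too laid-back and too decentralized, imposing no constraints at all on Layer 2? Does Layer 1 need to set rules with Layer 2, or establish some revenue-sharing model, or adopt something like based rollups? Justin Drake recently proposed this on Bankless as well, and I tend to agree. What is your view? I'm also curious: if there is already a corresponding plan, when will it go live?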

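Vitalik:

I think our Layer 2s currently have several problems.

The first is that their progress on security is not fast enough. So I have been pushing for all Layer 2s to upgrade to Stage 1, and I hope they can upgrade to Stage 2 this year. I keep urging them to do this, and I keep supporting L2BEAT in doing more transparency work in this area.

The second is L2 interoperability, that is, cross-chain transactions and communication between two L2s. If two L2s belong to the same ecosystem, interoperability needs to be simpler, faster, and cheaper than it is now.

We started this work last year. It is now called the Open Intents Framework, and there are also chain-specific addresses. Most of this is UX work.

In fact I think perhaps 80% of the L2 cross-chain problem is really a UX problem.

Solving UX problems can be painful, but as long as the direction is right, complicated problems can be made simple. That is the direction we are working toward.

Some things require going a step further. For example, the withdrawal time for an optimistic rollup is one week. If you hold a token on Optimism or Arbitrum, moving it to L1 or to another L2 takes a week.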

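You can have market makers wait out that week (and pay them a fee in return). Ordinary users can move from one L2 to another through the Open Intents Framework, Across Protocol, and similar routes, and for small transactions that works. For large transactions, though, market makers' liquidity is still limited, so the fees they require are fairly high. Last week I published a post [2] in which I supported a 2-of-3 verification approach: OP + ZK + TEE.

A 2-of-3 design like that can satisfy three requirements at once.

The first requirement is being fully trustless, with no need for a Security Council. TEE plays a supporting role, so it does not need to be fully trusted either.

Second, we can start using ZK. ZK is still at a fairly early stage, though, so we cannot rely on it completely yet.

Third, we can cut the withdrawal time from one week to one hour.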
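
One way to picture the 2-of-3 idea is as a small decision rule. The sketch below is an illustration under stated assumptions, not the mechanism from the referenced post: it assumes that agreement between the ZK and TEE provers allows the fast one-hour path, that any pair involving the optimistic (OP) prover must wait out the one-week challenge window, and that the function name and boolean inputs are hypothetical.

```python
from typing import Optional

FAST_HOURS = 1        # target withdrawal time quoted in the interview
SLOW_HOURS = 7 * 24   # one-week optimistic challenge window


def finalization_delay_hours(zk_ok: bool, tee_ok: bool, op_ok: bool) -> Optional[int]:
    """Hours until a state root is accepted, or None if fewer than 2 of 3 provers agree.

    Assumption: ZK + TEE agreement is the fast path; OP + (ZK or TEE) is the slow path.
    """
    if zk_ok and tee_ok:
        return FAST_HOURS
    if op_ok and (zk_ok or tee_ok):
        return SLOW_HOURS
    return None


print(finalization_delay_hours(zk_ok=True, tee_ok=True, op_ok=False))   # fast path
print(finalization_delay_hours(zk_ok=True, tee_ok=False, op_ok=True))   # slow path
print(finalization_delay_hours(zk_ok=False, tee_ok=False, op_ok=True))  # not accepted
```

The point of the rule is that no single prover, including the TEE or an immature ZK system, can finalize a state root on its own.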

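Consider what happens if users go through the Open Intents Framework: market makers' liquidity cost falls by a factor of 168, because the time they have to wait (to rebalance) drops from one week to one hour. In the long term, we plan to cut the withdrawal time from one hour to 12 seconds (the current block time), and with SSF it could go down to 4 seconds.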
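
The factor of 168 is simply the number of hours in a week. A small worked check, assuming (as a simplification not stated in the interview) that a market maker's capital cost scales linearly with how long funds are locked; `lockup_ratio` is a hypothetical helper:

```python
from fractions import Fraction

HOUR = 3600              # seconds
WEEK = 7 * 24 * HOUR     # seconds
SLOT = 12                # current block time in seconds, as quoted above
SSF_SLOT = 4             # figure quoted above for SSF


def lockup_ratio(old_seconds: int, new_seconds: int) -> Fraction:
    """How many times shorter the new lockup is than the old one."""
    return Fraction(old_seconds, new_seconds)


week_to_hour = lockup_ratio(WEEK, HOUR)
hour_to_slot = lockup_ratio(HOUR, SLOT)
hour_to_ssf = lockup_ratio(HOUR, SSF_SLOT)

print(week_to_hour, hour_to_slot, hour_to_ssf)
```

Going from one hour to a 12-second slot would shorten the lockup by a further factor of 300, and a 4-second slot by a factor of 900, under the same linear assumption.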

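For now we will also use things like zk-SNARK aggregation to parallelize ZK proving and bring the latency down a bit. Of course, if users do this with ZK, they don't need to go through intents. But if they do go through intents, the cost will be very low. All of this is part of interoperability.

As for the role of L1: early in the L2 roadmap, many people may have thought we could completely copy Bitcoin's roadmap, with L1 used for very little, just proofs (and a small amount of other work), and L2 doing everything else.

But we found that if L1 plays no role at all, that is dangerous for ETH.

As we discussed earlier, one of our biggest worries is that the success of Ethereum applications may not translate into the success of ETH.

If ETH does not succeed, our community will have no money and no way to support the next round of applications. So if L1 plays no role at all, the user experience and the whole architecture will be controlled by L2s and a few applications, and no one will represent ETH. If we can assign L1 a bigger role in some applications, that will be better for ETH.

The next question we need to answer is: what will L1 do, and what will L2 do?

I published a post in February [3] arguing that even in an L2-centric world there are many important things that L1 needs to do. For example, L2s need to post proofs to L1; if an L2 runs into trouble, users need to go through L1 to move to another L2; and then there are keystore wallets, oracle data that can be placed on L1, and so on. Many mechanisms like these depend on L1.

There are also some high-value applications, such as DeFi, that are actually better suited to L1. An important reason is their time horizon (investment period): users have to wait a long time, say one, two, or three years.

This is especially obvious in prediction markets, which sometimes ask questions about, for example, what will happen in 2028.

Here is the problem. If an L2's governance goes wrong, then in theory all of its users can exit: they can move to L1, or they can move to another L2. But if an application on that L2 has its assets locked in long-term smart contracts, users have no way to exit. So a lot of DeFi that is secure in theory is not actually very secure in practice.

For these reasons some applications should still be built on L1, so we have started paying more attention to L1 scalability again.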

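We now have a roadmap with roughly four or five ways to improve L1 scalability in 2026.

The first is delayed execution (separating block validation from execution): in each slot we only validate the block, and it is actually executed in the next slot. The advantage is that the maximum execution time we can tolerate may rise from 200 milliseconds to 3 or 6 seconds, which leaves much more processing time [4].

The second is block-level access lists: each block has to declare, in the block's own data, which accounts' state and which storage slots it needs to read. You could say it is a bit like statelessness without witnesses. The advantage is that EVM execution and I/O can be processed in parallel, which is a relatively simple way to get parallelism.

The third is multidimensional gas pricing [5], which makes it possible to set a maximum capacity for a block. That is important for security.

Another is history handling (EIP-4444): not every node needs to store all information forever. For example, each node could store just 1%, and we can use a p2p approach in which your node stores one part and his node stores another. That way the information is stored in a more distributed manner.

So if we can combine these four proposals, we currently think we may be able to raise the L1 gas limit by 10x. All our applications would then have the chance to rely more on L1 and do more on L1, which is good for L1 and good for ETH too.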
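
For the history-storage item above, a quick calculation shows why storing only 1% per node can still keep data retrievable. This is a rough sketch under a strong assumption that each node independently stores a uniformly random 1% of history (the interview does not specify how pieces would be assigned); `prob_chunk_available` is a hypothetical helper:

```python
def prob_chunk_available(nodes: int, fraction_stored: float) -> float:
    """Probability that at least one of `nodes` nodes holds a given piece of history."""
    return 1.0 - (1.0 - fraction_stored) ** nodes


# Availability of any single piece as the number of participating nodes grows.
for n in (100, 1_000, 10_000):
    print(f"{n:>6} nodes -> {prob_chunk_available(n, 0.01):.6f}")
```

With only a hundred such nodes a given piece is often missing, but with thousands of nodes the chance that nobody holds it becomes very small, which is the intuition behind spreading history across a p2p network.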

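Yan:

OK, next question. Might we see the Pectra upgrade this month?

Vitalik:

Actually we hope to do two things: the Pectra upgrade around the end of this month, and then the Fusaka upgrade in Q3 or Q4.

Yan:

Wow, that soon?

Vitalik:

I hope so.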

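Yan:

My next question is related. As people who have watched Ethereum grow, we know that, to ensure security, Ethereum has roughly five or six clients of each kind (consensus clients and execution clients) under development at the same time, with a great deal of coordination among them, which makes development cycles fairly long.

This has pros and cons. Compared with other L1s it may indeed be slow, but it is also safer.

But what could be done so that we don't have to wait a year and a half for each upgrade? I've seen that you have proposed some ideas. Could you describe them in more detail?

Vitalik:

Yes. One approach is to improve coordination efficiency. We are starting to have more people who can move between different teams and make sure communication between teams is more efficient.

If a client team has a problem, they can raise it and let the research team know. That is actually the advantage of Tomasz becoming one of our new EDs: he comes from the client side (a client team), and now he is also in the EF (team). He can do this coordination. That is the first point.

The second point is that we can be a bit stricter with client teams. Our current practice is that if there are, say, five teams, all five have to be fully ready before we announce the next hard fork (network upgrade). We are now considering starting the upgrade once four teams are done. That way we don't have to wait for the slowest one, and it also gives everyone more motivation.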

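04 Views on Cryptography and AI

Yan:

So some healthy competition is still needed. That's great. We really look forward to every upgrade, just don't make everyone wait too long.

Next I'd like to ask about cryptography. The questions are fairly wide-ranging.

When our community was founded in 2021, it brought together developers from the major exchanges in China and researchers from venture firms to discuss DeFi. 2021 really was a time when everyone was getting involved in understanding, learning, and designing DeFi. It was a wave of mass participation.

Looking at how things developed afterward, learning ZK (Groth16, Plonk, Halo2, and so on) has proven hard for the general public and developers alike: those who come later find it difficult to catch up, and the technology keeps advancing quickly.

Another trend we see now is that zkVMs are developing fast, so the zkEVM direction is not as popular as it used to be. Once zkVMs gradually mature, developers won't really need to pay much attention to the ZK internals.

What are your suggestions and views on this?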

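Vitalik:

I think the best direction for the ZK ecosystem is for most ZK developers to work in a high-level language (HLL). They can write their application code in an HLL, while proof-system researchers keep modifying and optimizing the underlying algorithms. Developers need layering: they shouldn't need to know what happens in the layer below.

One problem right now may be that the Circom and Groth16 ecosystem is very well developed, but that puts a fairly big constraint on ZK applications. Groth16 has many drawbacks, such as every application needing its own trusted setup, and its efficiency is not great either. So we are also thinking that we need to put more resources here and help some modern HLLs succeed.

Another thing is that the ZK RISC-V route is also very good, because that way RISC-V effectively becomes an HLL as well: many applications, including the EVM and others, can be written on top of RISC-V [6].

Yan:

Right, so developers only need to learn Rust, which is great. I attended Devcon in Bangkok last year and heard about developments in applied cryptography, which was also eye-opening for me.

On applied cryptography: how do you see the direction of combining ZKP with MPC and FHE, and what advice would you give developers?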

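Vitalik:

Yes, this is very interesting. I think FHE has good prospects right now, but I have one concern: MPC and FHE always require a committee, meaning you have to select, say, seven or more nodes. If those nodes are compromised, possibly 51% of them, possibly 33%, your system breaks. It is like the system having a Security Council, but actually more serious than a Security Council, because if an L2 is Stage 1, 75% of the Security Council has to be compromised before something goes wrong [7]. That is the first point.

The second point is that if a Security Council is reliable, most of its members keep their keys in cold wallets, so most of them are offline. But in most MPC and FHE systems, the committee has to be online all the time to keep the system running, so they will probably be deployed on a VPS or some other server, which makes them easier to attack.

That worries me a little. I think many applications can still be built: there are advantages, but it is not perfect either.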
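
The threshold comparison in the first point can be made concrete for the seven-node example. This is a minimal sketch that treats each percentage as "at least this share of members"; real protocols differ on whether the bound is strict, and `members_to_compromise` is a hypothetical helper:

```python
import math
from fractions import Fraction


def members_to_compromise(size: int, threshold: Fraction) -> int:
    """Smallest number of members that reaches `threshold` of a group of `size`."""
    return math.ceil(threshold * size)


COMMITTEE_SIZE = 7  # example committee size mentioned above

at_33_percent = members_to_compromise(COMMITTEE_SIZE, Fraction(33, 100))
at_51_percent = members_to_compromise(COMMITTEE_SIZE, Fraction(51, 100))
at_75_percent = members_to_compromise(COMMITTEE_SIZE, Fraction(75, 100))

print(at_33_percent, at_51_percent, at_75_percent)
```

For a group of seven, an attacker would need three or four members at the 33% or 51% thresholds, compared with six at the 75% threshold cited for a Stage 1 Security Council.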

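Yan:

Finally, a more lighthearted question. I see you have been paying attention to AI recently, so let me list a few views.

For example, Elon Musk has said that humans may just be a bootloader for silicon-based civilization.

Then there is the view in The Network State that centralized states may prefer AI while democratic states prefer blockchains.

And from our experience in crypto, decentralization presupposes that everyone follows the rules, checks and balances one another, and knows how to bear risk, which in the end leads back to rule by elites. So what do you make of these views? Just your thoughts are fine.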

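Vitalik:

Yes, I'm thinking about where to start.

AI is a very complicated field. For example, five years ago probably no one would have predicted that the United States would have the world's best closed-source AI while China would have the best open-source AI. AI can raise everyone's abilities across the board, and sometimes it also increases the power of some centralized (states).

But you could also say that AI sometimes has a fairly democratizing effect. When I use AI myself, I find that in areas where I am already in the global top thousand, such as some areas of ZK development, AI actually helps me rather little, and I still have to write most of the code myself. But in areas where I am a novice, AI can help me a great deal. Android app development is an example: I had never really done it. Ten years ago I made an app using a framework, written in JavaScript and then converted into an app, but apart from that I had never written a native Android app.

Early this year I ran an experiment: I wanted to try writing an app with GPT, and it was done within an hour. So the gap between experts and novices has been narrowed a lot with the help of AI, and AI can also open up many new opportunities.

Yan:

One thing to add: thank you for giving me a new perspective. I used to think that with AI, experienced programmers would learn faster while things would get harder for novice programmers. But in some respects it really does raise novices' abilities too. So it may be an equalizer rather than a divider, right?

Vitalik:

Yes. But a very important question that we also need to think about now is what effect the combination of the technologies we are building, including blockchain, AI, cryptography, and others, will have (on society).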

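Yan:

So you still hope humanity will not end up with rule by an elite alone, right? You hope society as a whole can reach a Pareto optimum, with ordinary people becoming super-individuals empowered by AI and blockchain.

Vitalik:

Yes, yes: super-individuals, super-communities, super-humanity.

05 Expectations for the Ethereum Ecosystem and Advice for Developers

Yan:

OK, on to the last question. What are your hopes for, and message to, the developer community? Is there anything you would like to say to developers in the Ethereum community?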

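Vitalik:

Developers of Ethereum applications should think about this.

There are now many opportunities to build applications on Ethereum. Many things that could not be done before can be done now.

There are many reasons for this. For example:

First: L1 TPS used to be completely insufficient, and that problem is now gone;

Second: there used to be no way to solve the privacy problem, and now there is;

Third: because of AI, building anything has become less difficult. Even though the Ethereum ecosystem has grown somewhat more complex, AI can still help everyone understand Ethereum better.

So I think many things that failed in the past, ten years ago or five years ago, may be able to succeed now.

In the current blockchain application ecosystem, I think the biggest problem is that we have two kinds of applications.

The first kind is very open, decentralized, secure, and highly idealistic, but has only 42 users. The second kind is basically a casino. The problem is that both of these extremes are unhealthy.

So what we hope to see are applications that,

first, users actually enjoy using, that is, applications with real value.

Those applications will make the world better.

Second, have a real business model, so that economically they can keep running and do not need to depend on limited money from foundations or other organizations. That is a challenge too.

But I think everyone now has more resources than before. So if you can find a good idea and execute it well, your chances of success are very high.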

Yan:

我们一路看过来,我觉得以太坊其实是挺成功的,一直引领着行业,并且在去中心化的前提下努力地解决着行业碰到的问题。

还有一点深有感触,我们社区一直也是非盈利的, 通过以太坊生态里的 Gitcoin 的 Grant, 还有 OP 的回溯性奖励, 以及其他项目方的空投奖励。 我们发现在以太坊社区 Build 是能拿到很多的支持, 我们也在思考如何让社区能持续稳定运营下去。

以太坊建设真的很激动人心, 我们也希望早日看到世界计算机的真正实现,谢谢您的宝贵时间。

访谈于香港摩星岭

2025年04月07日

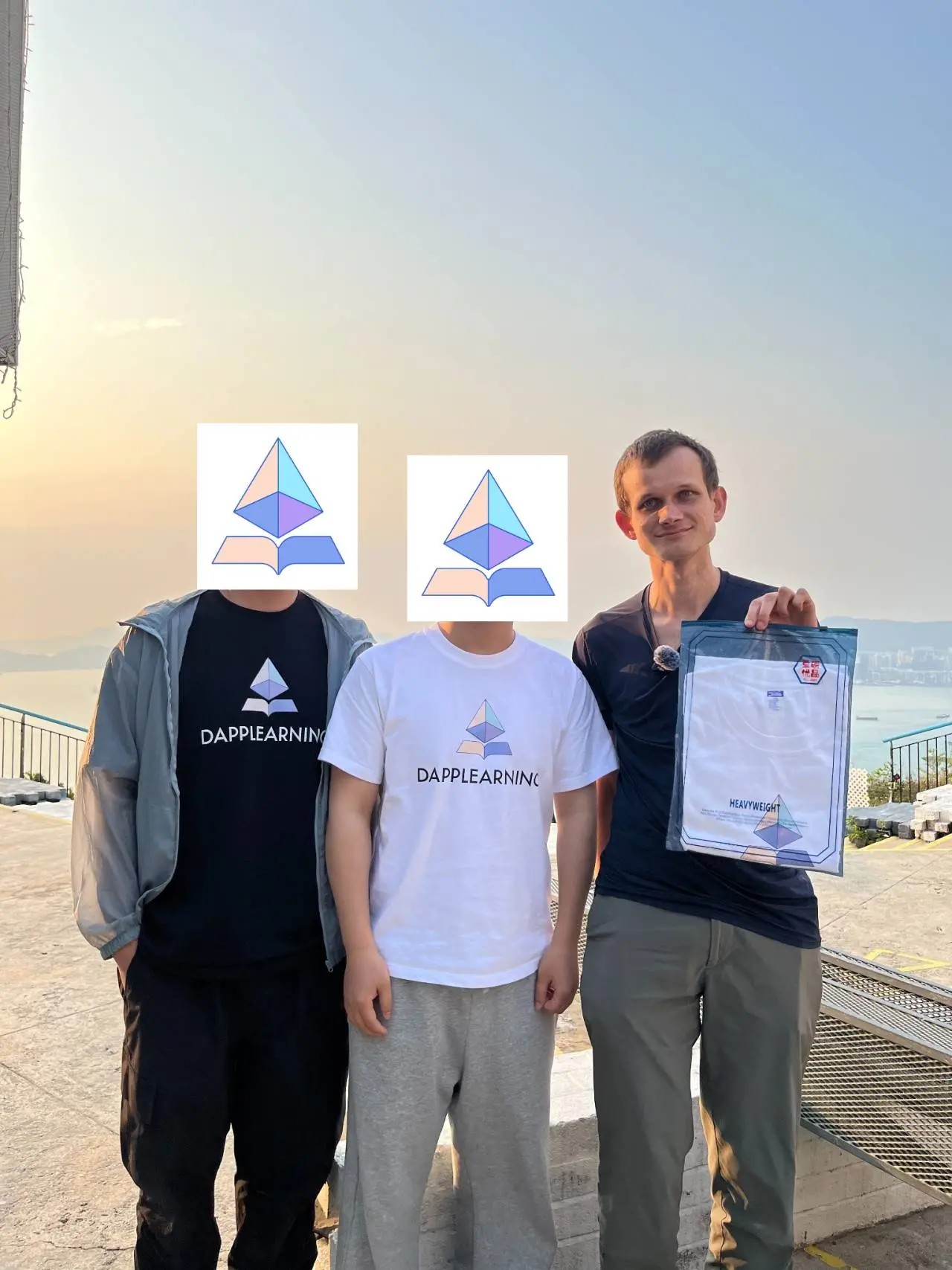

最后附上跟 Vitalik 的合影?

文章内 Vitalik 提到的参考内容小编整理如下:

[1]: https://ethresear.ch/t/fork-choice-enforced-inclusion-lists-focil-a-simple-committee-based-inclusion-list-proposal/19870

[2]: https://ethereum-magicians.org/t/a-simple-l2-security-and-finalization-roadmap/23309

[3]: https://vitalik.eth.limo/general/2025/02/14/l1scaling.html

[4]: https://ethresear.ch/t/delayed-execution-and-skipped-transactions/21677

[5]: https://vitalik.eth.limo/general/2024/05/09/multidim.html

[6]: https://ethereum-magicians.org/t/long-term-l1-execution-layer-proposal-replace-the-evm-with-risc-v/23617

[7]: https://specs.optimism.io/protocol/stage-1.html?highlight=75#stage-1-rollup
