After experiencing the impact of DeepSeek and Manus at the beginning of 2025, major companies are redefining their next strategic steps.

Author: Wan Chen

The excellent writing of DeepSeek-R1, the Ghibli art style of GPT-4o, and the geographical inference capabilities of OpenAI o3…

These are the phenomenal AI products that have been trending over the past two months. You can clearly see that reinforcement learning can finally generalize, and multimodal models are becoming increasingly usable. This also means that 2025 has truly entered the time point for the application and accelerated implementation of Agents.

The previously popular AI Agent—Manus team revealed that by the end of last year, Claude 3.5 Sonnet had reached the level required for Agent capabilities in long-term planning tasks and step-by-step problem-solving, which was the premise for the birth of Manus.

Now, with the further maturation of deep thinking models and multimodal model capabilities, there will certainly be more Agents capable of handling complex tasks.

Based on this judgment, on April 17, ByteDance's cloud and AI service platform "Volcano Engine" launched a stronger model for the enterprise market—Doubao 1.5 · Deep Thinking Model, which is also the first appearance of the reasoning model behind ByteDance's AI application Doubao App. Along with it, the Doubao · Text-to-Image Model 3.0 and an upgraded visual understanding model were also released.

Regarding the models released this time, Tan Dai, president of Volcano Engine, believes that "the deep thinking model is the foundation for building Agents. The model must have the ability to think, plan, and reflect, and it must support multimodality, just like humans have vision and hearing, so that Agents can better handle complex tasks."

When AI evolves to have end-to-end autonomous decision-making and execution capabilities, moving into core production processes, Volcano Engine has also prepared the architecture and tools for Agents to operate in the digital and physical worlds—OS Agent solutions and AI cloud-native inference suites, helping enterprises build and deploy Agent applications faster and more economically.

In Tan Dai's view, developing an Agent is like developing a website or an app; having only the model API cannot completely solve the problem. It requires many cloud-native AI components. In the past, cloud-native had its core definitions, such as containers and elasticity; now, AI cloud-native will also have similar key elements. Through continuous thinking, exploration, and rapid action in AI cloud-native—such as various middleware, evaluation, monitoring, observability, data processing, security assurance, and related components like Sandbox around the model—Volcano Engine is committed to becoming the optimal solution for infrastructure in the AI era.

01 Doubao Deep Thinking Model: Thinking, Searching, and Reasoning Like a Human

Since the release of DeepSeek-R1 at the beginning of the year, many ToC applications have integrated the R1 reasoning model, except for the Doubao App. The "Deep Thinking" mode launched on the Doubao App in early March is backed by ByteDance's self-developed Doubao Deep Thinking Model.

Now, this reasoning model—Doubao 1.5 · Deep Thinking Model is officially released and can be experienced and invoked on the Volcano Ark platform.

In online mode, Doubao can think, search, and then think again, just like humans do when solving problems, ultimately aiming to resolve the issue.

Here’s an example in a shopping scenario: given budget, size, and other constraints, Doubao is asked to recommend a suitable camping gear set.

In this case, Doubao first breaks down the considerations, plans the necessary information, then identifies the missing information and conducts an online search. It searched three rounds, first looking for prices and performance to ensure they meet the budget and needs; it also considered the specific needs of children and finally took the weather into account, searching for relevant detailed reviews. It thought and searched until it obtained all the necessary context for decision-making, providing a reasoning answer.

In addition to thinking while searching, the Doubao Deep Thinking Model also possesses visual reasoning capabilities, allowing it to think based on both text and visual images.

Take the scenario of ordering food, for example. With the upcoming May Day holiday, friends traveling abroad no longer need to take photos and upload them to translation software to translate menus; the Doubao Deep Thinking Model can directly help you order based on images.

In the example below, the Doubao Deep Thinking Model first performed currency conversion to control the budget, then considered the preferences of the elderly and children while carefully avoiding dishes they are allergic to, and directly provided a menu plan.

With online connectivity, thinking, reasoning, and multimodality, the Doubao 1.5 · Deep Thinking Model demonstrates comprehensive reasoning capabilities, able to solve more complex problems.

According to the technical report, the Doubao 1.5 · Deep Thinking Model has a high completion rate in reasoning tasks in professional fields, such as achieving scores in the AIME 2024 math reasoning test that match OpenAI o3-mini-high, with programming competition and scientific reasoning test scores also close to o1. In general tasks like creative writing and humanities knowledge Q&A, the model also shows excellent generalization capabilities, suitable for a wider range of use cases.

The Doubao Deep Thinking Model also features low latency; its technical report indicates that the model uses a MoE architecture with a total of 200B parameters, of which only 20B are activated, achieving results comparable to top models with fewer parameters. Based on efficient algorithms and high-performance inference systems, the Doubao model API service ensures low latency of up to 20 milliseconds while supporting high concurrency.

At the same time, it has multimodal capabilities, allowing the deep thinking model to be applied in various scenarios. For example, it can understand complex enterprise project management flowcharts, quickly locate key information, and, with strong instruction-following capabilities, strictly adhere to the flowchart to answer customer questions; when analyzing aerial images, it can assess the feasibility of regional development based on geographical features.

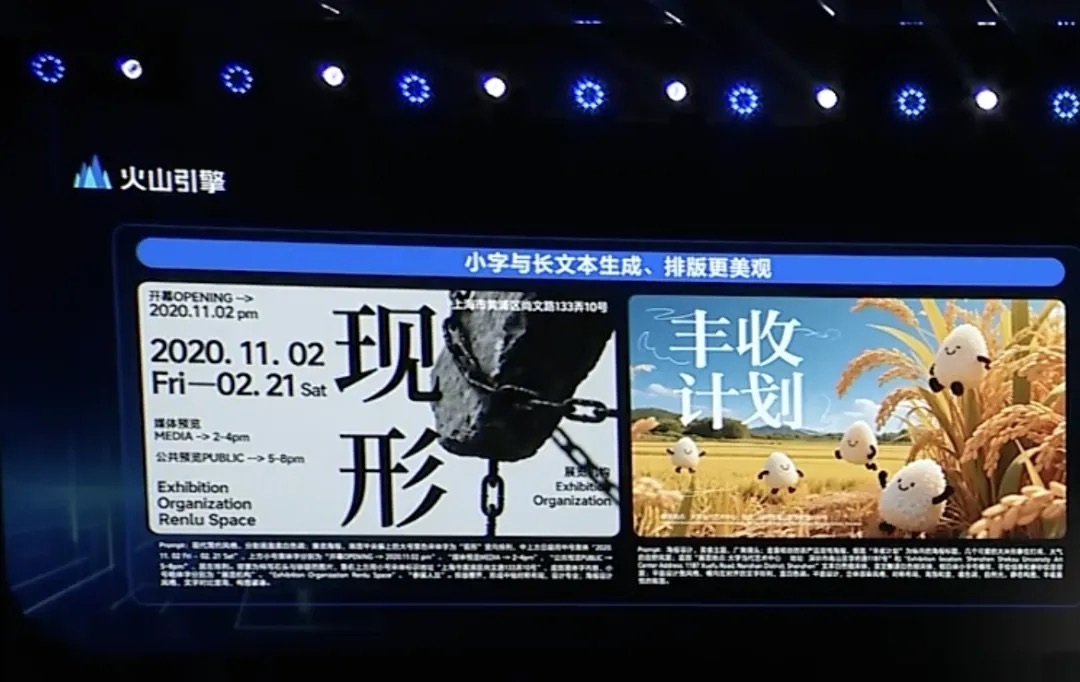

In addition to the reasoning model, the Doubao large model family also introduced updates for two models. In the text-to-image model aspect, Doubao launched the latest 3.0 upgraded version, which can achieve better text layout performance, real-life level image generation effects, and 2K high-definition image generation.

The new version not only effectively solves the challenges of generating small text and long text but also improves image layout. For instance, the two posters generated on the far left, "Transformation" and "Harvest Plan," have finely detailed generation and natural layout, ready for immediate use.

Another upgrade is the Doubao 1.5 visual understanding model. The new version has two key updates: more accurate visual localization and smarter video understanding.

In terms of visual localization, the Doubao 1.5 visual understanding model supports bounding box localization and point localization for multiple targets, small targets, and general targets, as well as counting, describing localized content, and 3D localization. The enhancement of visual localization capabilities allows the model to further expand application scenarios, such as inspection scenarios in offline stores, GUI agents, robot training, and autonomous driving training.

In video understanding capabilities, the model has also seen significant improvements, such as memory capabilities, summarization understanding, speed perception, and long video comprehension. Enterprises can create more engaging commercial applications based on video understanding; for example, in home scenarios, we can use video understanding capabilities combined with vector search to perform semantic searches on home surveillance videos.

In the example below, a cat owner wants to understand the cat's daily activities. Now, simply searching "What did the kitten do at home today?" can quickly return semantically relevant video clips for the user to view.

With the reasoning model equipped with visual understanding and a large reserve of reasoning capabilities, many previously impossible tasks can now be achieved, unlocking more scenarios. For instance, cameras with such functionality will certainly be more popular, and AI glasses, AI toys, smart cameras, and locks will also have new development space.

02 Cloud: Entering the Agentic AI Era

Recently, OpenAI researcher Yao Shunyu (core author of Deep Research and Operator) pointed out in an article titled "The Second Half of AI" that as reinforcement learning has finally found a path to generalization, it is not only effective in specific fields, such as defeating human chess players with AlphaGo, but can also achieve near-human competitive levels in software engineering, creative writing, IMO-level mathematics, mouse and keyboard operations, and more. In this context, competing for leaderboard scores and achieving higher scores on more complex leaderboards will become easier, but this evaluation method is already outdated.

What is now being competed is the ability to define problems. In other words, what problems should AI solve in real life?

In 2025, the answer is productivity Agents. Currently, AI application scenarios are rapidly entering the Agentic AI era, where AI can gradually complete complete tasks that require high professionalism and are time-consuming. In this situation, Volcano Engine has also built a series of infrastructures for enterprises to "define their own general Agents."

Among them, the most important is the model, which can autonomously plan, reflect, and make end-to-end autonomous decisions and executions, moving into core production processes. At the same time, it also requires multimodal reasoning capabilities, allowing it to complete tasks in the real world using ears, mouths, and eyes together.

Beyond the model, the Infra technology stack also needs to continuously evolve. For example, as the MoE architecture shows more efficient advantages, it is gradually becoming the mainstream architecture for models, which in turn requires more complex and flexible cloud computing architectures and tools to schedule and adapt to MoE models.

Now, in the scenario of enterprise general Agents, Volcano Engine has launched better architectures and tools—the OS Agent solution, supporting large models to operate in the digital and physical worlds. For instance, an Agent can operate a browser to search for product pages, perform price comparisons for iPhones, or even edit videos and add music remotely using editing software.

Currently, the Volcano Engine OS Agent solution includes the Doubao UI-TARS model, as well as veFaaS function services, cloud servers, cloud phones, and other products, enabling operations on code, browsers, computers, phones, and other Agents. Among them, the Doubao UI-TARS model integrates screen visual understanding, logical reasoning, interface element localization, and operations, breaking through the limitations of traditional automation tools that rely on preset rules, providing a model foundation for intelligent interactions of Agents that is closer to human operations.

In the general Agent scenario, Volcano Engine allows enterprises, individuals, or specific fields to define and explore Agents based on their needs through this OS Agent solution.

In the vertical Agent space, Volcano Engine will explore based on its own advantageous fields, such as the previously launched "Smart Programming Assistant Trae" and the data product "Data Agent," the latter of which maximizes data processing capabilities by building a data flywheel.

On the other hand, as Agents penetrate deeper, it will also lead to a larger consumption of model reasoning. In response to large-scale reasoning demands, Volcano Engine has specifically developed the AI cloud-native ServingKit inference suite, enabling faster model deployment and lower reasoning costs, with GPU consumption reduced by 80% compared to traditional solutions.

In Tan Dai's view, to meet the demands of the AI era, Volcano Engine will continue to focus on three areas: continuously optimizing models to maintain competitiveness; constantly reducing costs, including expenses, latency, and improving throughput; and making products easier to implement, such as tools like Koudai and HiAgent aimed at developers, as well as cloud-native components like OS Agent. By maintaining product and technology leadership, market share will also lead. Previously, IDC released the "Analysis of the Market Landscape of China's Public Cloud Large Model Services, 1Q25," showing that Volcano Engine ranks first with a market share of 46.4%.

In December last year, the daily token call volume of the Doubao large model was 40 trillion. By the end of March this year, this number had exceeded 12.7 trillion, achieving over 106 times rapid growth in less than a year since the Doubao large model was first released. In the future, with the further maturation of deep thinking models, visual reasoning, and optimization of AI cloud infrastructure, Agents will drive even greater token call volumes.

免责声明:本文章仅代表作者个人观点,不代表本平台的立场和观点。本文章仅供信息分享,不构成对任何人的任何投资建议。用户与作者之间的任何争议,与本平台无关。如网页中刊载的文章或图片涉及侵权,请提供相关的权利证明和身份证明发送邮件到support@aicoin.com,本平台相关工作人员将会进行核查。