Allora aims to achieve a self-improving decentralized AI infrastructure and supports projects that wish to safely integrate AI into their services.

Author: Tranks, DeSpread

Disclaimer: The content of this report reflects the views of the respective authors and is for reference only. It does not constitute advice to buy or sell tokens or use protocols. Nothing in this report constitutes investment advice and should not be understood as such.

1. Introduction

Since the emergence of generative artificial intelligence represented by ChatGPT, AI technology has rapidly developed, and corporate participation and investment in the AI industry have continuously increased. Recently, AI has not only excelled in generating specific outputs but has also performed outstandingly in large-scale data processing, pattern recognition, statistical analysis, and predictive modeling, leading to an expanding range of applications across various industries.

JP Morgan: Hired over 600 ML engineers to develop and test over 400 use cases of AI technology, including algorithmic trading, fraud prediction, and cash flow forecasting.

Walmart: Analyzed seasonal and regional sales history to predict product demand and optimize inventory.

Ford Motor: Analyzed vehicle sensor data to predict part failures and notify customers, preventing accidents caused by part failures.

Recently, the trend of combining blockchain ecosystems with AI has become increasingly evident, particularly in the DeFAI field, which combines DeFi protocols with AI.

Moreover, there are more and more cases of directly integrating AI into protocol operating mechanisms, making risk prediction and management of DeFi protocols more efficient and introducing new types of financial product services that were previously unattainable.

Further reading: "As AI Narratives Heat Up, How Can DeFi Benefit?"

However, because training on large datasets and specialized AI expertise create high barriers to entry, building AI models dedicated to specific functions is currently dominated by a handful of large enterprises and AI experts.

As a result, other industries and small startups face significant difficulties in adopting AI, and blockchain ecosystem dApps face the same limitations. Since dApps must maintain the "trustless" core value that does not require a trusted third party, a decentralized AI infrastructure is necessary to allow more protocols to adopt AI and provide services that users can trust.

Against this backdrop, Allora aims to achieve a self-improving decentralized AI infrastructure and supports projects that wish to safely integrate AI into their services.

2. Allora, Decentralized Inference Synthesis Network

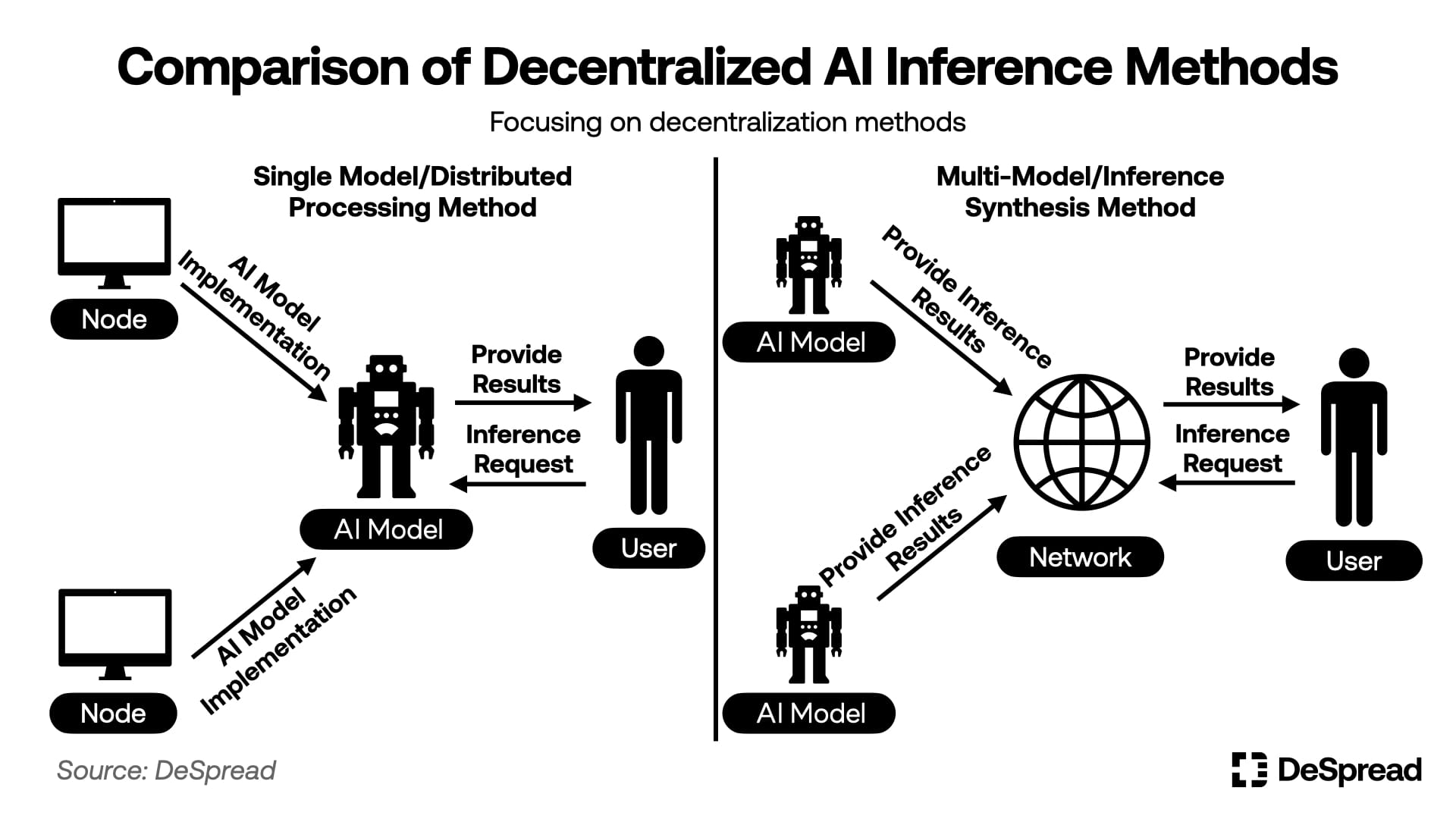

Allora is a decentralized inference network that predicts and provides future values for specific topics required by different entities. There are two main methods for implementing decentralized AI inference:

Single Model/Decentralized Processing: Conducting model training and inference processes in a decentralized manner to establish a decentralized single AI model.

Multi-Model/Inference Synthesis: Collecting inference results from multiple pre-trained AI models and synthesizing them into a single inference result.

Of these two methods, Allora adopts the multi-model/inference synthesis approach, allowing AI model operators to freely join the Allora network and perform inferences in response to prediction requests on specific topics. The protocol then answers the requester with a single prediction synthesized from the inference values these operators submit.

When synthesizing the inference values of AI models, Allora does not simply calculate the average of the inferences obtained from each model but derives the final inference value by assigning weights to each model. Subsequently, Allora compares the inference values obtained from each model with the actual results of the topic and executes self-improvement by giving higher weights and rewards to models whose inference values are closer to the actual results, thereby improving the accuracy of the inferences.
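The weighting and self-improvement loop described above can be illustrated with a minimal Python sketch. The function names, the sample values, and in particular the exponential error-to-weight rule are assumptions made for illustration; the report does not specify Allora's actual formula.

```python
import math

def synthesize(inferences, weights):
    """Weighted average of worker inferences (weights need not sum to 1)."""
    total = sum(weights[w] for w in inferences)
    return sum(inferences[w] * weights[w] for w in inferences) / total

def update_weights(inferences, actual, scale=1.0):
    """Hypothetical rule: a model's weight decays exponentially with its absolute error."""
    raw = {w: math.exp(-abs(v - actual) / scale) for w, v in inferences.items()}
    norm = sum(raw.values())
    return {w: r / norm for w, r in raw.items()}

# Round 1: no track record yet, so every model starts with the same weight.
round_1 = {"model_a": 101.0, "model_b": 98.0, "model_c": 110.0}
weights = {w: 1 / 3 for w in round_1}
first = synthesize(round_1, weights)  # equal weights give a plain average, about 103

# The actual value turns out to be 100: models closer to it gain weight.
weights = update_weights(round_1, actual=100.0)

# Round 2: the synthesized value now leans toward the models that were accurate.
round_2 = {"model_a": 105.5, "model_b": 104.0, "model_c": 95.0}
second = synthesize(round_2, weights)
```

In this toy run, model_c missed the first result by a wide margin, so its round 2 inference barely moves the synthesized value.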

Through this method, Allora can perform more specialized, topic-specific inferences than an AI built with the single model/decentralized processing method. To encourage more AI models to participate in the protocol, Allora provides Allora MDK (Model Development Kit), an open-source architecture that helps anyone build and deploy AI models easily.

Additionally, Allora offers two SDKs for users who wish to utilize Allora inference data: Allora Network Python and TypeScript SDK. These SDKs provide users with an environment to easily integrate and use the data provided by Allora.

Allora's goal is to become an intermediary layer connecting AI models and protocols that need inference data, providing opportunities for AI model operators to generate revenue while building a bias-free data infrastructure for services and protocols.

Next, we will explore Allora's communication protocol architecture to further understand how Allora operates and its features.

2.1. Communication Protocol Architecture

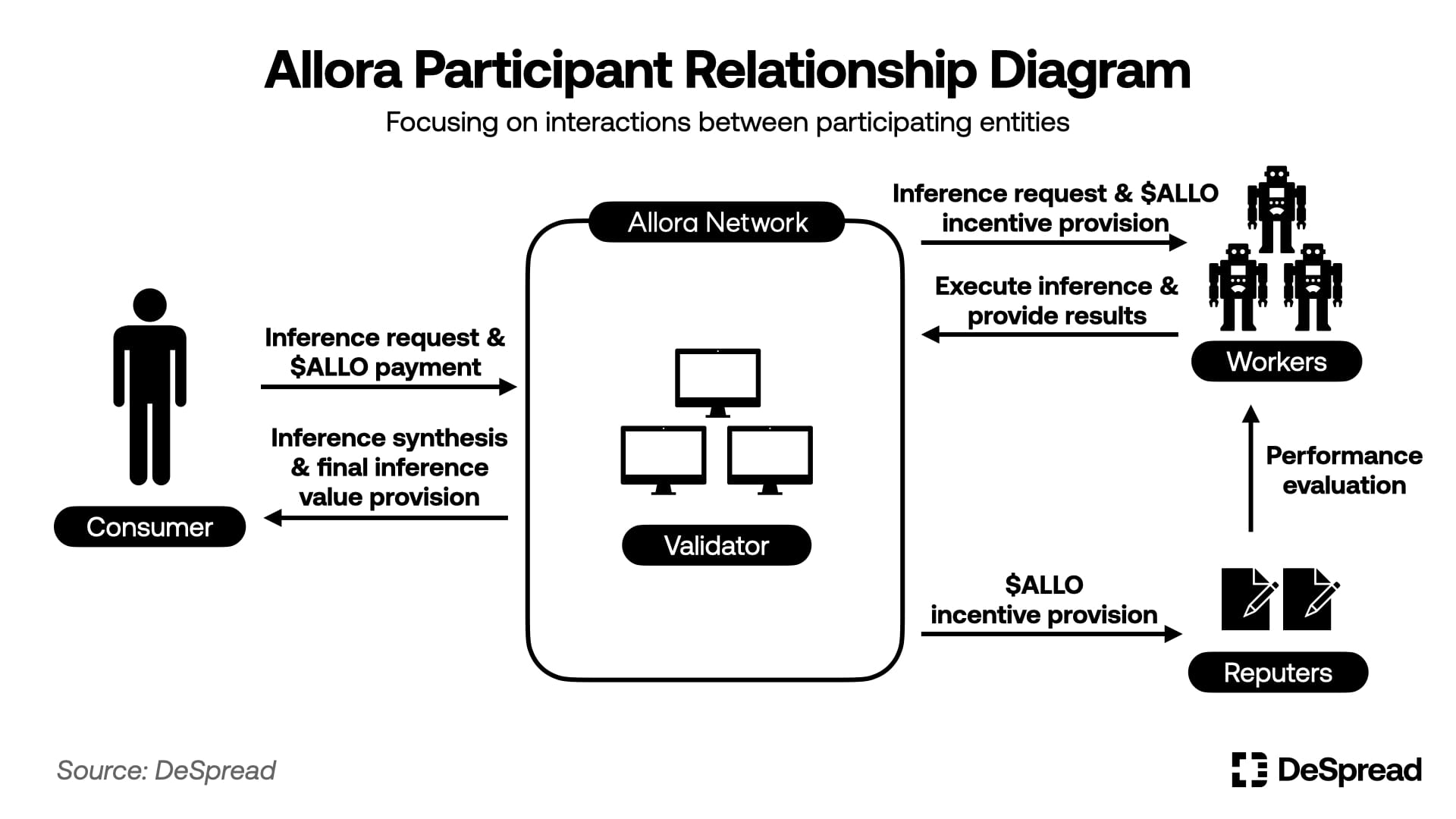

In Allora, anyone can set up and deploy a topic. Four types of participants are involved in executing inferences for a topic and producing its final inference value:

Consumers: Pay to request inferences on specific topics.

Workers: Operate AI models using their databases and execute inferences on specific topics requested by consumers.

Reputers: Evaluate workers' inferences by comparing them with actual values.

Validators: Operate Allora network nodes, processing and recording transactions generated by each participant.

The structure of the Allora network is divided into inference executors, evaluators, and validators, centered around the network token $ALLO. $ALLO is used as the fee for inference requests and rewards for inference execution, connecting network participants while ensuring security through staking.

Next, we will examine the interactions between the various participants based on the functions of each layer, including the inference consumption layer, inference synthesis layer, and consensus layer.

2.1.1. Inference Consumption Layer

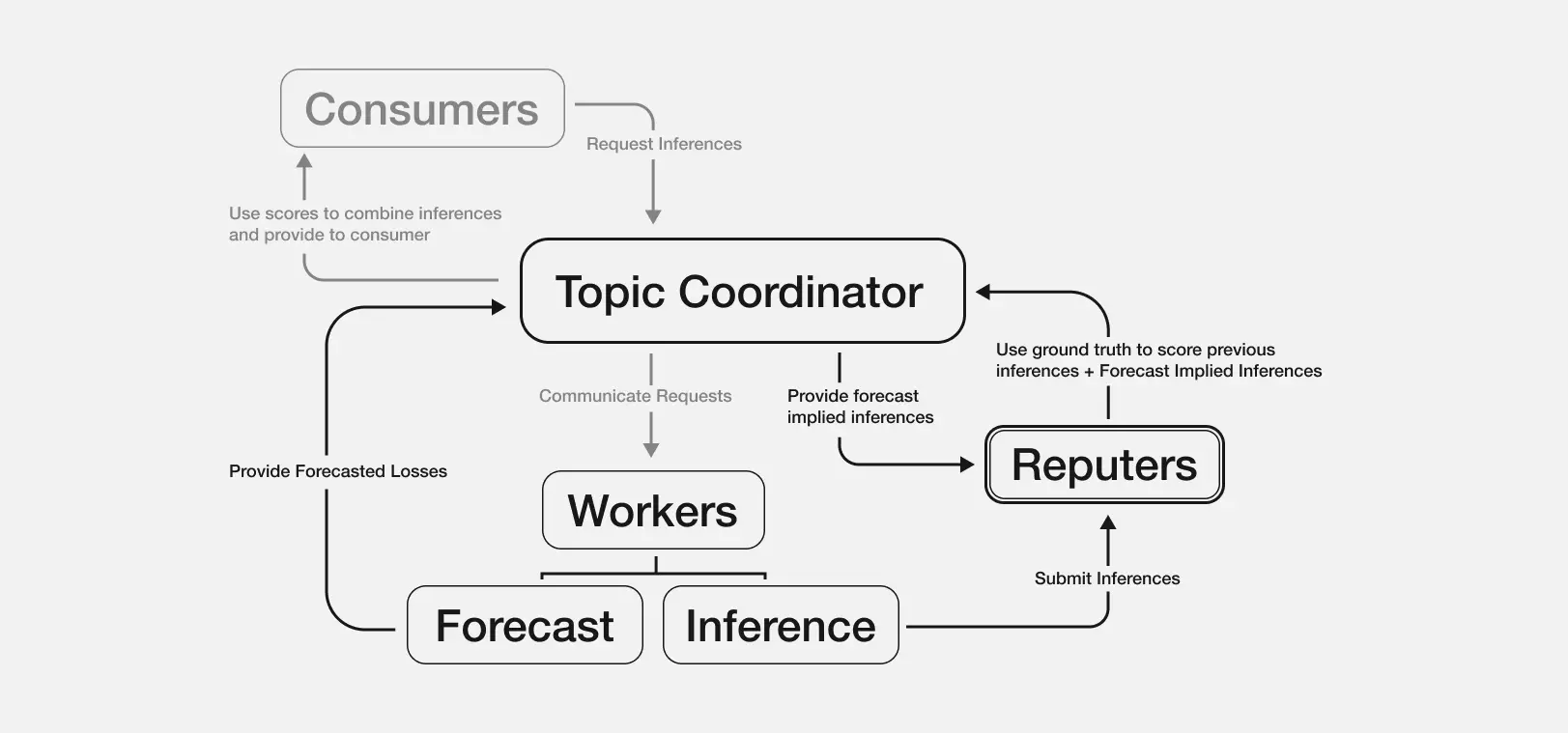

The inference consumption layer handles the interactions between protocol participants and Allora, including topic creation, participant management, and inference requests.

Users wishing to establish a topic can interact with Allora's Topic and Inference Management System (Topic Coordinator) by paying a certain amount of $ALLO and setting rules to define the content they wish to infer, how to verify actual results, and how to evaluate the inference values obtained by workers.

Once a topic is established, workers and Reputers can pay a registration fee in $ALLO to register as inference participants for that topic. Reputers must additionally stake a certain amount of $ALLO in that topic, and this stake can be slashed if they submit malicious results.

When the topic is established and workers and Reputers are registered, consumers can pay $ALLO to the topic to request inferences, and workers and Reputers will receive these topic request fees as rewards for their inferences.
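The topic lifecycle described in this section (creation with rules, paid registration, Reputer staking, and paid inference requests) can be modeled roughly as follows. This is a simplified, off-chain sketch: the `Topic` class, its field names, and the fee amounts are hypothetical and do not correspond to Allora's actual on-chain interface.

```python
from dataclasses import dataclass, field

@dataclass
class Topic:
    """Hypothetical in-memory model of a topic; all amounts are in $ALLO."""
    name: str
    registration_fee: float
    min_reputer_stake: float
    workers: set = field(default_factory=set)
    reputer_stakes: dict = field(default_factory=dict)
    fee_pool: float = 0.0  # request fees later paid out as rewards

    def register_worker(self, worker, payment):
        if payment < self.registration_fee:
            raise ValueError("registration fee not covered")
        self.workers.add(worker)

    def register_reputer(self, reputer, payment, stake):
        if payment < self.registration_fee:
            raise ValueError("registration fee not covered")
        if stake < self.min_reputer_stake:
            raise ValueError("stake below the topic minimum")
        self.reputer_stakes[reputer] = stake  # this stake is what slashing would target

    def request_inference(self, fee):
        if not self.workers or not self.reputer_stakes:
            raise RuntimeError("topic has no registered workers or Reputers")
        self.fee_pool += fee

topic = Topic(name="BTC price in 1h", registration_fee=1.0, min_reputer_stake=100.0)
topic.register_worker("worker_1", payment=1.0)
topic.register_reputer("reputer_1", payment=1.0, stake=250.0)
topic.request_inference(fee=5.0)
```

Keeping the Reputer stake on the topic itself mirrors the point made above: a Reputer's exposure is tied to the specific topic it evaluates.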

2.1.2. Inference and Synthesis Layer

The inference and synthesis layer is the core layer used by Allora to generate decentralized inferences, where workers execute inferences, Reputers evaluate performance, and weights and inference synthesis are determined based on these evaluations.

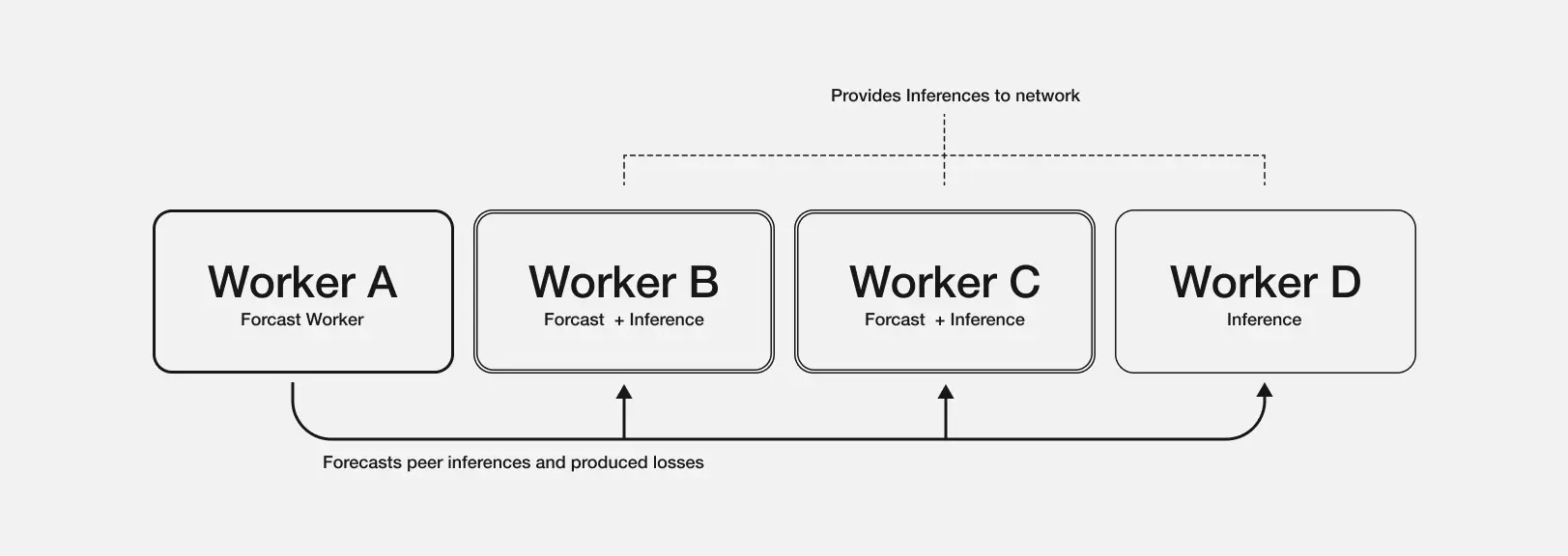

Workers in the Allora network not only submit inference values for the topics requested by consumers but also evaluate the accuracy of other workers' inferences and derive "Forecasted Losses" from these evaluations. These forecasted losses are reflected in the weight calculations used for inference synthesis, and a worker earns higher rewards when its own inference is accurate and it also accurately predicts the accuracy of other workers' inferences. Through this structure, Allora can derive inference synthesis weights that take the current situation into account rather than relying only on workers' past performance.

Workers' inference accuracy prediction for context awareness

Source: Allora Docs

For example, in the topic of predicting Bitcoin's price one hour later, we assume the following situations for workers A and B:

Worker A: Average inference accuracy of up to 90%, but accuracy decreases in unstable market conditions.

Worker B: Average inference accuracy of 80%, but maintains relatively high accuracy even in volatile market conditions.

If the current market is highly volatile, and multiple workers predict "Worker B has an advantage in volatile conditions, with only about 5% error in this prediction," while simultaneously predicting "Worker A is expected to have about 15% error in this volatile situation," Allora will still assign a higher weight to Worker B's inference in this prediction, despite Worker B's lower average historical performance.
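The Worker A and Worker B example can be worked through numerically. In the sketch below, the individual peer forecasts and the inverse-error weighting rule are assumptions chosen for illustration; the report only states that forecasted losses feed into the weights, not how.

```python
from statistics import mean

def normalize(scores):
    """Scale scores so they sum to 1."""
    total = sum(scores.values())
    return {k: v / total for k, v in scores.items()}

# Long-run average accuracy from the example above.
historical_accuracy = {"A": 0.90, "B": 0.80}

# Hypothetical forecasts by other workers of each worker's error in the
# current, highly volatile market (about 15% for A and 5% for B).
forecasted_errors = {
    "A": [0.14, 0.15, 0.16],
    "B": [0.04, 0.05, 0.06],
}

# Weighting by history alone favors Worker A (about 0.53 versus 0.47).
history_weights = normalize(historical_accuracy)

# Assumed rule: weight is inversely proportional to the mean forecasted error,
# which gives roughly 0.25 for A and 0.75 for B.
context_weights = normalize(
    {w: 1 / mean(errs) for w, errs in forecasted_errors.items()}
)
```

The ranking flips once the forecasts for the current conditions are taken into account, which is the behavior the example describes.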

The topic coordinator uses the final weights derived from this process to synthesize the inferences and provides the final inference value to consumers, along with confidence intervals calculated from the distribution of the inference values submitted by workers. Reputers then compare the actual results with the submitted inference values to evaluate both each worker's inference performance and how accurately each worker forecasted the other workers' inferences. Worker weights are then adjusted according to the Reputers' consensus, weighted by stake.
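One way to picture a stake-based consensus among Reputers is a stake-weighted average of the losses they report for each worker. The sketch below assumes exactly that; the function name, the stakes, and the reported losses are hypothetical, and Allora's actual consensus rule may differ.

```python
def stake_weighted_loss(reports, stakes):
    """Average the loss each Reputer reports per worker, weighted by Reputer stake.

    reports: {reputer: {worker: loss}}
    stakes:  {reputer: staked $ALLO}
    """
    total_stake = sum(stakes[r] for r in reports)
    workers = next(iter(reports.values())).keys()
    return {
        w: sum(reports[r][w] * stakes[r] for r in reports) / total_stake
        for w in workers
    }

stakes = {"rep1": 600.0, "rep2": 300.0, "rep3": 100.0}
reports = {
    "rep1": {"A": 0.15, "B": 0.05},
    "rep2": {"A": 0.16, "B": 0.05},
    "rep3": {"A": 0.05, "B": 0.30},  # dissenting report backed by little stake
}

consensus = stake_weighted_loss(reports, stakes)
```

Because rep3 holds only a tenth of the total stake, its dissenting report shifts the consensus without overturning it: Worker B still ends up with the lower consensus loss.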

This is how Allora synthesizes and evaluates inferences. In particular, its "context awareness" structure, in which each worker assesses the accuracy of other workers' inferences, lets Allora derive inference values optimized for the situation at hand and thereby improves inference accuracy. Furthermore, as data on workers' inference performance accumulates, the context awareness function becomes more effective, strengthening Allora's ability to self-improve its inferences.

Allora's inference synthesis process

Source: Allora Docs

2.1.3. Consensus Layer

The consensus layer of Allora is where topic weight calculations, network reward distribution, and the recording of participant activity take place. It is built with the Cosmos SDK and uses the CometBFT and DPoS consensus mechanisms.

Users can participate in the Allora network as validators by staking $ALLO tokens and operating nodes, collecting the transaction fees paid by Allora participants as rewards for operating the network and keeping it secure. Users who do not operate nodes can still earn these rewards indirectly by delegating their $ALLO to validators.

Additionally, Allora distributes newly unlocked $ALLO to network participants as rewards: 75% goes to the workers and Reputers participating in topic inferences, and the remaining 25% goes to validators. The amount unlocked follows a schedule that gradually halves, and these inflationary rewards cease once all $ALLO has been issued.

When 75% of the inflationary rewards are distributed to workers and Reputers, the distribution ratio depends not only on the performance of workers and the staking of Reputers but also on the topic weight. The topic weight is calculated based on the staking amounts and fee income of Reputers participating in that topic, incentivizing workers and Reputers to continue participating in high-demand and stable topics.
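The arithmetic of this split can be sketched as follows. The 75%/25% shares come from the text; the emission amount, the topic names, and above all the `topic_weight` formula (a geometric mean of Reputer stake and fee income) are assumptions for illustration, since the report only says that the topic weight depends on both quantities.

```python
import math

PARTICIPANT_SHARE = 0.75  # workers and Reputers, per the text
VALIDATOR_SHARE = 0.25    # validators, per the text

def topic_weight(stake, fees):
    """Assumed form: geometric mean of Reputer stake and fee income."""
    return math.sqrt(stake * fees)

def split_emission(emission, topics):
    """Split one period's emission between validators and topics."""
    validator_pool = emission * VALIDATOR_SHARE
    participant_pool = emission * PARTICIPANT_SHARE
    weights = {
        name: topic_weight(t["stake"], t["fees"]) for name, t in topics.items()
    }
    total = sum(weights.values())
    per_topic = {name: participant_pool * w / total for name, w in weights.items()}
    return validator_pool, per_topic

# Hypothetical topics: same fee income, different Reputer stake.
topics = {
    "btc_1h": {"stake": 400.0, "fees": 100.0},
    "eth_1h": {"stake": 100.0, "fees": 100.0},
}
validator_pool, per_topic = split_emission(1000.0, topics)
# validator_pool is 250; btc_1h receives 500 and eth_1h receives 250
```

Within each topic, the pool would then be divided further according to worker performance and Reputer stake, which this sketch leaves out.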

3. From On-Chain to Various Industries

3.1. Upcoming Allora Mainnet

Allora established the Allora Foundation on January 10, 2025, and is accelerating the launch of the mainnet after completing a public testnet with over 300,000 participating workers. As of February 6, Allora is selecting AI model creators for the upcoming mainnet through the Allora Model Forge Competition.

Allora Model Forge Competition categories

Source: Allora Model Forge Competition

Furthermore, ahead of the mainnet launch, Allora has established partnerships with a number of projects. Allora's main partnerships and the functions it provides through them are as follows:

Plume: Provides RWA prices, real-time APY, and risk predictions on the Plume network.

Story Protocol: Offers IP value assessments and potential analyses, price information for non-circulating on-chain assets, and Allora inferences for DeFi projects based on Story Protocol.

Monad: Provides price information for illiquid on-chain assets and Allora inferences for DeFi projects based on Monad.

0xScope: Uses Allora's context awareness features to support the development of the on-chain assistant AI Jarvis.

Virtuals Protocol: Enhances agent performance by integrating Allora inferences with the G.A.M.E framework of Virtuals Protocol.

Eliza OS (formerly ai16z): Enhances agent performance by integrating Allora inferences with the Eliza framework of Eliza OS.

Currently, Allora's partners are primarily focused on AI/cryptocurrency projects, reflecting two key factors: 1) the demand for decentralized inferences in cryptocurrency projects, and 2) the need for on-chain data required for AI models to execute inferences.

For the early mainnet launch, Allora expects to allocate a significant amount of inflationary rewards to attract participants. To encourage these participants attracted by inflationary rewards to remain active, Allora needs to maintain an appropriate value for $ALLO. However, as inflationary rewards gradually decrease over time, the long-term challenge will be to generate sufficient network transaction fees by increasing inference demand to incentivize continued participation in the protocol.

Allora's prospects therefore hinge on two things: its short-term strategy for supporting the value of $ALLO, and its ability to grow inference demand enough to secure stable, long-term fee income.

4. Conclusion

As AI technology advances and becomes more practical, AI inference is being actively adopted and implemented across many industries. However, the resources required to adopt AI are widening the competitive gap between large enterprises that have successfully integrated AI and small companies that have not. In this environment, demand for what Allora offers (topic-optimized inferences whose accuracy self-improves through decentralization) is expected to increase gradually.

Allora's goal is to become a decentralized inference infrastructure widely adopted across various industries, and achieving this goal requires demonstrating the effectiveness and sustainability of its functionality. To prove this, Allora needs to secure enough workers and Reputers after the mainnet launch and ensure that these network participants receive sustainable rewards.

If Allora successfully addresses these challenges and gains adoption across various industries, it will not only demonstrate the potential of blockchain as a significant AI infrastructure but also serve as an important example of how AI and blockchain technology can combine to create real value.
