The more AI applications are built on "application-oriented large models," the better their chances of success, at least in theory.

Image source: Generated by Wujie AI

The Spring Festival holiday of 2025 has just passed, but the shockwaves caused by DeepSeek are still lingering.

Through techniques such as FP8 training, multi-token prediction, an improved Mixture-of-Experts (MoE) architecture, multi-head latent attention (MLA), and reinforcement learning without SFT, DeepSeek-V3 has surpassed top open-source models like Qwen2.5-72B and Llama-3.1-405B, as well as some closed-source models, at an extremely low training cost. DeepSeek-R1 has even demonstrated reasoning performance that exceeds that of OpenAI's o1.

The success of the DeepSeek series has opened up new pathways for the large model industry, whose core logic had been raw computing power, and has lifted global foundational large models to a new level.

However, beyond foundational large models like DeepSeek, there is another category of large model development worth noting: application-oriented large models that innovate AI technology around core products and core scenarios.

China has always been a major player in applications.

In 2024, as the supply of computing power gradually increased and inference costs fell significantly, domestic AI applications emerged in force. In text-to-image and text-to-video there were Jdream AI, Miaoduck Camera, and Kuaishou Keling; in AI search, Nano Search (formerly 360AI Search) and Tiangong AI Search; in AI companionship, Xingye and Cat Box; and among AI assistants, Doubao, Quark, Kimi, and Tongyi. All saw explosive user growth during the year.

These AI applications rely on the support of underlying model capabilities. For AI applications, the competition among application-oriented large models is not about model parameters, but about application effectiveness.

For example, Kimi's ability to gain significant attention in a short time is closely related to the long text reading and parsing capabilities of its underlying large model; Quark's 200 million user base and 70 million monthly active users benefit from the "user-friendly" nature of the Quark large model; Keling AI's powerful text-to-video and image-to-video functions depend on the support of the Keling large model.

The evolution of foundational large models is far from over, but as more companies invest in AI applications in 2025, the development of application-oriented large models will be a prerequisite for the broad explosion of AI applications.

1. Why Large Companies Have an Advantage in AI Applications

Large model technology is maturing and breaking through, computing infrastructure is steadily improving, national policy support keeps strengthening, killer applications like Sora and Suno have emerged, and investment and financing are growing strongly in fields like AI Agents, embodied intelligence, AI toys, and AI glasses. That 2025 will be the year AI applications explode has become a near consensus in the tech community.

The popularity of DeepSeek has reinforced this consensus: by raising the baseline capabilities of the industry's foundational models, it creates a better development environment for AI applications.

According to observations from "Gizhi Light Year," from the second half of 2024 to now, well-known investment institutions such as Hillhouse Capital, Matrix Partners, Baidu Ventures, and Inno have increased their investment in AI applications, especially targeting early-stage projects in the AI application field; some investors have stated that by the end of 2024, the number of AI application projects that have genuinely secured financing in the primary market is at least double the number of projects that have been publicly announced.

Sensor Tower data also shows that in 2024, global mobile users' spending on AI applications reached $1.27 billion, with AI-related applications achieving 17 billion downloads in the iOS and Google Play stores.

However, a harsh reality is that among the thousands of AI applications, only a few can sustain long-term operations, and even fewer can become wildly popular.

"Gizhi Light Year" previously reported on a website called "AI Graveyard," which cataloged 738 dead or discontinued AI applications, including some once-celebrated projects: OpenAI's AI voice recognition product Whisper.ai; FreewayML, a well-known wrapper ("shell") site for Stable Diffusion; StockAI; and the AI search engine Neeva, once seen as a "Google competitor" (see “AI Graveyard, and 738 Dead AI Projects | Gizhi Light Year”).

So, what kind of AI applications can operate sustainably and have vitality?

"Gizhi Light Year" believes that first, they must be model-centric, fully leveraging the model's capabilities; second, they must have strong user demand insight.

Looking ahead to AI industry trends in 2025, Microsoft CEO Satya Nadella said, “Applications centered around AI models will redefine various application fields in 2025.” In other words, applications that have fewer wrapper ("shell") layers, sit closer to the model, and make the most of the model's capabilities will attract more user engagement and retention.

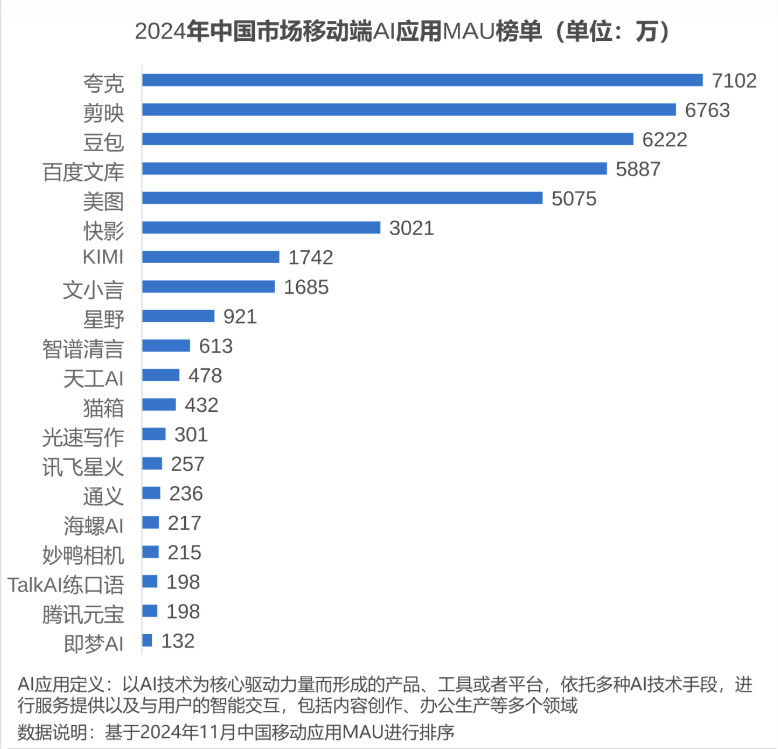

In Newbang's AI product rankings for January 2025, eight of the top ten applications on the domestic list are AI assistants built directly on models.

Image source: Newbang

Strong insight into user demand depends on a large user base: only with enough users can user data and labels accumulate sufficient depth for companies to uncover users' most genuine pain points.

These two points also imply that large companies have an advantage in developing AI applications.

Large companies have ample computing power and talent to develop models in-house, so they can deploy AI applications directly on self-developed models without multiple wrapper layers. They also have a vast user base and mature traffic channels, which provide richer user data, make it easier to uncover needs, and offer a natural advantage in promoting AI applications. In addition, their strong ecosystem integration helps give products richer functionality, increasing user stickiness.

The aforementioned product rankings also confirm this point. Among the top ten applications, six are from large companies.

In a recent interview with Tencent Technology, Zhu Xiaohu also stated that the data barriers for startups are not that high, making them unsuitable for developing foundational models; instead, they need to focus on capturing "customers" more tightly on top of foundational models. This indirectly corroborates the advantages of large companies in developing AI applications.

Overall, the models and applications of large companies reinforce each other, together forming a growth flywheel:

The data accumulation provided by a large user base offers high-quality training material for model development, helping to enhance model capabilities, making them better suited to niche scenarios and user needs; conversely, the growth of model capabilities feeds back into applications, endowing them with stronger product power and attracting more users.

This type of model, which has a large user base, is driven by user demand in its research direction, and performs better in niche scenarios, could perhaps be termed an "application-oriented large model." The more AI applications are built on the foundation of "application-oriented large models," the greater the opportunity for success they theoretically have.

For example, Quark, which ranks just below DeepSeek in the list, is a typical representative of this.

"Gizhi Light Year" has observed that in the recent fierce competition among AI applications, Quark, which was rarely mentioned before, is quietly leading the way. According to the latest data from Analysys, by the end of 2024, Quark ranked first among mobile AI applications with 71.02 million monthly active users, surpassing well-known competitors Doubao and Kimi.

Image source: Analysys

What’s even more noteworthy is the "user stickiness" metric.

According to third-party report statistics, Quark's three-day retention rate exceeds 40%, while the retention rates of the much-hyped Doubao and Kimi smart assistants during the same period are around 25%; the "2024 Annual Strength AI Product List" released by Qimai Data shows that Quark ranks first in both the "Annual Strength AI Product App List" and the "Annual Product Download List," with a cumulative download volume exceeding 370 million in 2024, achieving a significant lead over various AI products.
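For readers unfamiliar with the metric, an N-day retention rate is commonly computed as the share of users first seen on a given day who are active again N days later. The sketch below illustrates that common definition only. Analytics vendors differ in the details, and the report cited above does not state which definition it used. The function name, data layout, and sample data are all hypothetical.

```python
from datetime import date, timedelta


def n_day_retention(first_seen, activity, cohort_day, n=3):
    """Share of users first seen on cohort_day who were active exactly n days later.

    first_seen: dict mapping user_id -> date of first use
    activity:   dict mapping user_id -> set of dates on which the user was active
    Returns None when no user was first seen on cohort_day.
    """
    cohort = [u for u, d in first_seen.items() if d == cohort_day]
    if not cohort:
        return None
    target = cohort_day + timedelta(days=n)
    retained = sum(1 for u in cohort if target in activity.get(u, set()))
    return retained / len(cohort)


# Hypothetical sample data: five users who all first used the app on 1 Jan 2025.
day0 = date(2025, 1, 1)
first_seen = {u: day0 for u in ["a", "b", "c", "d", "e"]}
activity = {
    "a": {day0, date(2025, 1, 4)},
    "b": {day0, date(2025, 1, 2)},
    "c": {day0, date(2025, 1, 4)},
    "d": {day0},
    "e": {day0, date(2025, 1, 3), date(2025, 1, 5)},
}

rate = n_day_retention(first_seen, activity, day0, n=3)
print(f"3-day retention: {rate:.0%}")  # two of five users return on day 3 -> 40%
```

Under this definition, a three-day retention above 40% means that more than two in five new users open the app again on the third day after first use.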

Among the many AI products in the list, Quark was not the first to launch a large model, but it has quietly achieved a significant lead in traffic, download volume, and user stickiness. What allows Quark to stand out in a competitive market?

All of this is thanks to Quark's "application-first" product and model strategy.

2. Application-First, Driving the Scenario-Based Upgrade of Large Models

From the very first day of focusing on "intelligent precise search," Quark has not only quickly carved out a niche in the market with its simple, ad-free interface and more accurate search results, but has also derived vertical products like Quark Cloud Disk, Quark Scan King, Quark Document, and Quark Learning around student and office worker demographics based on its search business, gradually segmenting its scenarios into learning and work fields.

For example, in the learning field, Quark launched its "photo search for questions" feature in mid-2020. During the pandemic, when many students were confined at home and struggled to study effectively, the Quark learning team upgraded the feature multiple times.

In the office field, Quark also started from the vertical scenario of "scanning," launching a series of related features such as text extraction, table extraction, handwriting removal, document scanning, and document format conversion.

With a minimalist tool base, increasingly rich scenario applications, and an initial ad-free, no-charge user acquisition ecosystem, Quark's user base surged from millions to tens of millions, cumulatively serving over 100 million users.

In November 2023, Quark launched the trillion-parameter large model "Quark Large Model."

The Quark Large Model is a multimodal large model independently developed by Quark on the Transformer architecture. It is trained and fine-tuned daily on hundreds of millions of image and text samples and is characterized by low cost, high responsiveness, and strong comprehensive capabilities. Aimed at user needs and Quark's vertical product scenarios, it focuses on practical applications and has given rise to specialized models in general knowledge, healthcare, education, and other areas that provide more professional and precise technical capabilities.

At the same time as the launch of the Quark Large Model, Quark upgraded the AI recognition effects of its scanning products and the AI search capabilities of its cloud disk products.

The first application scenario of the Quark Large Model is health and medical.

In December 2023, Quark announced a comprehensive upgrade of its health search function and launched the "Quark Health Assistant" AI application. The "Quark Health Assistant" integrates a medical knowledge graph and generative dialogue capabilities, providing users with more comprehensive and accurate health information, and supports users in conducting multi-turn questions and dialogues regarding health issues.

In January 2024, Quark successively launched features such as "AI Learning Assistant," "AI Listening and Note-taking," and "AI PPT," and in July 2024, it introduced a one-stop AI service centered around AI search on mobile devices. In August 2024, a new Quark PC version with "system-level all-scenario AI" capabilities was released.

For example, when a user searches for "Which attractions in Shanxi inspired the game 'Black Myth: Wukong'?", the Quark super search box integrates AI responses, original sources, and historical searches. Like other AI search products it generates an intelligent summary, but it also displays sources in the sidebar and keeps a traditional list of web page results below the AI answer. This makes information retrieval more efficient for users and increases the credibility of AI responses.

Additionally, Quark has built a one-stop information service system around the "super search box," including intelligent tools such as cloud storage, scanning, document processing, and health assistants, achieving a full-process service from retrieval to creation, summarization, editing, storage, and sharing, providing users with a seamless information service experience.

Unlike many large companies that imitate ChatGPT to launch "All in One" chatbot-type AI assistants, Quark's strategy is "AI in All"—integrating AI capabilities into every aspect of the product and applying them to specific scenarios.

From the initial photo question search to college entrance examination consultation and intelligent office assistance, Quark's product evolution has always revolved around user needs in specific scenarios. Subsequently, Quark has continuously launched and updated features such as AI question search, AI academic search, and AI tips, creating differentiated AI applications focused on learning and office scenarios.

The development journey of Quark AI over the past year, illustrated by: Gizhi Light Year

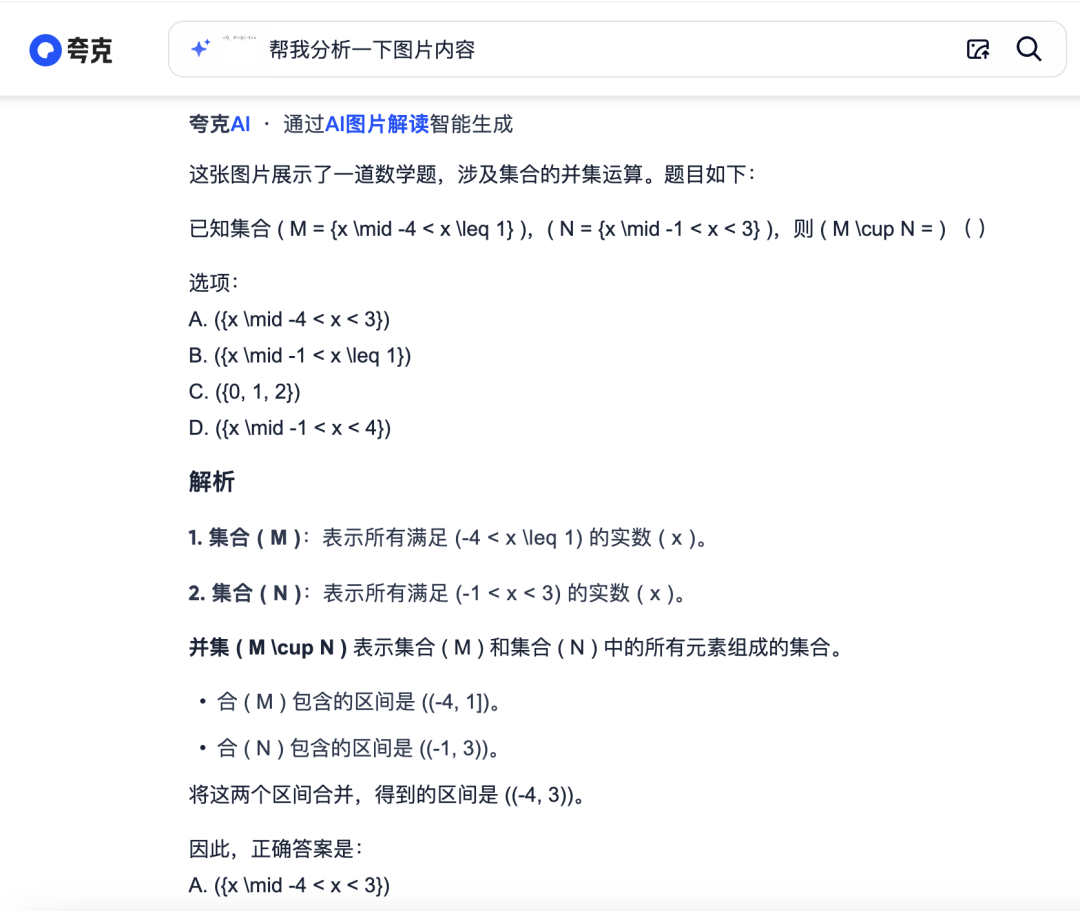

Among these, the upgraded "AI Question Search" feature in November 2024 is the most representative of Quark's AI capabilities.

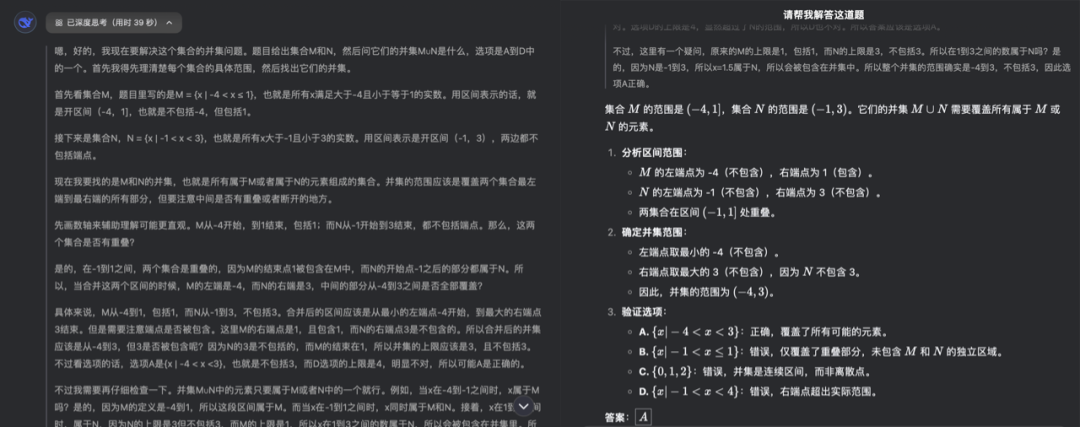

In fact, as early as December 2023, Quark launched the AI Topic Explanation Assistant. At that time, the assistant relied mainly on a question bank as its "knowledge base," and the AI could only teach users how to solve questions from that bank. The upgraded AI question search product is more "intelligent": it can answer questions already in the question bank and also tackle new and difficult problems. By applying the large model's Chain of Thought (CoT) capability, Quark's AI question search presents the problem-solving reasoning and steps in sequence, giving users more detailed analysis and learning guidance.

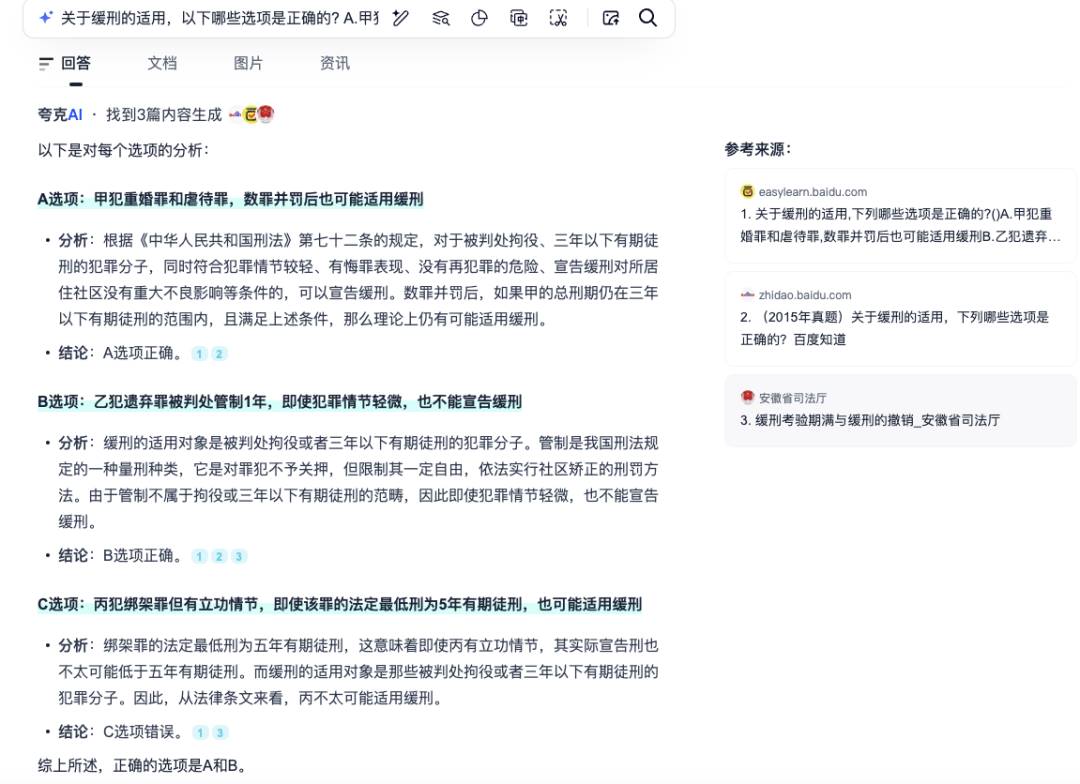

Compared to similar question search products that mostly rely on question banks and can only answer K12-level questions, Quark's AI question search product can answer new questions in the K12 field as well as specialized questions for graduate entrance exams, civil service exams, and various qualification exams. Users only need to take a photo or screenshot, and Quark can find the corresponding question and provide step-by-step graphical, textual, and video content along with professional answers from AI. Additionally, for questions in specialized fields such as law and medicine, Quark's "AI Question Search" can also provide answers.

Quark's response to judicial examination questions

At the same time, Quark's "AI Question Search" can leverage AI capabilities to provide in-depth explanations of knowledge points and exam points within the questions, accurately pinpointing key steps, allowing users not only to learn how to solve a specific question but also to apply that knowledge to similar questions.

The powerful capabilities of Quark's "AI Question Search" rely not only on Quark's years of experience in search and the accumulation of high-quality materials and user needs in learning scenarios but also on the support of the "Lingzhi" Learning Large Model launched around the same time.

The "Lingzhi" large model was trained by Quark's technical team based on the "Quark Large Model," utilizing high-quality data accumulated over years of deep cultivation in the education field. It possesses the Chain of Thought capability found in many top models and can translate the thought process into language that students can understand, aligning more closely with their learning processes.

In other words, when explaining a question to students, the "Lingzhi" large model knows which knowledge points to cover and how to construct the problem-solving thought process.

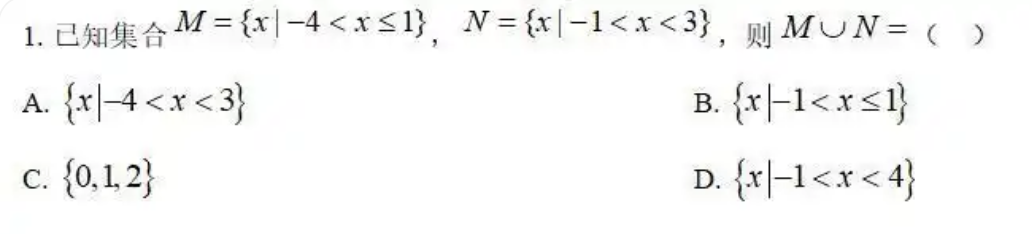

For example, when inputting the 2024 Beijing college entrance examination math questions into both DeepSeek and Quark, the responses are as follows:

Response from DeepSeek

Response from Quark

As can be seen, compared to DeepSeek's lengthy Chain of Thought narrative and detailed formal answer, Quark's answer is more concise and reads more like a teacher explaining the question.

Because the education industry involves many "knowledge explanation" and "popular science" scenarios, it places high demands on a model's multimodal capabilities. However, existing multimodal models struggle to recognize formulas and handwritten notes, and their fine-grained understanding of graphics is especially poor.

To address this issue, Quark's "Lingzhi" large model builds on a large-scale multimodal pre-trained foundation, adds a large-scale domain-specific training corpus, and adapts the model structure to improve understanding.

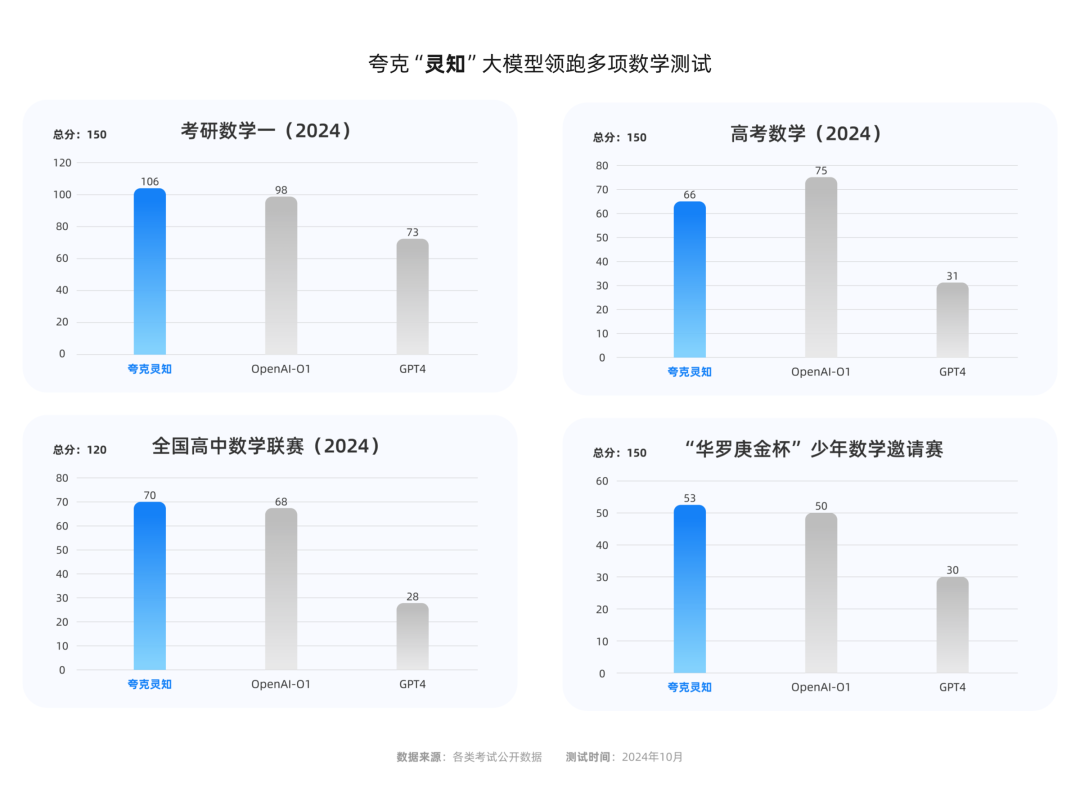

In the latest evaluations, Quark's "Lingzhi" learning large model has achieved accuracy and scoring rates in graduate entrance exam math questions that can rival OpenAI-o1, far surpassing other domestic models. In several important tests such as domestic math competitions and college entrance examinations, Quark's accuracy and scoring rates are also in an absolute leading position.

Display of "Lingzhi" large model math evaluation results

Image source: Quark

Unlike companies like DeepSeek that develop purely foundational model capabilities, Quark's model development is driven by user demand. For example, in AI writing, Quark's technical team developed the Quark Creative Model to meet the needs of Quark's young users for writing reports and papers. Using multi-stage CoT and retrieval enhancement techniques, it can generate long texts of over 8,000 words while adhering to word count requirements. By comparison, even DeepSeek can currently only generate articles of up to 3,000 words.

Additionally, Quark's AI writing function also serves as an "online text editor," allowing users to perform complex operations such as editing, polishing, and expanding the generated articles, all supported by the capabilities of the Quark Creative Model.

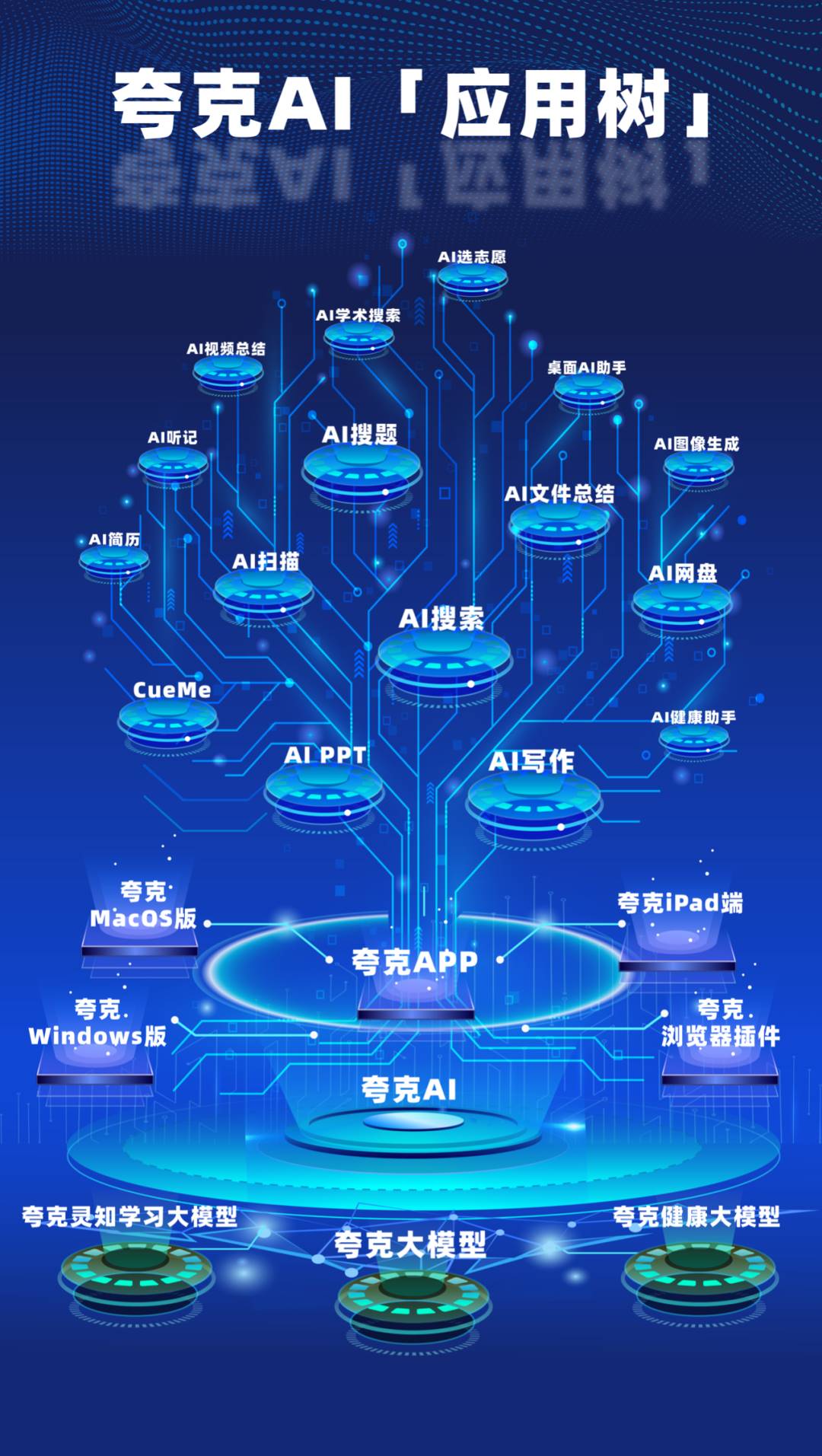

It can be said that while the world is focused on racing to scale up large model parameters, Quark has shifted more of its focus to practical application scenarios, upgrading and optimizing model capabilities based on user needs. Quark has now built system-level, all-scenario AI capabilities.

Image source: Quark

3. Alibaba's AI To C Acceleration

As one of Alibaba's four strategic innovation businesses, every move Quark makes represents not only itself but also the direction of Alibaba's entire AI To C business.

On January 15, Quark upgraded its brand slogan to "AI All-Purpose Assistant for 200 Million People," showcasing a new business posture accelerating the exploration of AI To C applications. Recently, Alibaba founder Jack Ma suddenly "appeared" at Alibaba's Hangzhou campus, visiting the office area where Quark and other AI To C businesses are located.

In recent times, Alibaba has been active in the AI To C field: first, "young" executive Wu Jia returned to Alibaba Group to explore AI To C business; then, Alibaba's AI application "Tongyi" was officially spun off from Alibaba Cloud and merged into Alibaba's Intelligent Information Business Group; and recently, media reports indicated that the hardware team of Tmall Genie is now working with the Quark product team, focusing on planning and defining the next generation of AI products and integrating with Quark's AI capabilities. After the team merger, the new team will also explore new hardware directions, including AI glasses.

Thus, Quark, the Tongyi app, and Tmall Genie will serve as productivity tools, chatbots, and AI hardware, respectively, providing users with differentiated services.

On February 6, Alibaba's To C business welcomed a heavyweight figure: world-renowned AI scientist Professor Steven Hoi officially joined Alibaba as Vice President, reporting to Wu Jia and responsible for foundational research and application solutions related to multimodal foundational models and agents in the AI To C business.

According to insiders, Professor Hoi's work on multimodal foundational models and agents is expected to significantly strengthen the end-to-end loop between models and applications in Alibaba's AI products. Once breakthroughs are achieved in multimodal foundational model capabilities, applications like Quark will have new room to explore in their businesses.

At the same time, Alibaba's AI To C business is assembling a top-tier AI algorithm research and engineering team, attracting many outstanding people from across the industry. Industry insiders say that the arrival of world-class scientists at the beginning of 2025 can be seen as an important signal of Alibaba's increased investment in talent and resources for AI To C. This large model team will support Alibaba's in-depth exploration in areas like multimodal agents and open up room for imagination in the next phase of building user-oriented AI application platforms.

Now, ByteDance has made significant investments in AI applications, rebooting its "App Factory" strategy through heavy investment in traffic, internal competitions, and active overseas expansion; Tencent has launched two products, "Yuanbao" and "Yuanqi," in the direction of AI assistants and intelligent agents, and has regained public attention with the recently launched personal knowledge management tool ima.copilot; Baidu has launched an AI product matrix including Wenxin Yiyan, Wenxin Yige, Chengpian AI, and Super Canvas, employing a "large and comprehensive" approach to conduct "saturation attacks" on competitors. Coupled with the efforts of new startups like the "Six Little Tigers" of large models and DeepSeek in AI applications, Alibaba's AI To C business faces fierce competition and significant pressure.

However, where there are challenges, there are solutions. Through its "AI in All" strategy, Quark has demonstrated that strong product power is achievable without competing on parameters, by relying on "application-oriented large models" and a precise understanding of user needs. This is another version of "low cost and high efficiency." With over 200 million users and a top-ranking monthly active user count, Quark's results support its approach and bode well for Alibaba's AI To C business.

As AI technology enters the "deep water zone" of applications, Quark's paradigm offers a key insight: true technological advancement lies not only in how many technological peaks are climbed but also in how many scientific achievements are transformed into tangible value at users' fingertips. Only when users vote for AI applications with their choices and actions can the battle to make AI technology practical reach the turning point that determines the future industrial landscape.
