The existence of the "Curse of Depth" poses serious challenges to the training and optimization of large language models.

High-performance large models typically require thousands of GPUs during training, taking months or even longer to complete a single training run. This enormous resource investment necessitates that every layer of the model be efficiently trained to ensure maximum utilization of computational resources.

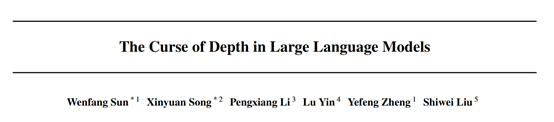

However, researchers from Dalian University of Technology, Westlake University, and the University of Oxford have found that models like DeepSeek, Qwen, Llama, and Mistral do not perform well in their deeper layers during training, and can even be completely pruned without affecting model performance.

For example, researchers conducted layer-wise pruning on the DeepSeek-7B model to assess the contribution of each layer to the overall performance of the model. The results showed that removing the deeper layers had a negligible impact on performance, while removing the shallower layers led to a significant decline in performance. This indicates that the deeper layers of the DeepSeek model failed to effectively learn useful features during training, while the shallower layers handled most of the feature extraction tasks.

This phenomenon is referred to as the "Curse of Depth," and researchers have proposed an effective solution—LayerNorm Scaling.

Introduction to the Curse of Depth

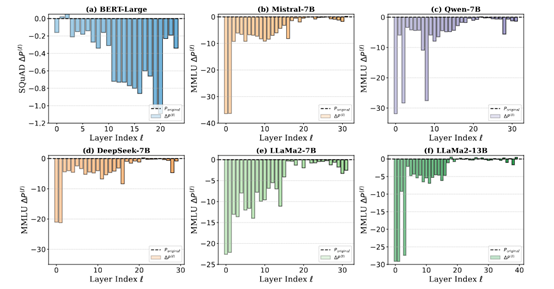

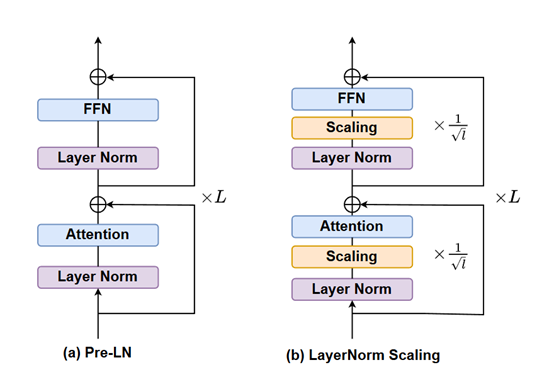

The root of the "Curse of Depth" phenomenon lies in the characteristics of Pre-LN. Pre-LN is a normalization technique widely used in Transformer architecture models, which normalizes the input at each layer rather than the output. While this normalization method stabilizes the training process of the model, it also introduces a serious problem: as the model depth increases, the output variance of Pre-LN grows exponentially.

This explosive growth in variance causes the derivatives of the deeper Transformer blocks to approach the identity matrix, resulting in these layers contributing almost no effective information during training. In other words, the deeper layers become identity mappings during training, unable to learn useful features.

The existence of the "Curse of Depth" presents serious challenges to the training and optimization of large language models. First, the insufficient training of deeper layers leads to resource wastage. Training large language models typically requires substantial computational resources and time. Due to the deeper layers failing to effectively learn useful features, computational resources are largely wasted.

The ineffectiveness of the deeper layers limits further improvements in model performance. Although the shallower layers can handle most of the feature extraction tasks, the ineffectiveness of the deeper layers prevents the model from fully leveraging its depth advantage.

Moreover, the "Curse of Depth" also poses challenges to the scalability of the model. As the model size increases, the ineffectiveness of the deeper layers becomes more pronounced, making training and optimization more difficult. For instance, when training ultra-large models, the insufficient training of deeper layers may slow down the model's convergence speed or even prevent convergence altogether.

Solution—LayerNorm Scaling

The core idea of LayerNorm Scaling is the precise control of the output variance of Pre-LN. In a multi-layer Transformer model, the layer normalization output of each layer is multiplied by a specific scaling factor. This scaling factor is closely related to the current layer's depth, being the reciprocal of the square root of the layer depth.

To provide a simple and understandable example, a large model is like a tall building, with each layer representing a floor, and LayerNorm Scaling finely adjusts the "energy output" of each floor.

For the lower floors (shallower layers), the scaling factor is relatively large, meaning their outputs are adjusted to a lesser extent, allowing them to maintain relatively strong "energy"; for the higher floors (deeper layers), the scaling factor is smaller, effectively reducing the "energy intensity" of the deeper outputs and preventing excessive accumulation of variance.

Through this approach, the output variance of the entire model is effectively controlled, eliminating the occurrence of deep layer variance explosion. (The entire computation process is quite complex; interested readers can refer directly to the paper.)

From the perspective of model training, in traditional Pre-LN model training, the continuous increase in deep layer variance significantly interferes with the gradients during backpropagation. The gradient information from the deeper layers becomes unstable, akin to a relay race where the baton keeps dropping during the later stages of the race, leading to poor information transfer.

This makes it difficult for the deeper layers to learn effective features during training, severely diminishing the overall training effectiveness of the model. LayerNorm Scaling stabilizes the gradient flow by controlling the variance.

During backpropagation, gradients can flow more smoothly from the model's output layer to the input layer, allowing each layer to receive accurate and stable gradient signals, thereby enabling more effective parameter updates and learning.

Experimental Results

To validate the effectiveness of LayerNorm Scaling, researchers conducted extensive experiments on models of varying sizes, ranging from 130 million parameters to 1 billion parameters.

The experimental results showed that LayerNorm Scaling significantly improved model performance during the pre-training phase, reducing perplexity and decreasing the number of tokens required for training compared to traditional Pre-LN.

For instance, in the LLaMA-130M model, LayerNorm Scaling reduced perplexity from 26.73 to 25.76, while in the 1 billion parameter LLaMA-1B model, perplexity decreased from 17.02 to 15.71. These results indicate that LayerNorm Scaling not only effectively controls the variance growth of deeper layers but also significantly enhances the training efficiency and performance of the model.

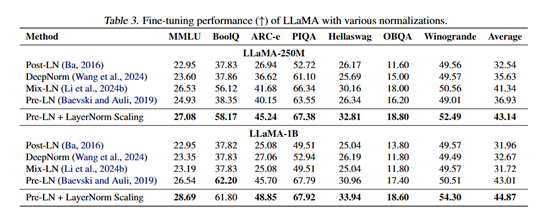

Researchers also evaluated the performance of LayerNorm Scaling during the supervised fine-tuning phase. The experimental results showed that LayerNorm Scaling outperformed other normalization techniques across multiple downstream tasks.

For example, in the LLaMA-250M model, LayerNorm Scaling improved performance on the ARC-e task by 3.56%, with an average performance improvement of 1.80% across all tasks. This indicates that LayerNorm Scaling not only performs excellently during the pre-training phase but also significantly enhances model performance during the fine-tuning phase.

Additionally, researchers replaced the normalization method of the DeepSeek-7B model from traditional Pre-LN to LayerNorm Scaling. Throughout the training process, the learning capability of the deeper blocks was significantly enhanced, allowing them to actively participate in the model's learning process and contribute to performance improvements. The decrease in perplexity was more pronounced, and the rate of decline was also more stable.

免责声明:本文章仅代表作者个人观点,不代表本平台的立场和观点。本文章仅供信息分享,不构成对任何人的任何投资建议。用户与作者之间的任何争议,与本平台无关。如网页中刊载的文章或图片涉及侵权,请提供相关的权利证明和身份证明发送邮件到support@aicoin.com,本平台相关工作人员将会进行核查。