Author | Sid @IOSG

The Current State of Web3 Games

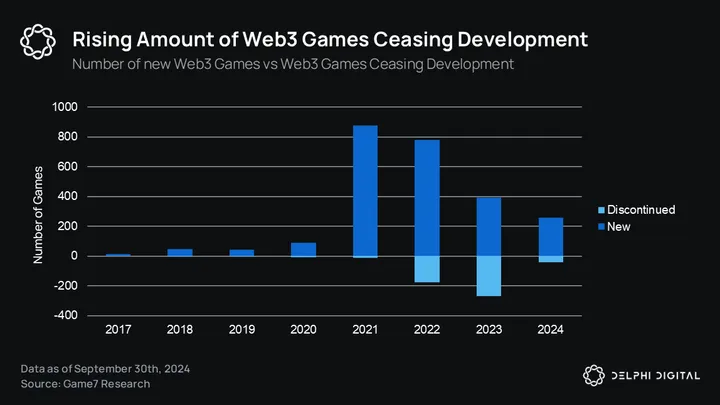

With newer, more attention-grabbing narratives emerging, Web3 gaming has taken a backseat in both primary and public markets. According to Delphi's 2024 report on the gaming industry, cumulative primary-market financing for Web3 games is less than $1 billion. This is not necessarily a bad thing; it indicates that the bubble has burst and that capital may now be moving toward higher-quality games. The following chart is a clear indicator:

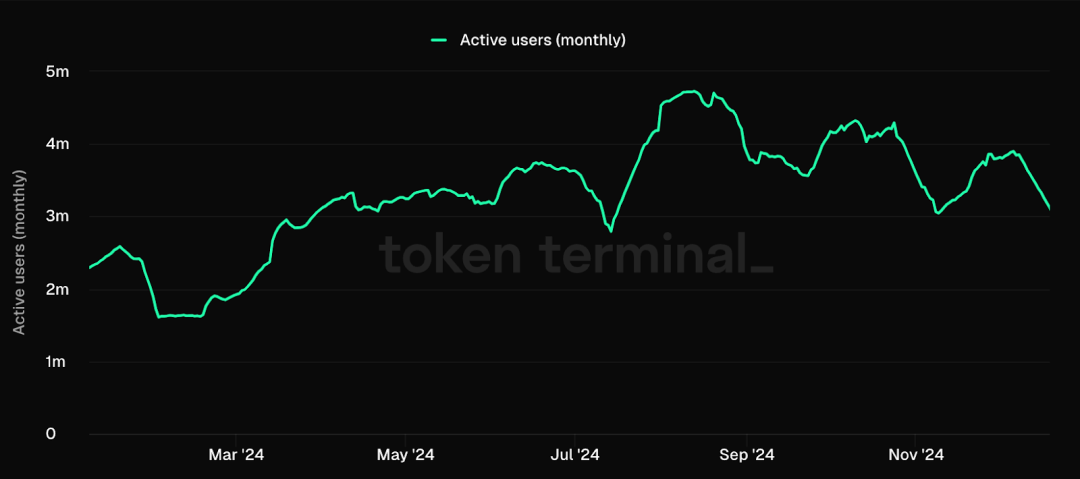

Throughout 2024, the number of users in gaming ecosystems like Ronin surged, and with the arrival of high-quality new games like Fableborn, activity is almost comparable to the glory days of Axie in 2021.

Gaming ecosystems (L1s, L2s, RaaS) increasingly resemble a Steam for Web3. They control distribution within the ecosystem, which gives game developers a strong reason to build there because it helps them acquire players. According to Delphi's previous reports, the user acquisition cost for Web3 games is about 70% higher than that of Web2 games.

Player Retention

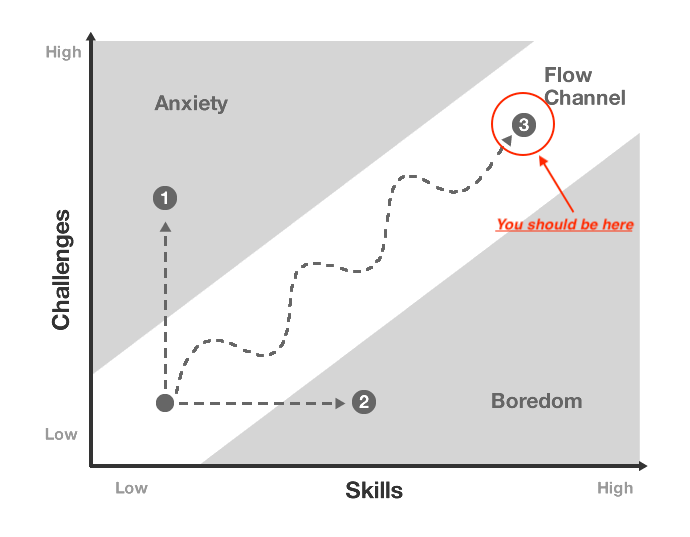

Retaining players is as important as attracting them, if not more so. Data on player retention rates in Web3 games is scarce, but retention is closely related to the concept of "flow" (a term coined by Hungarian psychologist Mihaly Csikszentmihalyi).

A "flow state" is a psychological state in which the challenge a player faces is well balanced against their skill level. It's like being "in the zone": time seems to fly by, and you are completely immersed in the game.

Games that continuously create flow states tend to have higher retention rates due to the following mechanisms:

#Progressive Design

Early Game: Simple challenges to build confidence

Mid Game: Gradually increasing difficulty

Late Game: Complex challenges to master the game

This fine-grained difficulty tuning keeps players in flow as their skills improve.
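One way to picture this kind of tuning is a simple dynamic difficulty adjustment loop. The sketch below is only an illustration: it assumes the player's recent win rate is a usable proxy for the balance between challenge and skill, and the function name `adjust_difficulty` and the `target`, `band`, and `step` values are hypothetical, not taken from any particular game.

```python
def adjust_difficulty(difficulty, recent_results, target=0.5, band=0.1, step=0.05):
    """Nudge difficulty (0.0 to 1.0) so the recent win rate stays near `target`.

    recent_results is a list of booleans (True = player won the encounter).
    All thresholds are illustrative assumptions.
    """
    if not recent_results:
        return difficulty  # no evidence yet, leave the setting alone

    win_rate = sum(recent_results) / len(recent_results)

    if win_rate > target + band:
        difficulty += step  # player is winning too easily: raise the challenge
    elif win_rate < target - band:
        difficulty -= step  # player is struggling: ease off

    # Keep the value inside the valid range.
    return min(1.0, max(0.0, difficulty))


# Usage: a player who won the last four encounters gets a slightly harder game.
new_level = adjust_difficulty(0.5, [True, True, True, True])
```

Small steps and a tolerance band are a deliberate choice here: they avoid difficulty swinging back and forth after every single result.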

#Engagement Loops

Short-term: Immediate feedback (kills, points, rewards)

Mid-term: Level completions, daily quests

Long-term: Character development, rankings

These nested loops can maintain player interest over different time frames.

#Factors that Disrupt Flow State

Improper difficulty or complexity settings: caused by poor game design or by mismatched player counts that leave matches imbalanced.

Unclear objectives: a game design issue.

Feedback delays: a game design or technical issue.

Intrusive monetization: a game design and product issue.

Technical issues and lag.

The Symbiosis of Games and AI

AI agents can help players achieve this flow state. Before exploring how to achieve this, let’s first understand what types of agents are suitable for the gaming field:

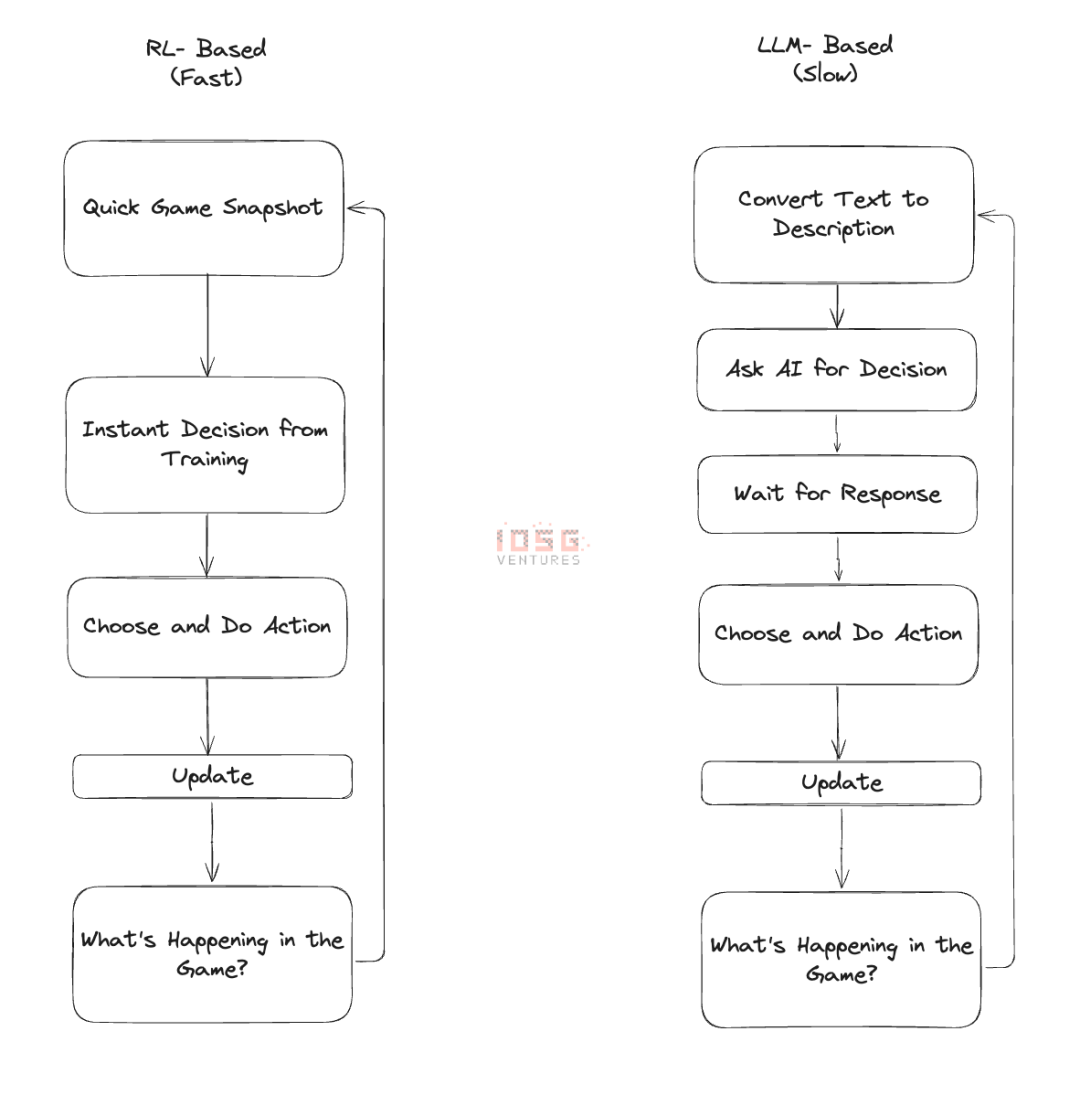

LLM and Reinforcement Learning

The key to game AI is speed and scale. When LLM-driven agents are used in a game, every decision requires a call to a large language model. It's like consulting a middleman before each step: the middleman is smart, but waiting for the response makes everything painfully slow. Now imagine doing this for hundreds of characters; it would be not only slow but also costly. This is the main reason we have not yet seen LLM agents deployed at scale in games. The largest experiment so far is a civilization of 1,000 agents in Minecraft. Running 100,000 concurrent agents across different maps would be extremely expensive, and each additional agent adds latency, which disrupts the players' flow state.
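A rough back-of-envelope calculation makes the cost and latency concern concrete. This is a sketch only: the function name `llm_agent_load` is made up for illustration, and every input in the usage example (decision interval, per-call latency, per-call price) is a hypothetical placeholder rather than a figure from any provider or game.

```python
def llm_agent_load(num_agents, decision_interval_s, latency_s, cost_per_call_usd):
    """Estimate the load created when every agent decision is one LLM call.

    All inputs are assumptions supplied by the caller.
    """
    # Each agent makes one call every `decision_interval_s` seconds.
    calls_per_sec = num_agents / decision_interval_s
    return {
        "calls_per_sec": calls_per_sec,
        "cost_per_hour_usd": calls_per_sec * 3600 * cost_per_call_usd,
        # Little's law: average requests in flight = arrival rate * latency.
        "in_flight_calls": calls_per_sec * latency_s,
    }


# Hypothetical inputs: 1,000 agents deciding every 5 s, 1 s per call, $0.001 per call.
small = llm_agent_load(1_000, decision_interval_s=5, latency_s=1.0, cost_per_call_usd=0.001)

# The same assumptions at 100,000 agents: every figure scales linearly.
large = llm_agent_load(100_000, decision_interval_s=5, latency_s=1.0, cost_per_call_usd=0.001)
```

Under these assumptions the bill and the number of simultaneous requests both grow in direct proportion to the agent count, which is the scaling problem described above.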

Reinforcement Learning (RL) is a different approach. We can think of it as training a dancer rather than giving step-by-step instructions through a headset. With reinforcement learning, you need to spend time teaching the AI how to "dance" and how to respond to different situations in the game. Once trained, the AI can make decisions naturally and smoothly within milliseconds without needing to ask for guidance. You can have hundreds of such trained agents running in your game, each capable of making independent decisions based on their observations. They may not be as articulate or flexible as LLM agents, but they are fast and efficient.
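The "train first, then act instantly" idea can be shown with tabular Q-learning, one of the simplest RL algorithms. The sketch below is illustrative only: the six-cell corridor environment, the hyperparameters, and the function names are all assumptions invented for this example, and production game agents would typically use neural-network policies rather than a table.

```python
import random

N_STATES = 6        # toy corridor: positions 0..5, with the goal at position 5
ACTIONS = (-1, +1)  # step left or step right


def step(state, action):
    """Move along the corridor; reward 1.0 only when the goal is reached."""
    nxt = min(N_STATES - 1, max(0, state + action))
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Slow part: learn a Q-table by trial and error (epsilon-greedy)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore at random sometimes, and whenever the two values are tied.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q


def act(q, state):
    """Fast part: at play time a decision is just a table lookup."""
    return ACTIONS[0] if q[state][0] > q[state][1] else ACTIONS[1]


q_table = train()
moves = [act(q_table, s) for s in range(N_STATES - 1)]  # greedy move per position
```

All the expensive work happens inside `train`; once it finishes, `act` involves no model call and no network round trip, which is why many such agents can run side by side.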

The true magic of RL shows when these agents need to work together. LLM agents require lengthy "conversations" to coordinate, while RL agents can form an implicit understanding during training, like a football team that has trained together for months. They learn to predict each other's actions and coordinate naturally. They are not perfect and may make mistakes that LLMs wouldn't, but they can operate at a scale that LLMs cannot match. For most game applications, this trade-off makes sense.

Agents and NPCs

Agents as NPCs can address a core issue many games face today: player liquidity, that is, having enough active players to populate the game world. P2E was the first experiment to use cryptoeconomics to solve the player liquidity problem, and we all know the outcome.

Pre-trained agents serve two purposes:

- Filling the world in multiplayer games.

- Maintaining a set difficulty level for a group of players in the world, keeping them in a flow state.
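Both purposes can be combined in a single matchmaking step: fill empty seats with agents, and pick the agents whose strength is closest to the humans already in the lobby. The sketch below assumes each player and each pre-trained agent carries a numeric skill rating; the function name `backfill_lobby`, the rating scale, and the agent names are hypothetical.

```python
from statistics import mean


def backfill_lobby(human_ratings, agent_pool, lobby_size):
    """Return names of agents to add so the lobby is full and evenly matched.

    human_ratings: skill ratings of the humans already in the lobby.
    agent_pool: list of (agent_name, rating) tuples for pre-trained agents.
    """
    empty_seats = lobby_size - len(human_ratings)
    if empty_seats <= 0 or not human_ratings:
        return []  # lobby already full, or no humans to match against

    target = mean(human_ratings)
    # Prefer agents whose rating is closest to the humans' average.
    ranked = sorted(agent_pool, key=lambda agent: abs(agent[1] - target))
    return [name for name, _ in ranked[:empty_seats]]


# Usage: two humans waiting for a four-player match.
pool = [("rookie_bot", 800), ("steady_bot", 1100), ("sharp_bot", 1150), ("elite_bot", 1500)]
picked = backfill_lobby([1000, 1200], pool, lobby_size=4)
```

Matching on the lobby average is the simplest possible rule; a real system would likely also consider rating spread and team composition.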

While this seems obvious, it is hard to build. Indie games and early-stage Web3 games lack the financial resources to hire AI teams, which creates an opening for service providers focused on RL-based agent frameworks.

Games can work with these service providers during playtesting and pre-launch testing to lay the groundwork for player liquidity at launch.

This way, game developers can focus their main efforts on game mechanics, making their games more enjoyable. While we love to integrate tokens into games, at the end of the day, games are games, and they should be fun.

Agent Players

One of the most popular games in the world, League of Legends, has a black market in which players train characters up to the best attributes and sell them, a practice the game prohibits.

This demand is the basis for representing game characters and attributes as NFTs and creating an open market for them.

What if a new subset of "players" emerged, acting as coaches for these AI agents? Players could train the agents and monetize them in various ways, such as winning matches, and the agents could also serve as "training partners" for esports players or passionate gamers.

The Return of the Metaverse?

Early versions of the metaverse may have simply created another reality rather than an ideal one, thus failing to meet their goals. AI agents help metaverse residents create an ideal world—an escape.

In my view, this is where LLM-based agents can shine. Perhaps someone could add pre-trained agents to their world, with these agents being domain experts capable of conversing about their favorite topics. If I created an agent trained on 1,000 hours of Elon Musk interviews, and a user wanted to use an instance of this agent in their world, I could be rewarded for that. This could create a new economy.

With metaverse games like Nifty Island, this could become a reality.

In Today: The Game, the team has created an LLM-based AI agent named "Limbo" (which has also released a speculative token). Their vision is a world where multiple agents interact autonomously while we watch on a 24/7 live stream.

How Does Crypto Integrate with This?

Crypto can help address these issues in various ways:

- Rewarding players who contribute their game data to improve models and, in turn, get a better experience.

- Coordinating multiple stakeholders, such as character designers and trainers, to create the best in-game agents.

- Creating a market for the ownership and monetization of in-game agents.

One team is working on all of the above and more: ARC Agents.

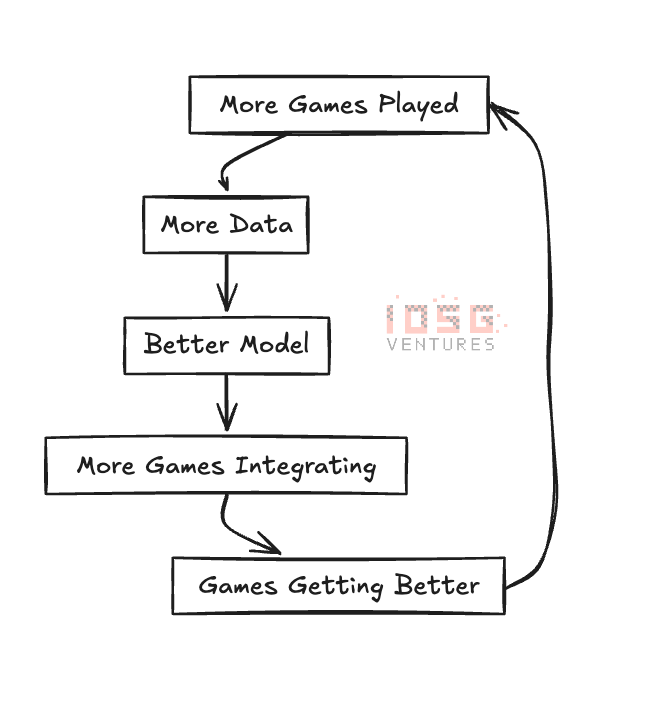

They have the ARC SDK, which allows game developers to create human-like AI agents based on game parameters. Through very simple integration, it can solve player liquidity issues, clean up game data and turn it into insights, and help players maintain flow states in games by adjusting difficulty levels. For this, they use reinforcement learning techniques.

They initially developed a game called "AI Arena," where you essentially train your AI character to fight. This helped them form a benchmark learning model that constitutes the foundation of the ARC SDK. This creates a sort of DePIN-like flywheel:

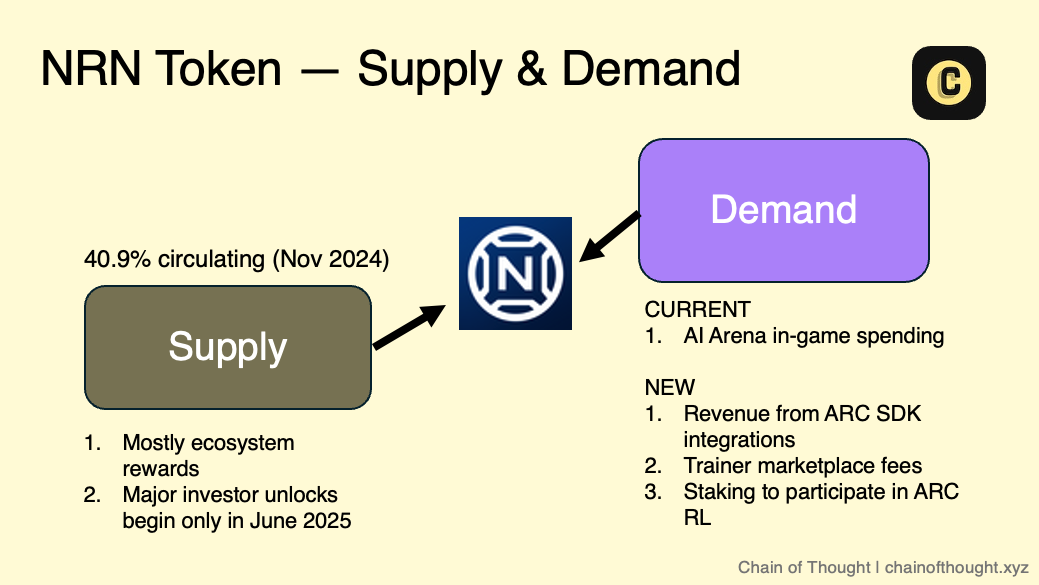

All of this is coordinated by their ecosystem token $NRN. The Chain of Thought team explains this well in their article about ARC Agents.

Games like Bounty are taking an agent-first approach, building agents from scratch in a wild west world.

Conclusion

The fusion of AI agents, game design, and crypto is not just another technological trend; it has the potential to solve several problems that plague indie games. The strength of AI agents in gaming lies in how they make games more fun: good competition, rich interactions, and challenges that keep players coming back. As frameworks like ARC Agents mature and more games integrate AI agents, we are likely to see entirely new gaming experiences emerge. Imagine a world that is vibrant not because of other players but because the agents within it can learn and evolve alongside the community.

We are transitioning from a "play-to-earn" model to a more exciting era: one that is rich in genuine fun and infinitely scalable gaming. The coming years will be thrilling for developers, players, and investors focused on this field. Games in 2025 and beyond will not only be more technologically advanced but fundamentally more engaging, accessible, and vibrant than any games we have seen before.
