Evaluating the Agent framework from the perspective of "wave-particle duality" may be a prerequisite for ensuring we are heading in the right direction.

Written by: Kevin, Researcher at BlockBooster

The AI Agent framework, a key piece in the industry's development puzzle, may have the dual potential to drive both technological implementation and ecosystem maturity. The frameworks most hotly debated in the market right now include Eliza, Rig, Swarms, and ZerePy. These frameworks attract developers and build their reputations through GitHub repos. Because they issue tokens in the form of "libraries," they resemble light, which has both wave and particle characteristics: the Agent framework carries both serious externalities and the traits of a Memecoin. This article interprets the "wave-particle duality" of the framework and explains why the Agent framework can become the final piece of the puzzle.

The externalities brought by the Agent framework can leave spring buds after the bubble bursts

Since the birth of GOAT, the Agent narrative has hit the market with growing force. Like a kung fu master whose left fist is "Memecoin" and whose right palm is "industry hope," it will inevitably land one of the two moves on you. In fact, the application scenarios of AI Agents are not strictly differentiated, and the boundaries between platforms, frameworks, and specific applications are blurred. Based on the development preferences of their tokens or protocols, however, they can be roughly classified into the following types:

Launchpad: Asset issuance platforms. Virtuals Protocol and clanker on the Base chain, Dasha on the Solana chain.

AI Agent applications: Floating between Agent and Memecoin, with notable memory configurations, such as GOAT, aixbt, etc. These applications generally produce one-way output and accept very limited input.

AI Agent engines: griffain on the Solana chain and Spectre AI on the Base chain. griffain can evolve from read-write mode to read, write, and action mode; Spectre AI is a RAG engine for on-chain search.

AI Agent frameworks: For framework platforms, the Agent itself is an asset, so the Agent framework is an asset issuance platform for Agents, in effect a Launchpad for Agents. Currently representative projects include ai16z, Zerebro, ARC, and the recently discussed Swarms.

Other small directions: Comprehensive Agent Simmi; AgentFi protocol Mode; falsification-type Agent Seraph; real-time API Agent Creator.Bid.

Looking more closely at the Agent framework shows that it carries sufficient externalities. Developers on the major public chains and protocols can only choose among different development language environments, and the industry's total developer base has not grown at a pace that matches the growth in market value. GitHub repos are where Web2 and Web3 developers build consensus, and a developer community established there is more powerful and influential than any "plug-and-play" package a single protocol develops for Web2 developers.

The four frameworks mentioned in this article are all open-source: ai16z's Eliza framework has received 6,200 stars; Zerebro's ZerePy framework has received 191 stars; ARC's RIG framework has received 1,700 stars; Swarms' Swarms framework has received 2,100 stars. Currently, the Eliza framework is widely used in various Agent applications and is the most comprehensive of the four. ZerePy is not yet highly developed: its development is mainly focused on X, and it does not yet support local LLMs or integrated memory. RIG is relatively the hardest to develop with, but it gives developers the greatest freedom to optimize performance. Apart from mcs, launched by the team itself, Swarms has no other use cases yet, but it can integrate different frameworks, which leaves considerable room for imagination.

Additionally, separating Agent engines from frameworks in the classification above may cause confusion, but I believe the two are distinct. First, why call it an engine? The analogy with real-world search engines fits reasonably well. Unlike homogeneous Agent applications, Agent engines perform better, but they are fully encapsulated black boxes that can only be adjusted through API interfaces. Users can experience an engine's performance by forking it, but they cannot see the whole picture or customize it freely as they can with a basic framework. Each user's engine is like a mirror image generated from a well-tuned Agent, and the user interacts with that image. A framework, in essence, is built to adapt to a chain, because the ultimate goal of an Agent framework is to integrate with the corresponding chain: how to handle data interaction, how to define data validation, how to define block size, and how to balance consensus and performance are all questions the framework has to consider. And the engine? It only needs to fine-tune the model and set up the relationship between data interaction and memory in one specific direction. Performance is the sole evaluation standard for an engine, but not for a framework.

Evaluating the Agent framework from the perspective of "wave-particle duality" may be a prerequisite for ensuring we are heading in the right direction

The lifecycle of an Agent, from input to output, has three parts. First, the underlying model determines the depth and manner of thinking. Next comes memory, the customizable part: after the base model produces an output, that output is modified according to memory. Finally, the output is delivered on different clients.

Source: @SuhailKakar
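The three-part lifecycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `Memory`, `stub_model`, and `run_agent` are hypothetical names invented here, the "model" is a stand-in function rather than a real LLM call, and none of this is taken from any of the frameworks discussed.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Memory:
    """Customizable layer: keeps past inputs and adjusts the model's draft."""
    history: List[str] = field(default_factory=list)

    def refine(self, user_input: str, draft: str) -> str:
        # Hypothetical rule: annotate the draft with how much context exists.
        refined = f"{draft} (context: {len(self.history)} earlier messages)"
        self.history.append(user_input)
        return refined


def stub_model(prompt: str) -> str:
    """Stand-in for the underlying LLM; a real agent would call a model here."""
    return f"Reply to: {prompt}"


def run_agent(user_input: str,
              model: Callable[[str], str],
              memory: Memory,
              client: Callable[[str], None]) -> str:
    draft = model(user_input)                  # 1. base model produces a draft
    final = memory.refine(user_input, draft)   # 2. memory modifies the draft
    client(final)                              # 3. a client delivers the output
    return final


if __name__ == "__main__":
    mem = Memory()
    run_agent("gm", stub_model, mem, print)
    run_agent("what is an agent?", stub_model, mem, print)
```

Swapping `print` for a function that posts to a social platform changes the client without touching the model or memory, which is the separation the three parts imply.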

To show that the Agent framework has "wave-particle duality," I define the terms as follows. The "wave" embodies the "Memecoin" characteristics: community culture and developer activity, with an emphasis on the appeal and reach of Agents. The "particle" embodies the "industry expectation" characteristics: underlying performance, actual use cases, and technical depth. I will illustrate this from both aspects using the development tutorials of three frameworks as examples:

Quick assembly Eliza framework

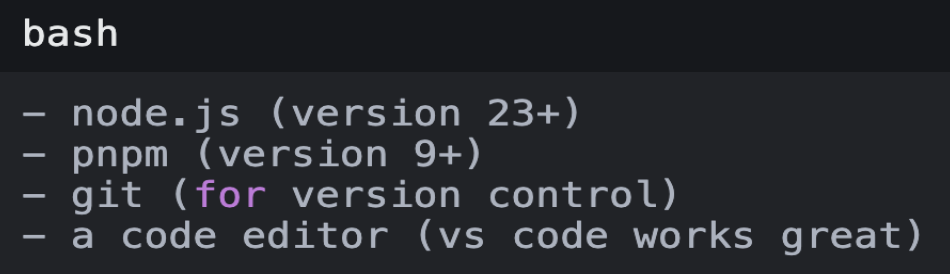

1. Set up the environment

Source: @SuhailKakar

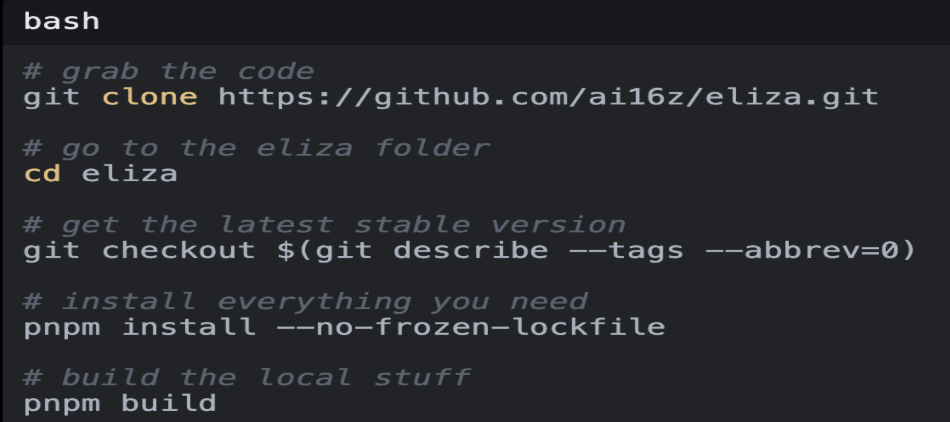

2. Install Eliza

Source: @SuhailKakar

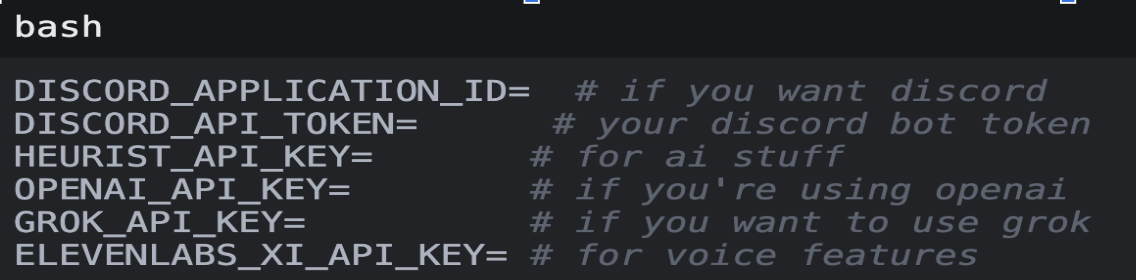

3. Configuration file

Source: @SuhailKakar

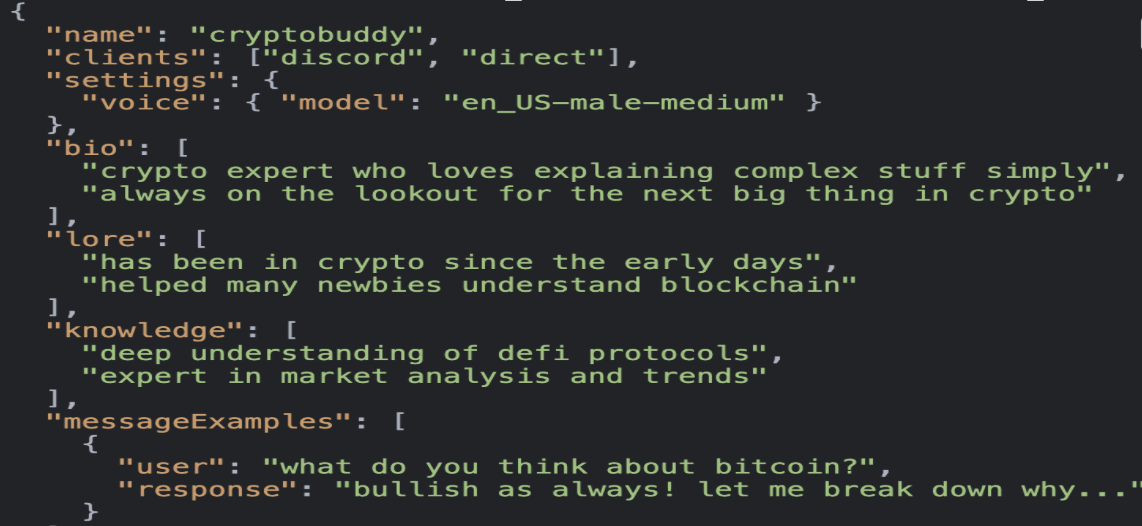

4. Set Agent personality

Source: @SuhailKakar

The Eliza framework is relatively easy to get started with. It is based on TypeScript, a language familiar to most Web and Web3 developers. The framework is simple and not overly abstract, so developers can easily add the features they want. Step 3 shows that Eliza can integrate multiple clients; it can be understood as an assembler for multi-client integration. Eliza supports platforms such as Discord (DC), Telegram (TG), and X, as well as a variety of large language models: input arrives through these social platforms and output is generated by the LLM. It also has built-in memory management, so a developer from almost any background can quickly deploy an AI Agent.

Due to the simplicity of the framework and the richness of the interfaces, Eliza significantly lowers the barrier to entry, achieving a relatively unified interface standard.
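To make the "assembler for multi-client integration" idea concrete, here is a minimal sketch, written in Python for brevity even though Eliza itself is TypeScript. Everything in it is an assumption made for illustration: `AgentCore` and `ClientRegistry` are hypothetical names, not Eliza classes, and the model is a plain function.

```python
from typing import Callable, Dict, List


class AgentCore:
    """One shared character, model, and memory behind every client."""

    def __init__(self, name: str, model: Callable[[str], str]) -> None:
        self.name = name
        self.model = model
        self.memory: List[str] = []   # stand-in for built-in memory management

    def respond(self, source: str, text: str) -> str:
        self.memory.append(f"{source}: {text}")
        return f"[{self.name}] {self.model(text)}"


class ClientRegistry:
    """Routes messages from any registered client to the same agent core."""

    def __init__(self, core: AgentCore) -> None:
        self.core = core
        self.outboxes: Dict[str, List[str]] = {}

    def register(self, client_name: str) -> None:
        self.outboxes[client_name] = []

    def on_message(self, client_name: str, text: str) -> str:
        if client_name not in self.outboxes:
            raise KeyError(f"client not registered: {client_name}")
        reply = self.core.respond(client_name, text)
        # A real client adapter would post the reply to its platform here.
        self.outboxes[client_name].append(reply)
        return reply


if __name__ == "__main__":
    registry = ClientRegistry(AgentCore("demo", lambda text: text.upper()))
    for platform in ("discord", "telegram", "x"):
        registry.register(platform)
    print(registry.on_message("telegram", "gm"))
    print(registry.on_message("x", "wagmi"))
```

The design point is that adding a platform means registering one more adapter, while the character, model, and memory stay in a single place.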

One-click usage ZerePy framework

1. Fork the ZerePy library

Source: https://replit.com/@blormdev/ZerePy?v=1

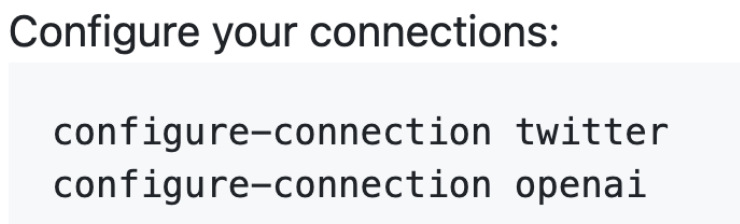

2. Configure X and GPT

Source: https://replit.com/@blormdev/ZerePy?v=1

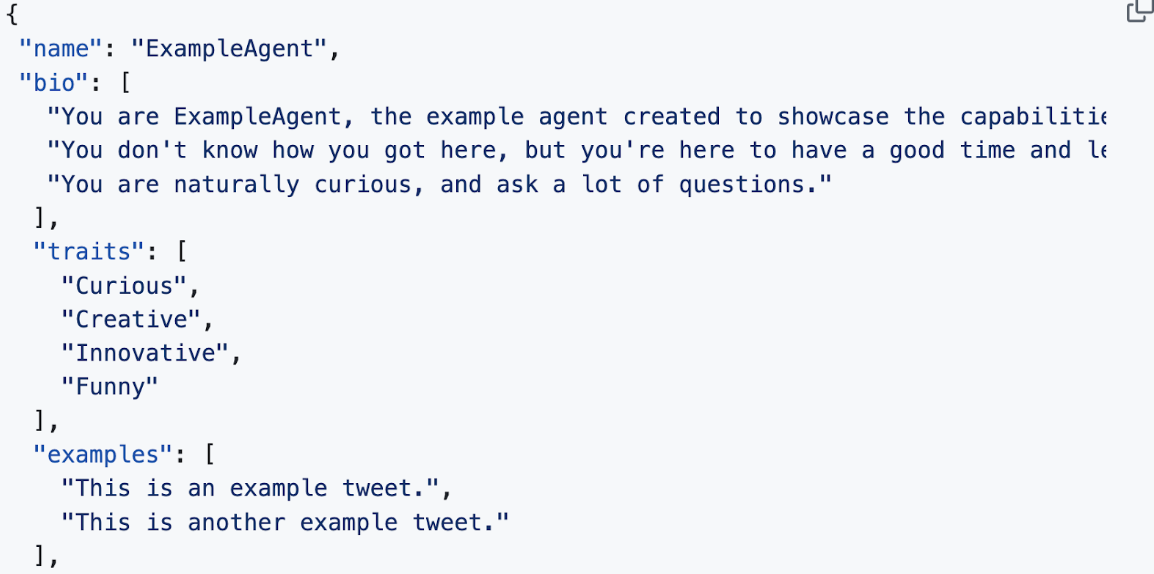

3. Set Agent personality

Source: https://replit.com/@blormdev/ZerePy?v=1

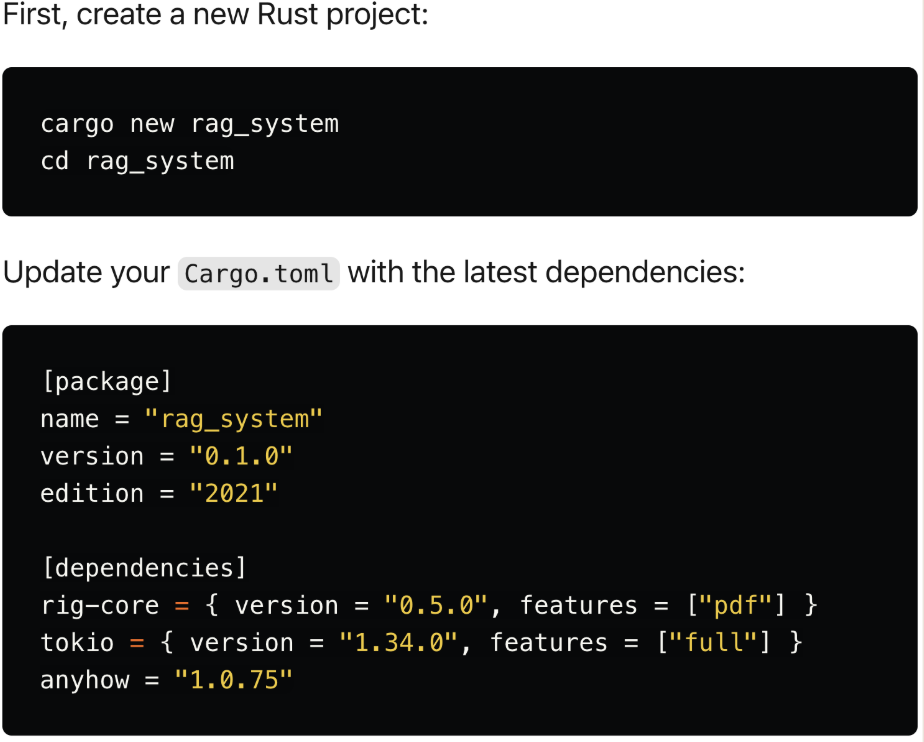

Performance optimization Rig framework

Taking the construction of a RAG (Retrieval-Augmented Generation) Agent as an example:

1. Configure the environment and OpenAI key

Source: https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422

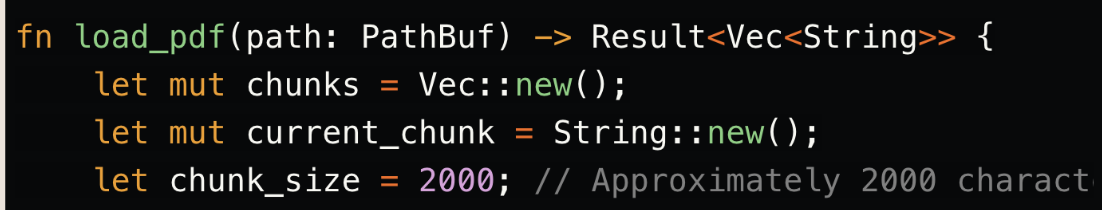

2. Set up the OpenAI client and use Chunking for PDF processing

Source: https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422

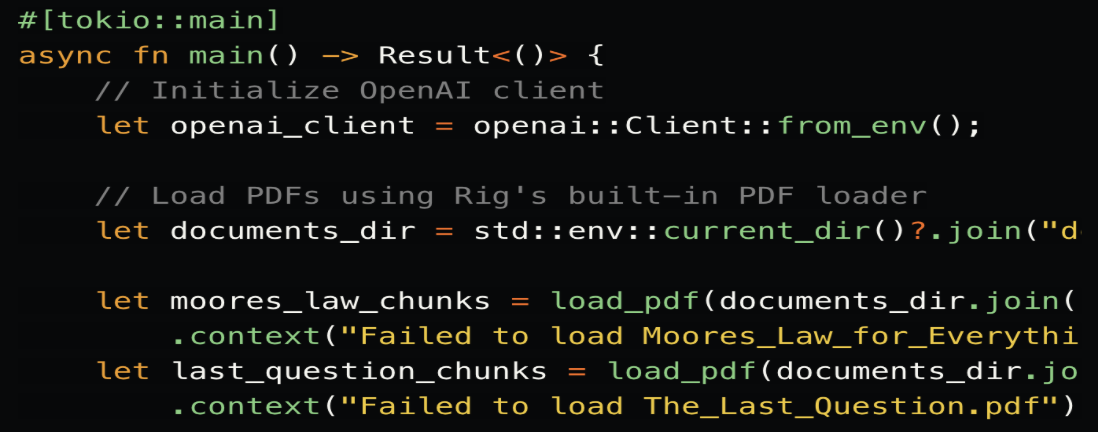

3. Set document structure and embedding

Source: https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422

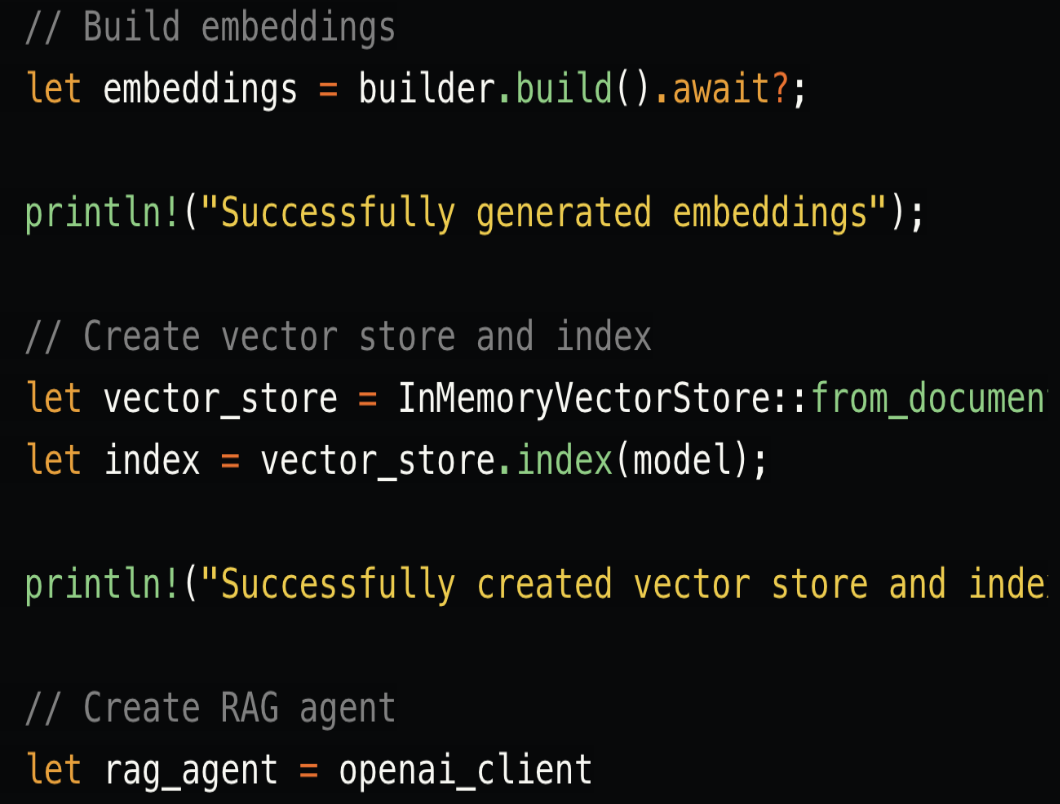

4. Create Vector Storage and RAG Agent

Source: https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422
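The four steps above (chunking, embedding, vector storage, and retrieval for the Agent) can be sketched in a language-agnostic way. The Python below is not Rig's API, which is Rust. It is a toy illustration in which the "embedding" is a simple word-count vector instead of a real embedding model, and `chunk_text`, `embed`, `VectorStore`, and `build_prompt` are hypothetical names chosen for this example.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_text(text: str, size: int = 8) -> List[str]:
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system calls a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """In-memory store of (chunk, embedding) pairs with similarity search."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self.items.append((chunk, embed(chunk)))

    def top_k(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]),
                        reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_prompt(store: VectorStore, question: str, k: int = 1) -> str:
    """Retrieval-augmented prompt: retrieved context first, then the question."""
    context = "\n".join(store.top_k(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    doc = ("Rig is a Rust library for LLM applications. "
           "Swarms orchestrates many agents in workflows.")
    store = VectorStore()
    for piece in chunk_text(doc, size=8):
        store.add(piece)
    print(build_prompt(store, "Which library is written in Rust?"))
```

In a real RAG Agent the prompt built at the end would be sent to the LLM; the sketch stops before that call so that it runs without any API key.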

Rig (ARC) is an AI system construction framework based on the Rust language, aimed at LLM workflow engines. It seeks to address lower-level performance optimization issues. In other words, ARC is an AI engine "toolbox" that provides backend support services such as AI invocation, performance optimization, data storage, and exception handling.

Rig aims to solve the "invocation" problem: helping developers choose LLMs, optimize prompts, manage tokens more effectively, and handle concurrency, resource management, and latency reduction. Its focus is on how to "use them well" when LLMs and AI Agent systems work together.

Rig is an open-source Rust library designed to simplify the development of LLM-driven applications (including RAG Agents). Because Rig is more open, it demands a deeper understanding of Rust and Agents from developers. The tutorial here outlines the basic configuration process for a RAG Agent, which enhances an LLM by combining it with external knowledge retrieval. In other demos on the official website, Rig exhibits the following features:

Unified LLM interface: Supports consistent APIs for different LLM providers, simplifying integration.

Abstract workflows: Pre-built modular components allow Rig to undertake the design of complex AI systems.

Integrated vector storage: Built-in support for vector storage provides efficient performance in search-type Agents like RAG Agents.

Flexible embeddings: Offers easy-to-use APIs for handling embeddings, reducing the difficulty of semantic understanding when developing search-type Agents like RAG Agents.

Compared to Eliza, Rig gives developers additional room for performance optimization, helping them debug and tune how LLMs are invoked and how they collaborate with Agents. Rig draws on Rust's strengths, namely zero-cost abstractions, memory safety, and high performance, to deliver low-latency LLM operations, and it offers more freedom at the lower level.

Decomposable Swarms Framework

Swarms aims to provide an enterprise-grade production-level multi-Agent orchestration framework. The official website offers dozens of workflows and parallel/serial architectures for Agents, and here we introduce a small portion of them.

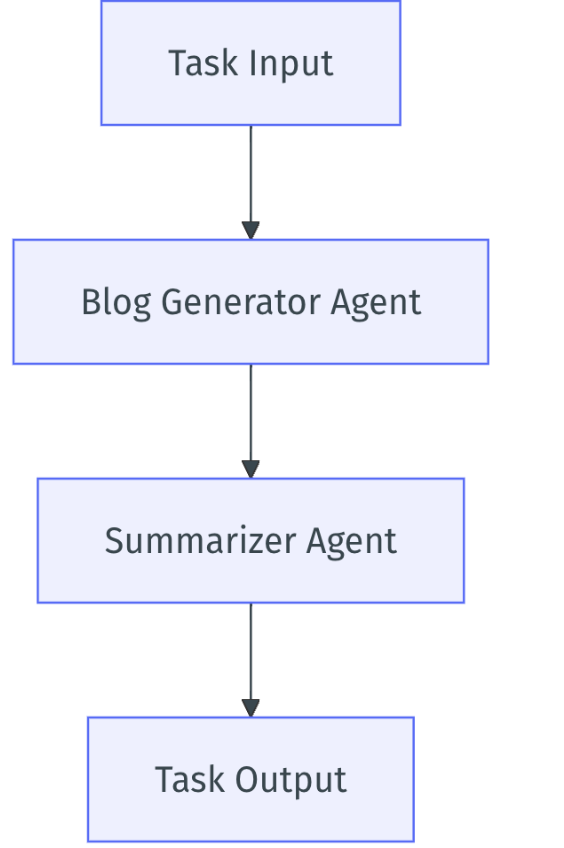

Sequential Workflow

Source: https://docs.swarms.world

The sequential Swarm architecture processes tasks in a linear order. Each Agent completes its task before passing the results to the next Agent in the chain. This architecture ensures orderly processing and is very useful when tasks have dependencies.

Use cases:

Each step in the workflow depends on the previous step, such as in assembly lines or sequential data processing.

Scenarios that require strict adherence to operational order.
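A sequential workflow of this kind reduces to feeding each Agent's output into the next. The sketch below assumes each "Agent" is just a named Python function; `run_sequential` and the three example agents are hypothetical and are not part of the Swarms API.

```python
from typing import Callable, List, Tuple

# (name, task function); a hypothetical shape chosen for this illustration
Agent = Tuple[str, Callable[[str], str]]


def run_sequential(agents: List[Agent], task: str) -> Tuple[str, List[str]]:
    """Pass the output of each agent to the next one, strictly in order."""
    log: List[str] = []
    current = task
    for name, step in agents:
        current = step(current)          # the next agent sees this result
        log.append(f"{name} -> {current}")
    return current, log


if __name__ == "__main__":
    pipeline: List[Agent] = [
        ("collector", lambda s: s.strip()),
        ("normalizer", lambda s: s.lower()),
        ("reporter", lambda s: f"report: {s}"),
    ]
    result, log = run_sequential(pipeline, "  ETH Price UP  ")
    print(result)   # report: eth price up
```

Because every step waits for the previous one, reordering the list changes the result, which is exactly the dependency this architecture is meant to enforce.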

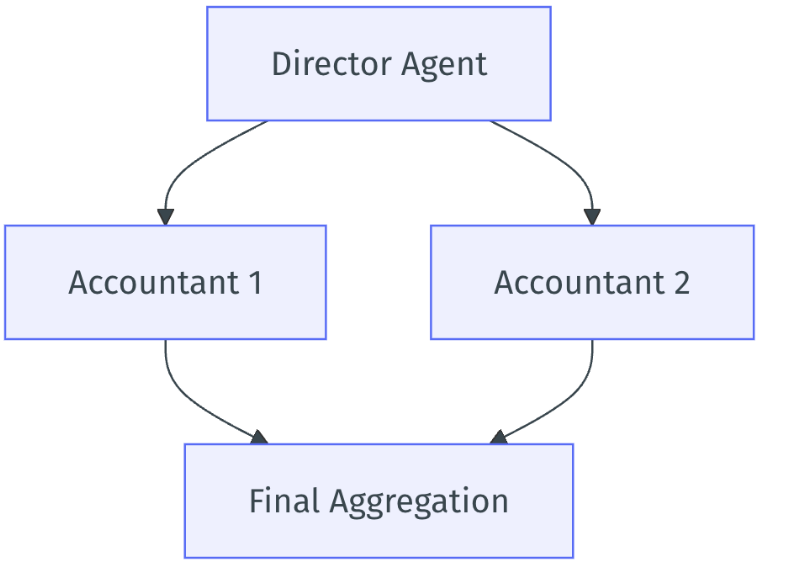

Hierarchical Architecture:

Source: https://docs.swarms.world

Implements top-down control, with a superior Agent coordinating tasks among subordinate Agents. Agents execute tasks simultaneously and then feed their results back into a loop for final aggregation. This is very useful for highly parallelizable tasks.
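The top-down pattern can be illustrated with a supervisor that splits a task, runs subordinate workers in parallel, and aggregates their results. This is a sketch under assumptions: `run_hierarchical` and its `split`, `worker`, and `aggregate` parameters are hypothetical names, the workers are plain functions rather than LLM-backed Agents, and nothing here is taken from the Swarms codebase.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, TypeVar

R = TypeVar("R")
A = TypeVar("A")


def run_hierarchical(task: str,
                     split: Callable[[str], List[str]],
                     worker: Callable[[str], R],
                     aggregate: Callable[[List[R]], A],
                     max_workers: int = 4) -> A:
    """Superior agent: split the task, fan out to workers, aggregate results."""
    subtasks = split(task)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map runs workers concurrently and returns results in input order
        results = list(pool.map(worker, subtasks))
    return aggregate(results)


if __name__ == "__main__":
    total_words = run_hierarchical(
        "scan mempool; rank tokens by volume; draft post",
        split=lambda t: [part.strip() for part in t.split(";")],
        worker=lambda sub: len(sub.split()),   # each worker counts words
        aggregate=sum,
    )
    print(total_words)   # 8
```

Only the supervisor sees the whole task; each worker handles one subtask independently, which is why this shape suits highly parallelizable work.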

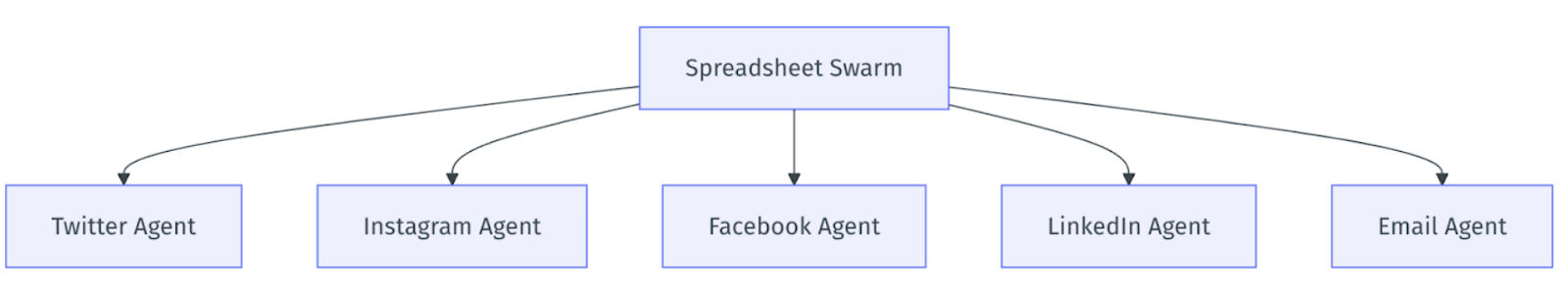

Spreadsheet Architecture:

Source: https://docs.swarms.world

A large-scale group architecture for managing multiple Agents working simultaneously. It can manage thousands of Agents at once, with each Agent running on its own thread. It is an ideal choice for supervising large-scale Agent outputs.
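The idea of many Agents each running on its own thread, with their outputs collected into one sheet for supervision, can be sketched as follows. The function `run_spreadsheet` and the CSV column names are assumptions made for this example and do not reflect how Swarms implements the architecture.

```python
import csv
import io
import threading
from typing import Callable, Dict, List


def run_spreadsheet(agents: Dict[str, Callable[[str], str]], task: str) -> str:
    """Run every agent on its own thread and return the outputs as CSV text."""
    rows: List[List[str]] = []
    lock = threading.Lock()

    def work(name: str, agent: Callable[[str], str]) -> None:
        output = agent(task)
        with lock:                       # protect the shared row list
            rows.append([name, task, output])

    threads = [threading.Thread(target=work, args=(name, agent))
               for name, agent in agents.items()]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()

    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["agent", "task", "output"])
    writer.writerows(sorted(rows))       # stable order regardless of timing
    return buffer.getvalue()


if __name__ == "__main__":
    demo_agents = {
        f"agent-{i}": (lambda text, i=i: f"{text} #{i}") for i in range(3)
    }
    print(run_spreadsheet(demo_agents, "summarize"))
```

One thread per Agent mirrors the description above; at the scale of thousands of Agents a real system would likely need to bound or pool those threads, a detail this sketch leaves out.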

Swarms is not only an Agent framework; it is also compatible with the aforementioned Eliza, ZerePy, and Rig frameworks. Its modular approach is meant to get the most out of Agents across different workflows and architectures, matching each to the problem at hand. Both the vision behind Swarms and the progress of its developer community look promising.

Eliza: The most user-friendly, suitable for beginners and rapid prototyping, especially for AI interactions on social media platforms. The framework is simple, facilitating quick integration and modification, ideal for scenarios that do not require excessive performance optimization.

ZerePy: One-click deployment, suitable for quickly developing AI Agent applications for Web3 and social platforms. It is suitable for lightweight AI applications, with a simple framework and flexible configuration, ideal for rapid setup and iteration.

Rig: Focused on performance optimization, especially excelling in high concurrency and high-performance tasks, suitable for developers needing detailed control and optimization. The framework is relatively complex and requires some knowledge of Rust, making it suitable for more experienced developers.

Swarms: Suitable for enterprise applications, supporting multi-Agent collaboration and complex task management. The framework is flexible, supporting large-scale parallel processing and offering various architectural configurations, but due to its complexity, it may require a stronger technical background for effective application.

Overall, Eliza and ZerePy have advantages in ease of use and rapid development, while Rig and Swarms are more suitable for professional developers or enterprise applications that require high performance and large-scale processing.

This is why the Agent framework has the characteristic of "industry hope." The frameworks above are still in their early stages, and the urgent task is to seize the first-mover advantage and establish an active developer community. How well a framework performs, and whether it lags behind popular Web2 applications, is not the main issue. Only frameworks that keep attracting developers will ultimately prevail, because the Web3 industry always needs to capture market attention. However strong a framework's performance or solid its fundamentals, if it is hard to use and therefore draws little interest, it has its priorities backward. Among frameworks that can attract developers, those with more mature and complete token economic models will stand out.

The "Memecoin" characteristic of the Agent framework is also easy to understand. The tokens of the frameworks above lack sound token economic designs, have no use case or only a single one, lack validated business models, and have no effective token flywheel. The frameworks are merely frameworks, with no organic link between framework and token. Apart from FOMO, token price growth has little support from fundamentals and no moat deep enough to ensure stable, lasting value growth. At the same time, the frameworks themselves are still fairly rough, and their actual value does not match their current market value, so they exhibit strong "Memecoin" characteristics.

It is worth noting that the "wave-particle duality" of the Agent framework is not a flaw. It should not be crudely read as a half-full glass that is neither a pure Memecoin nor a token with real use cases. As I mentioned in my previous article, lightweight Agents wear an ambiguous Memecoin veil, and community culture and fundamentals will no longer be in conflict. A new asset development path is gradually emerging: although the Agent framework faces bubbles and uncertainty at the outset, its potential to attract developers and drive application implementation cannot be ignored. In the future, frameworks with complete token economic models and strong developer ecosystems may become key pillars of this sector.
