o1 may represent the next generation of large models from OpenAI.

Source: Forbes

Compiled by: MetaverseHub

Last week, it was reported that OpenAI is raising $6.5 billion in a new round of financing, which would bring its valuation to $150 billion.

This financing once again affirmed the huge value of OpenAI as an artificial intelligence startup and indicated its willingness to make structural changes to attract more investment.

Insiders added that given the rapid growth of OpenAI's revenue, this large-scale financing has received strong interest from investors and may be finalized in the next two weeks.

Existing investors such as Thrive Capital, Khosla Ventures, and Microsoft are expected to participate. New investors, including Nvidia and Apple, also plan to take part, and Sequoia Capital is in talks to return as an investor.

At the same time, OpenAI has launched the o1 series, its most sophisticated artificial intelligence models to date, designed to excel at complex reasoning and problem-solving tasks. The o1 models use reinforcement learning and chain-of-thought reasoning, representing a significant advance in artificial intelligence capabilities.

OpenAI offers the o1 models to ChatGPT users and to developers through different access levels. In ChatGPT, subscribers to the ChatGPT Plus plan can access the o1-preview model, which has advanced reasoning and problem-solving capabilities.

OpenAI's application programming interface (API) gives developers access to the o1-preview and o1-mini models at the higher usage tiers.

These models are available at Tier 5 of the API, allowing developers to integrate the advanced capabilities of the o1 models into their own applications. Tier 5 is a higher usage tier of OpenAI's API, through which its advanced models are offered.
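For developers, a request to an o1 model has the same shape as any other Chat Completions call. The sketch below only builds the JSON body and does not send it. It assumes the launch-time behavior of the o1 models, where the output limit is set with `max_completion_tokens` and system messages are not accepted, so the prompt goes in a single user message. The helper name `build_o1_request` and the default token limit are illustrative, not part of OpenAI's API.

```python
import json

# Chat Completions endpoint of the OpenAI API.
API_URL = "https://api.openai.com/v1/chat/completions"

# Model names mentioned in the article.
O1_MODELS = ("o1-preview", "o1-mini")


def build_o1_request(prompt, model="o1-preview", max_completion_tokens=2000):
    """Build the JSON body for a Chat Completions request to an o1 model.

    Hypothetical helper: it assembles the payload but does not call the API.
    """
    if model not in O1_MODELS:
        raise ValueError(f"expected one of {O1_MODELS}, got {model!r}")
    return {
        "model": model,
        # Assumption: only user (and assistant) messages are accepted at launch.
        "messages": [{"role": "user", "content": prompt}],
        # Assumption: this limit covers hidden reasoning tokens plus the answer.
        "max_completion_tokens": max_completion_tokens,
    }


payload = build_o1_request("How many prime numbers are there below 100?")
print(json.dumps(payload, indent=2))
```

Sending the payload would require a POST to `API_URL` with an `Authorization: Bearer <API key>` header from an account that has access to the models.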

Here are 10 key points about the OpenAI o1 model:

01. Two Model Variants: o1-preview and o1-mini

OpenAI has released two variants: o1-preview and o1-mini. The o1-preview model performs well on complex tasks, while o1-mini is a faster, more cost-effective model optimized for STEM fields, especially coding and mathematics.

02. Advanced Chain-of-Thought Reasoning

The o1 model uses a chain-of-thought process to reason step by step before providing an answer. This deliberate approach improves accuracy on complex problems that require multi-step reasoning, making it superior to previous models such as GPT-4.

Chain-of-thought reasoning strengthens a model's reasoning ability by breaking a complex problem down into consecutive steps, which improves its logical and computational capabilities.

OpenAI's o1 model embeds this process into its architecture, simulating the way humans work through a problem.

This enables o1 to perform well in competitive programming, mathematics, and science, while also increasing transparency, as users can follow the model's reasoning process. It marks a leap toward human-like reasoning in artificial intelligence.

Because of this advanced reasoning, the model needs some time to think before it responds, so it can seem slower than the GPT-4 series models.

03. Enhanced Safety Features

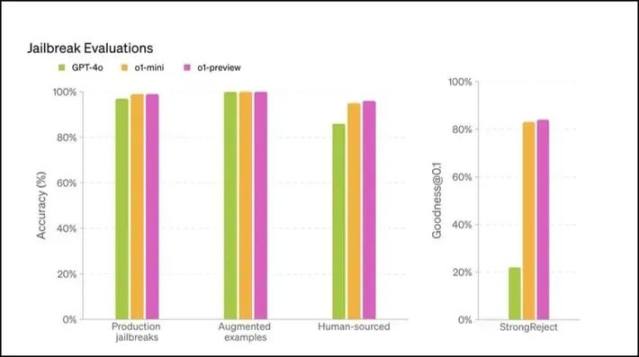

OpenAI has embedded advanced safety mechanisms in the o1 models. The models perform strongly in disallowed-content evaluations and show resistance to "jailbreaking," which makes them safer to deploy in sensitive use cases.

"Jailbreaking" an artificial intelligence model means bypassing its safety measures, which can easily lead to harmful or unethical outputs. As artificial intelligence systems become more complex, the safety risks associated with jailbreaking also increase.

OpenAI's o1 models, especially the o1-preview variant, score higher in safety testing, demonstrating stronger resistance to such attacks.

This resistance comes from the model's advanced reasoning capabilities, which help it adhere more closely to ethical guidelines and make it harder for malicious users to manipulate.

04. Better Performance in STEM Benchmark Tests

The o1 model ranks highly in various academic benchmark tests. For example, o1 ranks in the 89th percentile on Codeforces (programming competition) questions and places among the top 500 students in the US in a qualifier for the USA Mathematical Olympiad.
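The Codeforces figure is a percentile, not a position on a leaderboard: it means the model outperformed roughly 89% of the competitors it was compared against. As a minimal sketch of that arithmetic, the function below computes a percentile rank over a made-up list of ratings. The data and the function name are hypothetical and do not reproduce OpenAI's evaluation.

```python
def percentile_rank(score, all_scores):
    """Return the percentage of scores that are strictly below `score`."""
    below = sum(1 for s in all_scores if s < score)
    return 100.0 * below / len(all_scores)


# Hypothetical field of 100 competitors with ratings 1 through 100.
ratings = list(range(1, 101))

# A rating of 90 beats the 89 competitors rated 1 through 89.
print(percentile_rank(90, ratings))  # prints 89.0
```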

05. Reducing "Advanced Illusions"

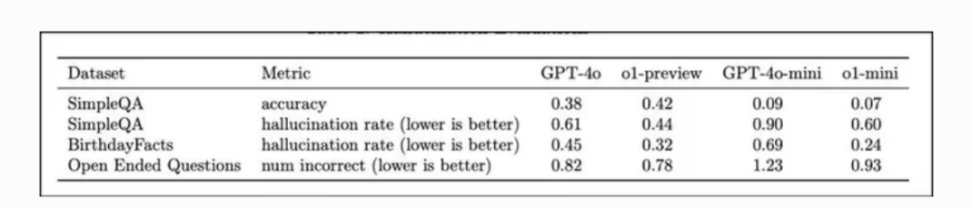

"Advanced illusions" in large language models refer to the generation of incorrect or unfounded information. OpenAI's o1 model addresses this issue using advanced reasoning and chain of reasoning processes, enabling it to think through problems step by step.

Compared to previous models, the o1 model reduces the occurrence of "illusions."

Evaluations on datasets such as SimpleQA and BirthdayFacts show that o1-preview outperforms GPT-4 in providing real, accurate answers, thus reducing the risk of misinformation.
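To make the comparison concrete, a factuality evaluation of this kind can be reduced to two numbers: accuracy (the share of questions answered correctly) and hallucination rate (the share answered incorrectly). The sketch below is a simplified illustration under assumptions: the three grade labels, the sample grades, and the definition of hallucination rate as incorrect answers over all questions are hypothetical and are not taken from OpenAI's evaluation code.

```python
from collections import Counter


def summarize_grades(grades):
    """Summarize graded answers into accuracy and hallucination rate.

    Each grade is one of "correct", "incorrect", or "not_attempted".
    Assumption: an incorrect answer counts as a hallucination, while
    declining to answer does not.
    """
    counts = Counter(grades)
    total = len(grades)
    return {
        "accuracy": counts["correct"] / total,
        "hallucination_rate": counts["incorrect"] / total,
        "not_attempted_rate": counts["not_attempted"] / total,
    }


# Hypothetical grades for ten questions, not real benchmark results.
grades = ["correct"] * 6 + ["incorrect"] * 3 + ["not_attempted"] * 1
print(summarize_grades(grades))
```

Under this definition, a model can lower its hallucination rate either by answering more questions correctly or by declining to answer when it is unsure, which is why both numbers are usually reported together.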

06. Trained on Diverse Datasets

The o1 model has been comprehensively trained on public, proprietary, and custom datasets, making it proficient in general knowledge and familiar with specific domain topics. This diversity gives it powerful conversational and reasoning abilities.

07. Affordable and Cost-Effective

OpenAI's o1-mini model is a cost-effective alternative to o1-preview, being 80% cheaper while still performing strongly in STEM fields such as mathematics and coding.

The o1-mini model is tailored for developers who require high precision at low cost, making it ideal for applications with limited budgets. This pricing strategy ensures that more people, especially educational institutions, startups, and small businesses, can access advanced artificial intelligence.
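The 80% figure can be checked with simple arithmetic. The sketch below assumes the list prices published at launch, in US dollars per one million tokens ($15 input and $60 output for o1-preview, $3 input and $12 output for o1-mini). These prices are an assumption for illustration and may have changed, and the function name `estimate_cost` is hypothetical.

```python
# Assumed launch list prices in USD per 1M tokens; check current pricing.
PRICES_PER_MILLION = {
    "o1-preview": {"input": 15.00, "output": 60.00},
    "o1-mini": {"input": 3.00, "output": 12.00},
}


def estimate_cost(model, input_tokens, output_tokens):
    """Estimate the cost in USD of one request under the assumed prices."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Same hypothetical workload on both models: 10,000 input and 5,000 output tokens.
preview_cost = estimate_cost("o1-preview", 10_000, 5_000)
mini_cost = estimate_cost("o1-mini", 10_000, 5_000)
savings = 1 - mini_cost / preview_cost

print(f"o1-preview: ${preview_cost:.2f}")
print(f"o1-mini:    ${mini_cost:.2f}")
print(f"savings:    {savings:.0%}")
```

Because both the input and the output price of o1-mini are one fifth of the o1-preview price under these assumptions, the saving works out to about 80% for any mix of input and output tokens. Hidden reasoning tokens are billed as output tokens, so real costs depend on how long the model thinks.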

08. Safety Work and External Red Teaming

For large language models (LLMs), "red teaming" means rigorously testing an artificial intelligence system by simulating adversarial attacks or using inputs that may lead the model to harmful, biased, or unintended behavior.

This is crucial for identifying vulnerabilities in content safety, misinformation, and ethical boundaries before a model is deployed at scale.

By using external testers and varied testing scenarios, red teaming helps make LLMs safer, more robust, and more ethically compliant. It also helps ensure that the models can resist jailbreaking and other forms of manipulation.

Before deployment, the o1 models underwent rigorous safety assessments, including red teaming and Preparedness Framework evaluations. These efforts help ensure that the models meet OpenAI's high safety and alignment standards.

09. Fairer, Less Biased

The o1-preview model is less likely than GPT-4o to give stereotyped answers. In fairness evaluations, it selects the correct answer more often and shows improvement in handling ambiguous questions.

10. Chain-of-Thought Monitoring and Deception Detection

OpenAI uses experimental techniques to monitor the o1 model's chain of thought and detect deceptive behavior, that is, cases where the model knowingly provides incorrect information. Preliminary results suggest the technique is promising for reducing the risk of the model generating misinformation.

OpenAI's o1 model represents a significant advancement in artificial intelligence reasoning and problem-solving, particularly excelling in STEM fields such as mathematics, coding, and scientific reasoning.

The high-performance o1-preview and the cost-effective o1-mini are optimized for a range of complex tasks, with stronger safety and ethical compliance backed by extensive red team testing.

Original source: https://www.forbes.com/sites/janakirammsv/2024/09/13/openai-unveils-o110-key-facts-about-its-advanced-ai-models/
