Author: Zhao Jian, Jiazi Light Year

Just 8 days after Ilya Sutskever, the chief scientist of OpenAI, announced his departure, OpenAI quietly recruited an entire security-related team.

The team comes from Indent, a data security startup based in California, USA. On May 23, Fouad Matin, the co-founder and CEO of Indent, announced on X that he would be joining OpenAI to lead security-related work.

Although neither party has announced the details, it is highly likely that Indent will be fully integrated into OpenAI. Indent announced on its website: "After careful consideration, we have made a very difficult decision, and Indent will be closed in the coming months." It added that its services would stop working after July 15.

It is worth mentioning that Sam Altman, the CEO of OpenAI, participated in the $5.6 million seed round financing of Indent in 2021, so the two parties are quite familiar with each other.

OpenAI has been in constant turmoil recently. One of the most notable events was the chain reaction triggered by Ilya Sutskever's departure, which led to the resignation of Jan Leike, the co-lead of the Superalignment team responsible for safety at OpenAI. The team they jointly led was established just last July, and it has now fallen apart.

However, a closer look shows that the Indent team, while bringing fresh blood to OpenAI's security work, has nothing to do with the Superalignment team.

The addition of the Indent team further confirms one thing: Sam Altman is turning OpenAI into a fully commercialized company.

1. Who is Indent?

Let's first introduce Indent.

Indent was founded in 2018 and works in data security. Its service is quite simple: it automates the approval process for access permissions.

For example, when an engineer needs to view production server logs or customer support needs administrator access to sensitive systems, they can use Indent's application to request access permissions without the help of the IT department. Reviewers can receive messages through Slack and approve them directly from there, and once the time expires, access permissions will be automatically revoked.
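The request, approve, and auto-expire flow described above can be illustrated with a minimal sketch. This is not Indent's actual API or implementation; the `AccessRequest` class, its field names, and the rule that requesters cannot approve their own requests are all assumptions made purely for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class AccessRequest:
    """Hypothetical time-boxed access request (illustrative only)."""
    requester: str
    resource: str
    duration: timedelta
    approved_by: Optional[str] = None
    expires_at: Optional[datetime] = None

    def approve(self, reviewer: str, now: datetime) -> None:
        # Assumed policy: a requester may not approve their own request.
        if reviewer == self.requester:
            raise ValueError("requesters cannot approve their own access")
        self.approved_by = reviewer
        # The grant is valid only for the requested duration.
        self.expires_at = now + self.duration

    def is_active(self, now: datetime) -> bool:
        # Access exists only after approval and before the expiry time,
        # so "revocation" happens automatically once the clock passes it.
        return self.expires_at is not None and now < self.expires_at


# Example: an engineer asks for one hour of access to production logs.
start = datetime(2024, 5, 23, 9, 0)
request = AccessRequest("engineer", "prod-logs", timedelta(hours=1))
request.approve("reviewer", now=start)
```

In this sketch nothing has to actively revoke the grant: every access check compares the current time against `expires_at`, so an expired grant simply stops being honored.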

Indent provides on-demand access control for everyone in the company, allowing them to access the content they need when they need it.

This seemingly simple service addresses an important need. As a team grows, more and more employees need access to more and more services, and approval can take days, weeks, or even months. The approval process can be simplified, but the simplest method is often not the correct one, as it may pose security risks. When critical business is involved, the difference between responding to a customer within a few hours or a few days can lead to completely different results.

Many companies use dozens of applications to handle critical services, collaboration, or customer data for different teams, each with dozens of different potential roles or sub-permissions, which can easily get out of control.

Indent provides the simplest and most secure way for teams to achieve democratized access management and accountability.

In 2023, with the rise of large models, Indent expanded its data security business to the field of large models.

In March 2024, Fouad Matin, co-founder and CEO of Indent, published an article titled "Million-Dollar AI Engineering Problems."

He wrote that model weights, biases, and the data used to train them are the crown jewels of artificial intelligence and a company's most valuable assets. Companies that develop custom models or fine-tune existing ones invest millions of dollars in engineering time, computing power, and training data collection.

However, large language models are at risk of being leaked. He used Llama as an example: Meta initially did not plan to make Llama completely open source and imposed some restrictions on it, but someone leaked it on 4chan. Meta had to go with the flow and make Llama completely open source.

Therefore, Indent specifically proposed security solutions for model weights, training data, and fine-tuning data.

2. Deep Ties with Altman

Indent and OpenAI have a long-standing relationship.

Indent has two co-founders, with Fouad Matin as CEO and Dan Gillespie as CTO.

Fouad Matin is an engineer, privacy advocate, and streetwear enthusiast. He previously worked on data infrastructure products at Segment. In 2016, he co-founded VotePlz, a non-partisan voter registration and turnout organization. He is passionate about helping people find satisfying work and previously founded a recommendation recruiting company through the YC W16 program.

Dan Gillespie was the first non-Google employee to manage a Kubernetes release and has been a regular contributor since the early days of the project. As his entry point into K8s, he co-founded a collaborative deployment tool company (YC W16) and served as its CTO, where he built Minikube. The company was acquired by CoreOS, which later became part of Red Hat and then part of IBM.

Their resumes show that both had close ties with YC in their early years. Sam Altman joined YC as a part-time partner in 2011 and served as its president from 2014 until he became the CEO of OpenAI in 2019.

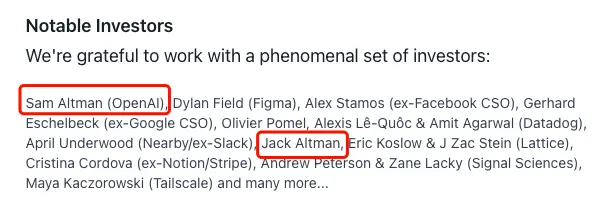

On December 21, 2021, Indent announced a $5.6 million seed round, with lead investors including Shardul Shah (partner at Index Ventures), Kevin Mahaffey (CTO of Lookout), and Swift Ventures. The co-investors included Sam Altman and his brother Jack Altman.

The close relationship between the two parties, as well as Indent's later involvement in the field of large model security, laid the groundwork for Indent's integration into OpenAI.

3. Indent is Not Joining the Superalignment Team

Is recruiting the entire Indent team a way to replenish OpenAI's Superalignment team? The answer is no, because these are two completely different teams.

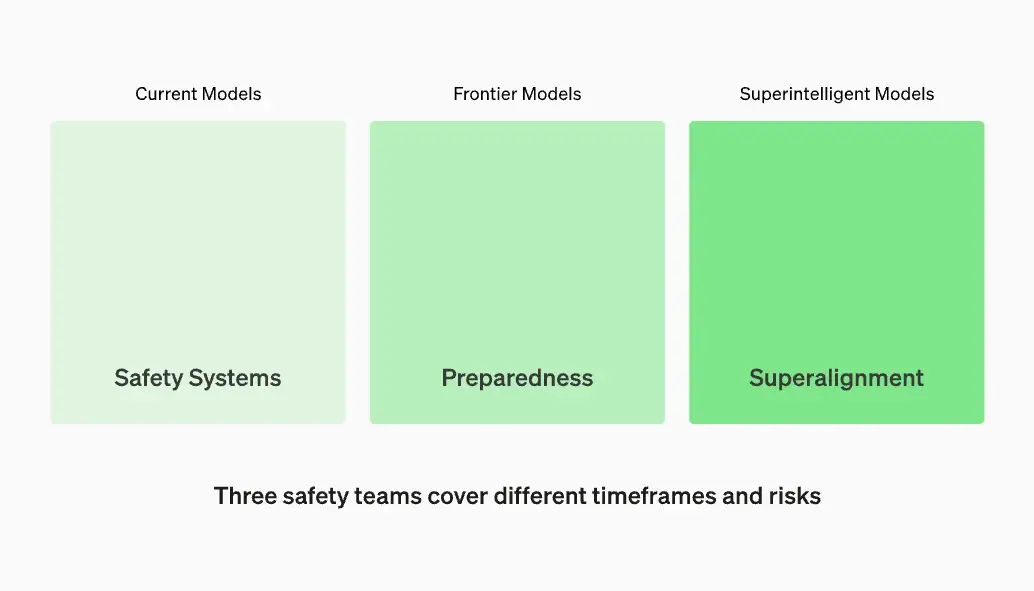

OpenAI's safety organization actually consists of three teams: Safety Systems, Preparedness, and Superalignment.

The division of labor among the three teams is as follows: the Safety Systems team handles the deployment risks of current models, working to reduce the abuse of existing models and products like ChatGPT; the Preparedness team handles the safety assessment of frontier models; and the Superalignment team works on aligning superintelligence, laying the foundation for the safety of superintelligent models in the more distant future.

The Safety Systems team is relatively mature and is divided into four subteams: Safety Engineering, Model Safety Research, Safety Reasoning Research, and Human-AI Interaction. It brings together a diverse group of experts in engineering, research, policy, human-AI collaboration, and product management. OpenAI has stated that this combination of talent has proven to be very effective, giving it access to a wide range of solutions, from pre-training improvements and model fine-tuning to inference-time monitoring and mitigation.

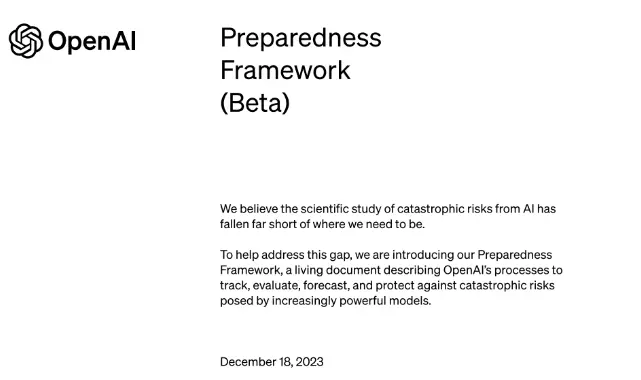

According to OpenAI, research on the risks of frontier artificial intelligence has not reached the level it needs to be, and addressing this is the Preparedness team's job. To bridge this gap and systematize its safety thinking, OpenAI released an initial version of its "Preparedness Framework" in December 2023, which describes its process for tracking, assessing, predicting, and mitigating the catastrophic risks posed by increasingly powerful models.

OpenAI also stated that it will establish a dedicated team to oversee technical work and an operational structure for safety decision-making. The Preparedness team will drive technical work to examine the limits of frontier model capabilities, conduct assessments, and produce comprehensive reports. OpenAI is creating a cross-functional Safety Advisory Group to review all reports and send them to the leadership and the board at the same time. The leadership makes the decisions, but the board has the power to overturn them.

The Superalignment team is a new team, established only on July 5, 2023. Its aim is to solve, by 2027, the scientific and technical problems of steering and controlling artificial intelligence systems that are much smarter than humans. OpenAI said that it would devote 20% of the company's computing resources to this work.

There is no clear, feasible solution for superalignment yet. OpenAI's research approach is to use aligned small models to supervise larger ones, then work toward aligning superintelligence by progressively scaling up model size and stress-testing the entire process.

The OpenAI Superalignment team was co-led by Ilya Sutskever and Jan Leike, but both have now resigned. According to media reports, the Superalignment team has fallen apart after their departure.

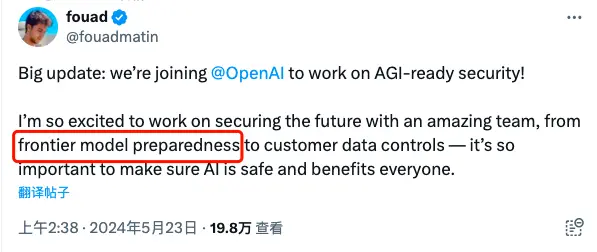

The Indent team did not join the Superalignment team. According to information released by the Indent team on X, they joined OpenAI's Preparedness team, where they are responsible for preparing for frontier models and for managing customer data.

This means that OpenAI is increasing its investment in cutting-edge models.

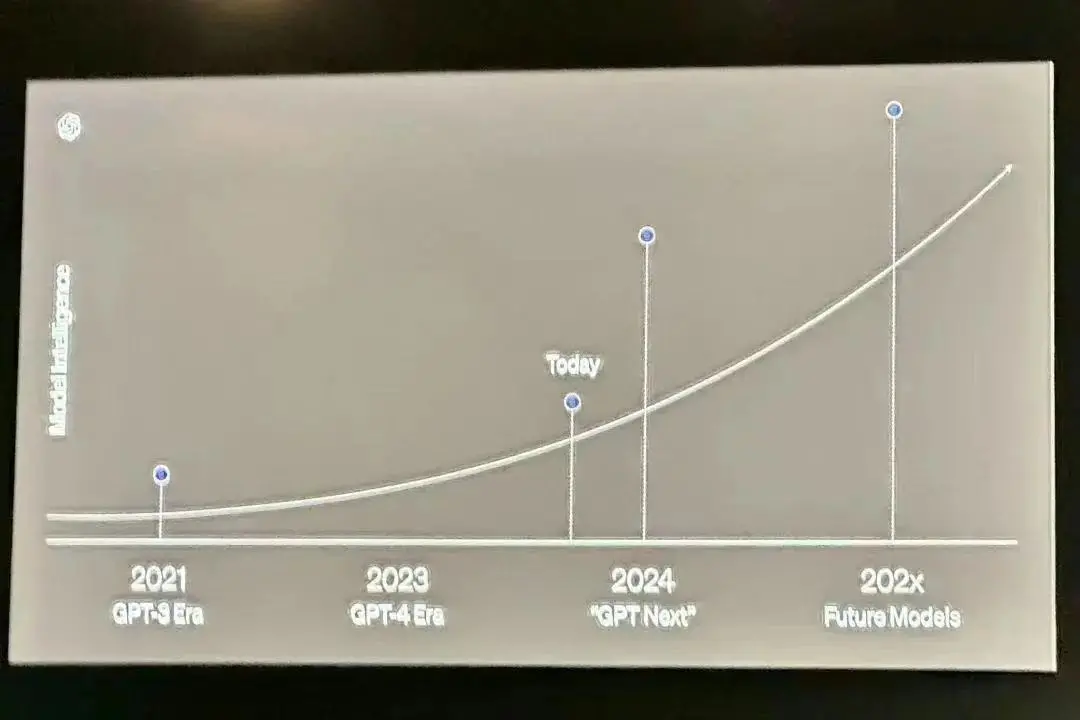

At the recent VivaTech summit in Paris, Romain Huet, the developer experience lead at OpenAI, revealed in a presentation slide that OpenAI's next new model "GPT Next" will be released later in 2024.

Image: a slide shared by OpenAI at VivaTech. Source: X

The focus of OpenAI's upcoming work is likely to be on the capabilities and security of this new model.

4. OpenAI's "Original Sin"

The dissolution of the Superalignment team, the addition of the Indent team, and a series of related events all lead to one conclusion: OpenAI is accelerating its pursuit of model deployment and commercialization.

This point has already been openly stated in the resignation statement of Jan Leike, the head of the Superalignment team.

Jan Leike believes that more bandwidth should be spent on preparing for the next generation of models, including security, monitoring, preparedness, adversarial robustness, (super)alignment, confidentiality, societal impact, and related topics. In the past few months, however, his team "has been struggling for computing resources," and even the initially promised 20% of computing resources could not be met.

He believes that OpenAI's safety culture and processes have taken a back seat to flashy products.

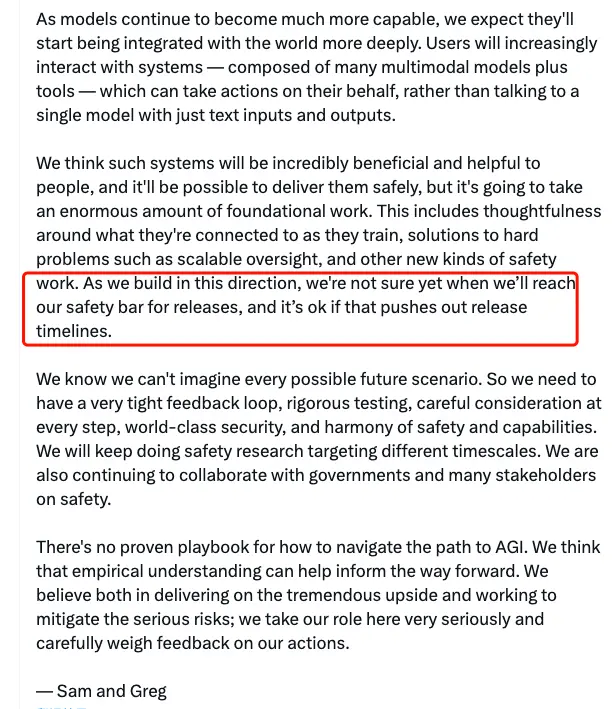

OpenAI President Greg Brockman replied at length. His response included the following passage:

We believe that such (increasingly powerful) systems will be very beneficial and helpful to people, and it is possible to deliver them safely, but this requires a lot of foundational work. This includes careful thought about what they are connected to and how they are trained, solutions to difficult problems such as scalable oversight, and other new kinds of safety work. As we build in this direction, we are not sure when we will meet our safety standards to release products, and it's okay if this leads to a delay in the release schedule.

As mentioned earlier, when the Superalignment team was established, OpenAI set 2027 as the target for steering and controlling superintelligent systems much smarter than humans. Greg Brockman's response essentially revises this timeline: "it's okay if this leads to a delay in the release schedule."

It is important to emphasize that OpenAI does value safety, but its emphasis apparently comes with a condition: all safety work must be grounded in deployable models and commercializable products. Clearly, even OpenAI has to make trade-offs when resources are limited.

And Sam Altman seems to lean towards being a purer businessman.

Is safety at odds with commercialization? For every other company in the world, this is not a contradiction. But OpenAI is the exception.

In March 2023, after the release of GPT-4, Elon Musk raised a soul-searching question: "I'm confused. I donated $100 million to a non-profit organization, how did it become a for-profit organization worth $30 billion?"

When the power struggle at OpenAI broke out, outside observers speculated that this tension was the spark of the conflict. When the investigation results were released on March 8 this year, OpenAI's official announcement not only confirmed Sam Altman's return but also said the company would make significant improvements to its governance structure, including "adopting a new set of corporate governance guidelines" and "strengthening OpenAI's conflict of interest policy."

However, by the time OpenAI's Superalignment team was dissolved, the new policies had still not appeared. This may be why many departing employees are disappointed with OpenAI.
