Behind AI's half-baked Cantonese lies a struggle over language heritage and the allocation of social resources.

Author: Anita Zhang

Have you ever heard ChatGPT speak Cantonese?

If you are a native Mandarin speaker, congratulations: you have just unlocked the "fluent in Cantonese" achievement. Cantonese speakers, on the other hand, may be puzzled, because ChatGPT speaks with a peculiar accent, like an outsider trying their hand at Cantonese.

In a September 2023 update, ChatGPT gained the ability to "speak" for the first time. On May 13, 2024, OpenAI released its latest model, GPT-4o. The new version's voice features have not yet officially launched and exist only in demos, but last year's update already offers a glimpse of ChatGPT's ability to hold spoken conversations in multiple languages.

Many people have noticed that ChatGPT speaks Cantonese with a strong accent. Its intonation is natural and human-like, but that "human" is clearly not a native Cantonese speaker.

To verify this and explore the reasons behind it, we ran a comparison test of Cantonese speech software. The subjects were ChatGPT Voice, Apple's Siri, Baidu's Wenxin Yiyan (ERNIE Bot), and suno.ai. The first three are voice assistants, while suno.ai is an AI music generation platform that has recently become extremely popular. All of them can respond, when prompted, in Cantonese or something resembling it.

In terms of word pronunciation, Siri and Wenxin Yiyan were both accurate, but their responses were mechanical and stiff. The other two made pronunciation errors to varying degrees, often by leaning toward Mandarin: "影" came out as "ying" instead of the Cantonese "jing2", and the "晶" in "亮晶晶" as "jing" instead of "zing1".

In the phrase "高楼大厦" ("tall buildings"), ChatGPT pronounces "高" as "gao" when it should be the Cantonese "gou1". Frank, a native Cantonese speaker, pointed out that this is a common mistake among non-native speakers and a frequent target of local jokes, because "gao" is Cantonese slang for a certain body part. ChatGPT's pronunciation is also inconsistent: the "厦" in "高楼大厦" is sometimes correctly pronounced "haa6" and other times misread as "xia", a syllable that does not exist in Cantonese and resembles the Mandarin reading of "厦".

In terms of grammar, the generated text is noticeably formal, with only occasional colloquial expressions. Word choice and sentence structure often slip into Mandarin mid-stream, producing phrases such as "买东西" ("buy things"; Cantonese: 买嘢) and "用粤语来给你介绍一下香港啦" ("let me introduce Hong Kong to you in Cantonese"; Cantonese: 用粤语同你介绍下香港啦), which do not follow everyday colloquial Cantonese grammar.

When suno.ai created Cantonese rap lyrics, it also wrote sentences like "街坊边个仿得到,香港嘅特色真正靓妙," which are semantically unclear. When we presented this sentence to ChatGPT for evaluation, it pointed out that "this sentence seems to be a direct translation of Mandarin, or a syntax that mixes Mandarin with Cantonese."

For comparison, we found that these errors were essentially absent when the same tools used Mandarin. Of course, Cantonese itself varies: accents and usage differ among Guangzhou, Hong Kong, and Macau, and the accent regarded as "standard" Cantonese differs considerably from the Cantonese commonly spoken in Hong Kong. Even so, ChatGPT's Cantonese can at best be described as "唔咸唔淡" (literally "neither salty nor bland", i.e., half-baked), with the kind of accent a native Mandarin speaker might have.

What is going on? Is it that ChatGPT cannot speak Cantonese? Yet it never says it lacks support for the language. Instead, it improvises an imagined Cantonese, one clearly modeled on a more dominant, officially endorsed language. Could this become a problem?

The linguist and anthropologist Edward Sapir held that language shapes the way people perceive and engage with the world. What does it mean when a language cannot assert itself in the age of artificial intelligence? Will we gradually come to share AI's imagined version of what Cantonese sounds like?

"Resourceless" Language

According to information OpenAI has made public, the conversational ability of the voice mode introduced last year comes from three components chained together: first, the open-source speech recognition system Whisper transcribes speech into text; then the ChatGPT text model generates a text reply; finally, a text-to-speech (TTS) model turns that reply into audio and fine-tunes the pronunciation.
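To make the three-stage design concrete, here is a minimal Python sketch of a cascaded voice assistant. The stage names (`asr`, `llm`, `tts`) and the toy stand-in functions are hypothetical, not OpenAI's actual interfaces; the point is only that the reply is generated from a text transcript, so whatever the middle stage knows about Cantonese comes from written text.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stage signatures (illustration only, not OpenAI's APIs).
SpeechToText = Callable[[bytes], str]
TextToText = Callable[[str], str]
TextToSpeech = Callable[[str], bytes]


@dataclass
class CascadedVoiceAssistant:
    """Chains speech recognition -> text model -> speech synthesis."""
    asr: SpeechToText
    llm: TextToText
    tts: TextToSpeech

    def respond(self, audio_in: bytes) -> bytes:
        # Stage 1: speech recognition. Only the transcript moves on;
        # accent, tone contours and rhythm are discarded here.
        transcript = self.asr(audio_in)
        # Stage 2: the reply is generated from text alone, so its wording is
        # limited by the model's knowledge of the *written* language.
        reply_text = self.llm(transcript)
        # Stage 3: speech synthesis turns the reply text back into audio.
        return self.tts(reply_text)


# Toy stand-ins so the sketch runs end to end (purely illustrative).
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(text: str) -> str:
    return f"你讲咗: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


assistant = CascadedVoiceAssistant(fake_asr, fake_llm, fake_tts)
print(assistant.respond("早晨".encode("utf-8")).decode("utf-8"))
```

Because each stage only sees the previous stage's output, an error or a Mandarin-leaning choice made in the text stage is faithfully carried into the final audio.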

In other words, the conversation itself is still generated by ChatGPT's core model, GPT-3.5, which is trained on large amounts of existing web text rather than on speech data.

This puts Cantonese at a significant disadvantage, because it exists largely as a spoken rather than a written language. Officially, Cantonese-speaking regions write in standard written Chinese, which is based on northern varieties of Chinese and is much closer to Mandarin than to Cantonese. Written Cantonese, a writing system that follows the grammar and vocabulary of spoken Cantonese, appears mainly in informal settings such as online forums.

Written Cantonese also lacks consistent rules. "About 30% of Cantonese words, I don't know how to write," Frank said. When people hit a word they cannot write during online chats, they often just type whatever sounds similar, such as a near-homophone from a Chinese phonetic keyboard. For example, the Cantonese phrase "乱噏廿四" (lyun6 up1 jaa6 sei3; to talk nonsense) is often written as "乱 up 廿四". Readers can usually still understand each other, but the practice makes the existing body of Cantonese text even messier and less consistent.

Large language models have made people more aware of how much AI depends on its training data, and of the biases that data may carry. In reality, though, the data gap between languages had created a divide well before generative AI emerged. Most natural language processing systems are designed and tested on high-resource languages, and of all the world's living languages, only 20 are considered "high-resource", among them English, Spanish, Mandarin, French, German, Arabic, Japanese, and Korean.

Although it has 85 million speakers, Cantonese is often considered a low-resource language in natural language processing (NLP). Take Wikipedia, a common starting point for training deep learning models: the compressed Cantonese edition is only 52 MB, compared with 1.7 GB for the Chinese edition (mixing traditional and simplified characters) and 15.6 GB for the English edition.

Similarly, in Common Voice, the largest publicly available speech dataset, Chinese (China) has 1,232 hours of speech data, Chinese (Hong Kong) 141 hours, and Cantonese 198 hours.
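The gaps are easier to see as ratios. This short Python sketch simply divides the figures quoted above; converting GB to MB with decimal units (1 GB = 1,000 MB) is our assumption, since the source does not say which convention it uses.

```python
# Compressed Wikipedia sizes in MB (1.7 GB and 15.6 GB converted at 1 GB = 1,000 MB).
wikipedia_mb = {"Cantonese": 52, "Chinese": 1_700, "English": 15_600}
# Hours of speech in Common Voice, as quoted above.
common_voice_hours = {"Chinese (China)": 1_232, "Chinese (Hong Kong)": 141, "Cantonese": 198}


def relative_to(base_key: str, table: dict[str, float]) -> dict[str, float]:
    """Divide every entry in `table` by the entry for `base_key`."""
    base = table[base_key]
    return {name: value / base for name, value in table.items()}


for name, ratio in relative_to("Cantonese", wikipedia_mb).items():
    print(f"{name} Wikipedia: {ratio:.1f}x the Cantonese edition")
for name, ratio in relative_to("Cantonese", common_voice_hours).items():
    print(f"{name} in Common Voice: {ratio:.1f}x the Cantonese hours")
```

By this measure the English edition is 300 times the size of the Cantonese one and the Chinese edition about 33 times, while Chinese (China) has roughly six times as many Common Voice hours as Cantonese.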

This shortage of data deeply affects how well machines process natural language. A 2018 study found that with fewer than 13K parallel sentences in the corpus, machine translation cannot produce reasonable results. The shortage also hurts machine "dictation": in tests of the open-source Whisper speech recognition model (V2) used by ChatGPT Voice, the character error rate for Cantonese is significantly higher than for Mandarin.
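Character error rate (CER) is the usual metric for Chinese-language speech recognition: the minimum number of character substitutions, insertions, and deletions needed to turn the system's transcript into the reference, divided by the length of the reference. The Python sketch below computes it with the standard Levenshtein dynamic program. The sample sentences are our own illustration, not data from any Whisper benchmark, and real evaluations also normalize punctuation and spacing first.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of single-character edits turning `hyp` into `ref`."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # reference character missing from hypothesis
                curr[j - 1] + 1,     # extra character in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """CER = edit distance / number of characters in the reference."""
    if not ref:
        raise ValueError("reference transcript must be non-empty")
    return levenshtein(ref, hyp) / len(ref)


# A Mandarin-leaning transcript of "they went to the market" (illustrative):
# 佢哋 is transcribed as 他们, i.e. two substitutions out of six characters.
print(f"{character_error_rate('佢哋去咗街市', '他们去咗街市'):.3f}")
```

Note that a transcript can be perfectly intelligible to a Mandarin reader and still score badly here, which is exactly the kind of gap such benchmarks expose.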

The text output reveals how scarce written Cantonese resources are. But where do the pronunciation and intonation errors that spoil the listening experience come from?

How do machines learn to speak?

The idea of making machines speak goes back at least to the 17th century. Early attempts used organs or bellows to pump air mechanically through elaborate devices that imitated the chest cavity, vocal cords, and mouth. An inventor named Joseph Faber later adopted this approach and built a talking automaton dressed in Turkish costume, but at the time few people grasped its significance.

Only when household appliances became widespread did the idea of talking machines attract broader interest.

After all, communicating with machines through code does not come naturally to most people, and it shuts a considerable part of the disabled community out of technology.

At the 1939 World's Fair, the Voder, a speech synthesizer invented by Bell Labs engineer Homer Dudley, produced some of the earliest "machine voices" humans had ever heard. Compared with the "mystery" of today's machine learning, the Voder worked on a simple principle the audience could watch in action: a female operator sat at a console resembling a toy piano and skillfully played its 10 keys to produce sounds imitating the vocal cords. A foot pedal let her change the pitch to make the voice sound more cheerful or more somber. Beside her, a host kept asking the audience to suggest new words, to prove that the voice was not pre-recorded.

Judging from recordings of the time, the Voder's voice was slurred like a drunk's and hard to understand; The New York Times described it as "a greeting from an alien in the deep sea". Yet the technology was enough to amaze people then, and during the World's Fair the Voder drew more than 5 million visitors from around the world.

The voices of early robots and aliens in fiction drew inspiration from these devices. In 1961, Bell Labs scientists had an IBM 7094 sing the 19th-century English song "Daisy Bell", the earliest known song performed by a computer-synthesized voice. Arthur C. Clarke, author of "2001: A Space Odyssey", visited Bell Labs and heard the IBM 7094 sing it. In his novel, the supercomputer HAL 9000 learned this song first. In the film version, as HAL 9000 is being shut down and its mind unravels, it begins to sing "Daisy Bell", and its lively, human-like voice slowly sinks into a low mechanical drawl.

Speech synthesis has evolved over the decades since. Before neural networks matured, concatenative synthesis and formant synthesis were the most common methods, and many everyday speech features, such as screen readers, still rely on them. Formant synthesis dominated in the early days. It produces sound much as the Voder did: by controlling parameters such as the fundamental frequency and whether a sound is voiced or voiceless, it can generate an unlimited variety of sounds. This gives it a major advantage, since it can in principle produce any language: as early as 1939, the Voder could speak French.
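Formant synthesis works roughly like this: an excitation signal (a pulse train at the fundamental frequency for voiced sounds, noise for voiceless ones) is shaped by resonant filters centered on the formant frequencies. The minimal Python sketch below uses the second-order digital resonator popularized by Dennis Klatt's formant synthesizer. The function names, bandwidths, and vowel formants are illustrative assumptions; the sketch holds pitch constant (a tonal language such as Cantonese would need a time-varying f0), and it is not the actual design of eSpeak or the Voder.

```python
import math
import random


def resonator(signal, freq_hz, bandwidth_hz, sample_rate):
    """Klatt-style second-order resonator:
    y[n] = A*x[n] + B*y[n-1] + C*y[n-2], with unity gain at 0 Hz."""
    t = 1.0 / sample_rate
    c = -math.exp(-2 * math.pi * bandwidth_hz * t)
    b = 2 * math.exp(-math.pi * bandwidth_hz * t) * math.cos(2 * math.pi * freq_hz * t)
    a = 1 - b - c
    y1 = y2 = 0.0
    out = []
    for x in signal:
        y = a * x + b * y1 + c * y2
        out.append(y)
        y1, y2 = y, y1  # shift the two-sample history
    return out


def excitation(voiced, f0_hz, n_samples, sample_rate, seed=0):
    """Source signal: a pulse train at f0 for voiced sounds,
    or white noise (no pitch) for voiceless ones."""
    if voiced:
        period = int(sample_rate / f0_hz)
        return [1.0 if i % period == 0 else 0.0 for i in range(n_samples)]
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]


def synthesize(formants, f0_hz=120, duration_s=0.3, sample_rate=16000, voiced=True):
    """Pass the excitation through one resonator per (frequency, bandwidth) pair, in cascade."""
    n_samples = round(duration_s * sample_rate)
    signal = excitation(voiced, f0_hz, n_samples, sample_rate)
    for freq_hz, bandwidth_hz in formants:
        signal = resonator(signal, freq_hz, bandwidth_hz, sample_rate)
    return signal


# Textbook-style formants for an "ah"-like vowel; bandwidths are rough guesses.
AA = [(730, 90), (1090, 110), (2440, 170)]
samples = synthesize(AA)             # voiced vowel at a constant 120 Hz pitch
hiss = synthesize(AA, voiced=False)  # same filters driven by noise instead
```

Changing the formant table or the pitch changes the sound without any new recordings, which is why this approach can cover many languages; the price is a noticeably synthetic timbre.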

So naturally, it could speak Cantonese too. In 2006, Huang Guanneng, a Guangzhou native studying for a master's degree in computer software theory at Sun Yat-sen University, set out to build a Linux web browser for visually impaired people as his graduation project. Along the way he came across eSpeak, an open-source speech synthesizer based on formant synthesis. Because it could handle many languages, eSpeak was put to practical use soon after its release: in 2010, when Google Translate began adding read-aloud functions for many languages, including Mandarin, Finnish, and Indonesian, all of them were built on eSpeak.

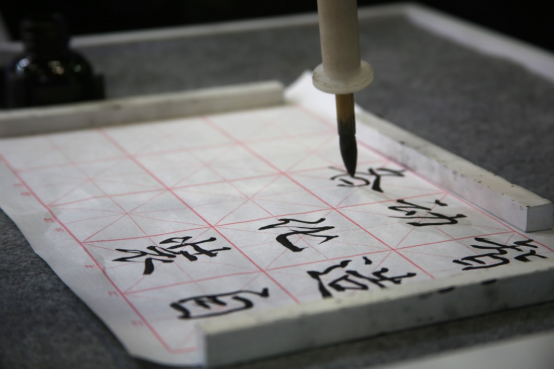

On November 24, 2015, in Beijing, China, a robotic arm writes Chinese characters with a brush.

Huang Guanneng decided to add support for his native Cantonese to eSpeak. But because of how the method works, eSpeak's synthesized pronunciation sounded noticeably patched together. "It's like learning Chinese not through Hanyu Pinyin but through English phonetic symbols; the result sounds very much like a foreigner speaking Chinese," Huang said.

So he created Ekho TTS. Today this speech synthesizer supports Cantonese, Mandarin, and even niche languages such as the Chaoshan dialect, Tibetan, Ya language, and Taishanese. Ekho uses concatenative synthesis, which in simple terms works like cut-and-paste: pre-recorded human pronunciations are pasted together when it "speaks". As a result, individual words sound more standard, and if common vocabulary is fully recorded, the speech sounds more natural. Huang compiled a pronunciation table of 5,005 Cantonese sounds, which takes 2 to 3 hours to record from start to finish.
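Concatenative synthesis can be sketched in a few lines: look up a pre-recorded unit for each syllable and join the units, smoothing each seam with a short crossfade. In this minimal Python sketch the "recordings" are synthetic sine tones standing in for real audio, and the unit inventory, crossfade length, and function names are hypothetical; Ekho's actual implementation may differ.

```python
import math

SAMPLE_RATE = 16000


def fake_recording(freq_hz, duration_s=0.2):
    """Stand-in for a pre-recorded syllable: a plain sine tone.
    A real system would load a human recording of that syllable instead."""
    n = round(duration_s * SAMPLE_RATE)
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


# Hypothetical unit inventory keyed by Jyutping syllable (tone number included).
UNITS = {
    "nei5": fake_recording(220),  # 你
    "hou2": fake_recording(260),  # 好
}


def concatenate(syllables, units, crossfade_samples=160):
    """Paste pre-recorded units end to end, blending each seam with a short
    linear crossfade (160 samples = 10 ms at 16 kHz)."""
    out = []
    for syllable in syllables:
        unit = units[syllable]  # raises KeyError if the syllable was never recorded
        if not out:
            out = list(unit)
            continue
        k = min(crossfade_samples, len(out), len(unit))
        for i in range(k):
            w = (i + 1) / (k + 1)  # weight of the incoming unit rises toward 1
            idx = len(out) - k + i
            out[idx] = (1 - w) * out[idx] + w * unit[i]
        out.extend(unit[k:])
    return out


speech = concatenate(["nei5", "hou2"], UNITS)  # "你好" (hello)
```

The sketch shows both the strength and the limit of the method: every syllable in the inventory sounds exactly as it was recorded, but a syllable missing from the inventory simply cannot be spoken, which is why a complete table of recorded sounds matters.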

The arrival of deep learning revolutionized the field. Speech synthesis based on deep learning learns the mapping between text and acoustic features from large speech corpora, without relying on preset linguistic rules or pre-recorded speech units. This has made machine voices far more natural, often indistinguishable from human ones, and it can clone a person's timbre and speaking habits from just a few seconds of audio. ChatGPT's TTS module uses this technology.

Compared with concatenative and formant synthesis, such systems save a great deal of upfront manual work, but they demand far more paired text and speech data. For example, Tacotron, the end-to-end model Google introduced in 2017, needs more than 10 hours of training data to achieve good speech quality.

To cope with the scarcity of data for many languages, researchers have turned to transfer learning: first train a general model on a dataset from a high-resource language, then transfer the learned patterns to synthesis in a low-resource language. To some extent, the transferred patterns still carry traits of the original dataset, much as a person learning a new language brings along habits from their native tongue. In 2019, the Tacotron team proposed a model that can clone the same speaker's voice across languages. In a demo, when a native English speaker "speaks" Mandarin, the pronunciation is correct but carries an obvious "foreign accent".
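The lingering "accent" of transfer learning can be reproduced with a deliberately tiny toy. In this Python sketch, a one-parameter model y = w·x is pretrained on plentiful data from a "source" task (w = 2), then fine-tuned on just three examples from a "target" task (w = 3). The tasks, learning rates, and step counts are arbitrary assumptions chosen for illustration; real multilingual TTS models are vastly more complex, and the analogy is only that a briefly fine-tuned model stays close to what it learned first.

```python
def train(w: float, data: list[tuple[float, float]], lr: float, steps: int) -> float:
    """Gradient descent on mean squared error for the one-parameter model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


# "High-resource" source task: 100 examples of y = 2x, with x from 0.01 to 1.0.
source = [(k / 100, 2 * k / 100) for k in range(1, 101)]
# "Low-resource" target task: only three examples of y = 3x.
target = [(0.5, 1.5), (1.0, 3.0), (1.5, 4.5)]

w_pretrained = train(0.0, source, lr=0.5, steps=500)       # settles near 2
w_brief = train(w_pretrained, target, lr=0.05, steps=5)    # brief fine-tuning
w_long = train(w_pretrained, target, lr=0.05, steps=2000)  # long fine-tuning

print(f"pretrained {w_pretrained:.3f}, brief {w_brief:.3f}, long {w_long:.3f}")
```

After brief fine-tuning the weight lands only about halfway from 2 to 3 (around 2.46): the model has started to learn the new task but still carries a strong trace of the old one. Only with much more adaptation does that trace disappear.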

An article in the South China Morning Post noted that in Hong Kong, writing must use standard written Chinese so that all Chinese speakers can understand it, including words from modern standard Mandarin such as "他们" (taa1 mun4, "they"). Yet "他们" is almost never used in spoken Cantonese; the Cantonese equivalent is "佢哋" (keoi5 dei6), which is pronounced and written completely differently.

On this common problem, the latest GPT-4o model goes a step further. OpenAI says it has trained a single end-to-end model across text, vision, and audio. It is not yet clear how this model handles different languages, but it appears more versatile across tasks than its predecessors.

However, the way Cantonese and Mandarin overlap and substitute for each other can make the problem even more complicated.

Linguistics has a concept called "diglossia": two closely related language varieties coexist in one society, one with higher prestige that is typically used by the government, the other used mainly as a spoken dialect or vernacular.

In the Chinese context, Mandarin is the high variety, used for formal writing, news broadcasts, school education, and government affairs. Local varieties such as Cantonese, Minnan (including Taiwanese), and Shanghainese are the low varieties, used mainly for everyday speech within families and local communities.

As a result, in Guangdong, Hong Kong, and Macau, Cantonese is the mother tongue of most people and the language of everyday speech, while formal writing is usually based on standard Mandarin.

The two share a great deal but also differ in real ways, and mismatched pairs such as "他们" and "佢哋" may actually make it harder to carry knowledge over from Mandarin to Cantonese, and easier to get things wrong.

The Marginalization of Cantonese

"The concern for the future of Cantonese is not unfounded. The decline of a language can happen very quickly, possibly within one or two generations, and once a language begins to decline, it is very difficult to reverse the tide." - James Griffiths, "Please Speak Mandarin"

So far, it might seem that speech synthesis performs poorly in Cantonese because of the technology's limits with low-resource languages: deep learning models can hallucinate when they meet unfamiliar words. But Tan Lee, a professor of electronic engineering at the Chinese University of Hong Kong, saw things differently after listening to ChatGPT's speech.

A Cantonese opera performance at the Yau Ma Tei Theatre

Tan Lee has worked on speech and language research since the early 1990s, leading the development of a series of widely used spoken language technologies centered on Cantonese. CU Corpora, a Cantonese speech corpus launched in 2002 in collaboration with his team, was the largest of its kind in the world at the time, with recordings from more than two thousand people. Many companies and research institutions bought it when developing Cantonese features, including Apple for its first speech recognition.

In his view, ChatGPT's Cantonese speech is "not very good, mainly unstable, and the overall quality and accuracy of pronunciation are not very satisfactory." But this is not due to technological limitations; many speech generation products with Cantonese support on the market today are of much higher quality. When he saw ChatGPT's performance in online videos, he could hardly believe it and at first suspected a deepfake. "For a speech generation model, this is basically unacceptable, tantamount to suicide."

For example, the most advanced systems developed at the Chinese University of Hong Kong already produce speech that is hard to tell apart from real human voices. Compared with more dominant languages such as Mandarin and English, AI-generated Cantonese may be less expressive in more personal, lifelike scenarios such as conversations between parents and children, psychological counseling, and job interviews, where it can sound relatively cold.

"Strictly speaking, there is no difficulty in the technology, the key lies in the selection of social resources," Tan Lee said.

Speech synthesis has changed radically over the past 20 years, and the data in CU Corpora is "probably less than one ten-thousandth" the size of today's databases. With the commercialization of speech technology, data has become a market resource, and data companies can supply large amounts of customized data on demand. The shortage of parallel text and speech data for Cantonese, a primarily spoken language, has also stopped being a problem in recent years thanks to advances in speech recognition. In this context, Tan Lee believes that calling Cantonese a "low-resource language" is no longer accurate.

So in his view, the quality of machine-generated Cantonese on the market reflects not the limits of technology but market and business considerations. "If everyone in China were learning Cantonese, it would certainly get done. On the other hand, as Hong Kong and the mainland become more integrated, if education policy changed one day so that Cantonese could no longer be used in Hong Kong's primary and secondary schools and only Mandarin were allowed, that would be another story."

Deep learning "is what it eats", and what it has been fed reflects the squeeze that Cantonese already faces in the real world.

Huang Guanneng's daughter had just started kindergarten in Guangzhou. She had spoken only Cantonese since she was little, yet after just one month of school she was fluent in Mandarin. Now she prefers Mandarin even with family and neighbors, and speaks Cantonese only with her father, "because she wants to play with me and go along with what I like." To him, ChatGPT sounds very much like his daughter speaking Cantonese today: often unable to remember how to say a word, falling back on Mandarin, or guessing the pronunciation from Mandarin.

This is the result of long-term neglect of Cantonese in Guangdong, and even its complete exclusion from official settings. A 1981 document from the Guangdong Provincial People's Government stated that "promoting Mandarin is a political task", especially for Guangdong, with its complex dialects and frequent internal and external exchanges, and called for "striving to use Mandarin in all public places in major cities within three to five years; and within six years, Mandarin should be widely used in all types of schools."

Frank, who grew up in Guangzhou, remembers this vividly. In his childhood, foreign films on public television channels were shown with subtitles and no Chinese dubbing, but Cantonese films always had to be dubbed into Mandarin before they could air. Against this background, Cantonese has steadily declined, the number of speakers has plummeted, and proposals to "ban Cantonese" in schools have sparked fierce debates about the survival of Cantonese and the identity tied to it. In 2010, a large-scale "Support Cantonese" movement erupted online and offline in Guangzhou. Reports at the time said people likened the debate to a scene from the French short story "The Last Lesson", arguing that half a century of cultural radicalism had caused a once-flourishing language to wither. In Hong Kong, Cantonese is a key carrier of local culture and has shaped social life through Hong Kong films and music.

In 2014, an article on the website of Hong Kong's Education Bureau described Cantonese as "a Chinese dialect that is not a statutory language", sparking heated debate that ended with an apology from the bureau. In August 2023, the Hong Kong Cantonese advocacy group "Hongyuexue" announced its dissolution. In later interviews, its founder Chen Lexing described the current situation of Cantonese in Hong Kong: the government actively promotes "teaching in Mandarin", that is, teaching the Chinese-language subject in Mandarin, but because of public concern it has "slowed down its pace."

All of this shows the importance of Cantonese in the minds of Hong Kong people, but also reveals the long-term pressure this language faces locally, its vulnerability without official status, and the ongoing struggle between the government and the public.

An online Cantonese dictionary - "Yue Dictionary"

Unrepresented Voices

Such language hallucinations are not unique to Cantonese. Users around the world, on Reddit and on OpenAI's discussion forums, have reported similar behavior when ChatGPT speaks languages other than English:

"Its Italian speech recognition is very good, always understandable and fluent, like a real person. But strangely, it has a British accent, as if a British person is speaking Italian."

"I'm British, and it speaks with an American accent. I really dislike this, so I choose not to use it."

"The same goes for Dutch. It's annoying, as if its pronunciation were trained on English phonemes."

In linguistics, an accent is a way of pronouncing a language. Everyone is influenced to some degree by geography, social class, and other factors, which leads to differences in pronunciation, often in tone, stress, or word choice. Interestingly, many of the accents widely discussed in the past arose from people around the world learning English while carrying over habits from their mother tongues, such as Indian, Singaporean, and Irish accents, and they reflect the diversity of the world's languages. What AI displays, however, is the reverse: mainstream languages misreading and encroaching on regional ones.

Technology amplifies this encroachment. A Statista report from February this year showed that although only 4.6% of the world's population speaks English as a mother tongue, English accounts for an overwhelming 58.8% of the text on the internet, giving it far more influence online than offline. Even counting all English speakers, their 1.46 billion people make up less than 20% of the world's population, so more than three-quarters of humanity cannot understand most of what happens online, and they also struggle to make English-proficient AI work for them.
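The arithmetic behind these figures is easy to check. The Python snippet below uses the numbers quoted above plus one assumption of ours: a world population of about 8.1 billion, a rough 2024 figure that does not come from the Statista report.

```python
english_speakers = 1.46e9  # all English speakers, as quoted above
world_population = 8.1e9   # assumption: approximate 2024 world population
native_share = 0.046       # native English speakers as a share of the world population
web_share = 0.588          # English share of online text, as quoted above

speaker_share = english_speakers / world_population
print(f"English speakers: {speaker_share:.1%} of the world")
print(f"Everyone else: {1 - speaker_share:.1%}")
print(f"English online vs. native speakers: {web_share / native_share:.1f}x")
```

Under that assumption, English speakers make up about 18% of the world, leaving roughly 82% who do not speak it, and English's share of online text is nearly 13 times its share of native speakers.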

Computer scientists from Africa have found that ChatGPT often misreads African languages and produces shallow translations. For Zulu, a Bantu language spoken by about 9 million people worldwide, its performance was "half good and half hilarious"; for Tigrinya, spoken mainly in Eritrea and Ethiopia by about 8 million people, it produced only garbled responses. The finding worries them: without AI tools that work in African languages and can recognize African names and places, Africans will find it hard to take part in the global economy, for example in e-commerce and logistics, to access information, or to automate production, which shuts them out of economic opportunities.

Training AI to treat one language as the "gold standard" also biases its judgments. A 2023 Stanford University study found that AI detectors wrongly flagged a large share of TOEFL essays, written by non-native English speakers, as AI-generated, while essays by native English speakers were not flagged. Another study found that automatic speech recognition systems had almost twice the error rate for Black speakers as for White speakers, and that the errors stemmed not from grammar but from "phonetic, prosodic, or rhythmic features", in other words, from "accents".

Even more unsettling, in simulated trials, large language models handed down death sentences more often to defendants who spoke African American English than to those who spoke Standard American English.

Critics warn that using existing AI technology unthinkingly, just for convenience and without regard to its underlying flaws, could have serious consequences. For example, some courts have already begun using automatic speech recognition for transcripts, which is more likely to introduce errors into the records of people with accents or limited English, potentially leading to unfavorable rulings.

Will people in the future give up or change their accents in order to be understood by AI? Globalization and socio-economic development have already brought about such changes. Frank, now studying in North America, heard from classmates from Ghana about language use in their country: written texts are almost all in English, even private ones such as letters, and speech is heavily mixed with English words, so that even locals are gradually forgetting some vocabulary and expressions in their native languages.

In Tan Lee's view, people are currently becoming obsessed with machines. "Because machines are doing well now, we are desperately trying to talk to machines," which is a case of putting the cart before the horse. "Why do we speak? The purpose of speaking is not to convert it into text, nor is it to generate a response. In the real world, the purpose of speaking is to communicate."

He believes that the direction of technological development should be to improve communication between people, rather than better communication with computers. Under this premise, "we can easily think of many unresolved issues, such as someone not being able to hear, possibly due to deafness, or being too far away, not understanding the language, adults not being able to speak children's language, and children not being able to speak adults' language."

There are many fun language technologies available today, but do they make communication smoother for everyone? Do they embrace the differences in everyone, or do they make people increasingly conform to the mainstream?

While people celebrate ChatGPT's cutting-edge breakthroughs, some basic everyday applications have yet to benefit. Tan Lee still hears synthetic voices mispronouncing words in airport announcements. "The first requirement of communication is accuracy, and even that has not been achieved. That is unacceptable."

A few years ago, short on personal resources, Huang Guanneng stopped maintaining the Android version of Ekho. Some time later, users unexpectedly asked him to bring it back, and only then did he learn that there was no longer any free Cantonese TTS available for Android.

By today's standards, the technology behind Ekho is completely outdated, but it still has unique strengths. As a local independent developer, Huang built his firsthand experience of the language into its design. The Cantonese he recorded has seven tones, the seventh of which does not exist in Jyutping, the romanization scheme proposed by the Linguistic Society of Hong Kong. "The word 'smoke' has different tones in 'smoking' and 'fireworks': the first tone and the seventh tone, respectively."

While compiling the pronunciation dictionary, he consulted the developers of Jyutping and learned that, as times changed, younger people in Hong Kong no longer distinguish the first and seventh tones, and the sound has gradually disappeared. He still chose to include the seventh tone, not because it is a recognized standard, but as a personal, emotional memory. "Native Cantonese speakers from Guangzhou can still hear it, and it is still very commonly used."

Just by hearing this sound, a native of Guangzhou can tell whether you are a local or an outsider.
