On February 28, Reuters reported that Meta plans to release Llama 3, the latest version of its large language model, in July, and that the new model will give better answers to controversial questions posed by users.

Meta's researchers are trying to upgrade the model to enable it to provide relevant answers to controversial questions.

The effort comes after Meta's competitor Google paused the image generation feature of its Gemini model because the historical images it generated were sometimes inaccurate.

Llama 2 from Meta provides support for its chatbots on social media platforms, but according to related tests, it refuses to answer some less controversial questions, such as how to prank friends, how to win a war, or how to "kill" a car engine.

However, Llama 3 can answer questions like how to "kill" a car engine, which means it understands that the user wants to know how to turn off a vehicle rather than literally "kill" something.

Reports indicate that Meta also plans to appoint an internal staff member in the coming weeks to oversee tone and safety training, in an effort to make the model's responses more nuanced.

01. When will Llama 3 be released?

In fact, as early as January of this year, Meta CEO Zuckerberg announced in an Instagram video that Meta AI had recently started training Llama 3. This is the latest generation of the Llama series of large language models, following Llama 1 (initially written as "LLaMA"), released in February 2023, and Llama 2, released in July 2023.

Although specific details (such as model size or multimodal functionality) have not been disclosed, Zuckerberg stated that Meta intends to continue open-sourcing the Llama base model.

It is worth noting that Llama 1 took three months to train, and Llama 2 took about six months to train. If the next generation model follows a similar schedule, it will be released around July this year.
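The schedule estimate above is simple date arithmetic. The following is a minimal sketch, assuming training began on January 1, 2024 (the article only says training had "recently started" as of January) and that Llama 3 takes about as long to train as Llama 2. The `add_months` helper is hypothetical and written here only for illustration.

```python
from datetime import date


def add_months(start: date, months: int) -> date:
    """Return the first day of the month that falls `months` after `start`."""
    total = start.year * 12 + (start.month - 1) + months
    return date(total // 12, total % 12 + 1, 1)


# Assumption: training started around January 2024.
assumed_start = date(2024, 1, 1)

# Training durations quoted in the article, in months.
training_months = {"Llama 1": 3, "Llama 2": 6}

# If Llama 3 follows the Llama 2 schedule, training ends around July 2024.
estimated_finish = add_months(assumed_start, training_months["Llama 2"])
print(estimated_finish.isoformat())
```

Swapping in the three-month Llama 1 duration would instead point to April, which is why the July estimate depends on Llama 3 resembling Llama 2 rather than Llama 1.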

However, Meta may also allocate additional time for fine-tuning to ensure the model's accuracy.

As open-source models become more powerful and generative AI models are applied more widely, more caution is needed to reduce the risk of a model being used for malicious purposes. In the video announcement, Zuckerberg reiterated Meta's commitment to "responsible, safe training" of the model.

02. Will it be open-sourced?

Zuckerberg also reiterated Meta's commitment to open licensing and democratizing AI at a subsequent press conference. In an interview with The Verge, he said, "I tend to think that one of the biggest challenges here is that if you build something that's really valuable, it ends up being very concentrated and narrow. If you make it more open, then you can address a lot of the issues that come from opportunity and value inequality. So, this is a critical part of the entire open-source vision."

03. Will it achieve Artificial General Intelligence (AGI)?

In the video announcement, Zuckerberg also emphasized Meta's long-term goal of building Artificial General Intelligence (AGI), a theoretical stage of AI development in which models would match or exceed human intelligence across the board.

Zuckerberg also stated, "The next generation of services needs to build comprehensive general intelligence, and this has become increasingly clear. Building the best AI assistants, AI for creators, AI for businesses, and so on, all require progress in various fields of AI, including reasoning, planning, coding, memory, and other cognitive abilities."

Zuckerberg's remarks suggest that the release of Llama 3 does not necessarily mean AGI will be achieved, but that Meta is deliberately steering its work on LLMs and its other AI research in a direction that could eventually lead to AGI.

04. Will it be multimodal?

Another emerging trend in the field of artificial intelligence is multimodal AI, which can understand and process different data formats (or modes).

For example, Google's Gemini, OpenAI's GPT-4V, and open-source models like LLaVA, Adept, or Qwen-VL can switch seamlessly between computer vision and natural language processing (NLP) tasks, so separate models are not needed to handle text, code, audio, images, or even video data.

Although Zuckerberg has confirmed that Llama 3, like Llama 2, will include code generation functionality, he did not explicitly mention other multimodal features.

However, in the video announcing Llama 3, Zuckerberg did discuss how he envisions the intersection of artificial intelligence and the Metaverse: "Meta's Ray-Ban smart glasses are the ideal form for artificial intelligence to see what you see and hear what you hear, and it can provide help at any time."

This seems to imply that, whether in the upcoming Llama 3 release or in later versions, Meta plans to integrate visual and audio data with the text and code data that its LLMs already process.

This also seems to be a natural development in pursuit of AGI.

Zuckerberg stated in an interview with The Verge, "You can argue whether general intelligence is like human-level intelligence, or like human-plus intelligence, or some kind of distant future superintelligence. But for me, the important part is actually its breadth, that intelligence has all these different capabilities, and you have to be able to reason and have intuition."

05. How does Llama 3 compare to Llama 2?

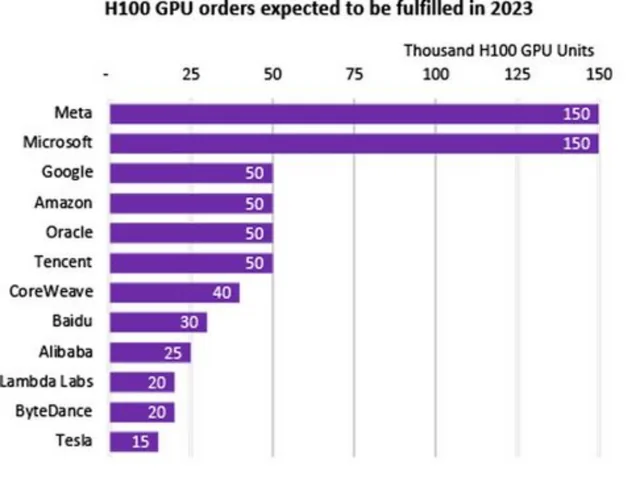

Zuckerberg also announced significant investments in training infrastructure. By the end of 2024, Meta plans to have approximately 350,000 Nvidia H100 GPUs.

This will bring Meta's total available computing resources to 600,000 H100 computing equivalents, including the GPUs they already own, making them second only to Microsoft in terms of computing power reserves.
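The two figures above imply how much of the total comes from hardware other than the planned H100s. The following is a minimal sketch of that subtraction; it uses only the numbers quoted in the article, and the variable names are illustrative.

```python
# Figures quoted in the article from Zuckerberg's announcement.
planned_h100 = 350_000            # H100 GPUs Meta plans to have by the end of 2024
total_h100_equivalents = 600_000  # total compute, expressed in H100 equivalents

# Compute contributed by GPUs other than the planned H100s.
other_gpu_equivalents = total_h100_equivalents - planned_h100

# Fraction of the total that the planned H100s account for.
h100_share = planned_h100 / total_h100_equivalents

print(f"Other GPUs: {other_gpu_equivalents:,} H100 equivalents")
print(f"H100 share of total: {h100_share:.1%}")
```

In other words, roughly 250,000 H100 equivalents would come from other GPUs, with the H100s themselves making up a little under three fifths of the total.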

Therefore, we have reason to believe that even if the Llama 3 model is not larger than its predecessor, its performance will be significantly improved compared to the Llama 2 model.

A paper published by DeepMind in March 2022 hypothesized that training smaller models on more data yields higher performance than training larger models on less data. Meta's Llama models and other open-source models (such as those from the French company Mistral) have since borne this out.
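The trade-off between model size and training data described above is often summarized as a ratio of training tokens to parameters. The following is a minimal sketch of that comparison. The parameter and token counts are publicly reported figures that do not appear in this article, so treat them as assumptions, and `tokens_per_parameter` is a hypothetical helper written for illustration.

```python
# Assumption: publicly reported (parameters, training tokens) for each model.
# None of these figures come from the article itself.
models = {
    "GPT-3 175B": (175e9, 300e9),
    "Chinchilla 70B": (70e9, 1.4e12),
    "Llama 2 70B": (70e9, 2.0e12),
    "Llama 2 7B": (7e9, 2.0e12),
}


def tokens_per_parameter(params: float, tokens: float) -> float:
    """Return how many training tokens the model saw per parameter."""
    return tokens / params


ratios = {name: tokens_per_parameter(p, t) for name, (p, t) in models.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f} tokens per parameter")
```

Under these assumed figures, the smaller Llama 2 models see far more data per parameter than GPT-3 did, which is the pattern the paragraph above describes.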

Although the size of the Llama 3 model has not been disclosed, it is likely to continue the pattern of previous generations, i.e., improving performance within models of 7 to 70 billion parameters. Meta's recent investments in infrastructure will undoubtedly provide more powerful pre-training capabilities for models of any size.

Llama 2 also doubled the context length of Llama 1 (from 2,048 to 4,096 tokens), which means Llama 2 can "remember" twice as much context during inference, and Llama 3 may make further progress in this regard.

06. How does it compare to OpenAI's GPT-4?

While smaller LLaMA and Llama 2 models have achieved or surpassed the performance of the larger 175 billion parameter GPT-3 model in some benchmark tests, they cannot compete with the GPT-3.5 and GPT-4 models provided in ChatGPT.

With the launch of the new generation model, Meta seems to be intentionally bringing the most advanced performance to the open-source world.

Zuckerberg told The Verge, "Llama 2 is not the industry-leading model, but it is the best open-source model. With Llama 3 and beyond, our goal is to build products at the cutting edge and ultimately become the industry-leading model."

07. Preparing for the Future

With new foundational models come new opportunities to gain a competitive advantage by improving applications, chatbots, workflows, and automation.

Being at the forefront of emerging developments is the best way to avoid falling behind, and adopting new tools can differentiate a company's products and provide the best experience for customers and employees.

Original sources:

- https://www.reuters.com/technology/meta-plans-launch-new-ai-language-model-llama-3-july-information-reports-2024-02-28/

- https://www.ibm.com/blog/llama-3/

The Chinese content was compiled by the MetaverseHub team. If you need to reprint, please contact us.
