Pick an AI tool that suits you best.

Author: Mù Mù

The AI video tool race is heating up, and the pace of upgrades can be overwhelming. Gen-2, Pika 1.0, Stable Video Diffusion, and Magic Animate in particular are becoming the video generation products users reach for most often, each backed by a powerful large AI model.

Although today's large video models still cannot turn a text description of a story into a finished movie, they can already create vivid videos from a series of prompts, and they have developed strong multimodal capabilities such as image-to-video.

"Metaverse Daily" tested four major AI video tools to help you get started quickly. One reminder: it is best to use English prompts with all of these tools, as the results are better than with Chinese prompts. We also hope that domestic AI video generation tools can catch up quickly and deliver good products suited to Chinese users.

Runway Gen-2

Gen-2 is the first publicly available text-to-video generation tool developed by Runway Research. Its features include text/image-to-video, video stylization, image expansion, one-click background removal, erasing specific elements from a video, and training custom AI models, which makes it arguably the most full-featured AI video generation and editing tool currently available.

The text-to-video function of Gen-2 is greatly improved over Gen-1. For the prompt "raccoon play snow ball fight in sunny snow Christmas playground," both the image quality and the composition of the Gen-2 result are excellent. However, keywords can go missing: the "Christmas" and "snowball fight" elements do not appear in the footage.

Just a few days ago, Runway launched a new feature called "Motion Brush," which turns a static image into dynamic content simply by brushing over an area of the image. Motion Brush is easy to use: after selecting an image, paint the area you want to animate, then set the approximate direction of movement, and the static image moves as specified.

Motion Brush currently has some shortcomings, however. It is suited to slow-moving scenes and cannot generate fast motion such as speeding vehicles. In addition, areas outside the brushed region remain almost static, and the motion trajectories of multiple objects cannot be fine-tuned individually.

Currently, a free Runway account can only generate videos of up to 4 seconds, at a cost of 5 credits per second, with a maximum of 31 videos, and the watermark cannot be removed. For higher resolution, watermark-free, and longer videos, you need to upgrade to a paid account.
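The per-second charge quoted above makes the cost of a clip easy to work out. The following is a minimal sketch of that arithmetic, not an official Runway calculator: the function names and the `balance` figure in the usage note are hypothetical, and only the 5-per-second rate and the 4-second free-tier limit come from the text.

```python
# Figures taken from the article; everything else is illustrative.
CREDITS_PER_SECOND = 5       # Gen-2 rate quoted for free accounts
MAX_FREE_CLIP_SECONDS = 4    # longest clip a free account can generate


def clip_cost(seconds: int, rate: int = CREDITS_PER_SECOND) -> int:
    """Return the cost of one clip of the given length."""
    if seconds <= 0:
        raise ValueError("clip length must be a positive number of seconds")
    return seconds * rate


def clips_affordable(balance: int, seconds: int = MAX_FREE_CLIP_SECONDS) -> int:
    """Return how many whole clips of this length a balance covers.

    `balance` is a hypothetical account balance supplied by the caller;
    the article does not state the free allotment.
    """
    if balance < 0:
        raise ValueError("balance cannot be negative")
    return balance // clip_cost(seconds)
```

For example, `clip_cost(4)` gives 20 for a maximum-length free clip, and `clips_affordable(100)` gives 5 for an assumed balance of 100.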

In addition, if you want to learn more about AI video, try Runway TV, Runway's video channel, which plays AI-made videos on a loop 24 hours a day and may offer some creative inspiration.

Website: https://app.runwayml.com/video-tools/teams/wuxiaohui557/ai-tools/gen-2

Pika 1.0

Pika 1.0 is the first official product released by Pika Labs, an AI startup founded by a Chinese team. Pika 1.0 can generate 3D animation, cartoon, and cinematic video, and it also offers style transformation, canvas expansion, video editing, and other heavyweight capabilities. It is very good at anime-style imagery and can generate short videos with a cinematic look.

The most popular tool among netizens is the "AI Magic Wand," which is the local modification feature. Just a few months ago, this was a capability the AI image generation field had only recently acquired. Now local modification can change local features of any background or subject in a video, and it takes only three steps: upload a video; select the area to modify in Pika's console; enter a prompt telling Pika what to replace it with.

In addition to local modification, Pika 1.0 brings the "image expansion" function of the text-to-image tool Midjourney to video for the first time. Unlike the "AI expansion" that has been overplayed on Douyin, Pika 1.0's video expansion is quite reliable, producing natural and logically consistent images.

Currently, Pika 1.0 is free to try, but you must apply for trial access. If you are still on the waitlist, you can log in to the official website and choose Discord. As with Midjourney, users create in the cloud through Discord and can try two main functions: text-to-video and image-to-video.

After entering Pika 1.0's Discord server, click any channel under Generate, type "/", select "Create," and enter your prompt in the prompt text box that pops up.

Website: https://pika.art/waitlist

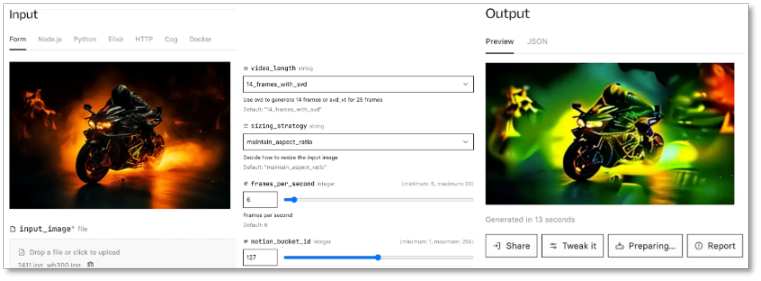

Stable Video Diffusion

On November 22, Stability AI released an open-source project for AI-generated video: Stable Video Diffusion (SVD). According to Stability AI's official blog, the new SVD supports text-to-video and image-to-video generation, as well as transforming an object from a single view into multiple views, that is, 3D synthesis. The results are on par with Runway Gen-2 and Pika 1.0.

There are currently two ways to use it online: the official trial demo published on Replicate, and the newly launched online website. Both are free.

We tested the first one because it supports parameter adjustment and is relatively convenient to operate: upload an image, then adjust the number of frames, aspect ratio, overall motion, and so on. The downside is that the results are fairly random, so it takes repeated adjustment to achieve the desired effect.
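Because SVD is open source, the same kinds of settings can also be tried locally. The sketch below is not the Replicate demo tested above; it swaps in the Hugging Face `diffusers` library's `StableVideoDiffusionPipeline` with the published `stabilityai/stable-video-diffusion-img2vid-xt` image-to-video weights. The helper names, default values, and file paths are assumptions for illustration, and running the generation step requires a CUDA GPU with `torch` and `diffusers` installed.

```python
from typing import Any, Dict


def build_svd_settings(num_frames: int = 25, fps: int = 7,
                       motion_bucket_id: int = 127,
                       width: int = 1024, height: int = 576,
                       seed: int = 42) -> Dict[str, Any]:
    """Collect the knobs described in the article into one dict.

    num_frames       -> number of frames
    width / height   -> aspect ratio (the pipeline requires multiples of 8)
    motion_bucket_id -> overall amount of motion (higher means more motion)
    """
    if num_frames <= 0 or fps <= 0:
        raise ValueError("num_frames and fps must be positive")
    if width % 8 or height % 8:
        raise ValueError("width and height must be divisible by 8")
    return {"num_frames": num_frames, "fps": fps,
            "motion_bucket_id": motion_bucket_id,
            "width": width, "height": height, "seed": seed}


def generate_video(image_path: str, output_path: str,
                   settings: Dict[str, Any]) -> None:
    """Animate one still image with SVD and write the result as a video file."""
    # Heavy imports are kept local so the settings helper works without a GPU stack.
    import torch
    from diffusers import StableVideoDiffusionPipeline
    from diffusers.utils import export_to_video, load_image

    pipe = StableVideoDiffusionPipeline.from_pretrained(
        "stabilityai/stable-video-diffusion-img2vid-xt",
        torch_dtype=torch.float16, variant="fp16",
    )
    pipe.enable_model_cpu_offload()  # trades speed for lower GPU memory use

    image = load_image(image_path).resize((settings["width"], settings["height"]))
    generator = torch.manual_seed(settings["seed"])  # fixed seed for repeatable runs
    frames = pipe(
        image,
        width=settings["width"], height=settings["height"],
        num_frames=settings["num_frames"], fps=settings["fps"],
        motion_bucket_id=settings["motion_bucket_id"],
        decode_chunk_size=8, generator=generator,
    ).frames[0]
    export_to_video(frames, output_path, fps=settings["fps"])
```

A typical call would be `generate_video("input.png", "output.mp4", build_svd_settings(motion_bucket_id=180))`, with both file names as placeholders. Fixing the seed is one way to tame the randomness noted above: with the seed held constant, changing a single setting at a time makes its effect easier to compare across runs.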

Stable Video Diffusion is currently only a base model and has not yet been productized, but Stability AI says it is "planning to continue expanding and building an ecosystem similar to Stable Diffusion," and plans to keep improving the model based on user feedback on safety and quality.

Website: Trial demo version and online version

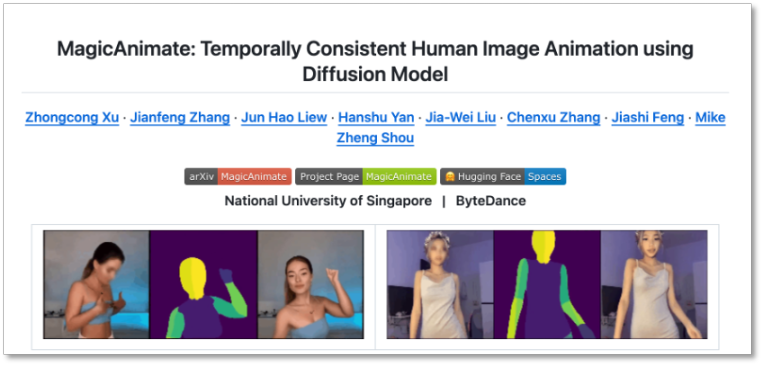

Magic Animate

Magic Animate is a diffusion-based portrait animation method that aims to enhance temporal consistency, stay faithful to the reference image, and improve animation fidelity. It was jointly released by Show Lab at the National University of Singapore and ByteDance.

In simple terms, given a reference image and a pose sequence (a video), it generates an animated video that follows the pose motion while preserving the identity features of the reference image. It is also very simple to operate, requiring only three steps: upload a static portrait photo; upload a demo video of the desired motion; adjust the parameters.

Interested users can also run Magic Animate locally from its GitHub repository.

Website: https://github.com/magic-research/magic-animate
