The three new AI chips from NVIDIA are not "improved versions," but "shrunken versions." Among them, HGX H20 has limitations in bandwidth, computing speed, and other aspects. It is expected that the price of H20 will decrease, but it will still be higher than domestic AI chip 910B.

Original Source: Titanium Media

Image Source: Generated by Wujie AI

On November 10, it was reported that chip giant NVIDIA will launch three AI chips for the Chinese market based on the H100 to cope with the latest chip export control from the United States.

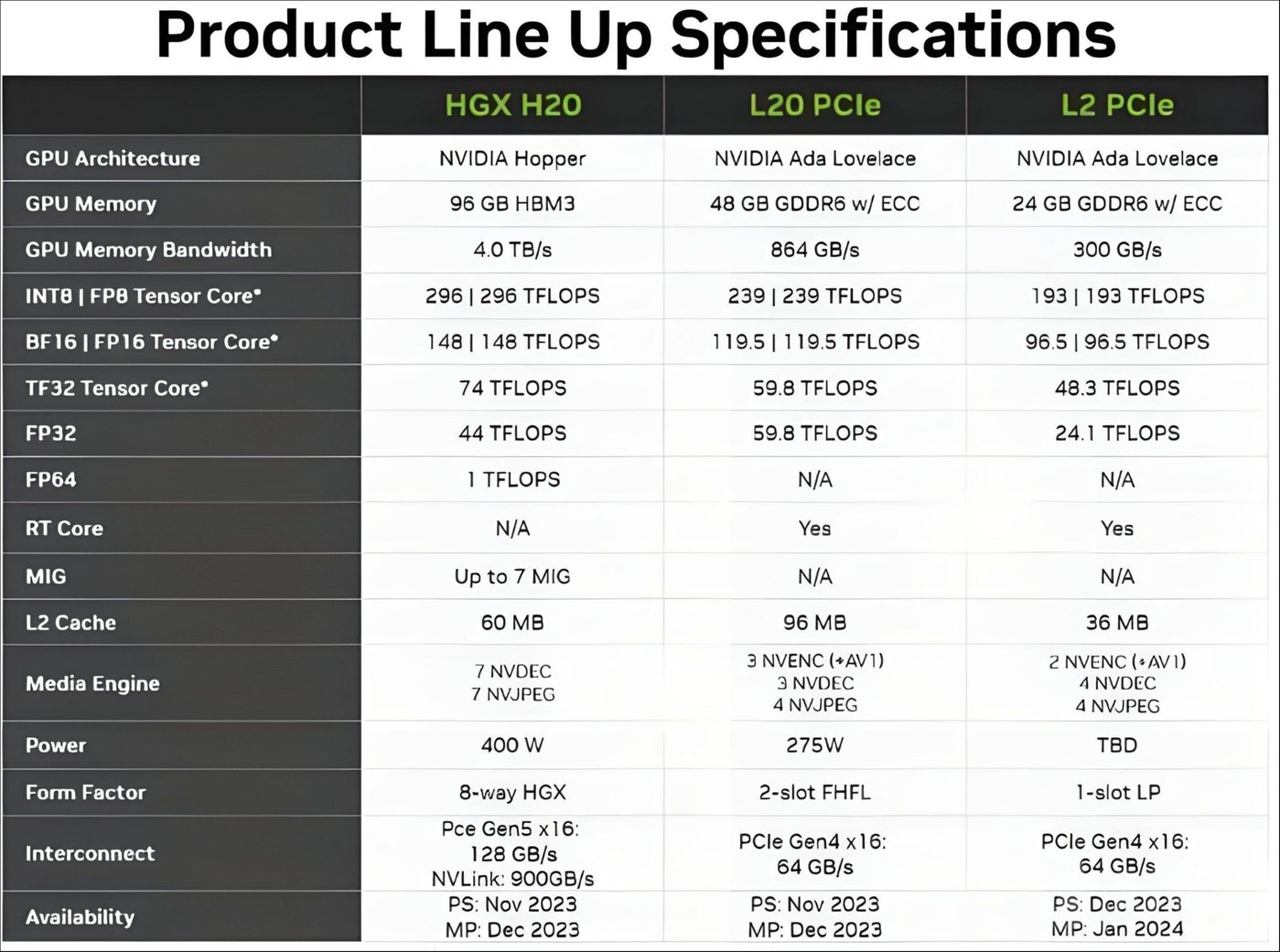

The specification documents show that NVIDIA is about to launch new products for Chinese customers named HGX H20, L20 PCIe, and L2 PCIe, based on NVIDIA's Hopper and Ada Lovelace architectures. Judging from the specifications and naming, the three products target training, inference, and edge scenarios, and will be announced as early as November 16. Sampling is scheduled for November to December this year, with mass production from December this year to January next year.

Titanium Media App has confirmed with multiple companies in NVIDIA's supply chain that the above information is accurate.

Titanium Media App also exclusively learned that these three AI chips from NVIDIA are not "improved versions," but "shrunken versions." Among them, the HGX H20, which is intended for AI model training, is limited in bandwidth, computing speed, and other respects. Its overall computing power is theoretically about 80% lower than that of the NVIDIA H100 GPU; in other words, the H20 delivers about 20% of the H100's combined computing power. It also adds HBM memory and NVLink interconnect modules, which raise the cost of computing power. Therefore, although the H20 will be priced below the H100, it is still expected to cost more than the domestic 910B AI chip.

"This is equivalent to widening the highway lanes, but not widening the toll booth entrance, which restricts the traffic. Similarly, technically, through hardware and software locks, the performance of the chip can be precisely controlled without the need for large-scale production line changes. Even if the hardware is upgraded, the performance can still be adjusted as needed. Currently, the new H20 has 'capped' the performance from the source," explained an industry insider about the new H20 chip. "For example, a task that originally took 20 days with H100 may now take 100 days with H20."

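The insider's 20-day versus 100-day example follows from simple inverse scaling. The sketch below is only an idealized illustration: it assumes training time is inversely proportional to available computing power and ignores memory, interconnect, and software effects. The function name `scaled_training_days` is hypothetical, and the inputs are the figures quoted in this article.

```python
def scaled_training_days(baseline_days: float, relative_compute: float) -> float:
    """Estimate run time on a slower chip, assuming time scales as 1 / compute.

    relative_compute is the slower chip's throughput as a fraction of the
    baseline chip's (0.20 means 20% of the baseline). This is an assumption
    for illustration, not a measured relationship.
    """
    if relative_compute <= 0:
        raise ValueError("relative_compute must be positive")
    return baseline_days / relative_compute


# Figures from the article: a 20-day H100 job, H20 at about 20% of H100 compute.
h20_days = scaled_training_days(baseline_days=20, relative_compute=0.20)
print(f"Estimated H20 run time: {h20_days:.0f} days")
```

Under these assumptions the estimate matches the insider's figure of roughly 100 days; real workloads may scale differently.
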
Despite the new round of chip restrictions imposed by the United States, NVIDIA does not seem to have given up on China's huge AI computing market.

So, can domestic chips replace them? Titanium Media App has learned that, in testing, the domestic 910B AI chip currently reaches only about 60%-70% of the A100's performance in large-model inference, and cluster model training is difficult to sustain. At the same time, the 910B has much higher power consumption and heat output and is not compatible with CUDA, making it difficult to fully meet the long-term model training needs of intelligent computing centers.

As of now, NVIDIA has not made any comments on this.

It is reported that on October 17, the Bureau of Industry and Security (BIS) of the U.S. Department of Commerce issued new export control regulations for chips, imposing new export controls on semiconductor products, including high-performance AI chips such as NVIDIA's; the restrictions took effect on October 23. According to NVIDIA's filing with the U.S. SEC, the immediately banned products include the A800, H800, and L40S, which are the most powerful AI chips.

In addition, the L40 and RTX 4090 chips retain the original 30-day window.

On October 31, there were reports that NVIDIA may be forced to cancel advanced chip orders worth $5 billion. On the news, NVIDIA's stock price plunged at one point. Previously, the A800 and H800, which NVIDIA supplied exclusively for China, could no longer be sold in the Chinese market because of the new U.S. regulations. These two chips were referred to as "castrated versions" of the A100 and H100, as NVIDIA had reduced their performance to comply with the previous U.S. regulations.

On October 31, Zhang Xin, a spokesperson for the China Council for the Promotion of International Trade, stated that the new U.S. export control rules on semiconductor exports to China further tightened restrictions on AI-related chips and semiconductor manufacturing equipment to China, and included multiple Chinese entities in the export control "entity list." These U.S. measures seriously violate market economic principles and international economic and trade rules, exacerbate the fragmentation and risks of the global semiconductor supply chain, and have changed the global supply and demand for semiconductors since the second half of 2022, causing a supply imbalance in 2023, affecting the global semiconductor industry landscape, and harming the interests of enterprises in various countries, including Chinese enterprises.

Performance comparison of NVIDIA HGX H20, L20, L2, and other products

Titanium Media App has learned that the three new AI chip products, HGX H20, L20, and L2, are based on NVIDIA's Hopper and Ada architectures and are suited to cloud training, cloud inference, and edge inference, respectively.

Among them, the latter two, L20 and L2, face comparable "domestic alternatives" and CUDA-compatible solutions for AI inference. The HGX H20, by contrast, is an H100-based chip cut down through firmware for AI training, mainly as a replacement for the A100/H800, and apart from NVIDIA's products there are few comparable solutions for model training in China.

The documents show that the new H20 uses advanced CoWoS packaging and increases its HBM3 (high-performance memory) capacity to 96GB, although this also raises the cost by $240. The H20's dense FP16 computing power is about 148 TFLOPS (trillions of floating-point operations per second), roughly 15% of the H100's, so additional algorithm and personnel costs are required. NVLink has been upgraded from 400GB/s to 900GB/s, so the interconnect rate will improve greatly.

According to the evaluation, the H100/H800 is currently the mainstream solution for computing clusters in practice. The theoretical limit for the H100 is a 50,000-card cluster with up to 100,000P of computing power; the largest practical H800 cluster is 20,000-30,000 cards, totaling 40,000P; and the largest practical A100 cluster is 16,000 cards, with up to 9,600P.

For the new H20, however, a cluster at the same theoretical limit of 50,000 cards, at about 0.148P per card, would total nearly 7,400P of computing power, which is lower than the H100/H800 and A100 figures. The H20 cluster is therefore far from the theoretical scale of the H100, and given the balance between computing power and communication, a reasonable median for overall computing power is about 3,000P. Training models with trillions of parameters would require additional cost and more computing capacity.
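
The cluster totals above are plain multiplication of card count by per-card computing power. The following is a rough sketch of that arithmetic using only the figures quoted in this article; the function name `cluster_compute_p` is hypothetical, communication overhead is ignored, and the implied per-card values for the H100 and A100 are derived here by division rather than stated in the source, which also does not say which precision those totals assume.

```python
def cluster_compute_p(cards: int, per_card_p: float) -> float:
    """Aggregate theoretical compute in P, ignoring communication overhead."""
    return cards * per_card_p


# H20: 50,000 cards at about 0.148P each (figures from the article).
h20_total_p = cluster_compute_p(50_000, 0.148)

# (card count, total P) as quoted for the other chips. The per-card value
# below is derived by division and is an inference, not a quoted spec.
quoted = {"H100": (50_000, 100_000), "A100": (16_000, 9_600)}
implied_per_card_p = {
    name: total / cards for name, (cards, total) in quoted.items()
}

print(f"H20 cluster total: about {h20_total_p:,.0f}P")
for name, (cards, total) in quoted.items():
    share = h20_total_p / total
    print(
        f"{name}: implied {implied_per_card_p[name]:.2f}P per card; "
        f"H20 cluster is {share:.1%} of its quoted total"
    )
```

The sketch reproduces the article's figure of roughly 7,400P for a 50,000-card H20 cluster, which sits below both the quoted H100 and A100 totals.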

Two semiconductor industry experts told Titanium Media App that based on the current performance parameters, it is very likely that NVIDIA's B100 GPU products will no longer be sold to the Chinese market next year.

Overall, if large enterprises want to train large models at the parameter scale of GPT-4, the scale of the computing cluster is crucial. Currently, only the H800 and H100 can handle large-scale model training, and the domestic 910B, whose performance falls between the A100 and H100, is only a "last resort backup choice."

The new H20 introduced by NVIDIA is better suited to vertical model training and inference and cannot meet the needs of trillion-parameter large model training. Its overall performance is nonetheless slightly higher than the 910B's, and combined with the NVIDIA CUDA ecosystem, this blocks the only path open to domestic cards in the Chinese AI chip market under the U.S. chip restrictions.

According to the latest financial report, in the quarter ending July 30, more than 85% of NVIDIA's $13.5 billion in sales came from the United States and China, with only about 14% coming from other countries and regions.

Following the news about the H20, NVIDIA's stock price rose slightly by 0.81% to $469.5 per share as of the close of the U.S. stock market on November 9. Over the past five trading days, NVIDIA's stock has risen by more than 10%, bringing its market value to $1.16 trillion.
