Source: New Intelligence Element

Image Source: Generated by Wujie AI

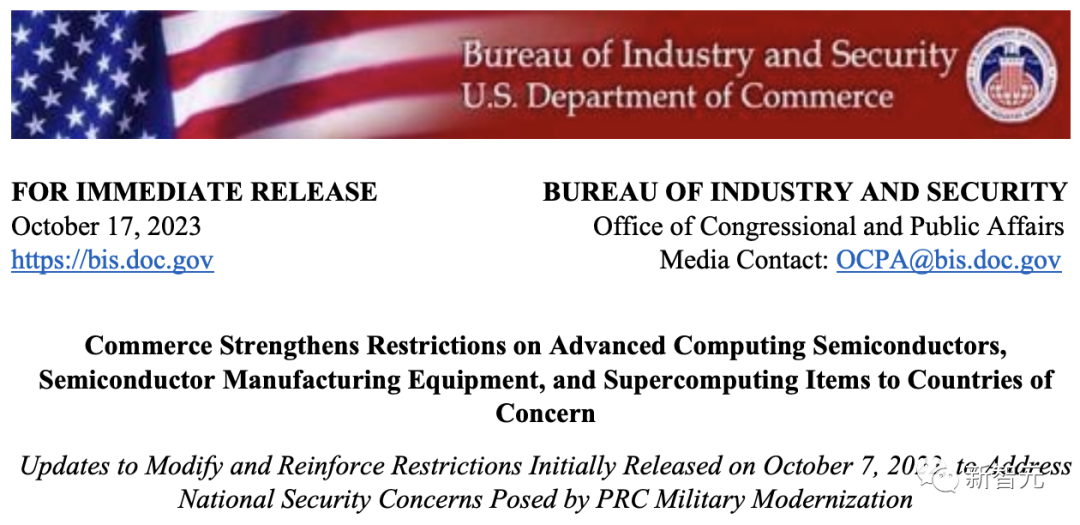

On October 17th local time, the United States officially announced new regulations, tightening the export of advanced AI chips to China.

Commerce Secretary Raimondo stated that the purpose of the control is to curb China's access to advanced chips, thereby hindering "breakthroughs in the field of artificial intelligence and complex computing."

Since then, restrictions on sales of high-performance semiconductors to China by Nvidia and other chipmakers have tightened, and it has become increasingly difficult for these companies to find workarounds.

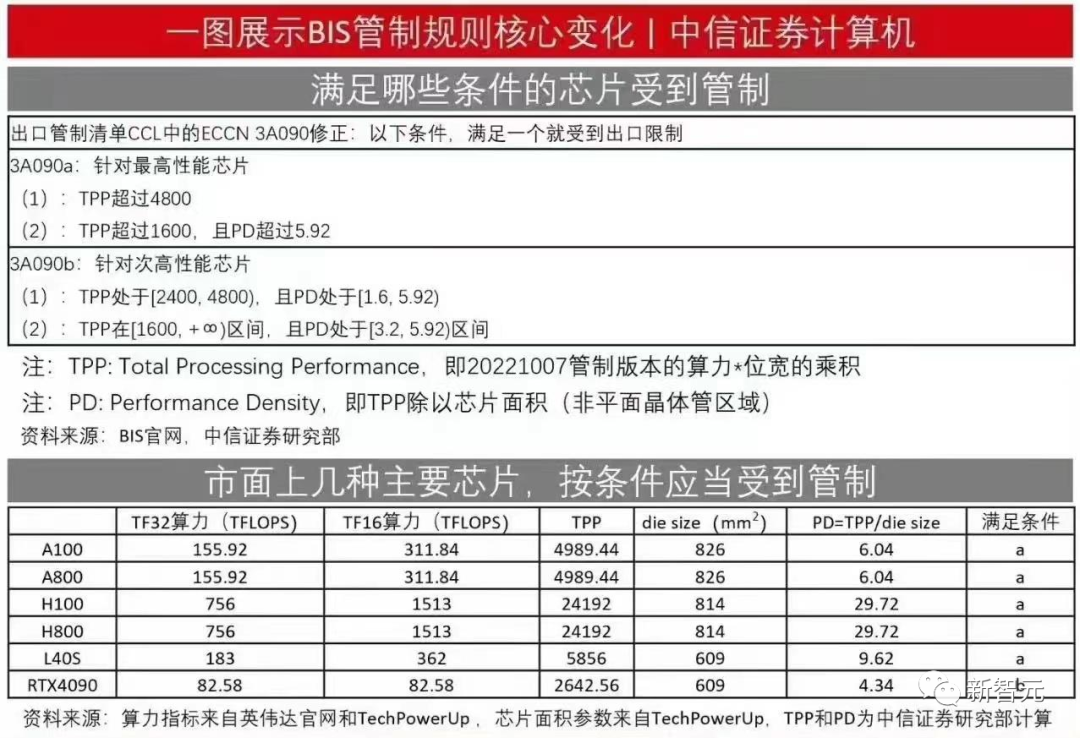

According to the relevant documents, a GPU chip is subject to export restrictions if it meets any one of the following conditions:

Source: CITIC Securities

Shares of Nvidia, AMD, and Intel fell sharply after the new regulations were announced.

It is reported that as much as 25% of Nvidia's data center chip revenue comes from the Chinese market.

In addition, as part of the new regulations, chip design companies Moore Threads and Biren Technology have been added to the latest blacklist.

Taking Effect Within 30 Days!

According to the documents issued by the U.S. Department of Commerce, the ban will take effect within 30 days.

Raimondo also said the regulations may be updated at least once a year going forward.

Document link: https://www.bis.doc.gov/index.php/about-bis/newsroom/2082

Total Computing Power Less Than 300 TFLOPS, and Below 370 GFLOPS per Square Millimeter

Last year's ban prohibited the export of chips that exceeded two thresholds: one on the chip's computing power, the other on the speed of communication between chips.

The reason for these provisions is that AI systems need to connect thousands of chips together to process large amounts of data.

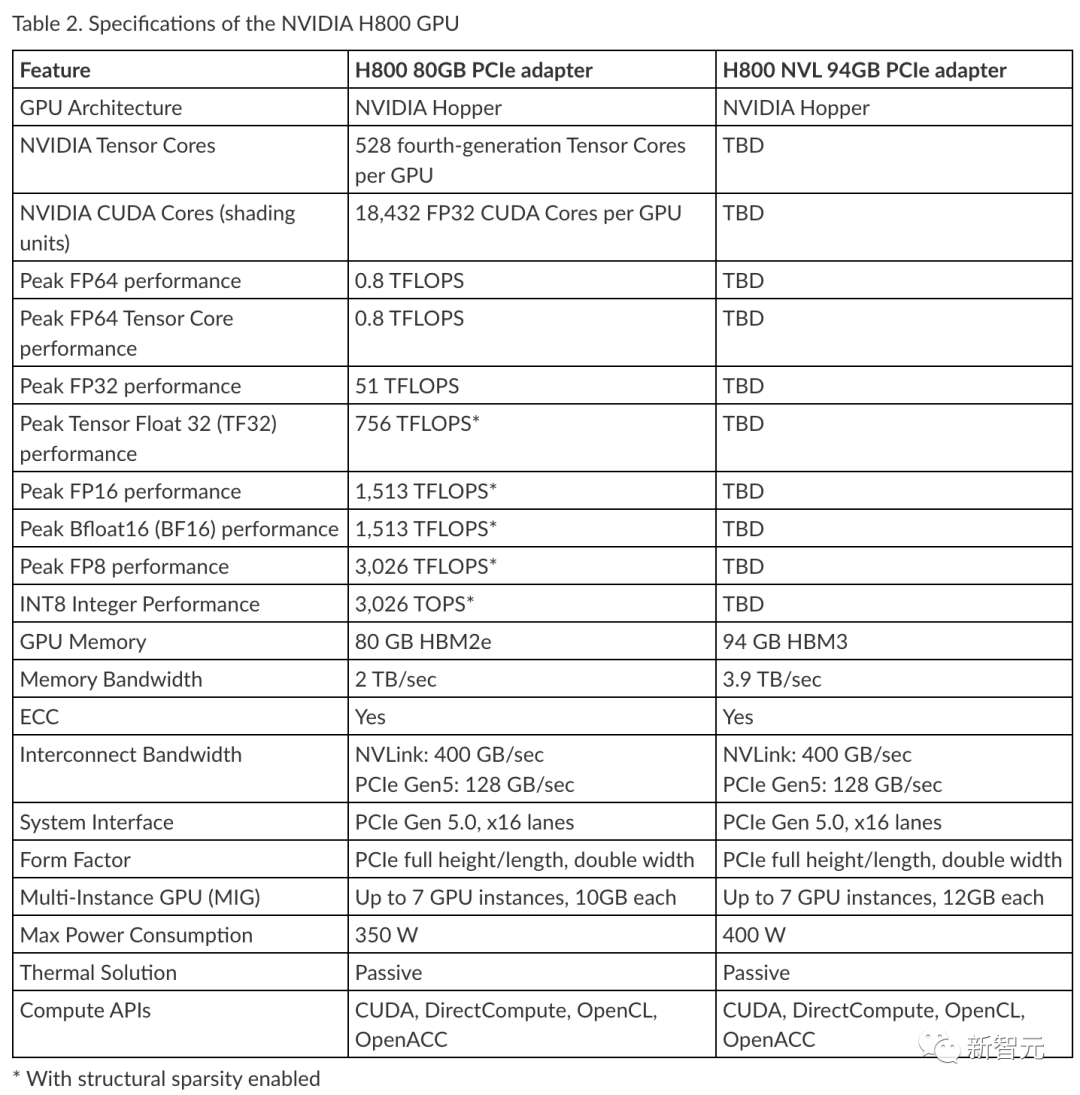

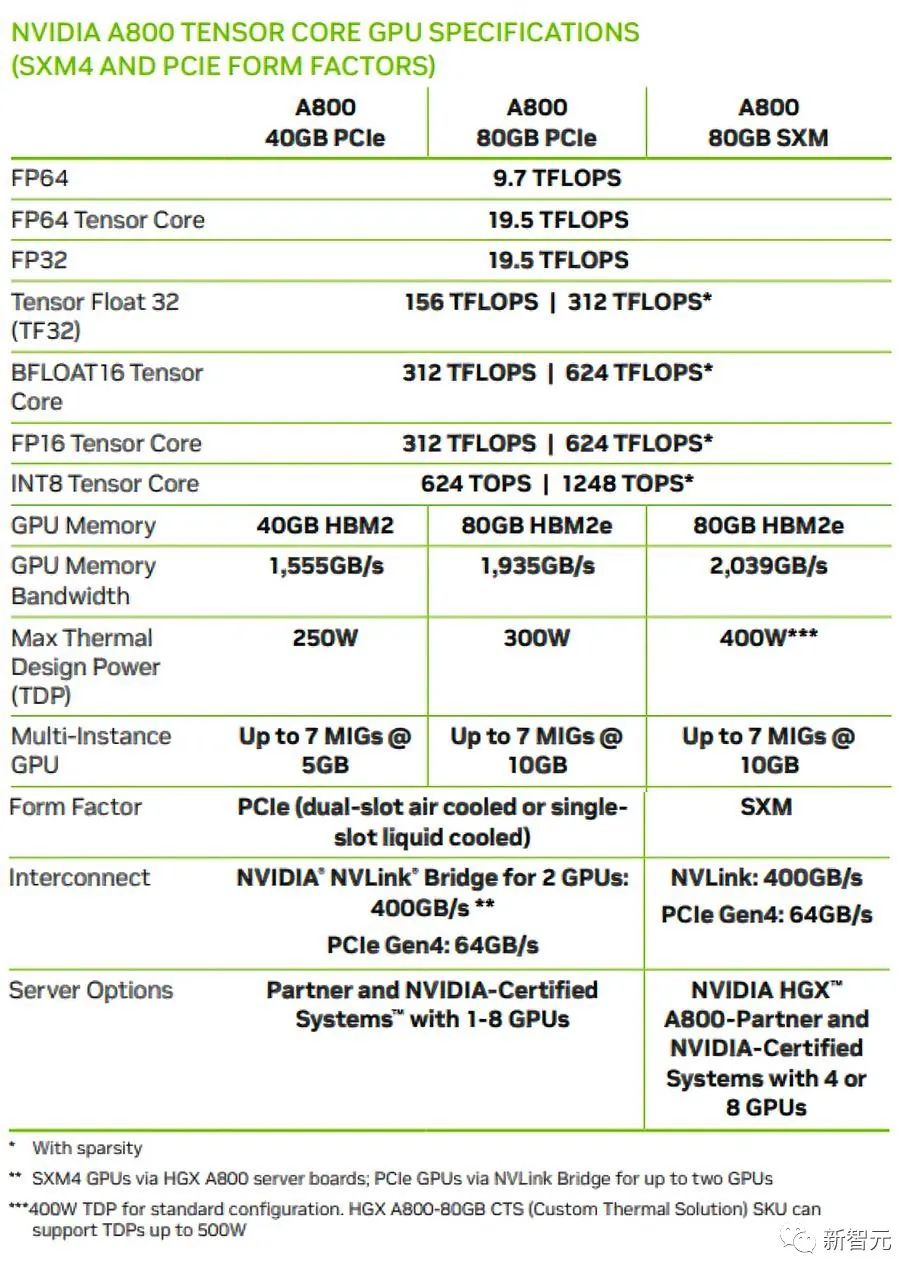

Nvidia's special-edition H800 and A800 chips kept chip-to-chip communication speed within those limits but retained powerful computing capabilities, so they could still be used to train AI models.

Earlier, Reuters reported that the data transfer speed between Nvidia H800 chips is 400 GB/s, less than half of the peak speed of H100 (900 GB/s).

This time, U.S. officials stated that the new regulations will focus more on computing power, which brings more chips under control, including Nvidia's special-edition chips.

In this round of export controls, the U.S. Department of Commerce has replaced the "bandwidth parameter" with "performance density," and prohibits the sale to Chinese companies of data center chips with performance of 300 TFLOPS (trillion floating-point operations per second) or higher.

Chips with performance of 150-300 TFLOPS are also prohibited from sale if their performance density is 370 GFLOPS (billion floating-point operations per second) per square millimeter or higher.

Chips in that speed range but with lower performance density fall into a "gray area," which means that sales to China must be reported to the U.S. government.
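
The thresholds described above can be sketched as a small classifier. This is only an illustration of the article's summary, not the legal text of the rule; the function name `classify_chip`, the label strings, and the sample chips are hypothetical.

```python
# Thresholds as summarized in this article (assumption: not the legal text).
TOTAL_LIMIT_TFLOPS = 300.0         # at or above this: sale prohibited
BAND_FLOOR_TFLOPS = 150.0          # lower edge of the density-tested band
DENSITY_LIMIT_GFLOPS_MM2 = 370.0   # GFLOPS per square millimeter


def classify_chip(tflops: float, die_area_mm2: float) -> str:
    """Classify a data center chip as 'prohibited', 'notify', or 'below thresholds'."""
    if die_area_mm2 <= 0:
        raise ValueError("die_area_mm2 must be positive")
    if tflops >= TOTAL_LIMIT_TFLOPS:
        return "prohibited"
    if tflops >= BAND_FLOOR_TFLOPS:
        # Convert TFLOPS to GFLOPS, then divide by die area.
        density = tflops * 1000.0 / die_area_mm2
        if density >= DENSITY_LIMIT_GFLOPS_MM2:
            return "prohibited"
        return "notify"  # the "gray area": the sale must be reported
    return "below thresholds"


# Hypothetical chips (performance in TFLOPS, die area in mm^2), not real products.
samples = [("chip_a", 320, 800), ("chip_b", 200, 500),
           ("chip_c", 200, 800), ("chip_d", 100, 600)]
for name, tflops, area in samples:
    print(name, classify_chip(tflops, area))
```

The consumer-product exemption and the separate reporting requirement mentioned in the article are not modeled here.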

Although these rules do not apply to chips for "consumer products," the U.S. Department of Commerce stated that exporters must also report when exporting chips with speeds exceeding 300 TFLOPS, so that authorities can track whether these chips are being used in large quantities for training AI models.

According to the new regulations, affected Nvidia chips include but are not limited to the A100, A800, H100, H800, L40, and L40S, and even the RTX 4090 is subject to additional licensing requirements.

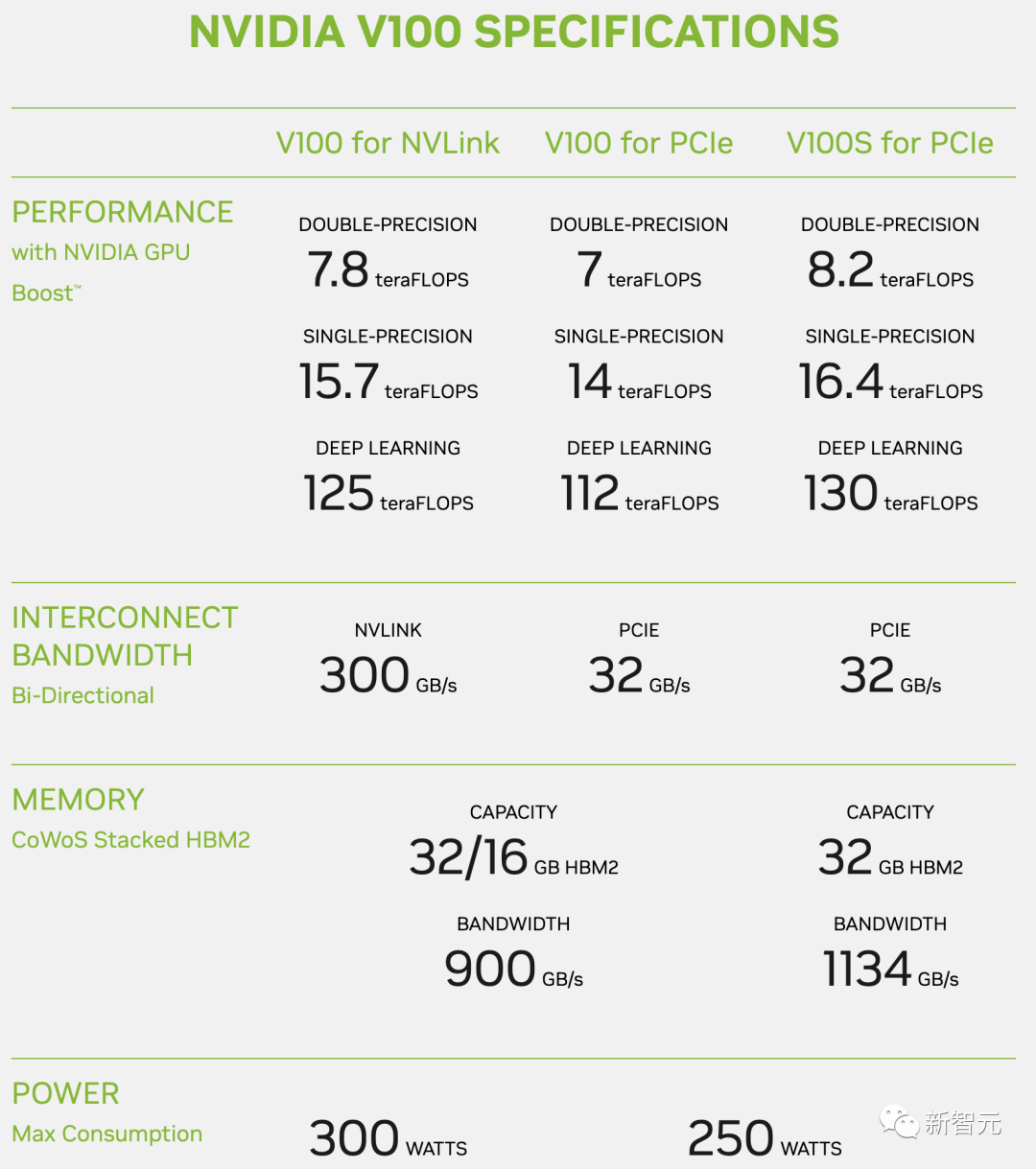

For now, it seems that only the Nvidia V100 remains. According to its specifications, the chip-to-chip communication speed is 300 GB/s, and the peak performance is 125 TFLOPS.

In addition, any system that integrates one or more of the covered integrated circuits (including but not limited to DGX and HGX systems) is also within the scope of the new licensing requirements.

However, Nvidia expects that the latest U.S. restrictions will not have a significant impact in the short term.

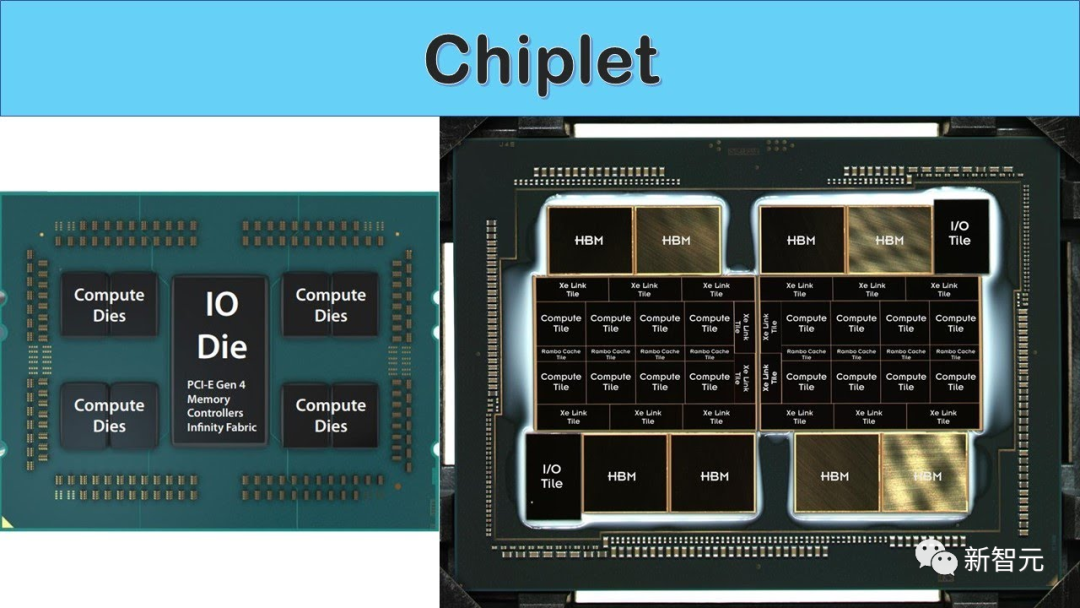

Blocking the Chiplet Loophole

Another issue that the United States is attempting to address this time is chiplets. With this technology, smaller dies can be connected together to form a complete chip.

The United States is concerned that Chinese companies could use chiplets to obtain smaller chips that comply with the regulations and then quietly assemble them into larger chips.

The new "performance density" restriction, which limits the computing power of a chip of a given size, is aimed at exactly this kind of workaround.
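
A minimal sketch of why a density limit matters here: performance density is performance divided by die area, so cutting a large chip into smaller chiplets lowers each piece's total performance but leaves its density unchanged. The figures below are hypothetical and chosen only for illustration; `performance_density` is not a name from the regulations.

```python
def performance_density(tflops: float, die_area_mm2: float) -> float:
    """Return performance density in GFLOPS per square millimeter."""
    return tflops * 1000.0 / die_area_mm2


# Hypothetical monolithic chip: 320 TFLOPS on an 800 mm^2 die.
monolithic = performance_density(320.0, 800.0)

# The same design split into two hypothetical chiplets of 160 TFLOPS on 400 mm^2 each.
chiplet = performance_density(160.0, 400.0)

print(monolithic, chiplet)  # both are 400.0 GFLOPS/mm^2
```

Each chiplet falls under the 300 TFLOPS total, yet its density is still above the 370 GFLOPS per square millimeter limit described in the article.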

The chiplet approach may be the future core of the Chinese semiconductor industry.

Chinese GPU Companies All Added to the Blacklist

Industry insiders believe that if U.S. chips are banned in China, Chinese companies will strive to fill the gaps in the market.

Both Moore Threads and Biren Technology were founded by former Nvidia employees and are considered the best candidates for domestically produced alternatives to Nvidia chips.

But now these two companies have also been added to the Entity List, which bars Taiwanese semiconductor companies and other manufacturers that use U.S. equipment from producing chips for them.

Red Flags for Chip Factories

The United States stated that any chip containing 50 billion or more transistors and using high-bandwidth memory raises a red flag.

Exporters need to take extra care to check whether they need a license to ship such chips to China.

This threshold covers almost all advanced AI chips and helps chip factories detect attempts to evade the rules.

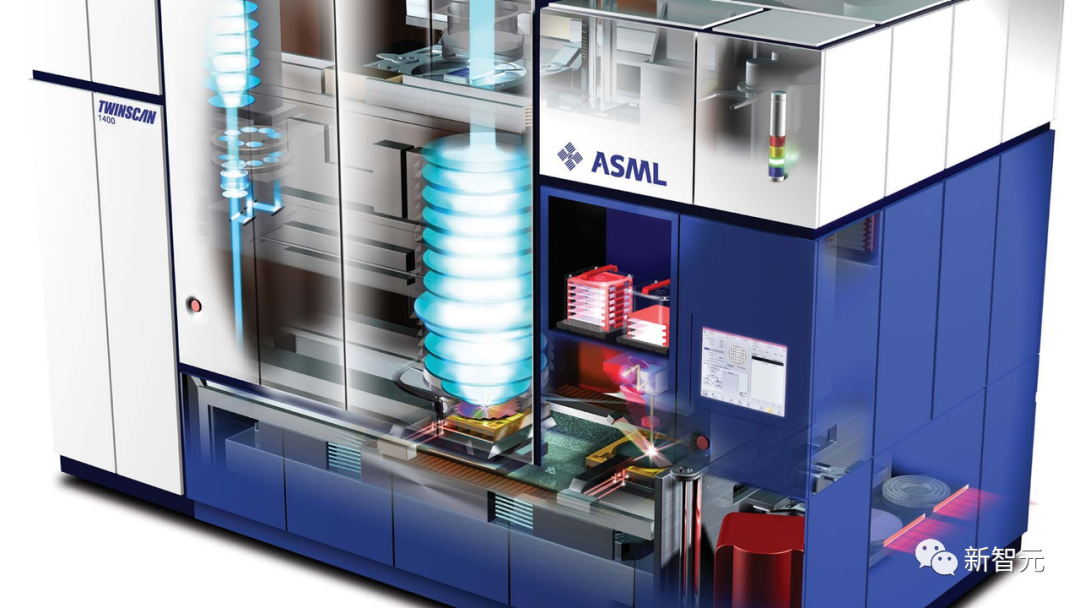

DUV Lithography Machines Also Restricted

The United States has also extended export license requirements for advanced chips to 22 countries.

The scope of control has also expanded to include any company whose ultimate headquarters is in one of those countries, to prevent overseas subsidiaries from purchasing prohibited chips.

The United States has also imposed licensing requirements for chip manufacturing tools on the remaining 21 countries, fearing that this equipment may be transferred to China.

DUV lithography systems from the Netherlands are also restricted, to prevent ASML from shipping older DUV models and components to chip factories in China.

DUV equipment is not as advanced as EUV equipment, but it can still manufacture such chips, albeit at a higher cost. EUV exports have already been completely banned.

Major Companies Stockpiling: 100,000 A800 Chips to be Delivered This Year

For domestic internet giants, how much inventory do they currently have?

The specific amount is currently unknown. However, major domestic companies have just spoken out: we have enough stockpiled.

Earlier, the Financial Times reported that domestic internet giants had ordered Nvidia chips worth about $5 billion.

According to the report, Baidu, ByteDance, Tencent, and Alibaba have ordered a total of 100,000 A800 chips from Nvidia, worth $1 billion, to be delivered this year.

In addition, there is also a $4 billion GPU order to be delivered in 2024.

Two insiders revealed that ByteDance has already stockpiled at least 10,000 Nvidia GPUs to support various generative AI products.

They added that the company has also ordered nearly 70,000 A800 chips, to be delivered next year, worth about $700 million.
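
As a quick consistency check on the reported order figures, the implied price per chip can be computed directly. This is a back-of-the-envelope sketch: the inputs are the rounded numbers quoted above (the ByteDance figures are approximate in the report), and `unit_price` is a helper introduced here only for illustration.

```python
def unit_price(order_value_usd: float, chip_count: int) -> float:
    """Return the implied average price per chip in U.S. dollars."""
    return order_value_usd / chip_count


# Combined order: $1 billion for 100,000 A800 chips.
combined = unit_price(1_000_000_000, 100_000)

# ByteDance order: about $700 million for nearly 70,000 A800 chips.
bytedance = unit_price(700_000_000, 70_000)

print(combined, bytedance)  # both come to roughly $10,000 per chip
```

Both reported orders imply roughly the same price of about $10,000 per A800.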

Nvidia said in a statement, "Consumer internet companies and cloud providers invest billions of dollars in data center components each year, and often place orders months in advance."

Earlier this year, as global generative AI continued to advance, according to insiders from domestic technology companies, most Chinese internet giants had fewer than a few thousand chips available for training large language models.

Since then, with growing demand, the cost of these chips has also increased. An Nvidia distributor stated, "The price of A800 in the hands of distributors has increased by over 50%."

For example, Alibaba released its own large model, TongYi QianWen, and integrated it into various product lines.

At the same time, Baidu is also fully committed to the research and application of large models, continuously iterating and upgrading WenXin YiYan, which can now rival GPT-4.

In April of this year, Tencent Cloud released a new server cluster, which includes Nvidia H800.

According to two sources, Alibaba Cloud has also obtained thousands of H800 chips from Nvidia, and many customers have contacted Alibaba about using cloud services powered by these chips for their own model research and development.

What Chips are Used to Train Large Models?

From the beginning of the year to the present, the industry has been developing its own large models, usually benchmarked against the "world's strongest" GPT-4 model.

It was previously reported that GPT-4 uses a mixture-of-experts (MoE) architecture consisting of eight 220B-parameter models, for a total parameter count of about 1.76 trillion.

This parameter count has left many people in awe, and the computational power it requires is pushing the limits of what is available.
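
The reported total follows from simple multiplication. The sketch below just reproduces that arithmetic; it assumes the eight models are counted independently, and the constant names are illustrative.

```python
NUM_EXPERTS = 8                       # reported number of expert models
PARAMS_PER_EXPERT = 220 * 10**9       # reported 220B parameters each

total_params = NUM_EXPERTS * PARAMS_PER_EXPERT
print(total_params / 1e12, "trillion parameters")  # 1.76 trillion
```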

Specifically, OpenAI reportedly trained GPT-4 with approximately 2.15e25 FLOPs of total compute, over 90 to 100 days on about 25,000 A100s, with a utilization rate between 32% and 36%.
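
These figures can be cross-checked with a rough estimate: total compute is approximately the number of GPUs times peak throughput per GPU times utilization times training time. The sketch below assumes a peak of 312 TFLOPS per A100 (its dense 16-bit tensor peak), which is not stated in the article; `training_flops` is a helper name introduced here.

```python
A100_PEAK_FLOPS = 312e12  # assumption: A100 dense FP16/BF16 tensor peak, FLOP/s


def training_flops(num_gpus: int, days: float, utilization: float,
                   peak_flops_per_gpu: float = A100_PEAK_FLOPS) -> float:
    """Estimate total floating-point operations performed during training."""
    seconds = days * 24 * 3600
    return num_gpus * peak_flops_per_gpu * utilization * seconds


low = training_flops(25_000, days=90, utilization=0.32)
high = training_flops(25_000, days=100, utilization=0.36)
print(f"{low:.2e} to {high:.2e} FLOPs")
```

Under that assumption, the reported 2.15e25 figure falls inside the estimated range, so the reported numbers are at least mutually consistent.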

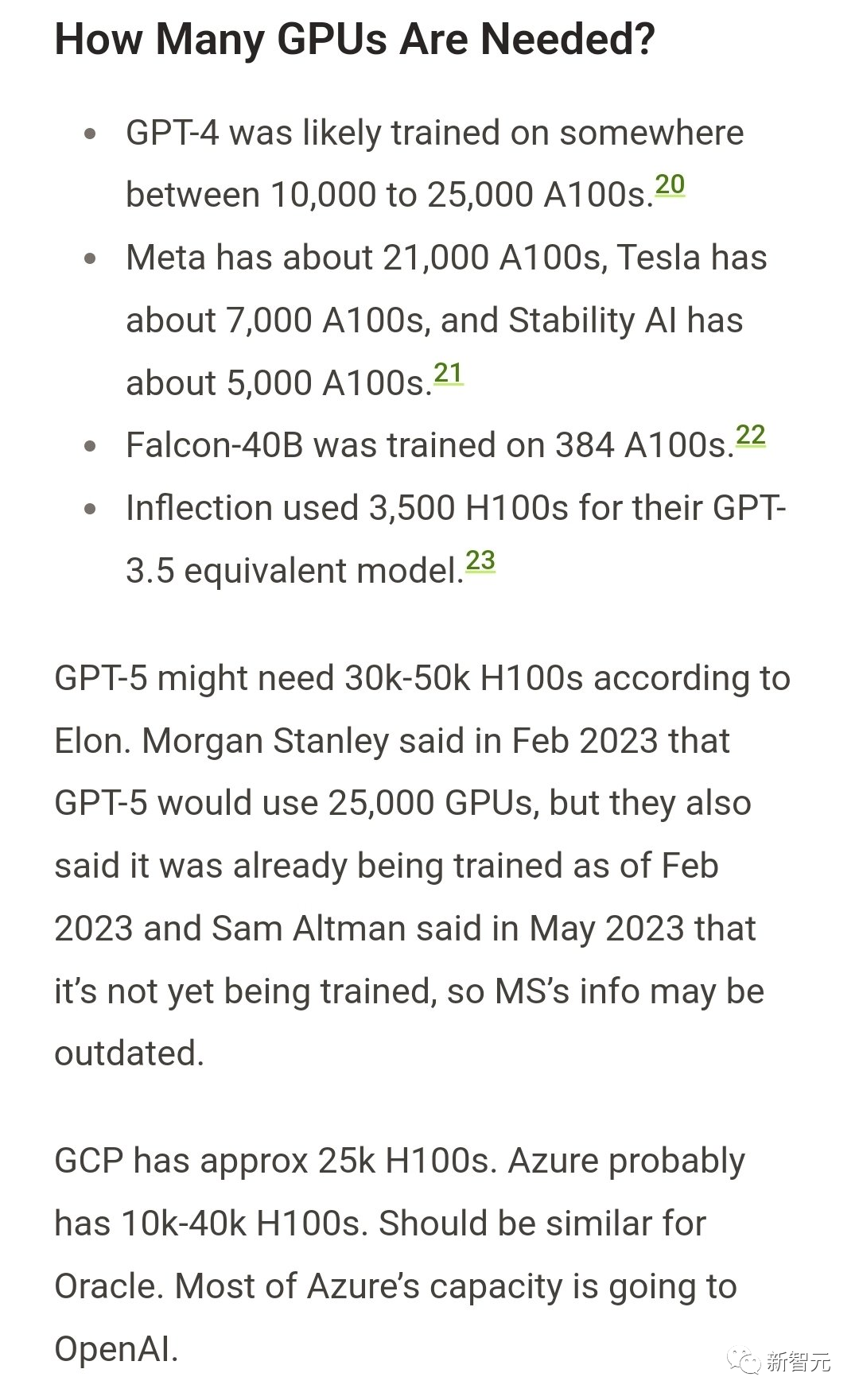

So, for the next generation model, the so-called "GPT-5," what will be the demand for computational power?

Previously, Morgan Stanley stated that GPT-5 would use 25,000 GPUs and had been in training since February, but Sam Altman later clarified that GPT-5 was not yet being trained.

According to Musk, GPT-5 may require 30,000-50,000 H100s.

This means that if tech giants want to keep iterating on and upgrading their large models, they will need enormous amounts of computing power.

In response, Nvidia's Chief Scientist Bill Dally once stated, "As training demand doubles every 6 to 12 months, this gap will rapidly widen over time."
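
To put the quoted doubling rate in perspective, the implied growth can be computed as 2 raised to the elapsed time divided by the doubling period. This is a minimal sketch; the two-year horizon is chosen only for illustration, and `growth_factor` is a hypothetical helper.

```python
def growth_factor(elapsed_months: float, doubling_months: float) -> float:
    """Return how many times demand multiplies over the elapsed period."""
    return 2.0 ** (elapsed_months / doubling_months)


# Over two years, doubling every 6 months versus every 12 months.
fast = growth_factor(24, doubling_months=6)    # 16x
slow = growth_factor(24, doubling_months=12)   # 4x
print(fast, slow)
```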

References:

https://www.ft.com/content/be680102-5543-4867-9996-6fc071cb9212

https://www.reuters.com/technology/how-us-will-cut-off-china-more-ai-chips-2023-10-17/
