Source: New Wisdom

Image source: Generated by Unbounded AI

Look closely: this player is skillfully playing "Minecraft," effortlessly collecting snacks and breaking blocks.

Then the camera turns, and the player's true identity is revealed: a chimpanzee!

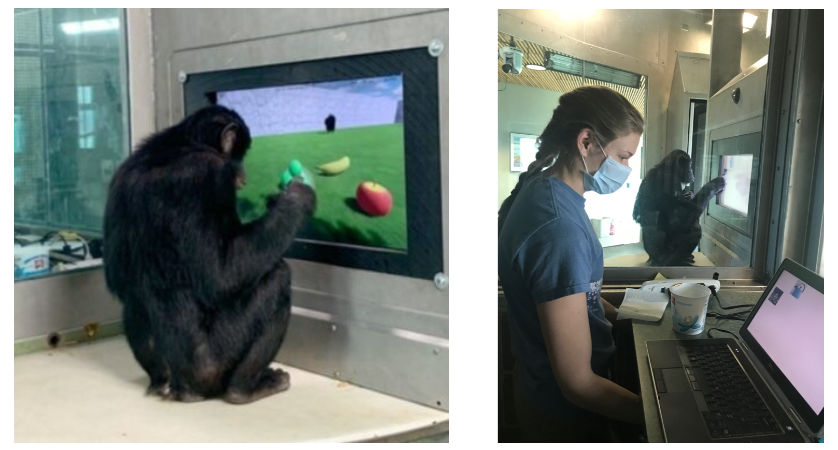

Yes, this is a non-human biological neural network experiment from the "Ape Initiative."

And the star of the experiment, Kanzi, is a 42-year-old bonobo.

After training, it picked up a range of skills, took on challenging environments such as villages, desert temples, and the Nether portal, and successfully reached the finish line.

AI experts noted that the way Kanzi's trainers taught it these skills has surprisingly much in common with teaching AI to play Minecraft, drawing on contextual reinforcement learning, RLHF, imitation learning, and curriculum learning.

When the Chimpanzee Learns to Play "Minecraft"

Kanzi, a bonobo from the Ape Initiative, is one of the smartest chimpanzees in the world. It understands English and can use touch screens.

At the Ape Initiative, Kanzi has access to various electronic touch screens, which may have laid a solid foundation for it to quickly pick up "Minecraft."

When people first showed "Minecraft" to Kanzi, it immediately noticed the green arrow on the screen and used its finger to swipe towards the target.

Learning Three Skills

In just a few seconds, Kanzi figured out how to move in "Minecraft."

Later, it also learned to collect rewards.

Each time it collected a reward, it received snacks such as peanuts, grapes, and apples.

Kanzi's control grew steadily more skillful.

It could tell the target arrow apart from obstacles that looked like the same green column, and avoid those obstacles while collecting rewards.

Of course, Kanzi also encountered challenges. It needed to use a break tool to break large blocks, an operation it had never seen before.

Seeing Kanzi stuck, the humans nearby stepped in to help, pointing to the tool button it needed. Even after watching, however, Kanzi still didn't understand.

Humans had to take action themselves, using the tool to break the block. After watching, Kanzi seemed to ponder, and to everyone's surprise, it imitated and clicked the button to break the block. The crowd erupted in cheers.

Kanzi's skill tree now held two skills: collecting snacks and breaking blocks.

While Kanzi was learning cave skills, the staff noticed that if it fell off the block it was trying to break, it would simply walk away. So they designed a custom task for it:

Break through blocks in a cave lined with diamond walls, to prove that it had mastered both collecting and breaking.

Things went smoothly in the cave until Kanzi got stuck in a corner, and at that point it needed human help.

Finally, Kanzi reached the bottom of the cave and broke the last wall.

The crowd erupted in cheers, and Kanzi happily high-fived the staff.

Deceiving Humans

Next, something interesting happened: the staff invited a human player to play the game with Kanzi, without telling the player who Kanzi was.

The staff intended to see how long it would take for the player to realize that they were not playing with a human.

At first, the player simply thought the other side was moving unbelievably slowly.

When Kanzi's screen was shown to him, he was so startled that he leaned back.

Escaping the Maze

As Kanzi kept playing "Minecraft," it grew bolder and bolder.

Every time Kanzi collected a reward, people would affirm its behavior with cheers. If it failed, the trainers would applaud and cheer to encourage it to continue playing.

By this point, it had learned to unlock the underground maze map, breaking the obstacles in front of it and finding the amethyst.

When Kanzi got stuck, it would go out to relax and bring back a stick to put next to itself.

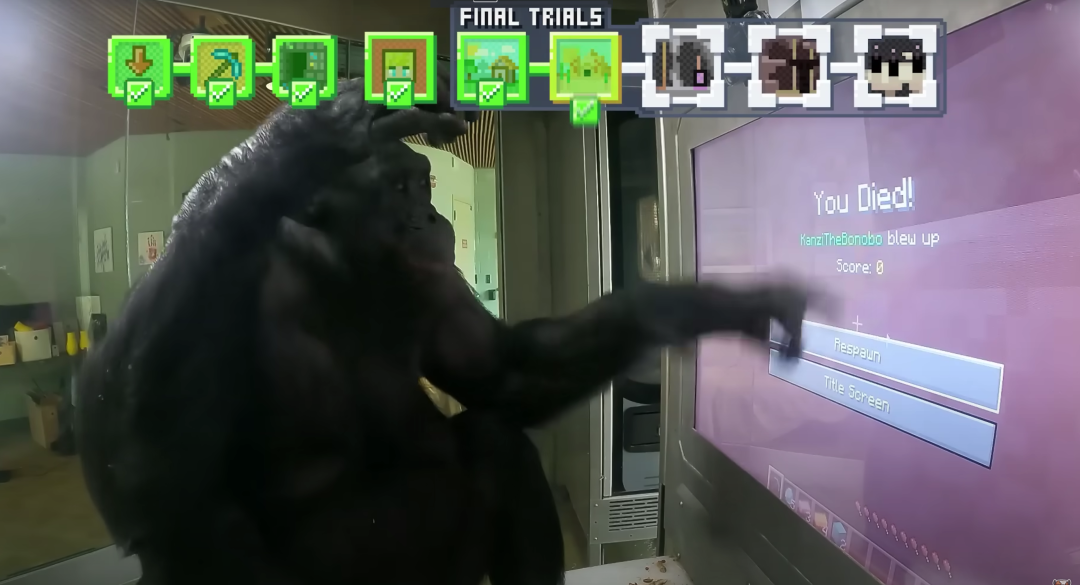

Even when it failed, Kanzi would click the button to respawn.

The final challenge was a huge maze full of forks.

Because it couldn't find its way out for a long time, Kanzi became agitated: it screamed while waving a stick, and at one point got so angry that it broke the stick.

Eventually it calmed down, kept working through the maze, and finally escaped.

Immediately, applause and cheers surrounded Kanzi.

It seems that Kanzi the bonobo has truly mastered "Minecraft."

Similarities Between Teaching Chimpanzees and Teaching AI

Watching a bonobo skillfully play an electronic game may seem somewhat absurd and incredible.

NVIDIA senior scientist Jim Fan commented:

Although Kanzi and its ancestors have never seen "Minecraft" in their lives, it quickly adapted to the textures and physical properties of "Minecraft" displayed on the electronic screen.

Those textures look nothing like the natural environment they have always lived in. This level of generalization far exceeds that of the most powerful vision models to date.

Training animals to play "Minecraft" essentially follows the same principles as training artificial intelligence:

- Contextual Reinforcement Learning:

Whenever Kanzi reaches a milestone in the game, it receives fruit or peanuts as a reward, which motivates it to keep following the rules of the game.

- RLHF:

Kanzi doesn't understand human language, but it can see the trainers cheering for it and occasionally responds. The cheers give Kanzi a strong signal that it is on the right path.

- Imitation Learning:

After the trainers demonstrate how to complete a task, Kanzi immediately grasps what the related controls do. Demonstration works far better than relying on rewards alone.

- Curriculum Learning:

The trainers start with very simple environments and gradually teach Kanzi to master control skills. Eventually, Kanzi is able to navigate complex caves, mazes, and the Nether.
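The curriculum-learning and reward ideas in the list above can be illustrated with a toy scheduler. This is a minimal sketch under stated assumptions, not the Ape Initiative's actual training protocol: the stage names, the window size, and the success threshold are all hypothetical. Each success earns one treat, and the learner moves to a harder stage only after its recent success rate clears the threshold.

```python
from collections import deque


class CurriculumScheduler:
    """Toy curriculum: stay on a stage until the recent success rate clears a bar.

    Hypothetical sketch; stage names, window and threshold are assumptions.
    """

    def __init__(self, stages, window=5, threshold=0.8):
        self.stages = list(stages)
        self.window = window
        self.threshold = threshold
        self.index = 0
        # Only the most recent `window` outcomes count toward promotion.
        self.recent = deque(maxlen=window)

    @property
    def current(self):
        return self.stages[self.index]

    def record(self, success):
        """Log one attempt and return its reward (1 treat on success, else 0)."""
        reward = 1 if success else 0
        self.recent.append(reward)
        rate = sum(self.recent) / len(self.recent)
        ready = len(self.recent) == self.window and rate >= self.threshold
        if ready and self.index < len(self.stages) - 1:
            self.index += 1       # promote to the next, harder stage
            self.recent.clear()   # start fresh statistics on the new stage
        return reward


# Hypothetical stages loosely following the article's progression.
sched = CurriculumScheduler(
    ["move", "collect", "break", "cave", "maze"], window=3, threshold=0.6
)
outcomes = [True, False, True, True, True, False, True, True]
treats = sum(sched.record(ok) for ok in outcomes)
print(sched.current, treats)  # break 6
```

Using a `deque` with `maxlen` means old outcomes drop out of the window automatically, so a run of early failures does not hold the learner back once it has started succeeding.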

Moreover, even with similar training techniques, the visual system of animals can recognize and adapt to new environments in a very short time, while AI visual models often require more time and training costs, and frequently struggle to achieve the desired results.

Once again, we are plunged into the abyss of Moravec's paradox:

Artificial intelligence's strengths are the inverse of human ones. In activities we consider low-level, instinctive, or effortless (such as perception and motor control), AI performs poorly. In high-level activities that require reasoning and abstraction (such as logical reasoning and language understanding), however, AI easily surpasses humans.

This perfectly corresponds to the results of this experiment:

Our best artificial intelligence (GPT-4) approaches human-level understanding of language, but lags far behind animals in perception and recognition.

Netizen: So Chimpanzees Get Angry When Playing Games

Both Kanzi and LLMs can play "Minecraft," but Kanzi learns in significantly different ways from LLMs, and that difference deserves attention.

Faced with Kanzi's exceptional learning ability, netizens began to make jokes.

Some predicted that the world would become a "Planet of the Apes" in 6 years…

Or that chimpanzees would drink cola and integrate into human society…

Even Jack Ma was not spared, being turned into a "monkey version" of Elon Musk.

Some also said that Kanzi is the first non-human with gamer rage, and he's quite satisfied.

"If Kanzi had his own gaming channel, I would definitely watch it."

"In playing games, there isn't much difference between humans and bonobos. We are both motivated by rewards to perform certain tasks and achieve goals, the only difference is the actual content of the rewards."

"In 'Minecraft,' Kanzi's reward for mining diamonds is more immediate and primitive (food), while our reward for mining diamonds is more delayed and game-related. In short, it's a bit crazy."

First GPT learned to play "Minecraft," and now bonobos can play too, which has people looking forward to what the future might hold with Neuralink.

Jim Fan Teaches AI Agents to Play "Minecraft"

In teaching AI to play Minecraft, humans have already accumulated a lot of advanced experience.

As early as May of this year, Jim Fan's team connected NVIDIA's AI agent to GPT-4 and created a new AI agent, Voyager.

Voyager not only outperforms AutoGPT but is also capable of lifelong learning in the game!

It can autonomously write code to dominate "Minecraft," without any human intervention.

It is fair to say that Voyager brings us a step closer to artificial general intelligence (AGI).

True Digital Life

Once connected to GPT-4, Voyager needs no human intervention at all: it is entirely self-taught.

It has not only mastered basic survival skills such as mining, building houses, collecting, and hunting, but has also learned to explore open-endedly.

By being self-driven, it continuously expands its inventory and equipment, equips itself with armor of different levels, uses shields to block attacks, and uses fences to contain animals.

The emergence of large language models has opened new possibilities for building embodied agents, because LLM-based agents can draw on the world knowledge embedded in pretrained models to generate coherent action plans or executable policies.

Jim Fan: We had this idea before BabyAGI/AutoGPT, and spent a lot of time finding the best gradient-free architecture.

Introducing GPT-4 into the agent opened up a new paradigm (training through code execution rather than gradient descent), which lets the agent overcome its previous inability to learn over a lifetime.

OpenAI scientist Andrej Karpathy also praised the work, calling it a "gradient-free architecture" for high-level skills. Here the LLM acts like the prefrontal cortex, orchestrating the lower-level Mineflayer API by writing code.

3 Key Components

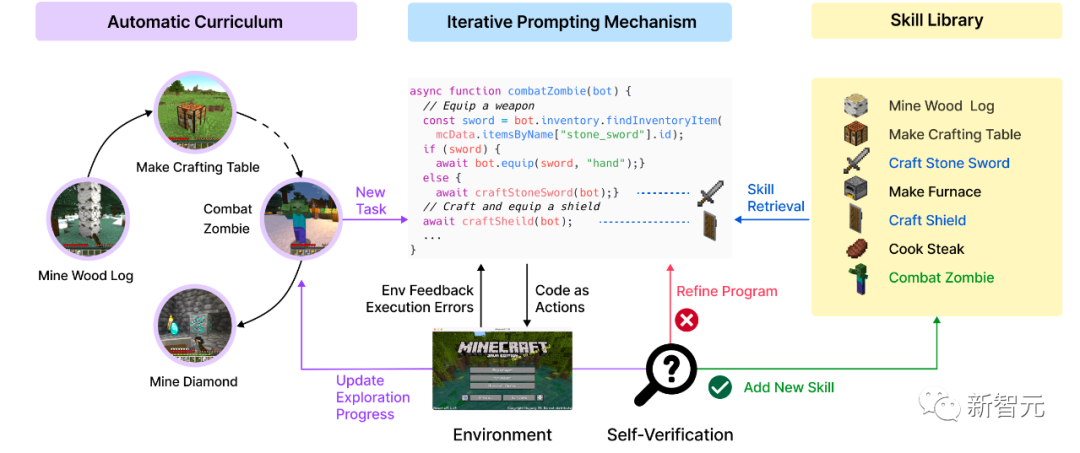

To make Voyager an effective lifelong learning intelligent agent, teams from institutions such as NVIDIA and Caltech have proposed 3 key components:

1. An iterative prompting mechanism that combines game feedback, execution errors, and self-verification to improve programs

2. A skill code library for storing and retrieving complex behaviors

3. An automatic curriculum to maximize the agent's exploration

First, Voyager will attempt to use a popular Minecraft JavaScript API (Mineflayer) to write a program to achieve a specific goal.

Game environment feedback and JavaScript execution errors (if any) will help GPT-4 improve the program.

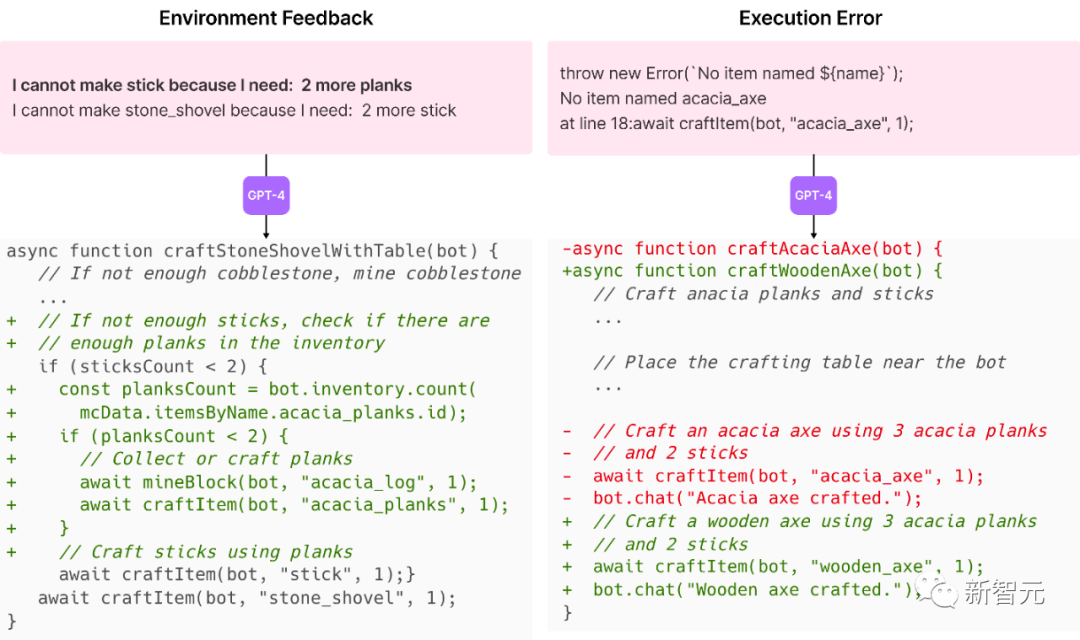

Left: Environment feedback. GPT-4 realizes that it needs 2 more wooden planks before making a stick.

Right: Execution error. GPT-4 realizes that it should craft a wooden axe rather than an acacia axe, because there is no acacia axe in Minecraft.
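The two kinds of feedback in the figure can be illustrated with a small Python analogue. This is a hypothetical sketch, not Voyager's code (Voyager's programs run as JavaScript against Mineflayer): the `RECIPES` table is a simplified subset of vanilla Minecraft recipes with all plank types collapsed into one generic `planks` item, and the message wording is assumed. A missing ingredient yields environment feedback; a request for an item that does not exist yields an error.

```python
# Simplified subset of vanilla Minecraft recipes (ingredient -> count).
# Assumption: all plank types are collapsed into one generic "planks" item.
RECIPES = {
    "stick": {"planks": 2},
    "wooden_axe": {"planks": 3, "stick": 2},
}


def craft_feedback(item: str, inventory: dict[str, int]) -> str:
    """Return a message an LLM could read before revising its program."""
    if item not in RECIPES:
        # Analogue of an execution error: the program asked for a nonexistent item.
        return f"Error: no item named {item}"
    missing = {
        name: need - inventory.get(name, 0)
        for name, need in RECIPES[item].items()
        if inventory.get(name, 0) < need
    }
    if missing:
        # Analogue of environment feedback: the item exists but ingredients are short.
        parts = ", ".join(f"{count} more {name}" for name, count in missing.items())
        return f"I cannot make {item} because I need: {parts}"
    return f"I can make {item}"


print(craft_feedback("stick", {"planks": 0}))
# I cannot make stick because I need: 2 more planks
print(craft_feedback("acacia_axe", {}))
# Error: no item named acacia_axe
```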

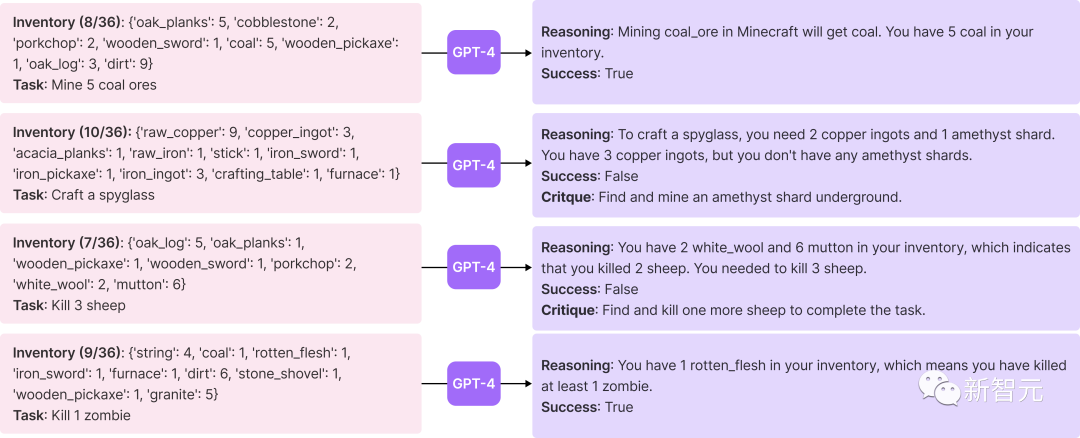

Given the agent's current state and the task, GPT-4 acts as a critic and judges whether the program has completed the task.

Additionally, if the task fails, GPT-4 will provide criticism and suggest how to complete the task.

Self-verification
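The iterative prompting loop described above (write a program, run it, read the feedback, ask a critic, try again) can be sketched in Python. This is a hypothetical illustration, not Voyager's implementation: `generate`, `execute`, and `verify` are assumed stand-ins for the GPT-4 code writer, Mineflayer execution, and the GPT-4 self-verification critic; the `craft('...')` strings are placeholder programs, not real Mineflayer calls; and the four-round cap is an assumption. Nothing is trained by gradient descent: each round only changes the text fed into the next prompt.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Attempt:
    code: str
    env_feedback: str
    error: Optional[str]
    success: bool
    critique: str


def iterative_prompting(
    task: str,
    generate: Callable[[str, str], str],
    execute: Callable[[str], tuple],
    verify: Callable[[str, dict], tuple],
    max_rounds: int = 4,
):
    """Refine a program until the critic approves it or the rounds run out."""
    feedback, history = "", []
    for _ in range(max_rounds):
        code = generate(task, feedback)
        env_feedback, error, state = execute(code)
        # Only ask the critic to check the state if the program ran cleanly.
        success, critique = (False, "") if error else verify(task, state)
        history.append(Attempt(code, env_feedback, error, success, critique))
        if success:
            return True, history
        # Fold environment feedback, errors and critique into the next prompt.
        feedback = "\n".join(s for s in (env_feedback, error, critique) if s)
    return False, history


# Scripted stand-ins for GPT-4 and the game (hypothetical, for illustration).
def fake_generate(task, feedback):
    return "craft('wooden_axe')" if "no item named" in feedback else "craft('acacia_axe')"


def fake_execute(code):
    if "acacia_axe" in code:
        return "", "Error: no item named acacia_axe", {}
    return "Crafted wooden_axe", None, {"inventory": {"wooden_axe": 1}}


def fake_verify(task, state):
    ok = state.get("inventory", {}).get("wooden_axe", 0) >= 1
    return ok, "" if ok else "Craft a wooden axe first."


ok, attempts = iterative_prompting(
    "craft a wooden axe", fake_generate, fake_execute, fake_verify
)
print(ok, len(attempts))  # True 2
```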

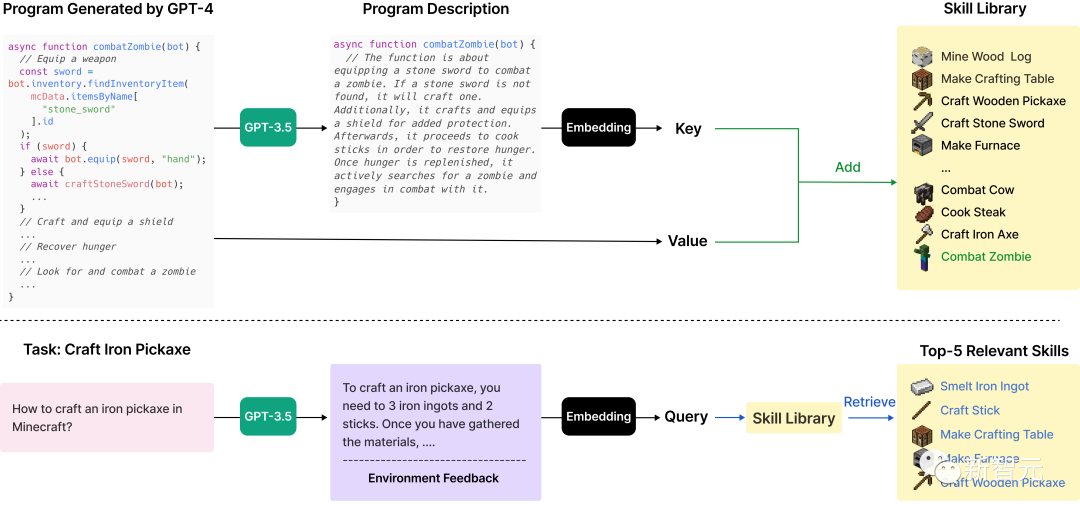

Secondly, Voyager gradually builds a skill library by storing successful programs in a vector database. Each program can be retrieved through the embedding of its documentation string.

Complex skills are synthesized by combining simple skills, rapidly increasing Voyager's capabilities over time and mitigating catastrophic forgetting.

Top: Adding skills. Each skill is indexed by the embedding of its description and can be retrieved in similar situations in the future.

Bottom: Retrieving skills. When faced with a new task proposed by the automatic curriculum, a query is made to identify the top 5 relevant skills.
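The skill library's add-and-retrieve cycle can be sketched as follows. The article describes indexing each program by the embedding of its description and retrieving the top 5 matches; this minimal sketch swaps in a toy bag-of-words vector with cosine similarity, and an in-memory list, in place of a real embedding model and vector database. The skill names and descriptions are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SkillLibrary:
    """In-memory stand-in for a vector database of verified programs."""

    def __init__(self):
        self.skills = []  # (name, description, code, vector)

    def add(self, name: str, description: str, code: str) -> None:
        # Index each successful program by the embedding of its description.
        self.skills.append((name, description, code, embed(description)))

    def retrieve(self, query: str, k: int = 5) -> list:
        """Return names of the k skills whose descriptions best match the query."""
        q = embed(query)
        ranked = sorted(self.skills, key=lambda s: cosine(q, s[3]), reverse=True)
        return [name for name, *_ in ranked[:k]]


lib = SkillLibrary()
lib.add("mineWoodLog", "Mine a wood log from the nearest tree", "// program body omitted")
lib.add("craftPlanks", "Craft wooden planks from a wood log", "// program body omitted")
lib.add("smeltIronIngot", "Smelt raw iron into an iron ingot in a furnace", "// program body omitted")
lib.add("killZombie", "Fight and kill a nearby zombie", "// program body omitted")
print(lib.retrieve("craft wooden planks", k=1))  # ['craftPlanks']
```

Replacing `embed` with a real embedding model and `self.skills` with a vector database should leave the `add`/`retrieve` interface unchanged; mainly the quality of the similarity scores would improve.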

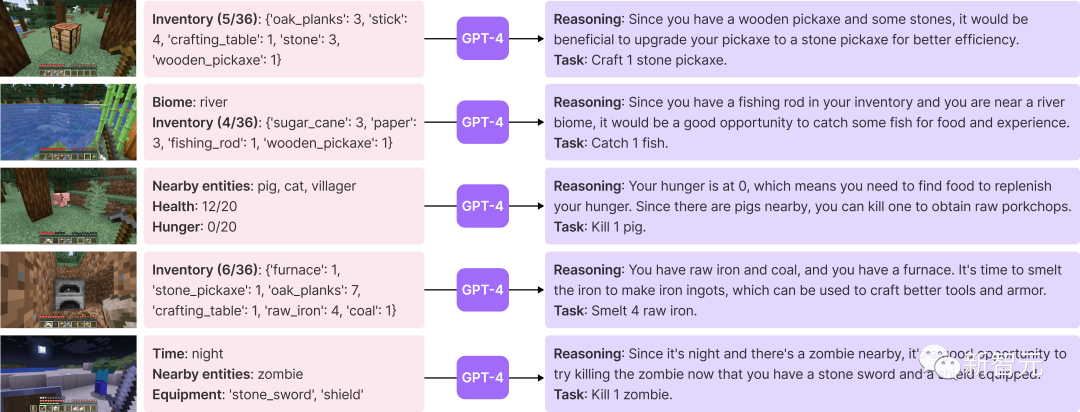

Thirdly, the automatic curriculum will propose appropriate exploration tasks based on the agent's current skill level and world state.

For example, if the agent finds itself in a desert rather than a forest, the curriculum will have it collect sand and cacti instead of iron. The curriculum is generated by GPT-4 based on the goal of "discovering as much diversity as possible."

Automatic curriculum
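The automatic curriculum can be sketched in the same spirit. In Voyager, GPT-4 proposes the next task from the agent's state; here a rule-based function stands in for the LLM so the example runs offline. The `CANDIDATE_TASKS` table, the `AgentState` fields, and the prompt format are all assumptions made for illustration; `build_curriculum_prompt` only shows the kind of context a curriculum LLM might receive, including the exploration goal quoted in the article.

```python
from dataclasses import dataclass, field

# Hypothetical per-biome task ideas; in Voyager, GPT-4 proposes tasks instead.
CANDIDATE_TASKS = {
    "desert": ["collect sand", "collect cactus", "craft sandstone"],
    "forest": ["mine wood log", "craft crafting table", "mine iron ore"],
}


@dataclass
class AgentState:
    biome: str
    inventory: dict = field(default_factory=dict)
    completed: set = field(default_factory=set)
    failed: set = field(default_factory=set)


def build_curriculum_prompt(state: AgentState) -> str:
    """Context a curriculum LLM might receive (the format is an assumption)."""
    inv = ", ".join(f"{k}: {v}" for k, v in sorted(state.inventory.items())) or "empty"
    return "\n".join([
        "Goal: discover as many diverse things as possible.",
        f"Biome: {state.biome}",
        f"Inventory: {inv}",
        f"Completed tasks: {', '.join(sorted(state.completed)) or 'none'}",
        f"Failed tasks: {', '.join(sorted(state.failed)) or 'none'}",
        "Propose the next task.",
    ])


def propose_next_task(state: AgentState) -> str:
    """Rule-based stand-in for the LLM: first new task suited to the biome."""
    for task in CANDIDATE_TASKS.get(state.biome, []):
        # Skip tasks already done or just failed; a fuller version could retry failures later.
        if task not in state.completed and task not in state.failed:
            return task
    return "explore a new area"


state = AgentState(biome="desert")
print(propose_next_task(state))  # collect sand
state.completed.add("collect sand")
print(propose_next_task(state))  # collect cactus
```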

Voyager is the first LLM-driven embodied agent capable of lifelong learning, and the similarities between its training process and Kanzi's can offer us many insights.

Reference: Twitter - Dr. Jim Fan
