Key Points

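- 1 With Microsoft and Google having moved first in generative AI, Amazon is racing to catch up. The company is reportedly designing two chips in secret in Austin, Texas, for training and accelerating generative AI.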

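- 2 The two custom chips, Inferentia and Trainium, give customers of Amazon Web Services (AWS) an alternative to Nvidia GPUs, which are increasingly hard to procure, for training large language models.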

- 3 AWS's dominance in cloud computing is a major advantage for Amazon. AWS is the world's largest cloud provider, with a 40% market share in 2022.

- 4 Analysts believe that, in the long run, Amazon's custom chips could give it an edge in generative AI.

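In an unremarkable office building in Austin, Texas, a handful of Amazon employees work in two small rooms designing two kinds of microchips for training and accelerating generative AI. The two custom chips, code-named Inferentia and Trainium, give AWS customers an alternative to Nvidia graphics processing units (GPUs) for training large language models. Nvidia's GPUs are becoming both harder to procure and more expensive.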

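AWS CEO Adam Selipsky said in an interview in June: "The entire world would like more chips for generative AI, whether those are GPUs or the chips Amazon is designing itself. I think we are in a better position than any other company in the world to supply the capacity that everyone wants."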

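Other companies, however, have moved faster, invested more, and gotten a lift from the AI boom. When OpenAI launched ChatGPT last November, Microsoft drew widespread attention for hosting the viral AI chatbot. Microsoft has reportedly invested $13 billion in OpenAI. In February of this year, it moved quickly to add generative AI models to its own products and integrated them into Bing.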

That same month, Google launched its own large language model, Bard, and later invested $300 million in OpenAI rival Anthropic.

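It was not until April of this year that Amazon announced its own large language models, Titan, along with a service called Bedrock that helps developers enhance software with generative AI.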

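Chirag Dekate, a vice president and analyst at market research firm Gartner, said: "Amazon is not used to chasing markets. Amazon is used to creating markets. I think that, for the first time in a long time, they are finding themselves on the back foot, and they are working to catch up."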

Meta also recently released its own large language model, Llama 2. The open-source ChatGPT rival is now available for testing on Microsoft's Azure public cloud.

Chips as the "true differentiation"

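In the long run, Dekate said, Amazon's custom chips could give it an edge in generative AI. "I think the true differentiation is the technical capabilities they bring to bear," he explained, "because Microsoft does not have Trainium or Inferentia."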

Figure: AWS began producing custom silicon in 2013 with Nitro, which is now the highest-volume AWS chip

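AWS quietly began producing custom silicon back in 2013 with a piece of specialized hardware called Nitro. Amazon says Nitro is now the highest-volume AWS chip: there is at least one in every AWS server, and more than 20 million are in use.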

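In 2015, Amazon acquired Israeli chip startup Annapurna Labs. Then, in 2018, it launched Graviton, a server chip built on the architecture of British chip designer Arm and a rival to x86 CPUs from giants such as AMD and Intel.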

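Stacy Rasgon, a senior analyst at Bernstein Research, said: "Arm chips may account for as much as 10% of total server sales, and a good chunk of that is going to be Amazon. So on the CPU side, they have done quite a good job."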

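Also in 2018, Amazon launched its AI-focused chips. That was two years after Google announced its first Tensor Processing Unit (TPU). Microsoft has yet to announce Athena, the AI chip it is developing in partnership with AMD.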

Amazon runs a chip lab in Austin, Texas, where it develops and tests Trainium and Inferentia. Matt Wood, the company's vice president of product, explained what the two chips are for.

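"Machine learning breaks down into two different stages," he said. "You train the machine learning models, and then you run inference against those trained models. Trainium delivers about a 50% improvement in price performance relative to any other way of training machine learning models on AWS."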

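Trainium first came to market in 2021, following the 2019 release of Inferentia, which is now in its second generation. Wood said Inferentia lets customers "deliver very low-cost, high-throughput, low-latency machine learning inference, which is all the predictions you get when you type a prompt into a generative AI model. That is where all of it gets processed to give you the response."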

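For now, however, Nvidia's GPUs remain the undisputed king when it comes to training models. In July, AWS launched new AI acceleration hardware based on Nvidia's H100.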

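"Over the past 15 years, Nvidia has built up a huge software ecosystem around its chips that no other company has," Rasgon said. "Right now, the biggest winner in AI is Nvidia."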

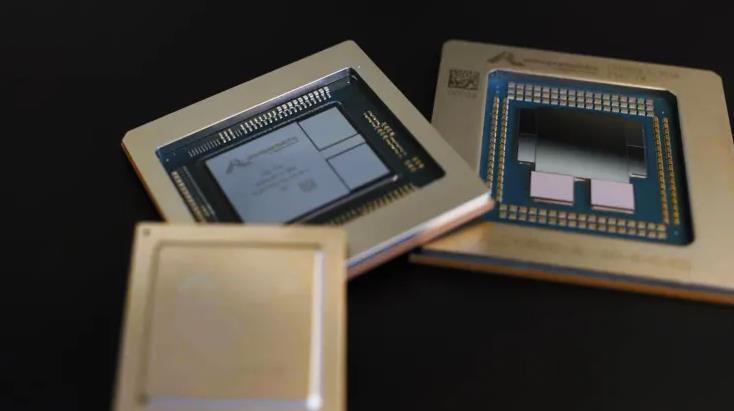

Figure: Amazon's custom chips, from left to right: Inferentia, Trainium, and Graviton

Amazon's cloud advantage

AWS's dominance in cloud computing, however, is a major advantage for Amazon.

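"Amazon does not need to win headlines," Gartner's Dekate said. "It already has a very strong cloud install base. All it needs to do is figure out how to enable its existing customers to expand into creating value with generative AI."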

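When choosing among Amazon, Google, and Microsoft for generative AI, millions of AWS customers may be drawn to Amazon because they already know it and already run other applications and store their data there.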

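Mai-Lan Tomsen Bukovec, vice president of technology at AWS, explained: "It is a question of velocity. How quickly these companies can develop generative AI applications depends on starting with the data they already have in AWS and using the compute and machine learning tools we provide."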

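According to Gartner, AWS is the world's largest cloud provider, with a 40% market share in 2022. Although its operating income has fallen year over year for three consecutive quarters, AWS still accounted for 70% of Amazon's $7.7 billion in operating profit in the second quarter. Historically, AWS's operating margins have been far higher than Google Cloud's.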

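AWS also has a growing portfolio of developer tools focused on generative AI. Swami Sivasubramanian, AWS vice president for database, analytics, and machine learning, said: "Let's rewind the clock, even to before ChatGPT. It is not as if we suddenly hurried and came up with a plan after that happened, because you cannot engineer a new chip that quickly, let alone build a foundational service in two to three months."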

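Bedrock gives AWS customers access to large language models built by Anthropic, Stability AI, AI21 Labs, and Amazon's own Titan. "We don't believe that one model is going to rule the world," Sivasubramanian said. "We want our customers to have state-of-the-art models from multiple providers, because they are going to pick the right tool for the right job."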

Figure: Amazon employees developing custom AI chips at the AWS chip lab in Austin, Texas

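One of Amazon's newest AI offerings is AWS HealthScribe, a service launched in July that helps doctors draft patient visit summaries using generative AI. Amazon also has SageMaker, a machine learning hub that offers algorithms, models, and related services.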

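Another major tool is CodeWhisperer, which Amazon says has enabled developers to complete tasks 57% faster on average. Last year, Microsoft likewise reported that its coding tool, GitHub Copilot, boosted productivity.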

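In June, AWS announced a $100 million generative AI innovation center. "We have many customers who want generative AI technology but do not necessarily know what it means for them in the context of their own businesses," AWS CEO Selipsky said. "So we are going to bring in solutions architects, engineers, strategists, and data scientists to work with them one on one."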

CEO Jassy personally leads the large language model effort

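Although AWS has so far focused mainly on developer tools rather than on building a ChatGPT competitor, a recently leaked internal email shows that Amazon CEO Andy Jassy is directly overseeing a new central team that is also building expansive large language models.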

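On the second-quarter earnings call, Jassy said that "a very significant amount" of AWS business is now driven by AI and the more than 20 machine learning services it offers. Customers include Philips, 3M, Old Mutual, and HSBC.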

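The explosive growth of AI has brought a wave of security concerns, with companies worried that employees will feed proprietary information into the training data used by public large language models.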

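"I can't tell you how many of the Fortune 500 companies I have talked to have banned ChatGPT," AWS CEO Selipsky said. "So with our approach to generative AI and our Bedrock service, anything you do and any model you use through Bedrock will be in your own isolated virtual private cloud environment. It will be encrypted, and it will have the same AWS access controls."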

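For now, Amazon is only accelerating its push into generative AI, saying that "over 100,000" customers currently use machine learning on AWS. That is a small fraction of AWS's millions of customers, but analysts say this could change.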

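"We are not seeing enterprises say, 'Oh, wait a minute, Microsoft is ahead in generative AI, so let's go out, change our infrastructure strategy, and migrate everything to Microsoft,'" Gartner's Dekate said. "If you are already an Amazon customer, chances are you are going to explore the Amazon ecosystem quite extensively." (By Jin Lu)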
