Once AI pioneers, now the "counter-AI" vanguard.

Author: Moonshot

In a 1947 lecture, Alan Turing said, "What we want is a machine that can learn from experience."

Seventy-eight years later, the Turing Award, named after Turing and often called the "Nobel Prize of Computing," went to two scientists who devoted their careers to solving Turing's problem.

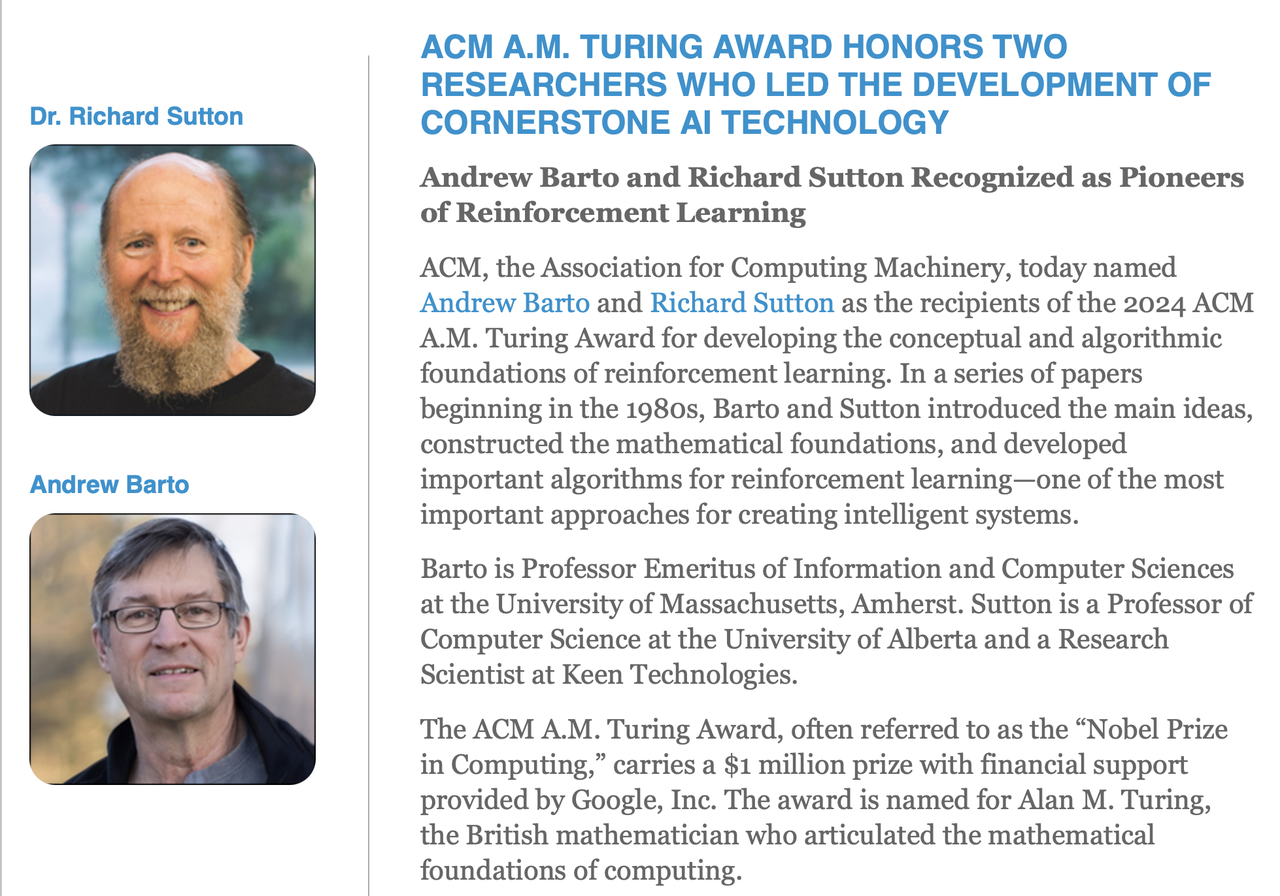

Andrew Barto and Richard Sutton jointly received the 2024 Turing Award. A mentor and his former student, nine years apart in age, they are foundational figures behind the technologies that power AlphaGo and ChatGPT, and pioneers of machine learning.

Turing Award winners Andrew Barto and Richard Sutton

Image source: Turing Award official website

Jeff Dean, Google's Chief Scientist, said in ACM's announcement of the award: "The reinforcement learning techniques pioneered by Barto and Sutton directly answer Turing's question. Their work has been key to AI advancements over the past few decades. The tools they developed remain core pillars of the AI boom… Google is honored to sponsor the ACM A.M. Turing Award."

Google is the sole sponsor of the $1 million Turing Award.

However, once in the spotlight, the two laureates used their acceptance remarks to criticize the big AI companies: today's AI firms, they said, are "motivated by commercial incentives" rather than focused on research, and are building "an untested bridge" for society, then letting people cross it to test whether it holds.

Coincidentally, the last time the Turing Award was given to scientists in the field of artificial intelligence was in 2018, when Yoshua Bengio, Geoffrey Hinton, and Yann LeCun were awarded for their contributions to deep learning.

2018 Turing Award winners

Image source: eurekalert

Among them, Yoshua Bengio and Geoffrey Hinton (the latter also a 2024 Nobel laureate in Physics) have repeatedly urged society and the scientific community, throughout the current AI wave, to guard against the misuse of artificial intelligence by big companies.

Hinton even resigned from Google so that he could "speak freely." Sutton, one of this year's laureates, was himself a research scientist at DeepMind from 2017 to 2023.

As the highest honor in computing keeps going to the founders of core AI technologies, a thought-provoking pattern emerges:

Why do these leading scientists, once in the spotlight, so often turn around and sound the alarm about AI?

The "Bridge Builders" of Artificial Intelligence

If Alan Turing pointed the way for artificial intelligence, then Andrew Barto and Richard Sutton are the "bridge builders" along that road.

Now, with AI racing ahead and accolades in hand, they are asking whether the bridges they built can carry humanity safely across.

Perhaps the answer lies in their half-century academic careers: only by tracing how they built "machine learning" can we understand why they are wary of technology spinning out of control.

Image source: Carnegie Mellon University

In 1950, Alan Turing posed a philosophical and technical question in his famous paper "Computing Machinery and Intelligence":

"Can machines think?"

To address it, Turing proposed the "imitation game," later known as the "Turing Test."

Turing also proposed that machines could acquire intelligence through learning rather than relying solely on pre-programming. He envisioned a "Child Machine" that would learn gradually, as a child does, through training and experience.

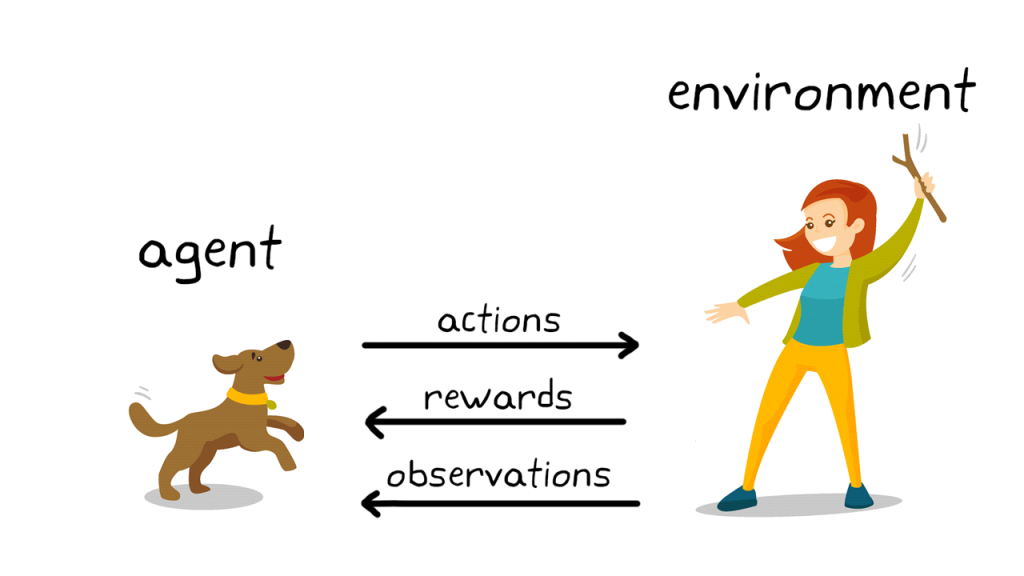

The core goal of artificial intelligence is to build agents that perceive their environment and act well, and one measure of intelligence is an agent's ability to judge that "some actions are better than others."

The purpose of machine learning is to give machines feedback on their actions so that they can learn autonomously from that experience. In other words, Turing envisioned machines that learn through rewards and punishments, much as Pavlov trained his dogs.
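The reward-and-punishment idea can be made concrete with the simplest reinforcement-learning setting, a multi-armed bandit. The sketch below is illustrative only and is not taken from Turing or the laureates: an epsilon-greedy agent, with hypothetical hidden payoffs, an assumed exploration rate of 0.1, and an arbitrary step count, learns which of three actions pays best purely from the rewards and penalties it receives.

```python
import random

def run_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent that learns the best action from reward feedback alone.

    true_means are hypothetical hidden payoffs; the agent never sees them directly.
    """
    rng = random.Random(seed)
    n_actions = len(true_means)
    estimates = [0.0] * n_actions  # the agent's current guess of each action's value
    counts = [0] * n_actions       # how often each action has been tried
    for _ in range(steps):
        # Explore with probability epsilon, otherwise exploit the best-looking action.
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])
        # Noisy feedback: positive values act as reward, negative values as punishment.
        reward = rng.gauss(true_means[action], 1.0)
        counts[action] += 1
        # Incremental sample average: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = run_bandit([-1.0, 0.2, 1.5])
best = max(range(len(counts)), key=lambda a: counts[a])
print("most-chosen action:", best)
```

Nobody tells the agent which action is correct; it only sees the consequences of what it tried, and the occasional random exploration is what lets it discover the better option.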

Getting stronger by losing game after game is also a form of "reinforcement learning."

Image source: zequance.ai

Thirty years later, the path toward machine learning that Turing had opened was carried forward by a mentor-mentee pair through reinforcement learning (RL).

In 1977, Andrew Barto, drawing on psychology and neuroscience, began exploring a new theory of intelligence: neurons behave like "hedonists." Each of the billions of neurons in the human brain tries to maximize pleasure (reward) and minimize pain (punishment). Rather than mechanically receiving and relaying signals, a neuron whose activity pattern leads to positive feedback tends to repeat that pattern, and this is what drives learning.
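The "hedonistic neuron" idea described above can be illustrated with a single stochastic unit that fires with some probability and strengthens whatever it just did whenever the outcome is rewarding. This is a hedged, modern stand-in: the update is a REINFORCE-style rule (Williams, 1992), not the model Barto actually studied in 1977, and the two stimuli "A" and "B" and the reward scheme are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_hedonistic_unit(trials=3000, lr=0.1, seed=1):
    """A single stochastic 'neuron' that repeats firing patterns that were rewarded."""
    rng = random.Random(seed)
    weights = {"A": 0.0, "B": 0.0}  # one weight per stimulus; 0.0 means a 50% firing chance
    for _ in range(trials):
        stimulus = rng.choice(["A", "B"])
        p_fire = sigmoid(weights[stimulus])
        fired = 1 if rng.random() < p_fire else 0
        # Hypothetical environment: firing on A brings "pleasure", firing on B brings "pain".
        if stimulus == "A":
            reward = 1.0 if fired else -1.0
        else:
            reward = -1.0 if fired else 1.0
        # REINFORCE-style update: make the choice just taken more likely if it was
        # rewarded and less likely if it was punished.
        weights[stimulus] += lr * reward * (fired - p_fire)
    return {s: sigmoid(w) for s, w in weights.items()}


probs = train_hedonistic_unit()
print(probs)  # firing probability per stimulus after training
```

No one wires the unit to respond to "A"; its firing habits are shaped entirely by which outcomes felt good or bad.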

In the 1980s, Barto and his doctoral student Richard Sutton set out to apply this neuron theory (trial and error, adjusting connections based on feedback to find the best pattern of behavior) to artificial intelligence, and reinforcement learning was born.

"Reinforcement Learning: An Introduction" has become a classic textbook, cited nearly 80,000 times.

Image source: IEEE

Building on the mathematics of Markov decision processes, the pair developed many of the core algorithms of reinforcement learning and systematically laid out its theoretical framework. Their textbook "Reinforcement Learning: An Introduction" has brought tens of thousands of researchers into the field, and they are widely regarded as the fathers of reinforcement learning.
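To make "Markov decision process" less abstract, here is a minimal sketch of value iteration, one of the standard dynamic-programming methods covered in their textbook, run on a hypothetical four-state corridor. The states, the reward of 1 for reaching the goal, and the discount factor gamma = 0.9 are all illustrative assumptions. Each sweep applies the Bellman optimality backup, replacing a state's value with the best immediate reward plus discounted future value over the available actions.

```python
def value_iteration(n_states=4, gamma=0.9, tol=1e-10):
    """Value iteration on a toy corridor MDP: states 0..n_states-1, goal at the right end."""
    goal = n_states - 1
    moves = {"left": -1, "right": +1}

    def step(state, action):
        # Deterministic transition (clamped at the walls); entering the goal pays 1, else 0.
        nxt = min(max(state + moves[action], 0), goal)
        return nxt, (1.0 if nxt == goal else 0.0)

    def q_value(state, action, values):
        # One-step lookahead: immediate reward plus discounted value of the next state.
        nxt, reward = step(state, action)
        return reward + gamma * values[nxt]

    values = [0.0] * n_states  # the goal is terminal, so its value stays 0
    while True:
        delta = 0.0
        for s in range(goal):  # sweep over the non-terminal states
            # Bellman optimality backup: keep the best action's lookahead value.
            best = max(q_value(s, a, values) for a in moves)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break

    # Greedy policy with respect to the converged values.
    policy = {s: max(moves, key=lambda a: q_value(s, a, values)) for s in range(goal)}
    return values, policy


values, policy = value_iteration()
print(values)  # roughly [0.81, 0.9, 1.0, 0.0]
print(policy)  # "right" in every non-terminal state
```

Value iteration assumes the transition and reward rules are known in advance; much of reinforcement learning is about getting the same kind of answer when they are not.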

Their research sought machine learning methods that are efficient and accurate and that learn to choose optimal, reward-maximizing actions.

The "Divine Hand" of Reinforcement Learning

If traditional machine learning is "cramming," then reinforcement learning is "free-range learning."

Traditional machine learning feeds a model large amounts of labeled data to establish a fixed mapping between inputs and outputs. The classic scenario is showing a computer a pile of cat and dog photos, each labeled as one or the other; given enough images, the computer learns to tell cats from dogs.

Reinforcement learning, by contrast, lets a machine gradually adjust its behavior to improve results through trial and error and a system of rewards and penalties, without explicit guidance. Think of a robot learning to walk: it doesn't need humans constantly telling it "this step is right, that step is wrong." It tries, falls, adjusts, and eventually learns to walk, perhaps even developing a gait of its own.
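The try, fall, adjust loop can be sketched with tabular Q-learning (Watkins, 1989), a classic algorithm covered in Sutton and Barto's textbook, though not one they invented. In this hypothetical six-state corridor the agent is never told the rules: it only observes where each step lands and whether a reward arrives. The corridor, the learning rate alpha = 0.5, gamma = 0.9, and epsilon = 0.1 are illustrative assumptions.

```python
import random

def q_learning(n_states=6, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor: start at state 0, reward 1 for reaching the end."""
    rng = random.Random(seed)
    goal = n_states - 1
    moves = (-1, +1)  # action 0 = step back, action 1 = step forward
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action], all zeros at first

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Trial and error: explore at random (or when tied), otherwise act greedily.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt = min(max(state + moves[action], 0), goal)
            reward = 1.0 if nxt == goal else 0.0
            # Q-learning target: reward now plus discounted value of the best next action.
            target = reward if nxt == goal else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if state == goal:
                break
    return q


q = q_learning()
policy = ["forward" if q[s][1] > q[s][0] else "back" for s in range(len(q) - 1)]
print(policy)
```

Unlike the labeled cat-and-dog photos, no example here says which move is right; the preference for stepping forward emerges from accumulated rewards alone.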

Clearly, the principles of reinforcement learning are closer to human intelligence, just as every toddler learns to walk through falling, learns to grasp through exploration, and learns language through babbling.

The popular "spinning kick robot" is also trained through reinforcement learning.

Image source: Unitree Robotics

The "highlight moment" of reinforcement learning was AlphaGo's "divine hand" in 2016. In the second game of its match against Lee Sedol, AlphaGo played a startling move (Move 37) that helped it go on to win the game.

Top Go players and commentators had not anticipated the move, which looked "nonsensical" by the standards of human experience. After the match, Lee Sedol admitted he had never considered it.

AlphaGo's "divine hand" was not memorized from a game record. It was discovered through countless games of self-play, trial and error, long-term planning, and strategy optimization, which is the essence of reinforcement learning.

Lee Sedol, unsettled by AlphaGo's "divine hand."

Image source: AP

Reinforcement learning has even influenced human intelligence: after AlphaGo revealed its "divine hand," players began studying AI's Go strategies. Scientists are also using reinforcement learning algorithms and principles to understand how the human brain learns. One line of Barto and Sutton's work produced a computational model that helps explain the role of dopamine in human decision-making and learning.
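Assuming the dopamine model mentioned above refers to temporal-difference (TD) learning, whose prediction error neuroscientists have compared with the firing of dopamine neurons, the key quantity can be sketched as follows. The trial structure (a cue, a five-step delay, then a reward of 1), the learning rate of 0.2, and gamma = 1 are illustrative assumptions. The TD error is the gap between what happened (reward plus the next prediction) and what was predicted.

```python
def td_trial(values, alpha=0.2, gamma=1.0, learn=True):
    """Run one cue -> delay -> reward trial with TD(0); return the TD error at each step."""
    # Cue onset: the moment before the cue is treated as unpredictive (value 0),
    # so the error here is just the discounted value of the first cue state.
    errors = [gamma * values[0]]
    for t in range(len(values)):
        last = t == len(values) - 1
        reward = 1.0 if last else 0.0                # reward arrives only at the end
        next_value = 0.0 if last else values[t + 1]  # the trial ends after the reward
        delta = reward + gamma * next_value - values[t]  # TD (reward-prediction) error
        errors.append(delta)
        if learn:
            values[t] += alpha * delta               # move the prediction toward the target
    return errors


values = [0.0] * 5  # predicted future reward at each time step after the cue
before = td_trial(values, learn=False)
for _ in range(300):
    td_trial(values)
after = td_trial(values, learn=False)
print([round(d, 2) for d in before])
print([round(d, 2) for d in after])
```

Before training, the error spikes only when the reward arrives; after training, it appears at the cue and is near zero at reward time. This shift is the signature that researchers have compared with recordings of dopamine neurons.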

Moreover, reinforcement learning excels at handling complex rules and dynamic environments, finding optimal solutions in areas such as Go, autonomous driving, robot control, and conversation with humans, whose intentions are often ambiguous.

These are currently the most cutting-edge and popular areas of AI application, especially large language models: most leading large language models are trained with RLHF (Reinforcement Learning from Human Feedback), in which humans rate or rank the model's responses and the model is then tuned toward the responses people prefer.
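RLHF pipelines commonly start by fitting a reward model to pairwise human preferences with a Bradley-Terry-style loss, then optimize the language model against that learned reward with an RL algorithm such as PPO. The sketch below covers only the first stage and is heavily simplified: a linear reward over three hypothetical features (helpfulness, factual errors, rudeness) stands in for a neural network, and the preference pairs are invented for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def reward(w, features):
    """Linear reward model r(x) = w . x (a stand-in for a neural network)."""
    return sum(wi * xi for wi, xi in zip(w, features))

def train_reward_model(pairs, n_features, lr=0.5, epochs=200):
    """Fit w from preference pairs (chosen, rejected): a rater preferred the first response.

    Per-pair loss is the Bradley-Terry negative log-likelihood
    -log sigmoid(r(chosen) - r(rejected)), minimized by plain gradient descent.
    """
    w = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # d(-log sigmoid(margin))/dw = -(1 - sigmoid(margin)) * (chosen - rejected)
            scale = 1.0 - sigmoid(margin)
            w = [wi + lr * scale * (c - r) for wi, c, r in zip(w, chosen, rejected)]
    return w


# Hypothetical features: [helpfulness, factual_errors, rudeness]
pairs = [
    ([0.9, 0.0, 0.0], [0.4, 0.0, 0.0]),  # the more helpful answer was preferred
    ([0.5, 0.0, 0.0], [0.5, 1.0, 0.0]),  # the answer without factual errors was preferred
    ([0.6, 0.0, 0.0], [0.8, 0.0, 1.0]),  # polite beat slightly-more-helpful-but-rude
]
w = train_reward_model(pairs, n_features=3)
for chosen, rejected in pairs:
    print(round(reward(w, chosen), 2), ">", round(reward(w, rejected), 2))
```

The learned reward is only as good as the preferences it was fitted to, which is one reason critics worry when such systems are shipped to millions of users and refined on the fly.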

But this is precisely Barto's concern: having built the bridge, big companies test its safety by having people walk back and forth across it.

"It is not responsible to push software directly to millions of users without any safeguards," Barto said in an interview after receiving the award.

"Technological development should be accompanied by control and avoidance of potential negative impacts, but I do not see these AI companies genuinely doing that," he added.

What Are the Top AI Figures Worried About?

The debate over AI's dangers never ends, because scientists fear that the future they helped create may spiral out of control.

Their acceptance remarks contain no harsh criticism of today's AI technology itself, but rather a sense of dissatisfaction with AI companies.

In interviews, both warned that AI development now depends on big companies racing to release powerful but error-prone models, using them to raise huge sums, and then pouring billions of dollars more into an arms race for chips and data.

Major investment banks are re-evaluating the AI industry.

Image source: Goldman Sachs

Indeed, according to Deutsche Bank research, tech giants have invested roughly $340 billion in AI, more than Greece's annual GDP. Industry leader OpenAI is valued at $260 billion and is preparing a new $40 billion funding round.

In fact, many AI experts share the views of Barto and Sutton.

Earlier, former Microsoft executive Steven Sinofsky said that the AI industry is caught in a scaling dilemma: it relies on burning money to make technical progress, contrary to the historical pattern in which the cost of a technology falls over time rather than rises.

On March 7, former Google CEO Eric Schmidt, Scale AI founder Alexandr Wang, and Center for AI Safety director Dan Hendrycks co-authored a cautionary paper.

The three argue that today's race at the frontier of artificial intelligence resembles the nuclear arms race that produced the Manhattan Project, with AI companies quietly running "Manhattan Projects" of their own. Over the past decade, they note, investment in AI has doubled every year, and without regulatory intervention AI could become the most destabilizing technology since the atomic bomb.

"Superintelligence Strategy" and co-authors

Image source: nationalsecurity.ai

Yoshua Bengio, who shared the 2018 Turing Award for deep learning, also posted a lengthy warning on his blog: with trillions of dollars of value in AI now up for grabs, he wrote, the race to capture it could severely disrupt the current world order.

Many people with technical backgrounds believe that the AI industry has drifted away from pursuing the technology, probing the nature of intelligence, and guarding against abuse, toward a capital-driven model focused on spending money to stockpile chips.

"Building massive data centers, charging users while providing them with potentially unsafe software is not a motive I agree with," Barto said in an interview after receiving the award.

The first edition of the "International Scientific Report on the Safety of Advanced AI," co-authored by 75 AI experts from 30 countries, states: "Methods for managing the risks of general-purpose AI often rest on the assumption that AI developers and policymakers can accurately assess the capabilities and potential impacts of general-purpose AI models and systems. However, scientific understanding of the internal workings, capabilities, and societal impacts of general-purpose AI is actually very limited."

Yoshua Bengio's warning article

Image source: Yoshua Bengio

It is not hard to see that today's "AI threat" narrative has shifted its focus from the technology to the big companies.

Experts are warning the big companies: you burn money, pile up resources, and race to scale up parameters, but do you truly understand the products you are building? This is also why Barto and Sutton speak of "building bridges": the technology belongs to all of humanity, but the capital belongs only to big companies.

Moreover, Barto and Sutton have always worked in reinforcement learning, whose principles are closer to human intelligence and which has a "black box" quality: especially in deep reinforcement learning, an AI's behavior can become complex and hard to explain.

This is also what worries scientists: having helped artificial intelligence grow and watched it happen, they find it hard to interpret its intentions.

The Turing Award winners who pioneered deep learning and reinforcement learning are not worried about the development of AGI (Artificial General Intelligence) as such, but about the arms race among big companies, which could trigger an "intelligence explosion" in AGI and inadvertently create ASI (Artificial Superintelligence). The distinction between the two is not only a technical question but one that bears on the future of human civilization.

ASI, which surpasses human intelligence, will possess an amount of information, decision-making speed, and self-evolution capabilities far beyond human comprehension. If ASI is not designed and governed with extreme caution, it could become the last and most uncontrollable technological singularity in human history.

In the current AI frenzy, these scientists may be the most qualified to "pour cold water" on it. After all, they began researching artificial intelligence fifty years ago, when computers were still room-sized machines. Having shaped the present from the past, they have earned the standing to question the future.

Will AI leaders face an Oppenheimer-like fate?

Image source: The Economist

In a February interview with The Economist, the CEOs of DeepMind and Anthropic said that the worry of becoming the next Oppenheimer keeps them up at night.

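Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page infringes your rights, please send proof of the relevant rights and proof of identity to support@aicoin.com, and the platform's staff will look into it.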