DeepSeek's newly open-sourced full-stack communication library, DeepEP, eases practitioners' anxiety over compute by making data transfer between GPUs far more efficient.

Author: Liang Siqi

Image source: Generated by Wujie AI

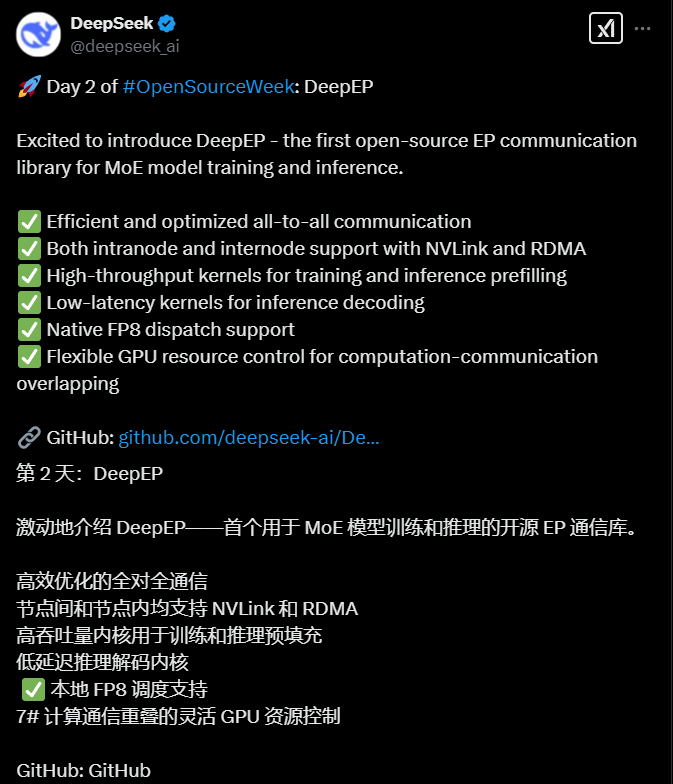

On February 25, DeepSeek, continuing its run of open-source giveaways, dropped a bombshell: DeepEP, billed as the world's first full-stack communication library for MoE (mixture-of-experts) models. Because it speaks directly to the industry's anxiety over compute, the repository quickly gathered 1,500 stars on GitHub (stars are GitHub's equivalent of bookmarks), a sign of how much of a stir it caused in the community.

Many are curious about what DeepEP actually does. Imagine a delivery hub during the Double Eleven shopping festival: 2,048 couriers (GPUs) frantically moving packages (AI data) among 200 warehouses (servers). A traditional transport system has the couriers making deliveries on tricycles; DeepEP gives everyone a "maglev + quantum teleportation" setup, so information moves quickly and reliably.

Feature One: Directly Changing Transport Rules

During NVIDIA's conference call on August 29, 2024, Jensen Huang specifically emphasized the importance of NVLink (an NVIDIA technology that connects GPUs to each other directly, with bidirectional bandwidth of up to 1.8 TB/s) for low latency, high throughput, and large language models, and called it one of the key technologies driving the development of large models.

This highly praised NVLink technology has now been taken to new heights by a Chinese team. The brilliance of DeepEP lies in how it optimizes for NVLink: among couriers in the same warehouse, transport over maglev tracks reaches up to 158 containers per second (158 GB/s), shrinking the trip from Beijing to Shanghai to the time it takes to sip water.

The second piece of "black technology" is a low-latency kernel built on RDMA (remote direct memory access). Picture goods being "quantum teleported" directly between warehouses in different cities: each plane (network card) carries 47 containers per second (47 GB/s), and the planes load cargo while in flight. In other words, computation and communication overlap, so neither side sits idle waiting for the other.
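To make "loading cargo while flying" concrete, here is a minimal sketch of overlapping communication with computation. It is an analogy built on CPU threads and `time.sleep`, not DeepEP's actual mechanism; the names `fake_transfer`, `fake_compute`, and `pipelined` are hypothetical and exist only for this illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_transfer(chunk, delay=0.01):
    """Stand-in for a network transfer (assumption: modeled as a sleep)."""
    time.sleep(delay)
    return chunk


def fake_compute(chunk, delay=0.01):
    """Stand-in for expert computation on a chunk that has already arrived."""
    time.sleep(delay)
    return [x * 2 for x in chunk]


def pipelined(chunks):
    """Start the transfer of the next chunk before computing on the current one."""
    if not chunks:
        return []
    results = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(fake_transfer, chunks[0])
        for next_chunk in chunks[1:]:
            arrived = pending.result()                        # wait for the current chunk
            pending = pool.submit(fake_transfer, next_chunk)  # next transfer starts now
            results.append(fake_compute(arrived))             # compute runs meanwhile
        results.append(fake_compute(pending.result()))
    return results


output = pipelined([[1, 2], [3], [4, 5]])
print(output)
```

With n chunks and equal delays, a strictly sequential loop would take about 2n delays, while this pipeline takes about n + 1, because every transfer after the first is hidden behind a computation.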

Feature Two: Intelligent Sorting, an AI Version of "The Strongest Brain"

When goods need to be distributed to different experts (the sub-networks of an MoE model), traditional sorters must open and check each box one by one, while DeepEP's "dispatch-combine" system acts as if it could see the future. In the mode used for training and inference prefill, 4,096 data packets move along an intelligent conveyor belt at once, with local and cross-city items identified automatically. In inference decoding mode, 128 urgent packages take the VIP lane and arrive in 163 microseconds, hundreds of times faster than a human blink. It also uses dynamic track switching, changing transmission modes on the fly during peak traffic to suit each scenario.
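The dispatch-combine idea can be sketched in a few lines of plain Python. This is a toy, single-process illustration of the general MoE pattern, not DeepEP's API: the functions `dispatch`, `combine`, and `run_moe`, the two toy experts, and the routing weights are all hypothetical.

```python
from collections import defaultdict


def dispatch(tokens, routes):
    """Group tokens by destination expert (the 'sorting' step)."""
    buckets = defaultdict(list)
    for idx, (value, chosen) in enumerate(zip(tokens, routes)):
        for expert_id, weight in chosen:
            buckets[expert_id].append((idx, value, weight))
    return buckets


def combine(expert_outputs, num_tokens):
    """Send results back and sum them per token, weighted by the router."""
    out = [0.0] * num_tokens
    for results in expert_outputs.values():
        for idx, value, weight in results:
            out[idx] += weight * value
    return out


def run_moe(tokens, routes, experts):
    buckets = dispatch(tokens, routes)
    expert_outputs = {
        expert_id: [(idx, experts[expert_id](v), w) for idx, v, w in items]
        for expert_id, items in buckets.items()
    }
    return combine(expert_outputs, len(tokens))


# Two toy experts; in a real MoE model each would be a sub-network on some GPU.
experts = {0: lambda x: x * 2, 1: lambda x: x + 10}
tokens = [1.0, 2.0, 3.0]
# Per token: a list of (expert_id, routing_weight) pairs.
routes = [[(0, 1.0)], [(0, 0.5), (1, 0.5)], [(1, 1.0)]]

result = run_moe(tokens, routes, experts)
print(result)  # [2.0, 8.0, 13.0]
```

In a real cluster the buckets built by `dispatch` live on different GPUs, so both steps become all-to-all transfers. That is why the speed of this sorting stage matters so much.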

Feature Three: FP8 "Bone Shrinking Technique"

Ordinary goods travel in standard boxes (FP32/FP16 format), while DeepEP can compress goods into micro-capsules (FP8 format). Each FP8 value takes 1 byte instead of 2 (FP16) or 4 (FP32), so the same truck carries two to four times as much cargo. Better still, the capsules are unpacked back into standard boxes on arrival, saving both shipping cost and time.

The system has been tested in DeepSeek's own warehouse (an H800 GPU cluster): intra-city freight runs three times faster, and inter-city latency drops to a level humans cannot perceive. Most disruptive of all, it achieves truly "imperceptible" transmission: like a courier slipping packages into a locker without getting off the bike, the whole process flows without a pause.

Now that DeepSeek has open-sourced this trump card, it is as if SF Express had published the blueprints of its unmanned sorting system: a heavy workload that once required 2,000 GPUs can now be handled with just a few hundred.

Earlier, DeepSeek released the first achievement of its "Open Source Week": the code for FlashMLA (literally, a fast multi-head latent attention mechanism), which is also one of the key technologies for cutting the cost of large models. To ease cost anxiety along the industry chain, DeepSeek is sharing what it knows without holding back.
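A quick back-of-the-envelope calculation shows what the smaller format buys. The sketch below takes the batch of 4,096 tokens and the 158 GB/s and 47 GB/s figures quoted in this article. The hidden size of 7,168 values per token is an assumption chosen for illustration, as is reading "GB" as 10^9 bytes. The helper names are hypothetical.

```python
BYTES_PER_ELEMENT = {"FP32": 4, "FP16": 2, "FP8": 1}

NUM_TOKENS = 4096    # batch size quoted in the article
HIDDEN_SIZE = 7168   # assumption: values per token, for illustration only


def payload_bytes(num_tokens, hidden_size, fmt):
    """Bytes needed to ship one batch of token vectors in the given format."""
    return num_tokens * hidden_size * BYTES_PER_ELEMENT[fmt]


def transfer_ms(num_bytes, gb_per_s):
    """Idealized transfer time in milliseconds, ignoring latency and overhead."""
    return num_bytes / (gb_per_s * 1e9) * 1e3


sizes = {fmt: payload_bytes(NUM_TOKENS, HIDDEN_SIZE, fmt) for fmt in BYTES_PER_ELEMENT}

for fmt, size in sizes.items():
    print(
        f"{fmt}: {size / 2**20:.0f} MiB, "
        f"{transfer_ms(size, 158):.3f} ms at 158 GB/s, "
        f"{transfer_ms(size, 47):.3f} ms at 47 GB/s"
    )
```

Under these assumptions the FP8 batch is 28 MiB, against 56 MiB in FP16 and 112 MiB in FP32. Note that FP8 keeps fewer bits per value, so the unpacked result is an approximation of the original rather than a bit-exact copy.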

Previously, Yu Yang, founder of Luchen Technology, stated on social media, "In the short term, China's MaaS model may be the worst business model." He estimated that at 100 billion output tokens per day, a DeepSeek-based service would face monthly machine costs of 450 million yuan and a monthly loss of 400 million yuan; with AMD chips, monthly revenue would be 45 million yuan against monthly machine costs of 270 million yuan, still a loss of more than 200 million yuan.
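The arithmetic behind those figures can be checked in a few lines. The sketch below assumes that the 45 million yuan in monthly revenue, which is quoted for the AMD case, applies to both scenarios; the helper `monthly_loss` is hypothetical.

```python
MILLION = 1_000_000

# Assumption: the 45 million yuan revenue quoted for the AMD case applies to both.
MONTHLY_REVENUE = 45 * MILLION

MACHINE_COSTS = {
    "DeepSeek-based service": 450 * MILLION,
    "AMD chips": 270 * MILLION,
}


def monthly_loss(machine_cost, revenue):
    """Loss is simply machine cost minus revenue; other costs are ignored."""
    return machine_cost - revenue


losses = {name: monthly_loss(cost, MONTHLY_REVENUE) for name, cost in MACHINE_COSTS.items()}

for name, loss in losses.items():
    print(f"{name}: loss of {loss / MILLION:.0f} million yuan per month")
```

This gives 405 million and 225 million yuan, consistent with the rounded "400 million" and "more than 200 million" in the estimate.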
