As DeepSeek's user numbers climbed steeply, complaints about its overloaded servers poured in, and users around the world found the service failing after they had asked just a few questions.

Image source: Generated by Wujie AI

DeepSeek's all-too-frequent reply of "server busy, please try again later" is driving users everywhere crazy.

Little known to the public until recently, DeepSeek rose to prominence after releasing its language model V3, a rival to GPT-4, on December 26, 2024. On January 20, 2025, it released R1, a language model positioned against OpenAI's o1. The quality of the answers produced in "deep thinking" mode, together with signs that model training costs could fall sharply, pushed the company and its app into the spotlight. Since then, DeepSeek R1 has been congested: its online search function goes down intermittently, and deep thinking mode frequently returns "server busy," frustrating many users.

About ten days ago, DeepSeek began experiencing server interruptions. By noon on January 27, the official DeepSeek website had repeatedly displayed "deepseek webpage/api unavailable." By that day, DeepSeek had become the most-downloaded iPhone app of the weekend, overtaking ChatGPT in the US download rankings.

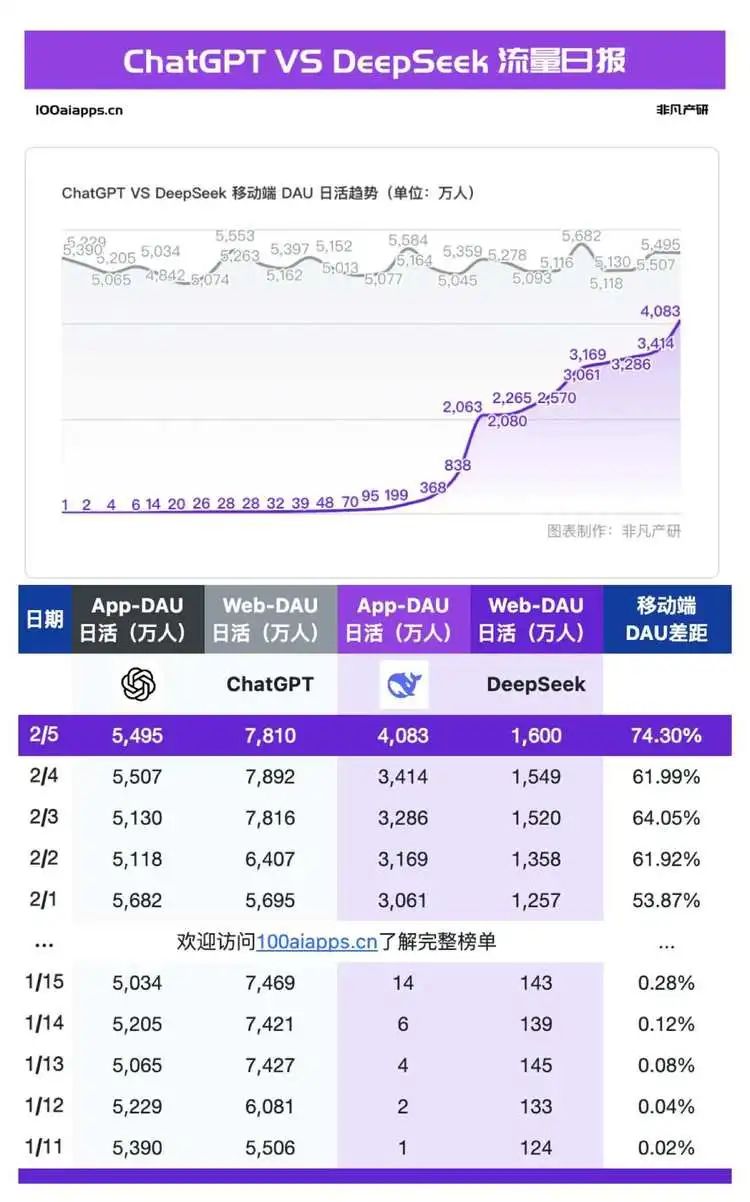

On February 5, just 26 days after the launch of DeepSeek's mobile app, its daily active users passed 40 million. ChatGPT's mobile daily active users stood at 54.95 million, which puts DeepSeek at 74.3% of ChatGPT's figure. Almost in step with that steep growth curve, complaints about busy servers surged, and users worldwide found the service failing after just a few questions. Alternative ways in began to appear: mirror sites for DeepSeek sprang up, major cloud providers, chip manufacturers, and infrastructure companies all launched their own hosted versions, and tutorials for personal deployment spread widely. None of this eased the frustration. Almost every major vendor in the world claimed to support DeepSeek deployment, yet users everywhere kept complaining about unstable service.

What exactly is happening behind the scenes?

1. Users Accustomed to ChatGPT Can't Stand DeepSeek's Inaccessibility

Much of the dissatisfaction with "DeepSeek server busy" comes from expectations set by the top-tier AI applications that came before it, led by ChatGPT, which rarely lagged.

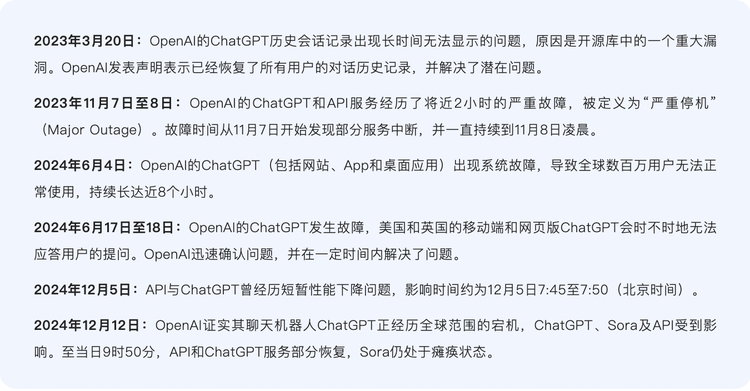

Since OpenAI's services launched, ChatGPT has had a few P0-level outages (P0 being the most severe incident level), but on the whole it has proven relatively reliable. It has found a balance between innovation and stability and has gradually become a critical piece of infrastructure, much like a traditional cloud service.

The number of widespread outages for ChatGPT is not particularly high.

ChatGPT's inference process is relatively stable and consists of two steps, encoding and decoding. In the encoding phase, the input text is converted into vectors that carry its semantic information. In the decoding phase, ChatGPT uses the text generated so far as context and runs it through the Transformer model to produce the next word or phrase, repeating until it has a complete response. The large model itself is a decoder-only architecture, and decoding is the process of emitting tokens (the smallest units of text a large model handles) one at a time. Every question put to ChatGPT starts one inference run.

For example, if you ask ChatGPT, "How are you feeling today?", it encodes the sentence and computes attention representations at each layer. From the attention representations of all preceding tokens, it predicts the first output token, "I." It then appends "I" to the input to get "How are you feeling today? I," computes a new attention representation, and predicts the next token, "am." The loop continues until the full sequence reads "How are you feeling today? I am feeling great."
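The predict-append-repeat loop in this example can be sketched in a few lines of Python. This is only an illustration: `toy_model` is a hypothetical lookup table standing in for a trained Transformer, and the `<eos>` end marker is an assumption, not something taken from ChatGPT.

```python
from typing import Callable, List

END = "<eos>"  # assumed end-of-sequence marker for this sketch

# Hypothetical stand-in for a trained model: maps the full context seen so
# far to the next token. A real model computes this with attention over
# all previous tokens.
TOY_NEXT_TOKEN = {
    ("How", "are", "you", "feeling", "today?"): "I",
    ("How", "are", "you", "feeling", "today?", "I"): "am",
    ("How", "are", "you", "feeling", "today?", "I", "am"): "feeling",
    ("How", "are", "you", "feeling", "today?", "I", "am", "feeling"): "great.",
}


def toy_model(context: List[str]) -> str:
    """Return the next token for a context, or END if nothing follows."""
    return TOY_NEXT_TOKEN.get(tuple(context), END)


def generate(prompt: List[str],
             next_token: Callable[[List[str]], str],
             max_new_tokens: int = 16) -> List[str]:
    """Greedy autoregressive decoding: predict one token, append it, repeat."""
    context = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(context)
        if token == END:
            break
        context.append(token)  # the new token becomes part of the next input
    return context


result = generate("How are you feeling today?".split(), toy_model)
print(" ".join(result))
```

Each pass through the loop is one model call, which is why the cost of an answer grows with the number of tokens it contains.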

The orchestration tool Kubernetes is the "conductor" behind ChatGPT, responsible for scheduling and allocating server resources. When an influx of users exceeds what the Kubernetes control plane can handle, ChatGPT's entire system can go down.

ChatGPT's outage count is not especially high, but what is often overlooked is that this stability rests on the powerful resources behind it.

Inference generally handles a smaller volume of data than training, so its compute requirements are lower. Industry insiders estimate that during normal large-model inference, GPU memory is dominated by the model's parameter weights, which account for more than 80% of usage. Among the models built into ChatGPT, the default ones are all said to be smaller than DeepSeek-R1's 671B parameters. ChatGPT also has far more GPU compute at its disposal than DeepSeek, so it naturally runs more stably than DS-R1.

Both DeepSeek-V3 and R1 are 671B-parameter models, and serving the model is, in essence, running inference. The compute needed for inference has to scale with the number of users; put in the starkest terms, 100 million users would call for 100 million GPUs. That one-to-one ratio is an illustration rather than a sizing rule, since real deployments share each GPU among many requests, but the requirement is still enormous, and it is separate from the compute reserved for training. By various accounts, DS's GPU and compute reserves are clearly insufficient, hence the frequent lag.
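To give a feel for what 671B parameters means in hardware terms, here is a rough back-of-envelope sketch. The 671B figure and the "weights are over 80% of memory" estimate come from the text above; the bytes per parameter (8-bit and 16-bit storage) and the 80 GB of memory per GPU are assumptions chosen for illustration, and the function names are hypothetical.

```python
import math

PARAMS_671B = 671e9  # parameter count quoted in the article


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_per_replica(num_params: float,
                         bytes_per_param: float,
                         gpu_memory_gb: float = 80.0,  # assumed card size
                         weight_share: float = 0.8) -> int:
    """Lower bound on GPUs needed to hold ONE copy of the model.

    weight_share is the fraction of total memory taken by weights (the
    article's "over 80%" estimate); the remainder is left for caches and
    activations.
    """
    total_gb = weight_memory_gb(num_params, bytes_per_param) / weight_share
    return math.ceil(total_gb / gpu_memory_gb)


for label, bytes_per_param in [("8-bit", 1.0), ("16-bit", 2.0)]:
    weights = weight_memory_gb(PARAMS_671B, bytes_per_param)
    gpus = min_gpus_per_replica(PARAMS_671B, bytes_per_param)
    print(f"{label}: {weights:.0f} GB of weights, at least {gpus} GPUs per replica")
```

Under these assumptions a single copy of the model already spans more than ten GPUs, and serving many users at once means running many such copies.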

The contrast is hard to take for users used to ChatGPT's smooth experience, all the more so as their interest in R1 keeps growing.

2. Lag, Lag, and More Lag

Moreover, a careful comparison reveals that OpenAI and DeepSeek are facing very different situations.

OpenAI has Microsoft behind it: Microsoft's Azure cloud hosts ChatGPT, the DALL-E 2 image generator, and the GitHub Copilot coding tool. That pairing quickly became the classic cloud-plus-AI model and spread to become an industry standard. DeepSeek, although a startup, relies mostly on its own data centers, much as Google does, rather than on third-party cloud providers. A review of public information shows that DeepSeek has not established partnerships with any cloud or chip vendor (cloud providers did announce during the Spring Festival that DeepSeek models would run on their platforms, but no meaningful collaboration has begun).

DeepSeek's user growth has also been unprecedented, which left it far less time than ChatGPT had to prepare for this kind of load.

DeepSeek's strong performance comes from end-to-end optimization at the hardware and system levels. Its parent company, High-Flyer Quant (Huanfang Quantitative), invested 200 million yuan in 2019 to build the Firefly-1 supercomputing cluster and had quietly stockpiled thousands of A100 GPUs by 2022. To make parallel training more efficient, DeepSeek developed its own HAI-LLM training framework. Industry experts believe the Firefly cluster may use thousands to tens of thousands of high-performance GPUs (such as NVIDIA A100/H100 or domestic chips) to provide its parallel computing capacity. The Firefly cluster currently supports the training of models such as DeepSeek-R1 and DeepSeek-MoE, which come close to GPT-4 levels on complex tasks such as mathematics and coding.

The Firefly cluster reflects DeepSeek's exploration of new architectures and methods, and it has led outsiders to believe that such innovations cut DS's training costs: R1, which performs on par with top AI models, could be trained with a fraction of the compute used by the most advanced Western models. SemiAnalysis, however, estimates that DeepSeek in fact holds a vast compute reserve of 60,000 NVIDIA GPUs, made up of 10,000 A100s, 10,000 H100s, 10,000 "special edition" H800s, and 30,000 "special edition" H20s.

That would suggest R1 has plenty of compute. In practice, though, R1 is a reasoning model positioned against OpenAI's o3, and models of this kind need more compute at response time. How the compute saved on the training side weighs against the sharp rise in cost on the inference side is not yet clear.

It is worth noting that although DeepSeek-V3 and DeepSeek-R1 are both large language models, they work differently. DeepSeek-V3 is an instruction model, similar to ChatGPT: it receives a prompt and generates a text response. DeepSeek-R1 is a reasoning model: when a user asks a question, it first works through an extended reasoning process and only then produces the final answer. The first tokens R1 generates are a long chain of thought in which the model explains and breaks down the question, and all of that reasoning is emitted rapidly as tokens before the answer itself.
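A small sketch makes the difference in output volume concrete. It assumes the reasoning chain arrives wrapped in `<think>...</think>` tags ahead of the answer, and it counts whitespace-separated words as a crude stand-in for tokens; both are simplifying assumptions for illustration, and the sample string is made up.

```python
import re
from typing import Tuple

# Assumed output format for this sketch: reasoning inside <think> tags,
# followed by the final answer.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(output: str) -> Tuple[str, str]:
    """Separate the reasoning chain from the final answer."""
    match = THINK_PATTERN.search(output)
    if match is None:
        return "", output.strip()  # instruction-style output: answer only
    reasoning = match.group(1).strip()
    answer = output[match.end():].strip()
    return reasoning, answer


def rough_token_count(text: str) -> int:
    """Whitespace word count, a crude proxy for a real tokenizer."""
    return len(text.split())


sample = (
    "<think>The user asks for 17 * 3. 17 * 3 = 51. "
    "Check: 51 / 3 = 17.</think>17 * 3 = 51"
)
reasoning, answer = split_reasoning(sample)
print("reasoning tokens (approx):", rough_token_count(reasoning))
print("answer tokens (approx):", rough_token_count(answer))
```

Even in this toy case the reasoning is several times longer than the answer, and every one of those tokens has to be generated by the serving GPUs.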

According to Wen Tingcan, vice president of Yaotu Capital, the vast compute reserve described above refers to the training phase, where compute needs can be planned and anticipated, so shortfalls are unlikely. Inference compute is far less predictable, because it depends mainly on user scale and usage and is therefore more elastic. "Inference compute grows according to certain patterns, but as DeepSeek became a phenomenon-level product, user scale and usage exploded in a short time, which caused demand for inference compute to explode as well, and that is why it lags."

Guizang, an independent developer and active designer of model products, agrees that insufficient compute is the main reason for DeepSeek's lag. As the most-downloaded mobile app in 140 markets worldwide, he believes, DeepSeek simply cannot keep up on its current compute, and even new cards would not help right away, because "new cards take time to set up for cloud services."

"The cost of running NVIDIA A100, H100, and other chips for an hour has a fair market price. In terms of output-token inference cost, DeepSeek is more than 90% cheaper than OpenAI's comparable model o1, which is in line with everyone's calculations. So the MoE model architecture itself is not the main problem; rather, the number of GPUs DS has determines the maximum number of tokens it can produce per minute. Even if more GPUs are moved to inference serving rather than pre-training research, there is still an upper limit." Chen Yunfei, developer of the AI-native application Cat Light, holds a similar view.

Some industry insiders also told Silicon Star that the root of DeepSeek's lag is that its private cloud is not up to the job.

Hacker attacks are another factor behind R1's lag. On January 30, media learned from cybersecurity company Qihoo 360 that attacks on DeepSeek's online services had suddenly intensified, with attack commands up by hundreds of times compared with January 28. Qihoo 360's Xlab observed at least two botnets taking part in the attacks.

There does seem to be a fairly obvious remedy for the lag in R1's own service: third-party providers. This was the liveliest scene of the Spring Festival, as one vendor after another deployed services to meet the demand for DeepSeek.

On January 31, NVIDIA announced that DeepSeek-R1 was available through NVIDIA NIM. Earlier, DeepSeek's impact had wiped nearly $600 billion off NVIDIA's market value overnight. The same day, Amazon Web Services (AWS) made it possible for users to deploy DeepSeek's latest R1 base model on its AI platforms, Amazon Bedrock and Amazon SageMaker AI. New AI application players such as Perplexity and Cursor then integrated DeepSeek en masse. Microsoft got there before both Amazon and NVIDIA, becoming the first to deploy DeepSeek-R1 on its Azure cloud service and on GitHub.

From February 1, the fourth day of the Lunar New Year, Huawei Cloud, Alibaba Cloud, ByteDance's Volcano Engine, and Tencent Cloud joined in, generally offering deployment services for the full DeepSeek lineup in all model sizes. Next came AI chip makers such as Biren Technology, Hanbo Semiconductor, Ascend, and Muxi, which said they had adapted the original DeepSeek model or its smaller distilled versions. Software companies such as Yonyou and Kingdee integrated DeepSeek models into some of their products to strengthen them. Finally, device makers such as Lenovo, Huawei, and Honor built DeepSeek models into some of their products, for use as on-device personal assistants and in smart car cockpits.

To date, DeepSeek's own merits have drawn in a vast circle of partners, including cloud providers at home and abroad, telecom operators, brokerages, and national-level platforms such as the National Supercomputing Internet Platform. Because DeepSeek-R1 is a fully open-source model, every provider that has integrated it has become a beneficiary of the DS model. That has greatly raised DS's profile, but it has also made lag more frequent: the service providers and DeepSeek itself are increasingly overwhelmed by the flood of users, and neither has found the key to the stability problem.

Since both DeepSeek V3 and R1 have as many as 671 billion parameters, they are best suited to running in the cloud, where providers have more ample compute and inference capacity. By deploying DeepSeek services, cloud providers aim to lower the barrier for enterprises: once the model is deployed, they expose their own API for it, which was expected to offer a better experience than the API DS provides directly.

In practice, however, none of these services has solved the problems with running DeepSeek-R1. Outsiders assume the service providers have no shortage of compute, yet developers report unstable responses from provider-hosted R1 about as often as from DeepSeek's own R1. The main reason is that the number of GPUs each provider can allocate to R1 inference is limited.

"With R1's popularity staying high, service providers still have to accommodate the other models they host, so the GPUs they can give to R1 are very limited. And because R1 is so popular, any provider that offers it at a relatively low price gets overwhelmed," Guizang explained.

Model deployment optimization is a broad field that covers many layers of work, from the end of training through to deployment on actual hardware. For DeepSeek's lag, though, the causes may be simpler: the model is very large, and too little optimization was done before launch.

Before a popular large model goes live, it faces challenges on the technical, engineering, and business fronts. These include keeping training data consistent with production data, data latency that hurts real-time inference, the efficiency and resource consumption of online inference, insufficient generalization, and engineering concerns such as service stability, APIs, and system integration.

Many popular large models put heavy emphasis on inference optimization before launch because of two concerns: compute time and memory. The first is inference latency so high that the user experience suffers or latency requirements are missed altogether, which users perceive as lag. The second is that a very large number of parameters consumes so much GPU memory that the model may not fit on a single GPU card, which also leads to lag.

Wen Tingcan told Silicon Star that the difficulty for providers offering R1 lies in the particular structure of the DS model: it is very large and uses an MoE (Mixture of Experts, a method for efficient computation) architecture. "(Service providers) need time to optimize, but market enthusiasm has a time window, so they launch first and optimize later rather than fully optimizing before going live."

For R1 to run stably, the core issue now is the reserve of inference compute and the ability to optimize on the inference side. What DeepSeek needs to do is find ways to cut the cost of inference and reduce the number of tokens output per response.
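Why output length matters can be seen with simple arithmetic: a fixed GPU fleet caps the number of tokens produced per minute, so the shorter each answer, the more answers that fleet can serve. The sketch below uses made-up numbers throughout; the fleet size, per-GPU throughput, and answer lengths are illustrative assumptions, not measurements of DeepSeek's service.

```python
def fleet_tokens_per_minute(num_gpus: int, tokens_per_second_per_gpu: int) -> int:
    """Upper bound on tokens the whole fleet can emit in one minute."""
    return num_gpus * tokens_per_second_per_gpu * 60


def answers_per_minute(num_gpus: int,
                       tokens_per_second_per_gpu: int,
                       tokens_per_answer: int) -> int:
    """How many complete answers fit under the fleet's token ceiling."""
    ceiling = fleet_tokens_per_minute(num_gpus, tokens_per_second_per_gpu)
    return ceiling // tokens_per_answer


# Hypothetical figures, for illustration only.
NUM_GPUS = 10_000
TOKENS_PER_SECOND_PER_GPU = 500

for label, tokens_per_answer in [
    ("short instruction-style answer", 400),
    ("long reasoning-style answer", 2_000),
]:
    served = answers_per_minute(NUM_GPUS, TOKENS_PER_SECOND_PER_GPU, tokens_per_answer)
    print(f"{label}: about {served:,} answers per minute")
```

With the same hardware, the five-times-longer answer cuts the number of answers served to one fifth, which is the trade-off behind reducing tokens per response.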

The lag also suggests that DS's actual compute reserve may not be as vast as SemiAnalysis describes. High-Flyer's fund business needs GPUs, and so does the DeepSeek training team, so the number of GPUs left over for serving users has always been limited. As things stand, DeepSeek has little incentive to spend money on rented capacity just to give users a better experience for free. It is more likely to wait until its first consumer-facing business model has taken shape before it considers leasing services, which means the lag will continue for some time.

"They probably need to take two steps: 1) introduce a paid tier that limits how much free users can use the model; 2) work with cloud service providers to use other companies' GPU resources." This stopgap proposed by developer Chen Yunfei has found broad agreement in the industry.

However, at present, DeepSeek does not seem to be in a hurry about its "server busy" issue. As a company pursuing AGI, DeepSeek appears unwilling to focus too much on the influx of user traffic. Users may have to get used to facing the "server busy" interface for the foreseeable future.
