Where is AI headed? The answer lies in three words: "cost reduction era."

Source: Brain Extreme

As we enter 2025, the popularity of AI continues to rise globally. The influence of large models is spreading across production, daily life, social and cultural activities, and the financial markets. There is a general sense that the AI industry is changing rapidly, and that its operating logic and supply-demand relationships are being restructured. But what is the true core of these changes? What is the latest global consensus on the development of the AI industry? The answers still seem unclear.

To clearly understand the new changes in AI technology and industry, it is crucial to combine the judgments of technology leaders with the trends in the tech industry. On February 11, the World Governments Summit 2025 was held in Dubai, UAE. Baidu founder Li Yanhong, Google CEO Sundar Pichai, and Tesla and xAI founder Elon Musk all shared their latest views and predictions on the AI industry at the summit.

These tech leaders unanimously believe that large models are entering a critical cost reduction cycle. For instance, Li Yanhong stated, "We live in a very exciting era. In the past, when we talked about Moore's Law, we said that performance would double and costs would be halved every 18 months; but today, when we talk about large language models, we can say that every 12 months, inference costs can be reduced by more than 90%."
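To put the two rates in the quote side by side, here is a minimal Python sketch. It assumes each rate compounds at a constant pace, which the quote itself does not claim. The function name `remaining_cost_fraction` and the three-year horizon are illustrative choices, not something stated at the summit.

```python
def remaining_cost_fraction(reduction_per_period: float,
                            period_months: float,
                            elapsed_months: float) -> float:
    """Fraction of the starting cost left after `elapsed_months`.

    Assumes (hypothetically) that cost falls by `reduction_per_period`
    every `period_months`, compounding smoothly in between.
    """
    periods = elapsed_months / period_months
    return (1.0 - reduction_per_period) ** periods


# Moore's Law as quoted: cost halves every 18 months.
moore_1y = remaining_cost_fraction(0.5, 18, 12)
moore_3y = remaining_cost_fraction(0.5, 18, 36)

# Large models as quoted: cost falls by 90% every 12 months.
# The quote says "more than 90%", so this is the conservative edge.
llm_1y = remaining_cost_fraction(0.9, 12, 12)
llm_3y = remaining_cost_fraction(0.9, 12, 36)

print(f"Moore's Law:  {moore_1y:.1%} of cost left after 1 year, {moore_3y:.1%} after 3")
print(f"Large models: {llm_1y:.1%} of cost left after 1 year, {llm_3y:.1%} after 3")
```

Under these assumptions, the Moore's Law pace leaves roughly 63% of the original cost after one year and 25% after three, while the 90%-per-year pace leaves 10% and 0.1%.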

In line with this global consensus on falling large-model costs, on February 13 two of the most prominent large language model products from East and West, Wenxin Yiyan and ChatGPT, both announced that they would become free to use. This suggests that to predict the future trajectory of global AI, we must first understand the trend and logic of cost reduction in large models.

Let’s start from the gathering of tech leaders in Dubai and combine it with the recent movements of AI companies like Baidu and OpenAI to discuss the inevitability of large models entering a cost reduction cycle and the industrial driving force that comes with it.

In the coming period, where will AI head? The answer lies in three words: "cost reduction era."

We must first make this judgment: any innovation in a general-purpose technology goes through four phases: experimentation, high-cost trials, cost reduction, and popularization.

The best example of this characteristic is household electricity. After inventors like Edison created the light bulb, there was a long period during which various sectors believed that home generators were essential. However, the high cost of home electricity generation and significant safety risks made it unaffordable for most families. This stage saw innovation occur, but the costs were too high for practical application.

Thus, high-voltage transmission and regional power grids emerged. Each household no longer needed to prepare a separate power generation system, and the cost per kilowatt-hour significantly decreased. In this context, household electricity and lighting truly became widespread. Today’s large language models have reached a cost reduction cycle akin to the "power grid" moment.

At the World Governments Summit 2025, UAE AI Minister Omar Sultan Al Olama held a dialogue with Li Yanhong. During this conversation, Li Yanhong noted that over the past few hundred years, most innovations have been related to cost reduction, not only in artificial intelligence but also beyond the IT industry. "If you can reduce costs by a certain amount or percentage, it means your productivity has increased by the same percentage. I believe this is almost the essence of innovation. And today, the speed of innovation is much faster than before."

Over the past cycle, the training and inference costs of large models have been declining rapidly. But this does not mean that the earlier high-cost innovations were meaningless. On the contrary, it is precisely because of the heavy investment in costly AI infrastructure that subsequent AI innovations have a solid foundation for trial and exploration. Similarly, it is because the Scaling Laws were discovered and applied, leading to the training of high-quality large models, that we can now consider how to reduce costs and optimize model architecture on top of that work. The light bulb came first, and then the power grid; neither cycle can replace the other.

Under the continuous push for large language models, mainstream AI companies worldwide have already seen the feasibility of cost reduction.

For example, Google CEO Sundar Pichai stated at the recent Paris AI Action Summit that AI technology is advancing rapidly, with a particularly significant drop in costs. In the past 18 months, the cost of processing tokens has fallen from $4 per million to $0.13, a reduction of about 97%.

A few days earlier, OpenAI founder Sam Altman mentioned on social media that "the cost of using a certain level of artificial intelligence decreases by about ten times every 12 months, and lower prices will lead to more usage."
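The figures from Pichai and Altman can be compared with some quick arithmetic. The sketch below takes Pichai's numbers ($4 to $0.13 per million tokens over 18 months), computes the total reduction, and converts it to an equivalent 12-month rate. The conversion assumes a constant compounding decline over the whole window, which is an illustrative assumption rather than anything either speaker stated; the variable names are likewise hypothetical.

```python
# Figures quoted from Pichai's remarks (USD per million tokens).
start_price = 4.00
end_price = 0.13
elapsed_months = 18

# Total reduction over the 18-month window.
total_reduction = 1.0 - end_price / start_price

# Equivalent 12-month figures, assuming (hypothetically) that the
# price fell at a constant compounding rate over the whole window.
annual_remaining = (end_price / start_price) ** (12 / elapsed_months)
annual_reduction = 1.0 - annual_remaining
annual_factor = 1.0 / annual_remaining  # "N times cheaper per year"

print(f"Total reduction over 18 months: {total_reduction:.2%}")
print(f"Equivalent annual reduction:    {annual_reduction:.1%}")
print(f"Equivalent annual factor:       {annual_factor:.1f}x cheaper")
```

The total works out to 96.75%, which rounds to the 97% cited. Annualized under the stated assumption, it comes to roughly a 90% decline per year, or close to a tenfold drop, which is in the same range as the rate Altman describes.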

This means that top global AI companies like Baidu, Google, and OpenAI have all recognized the rapid annual decline in large-model costs. Lower costs for AI innovation mean that foundational large models and AI-native applications will develop faster. Just as a standout app could set a trend in the internet era, we will soon see all kinds of AI models and applications flourish, with small efforts yielding outsized results.

This is an inevitable trend in the development of large models, and it will drive the AI market into a new phase: free access to high-quality models.

The transition of large models into a cost reduction cycle will bring many surprises. These include stunning new models, as well as mature models becoming widely available and moving toward free access. Supported by declining costs, free use of the most mature and cutting-edge large models is becoming a new consensus in AI.

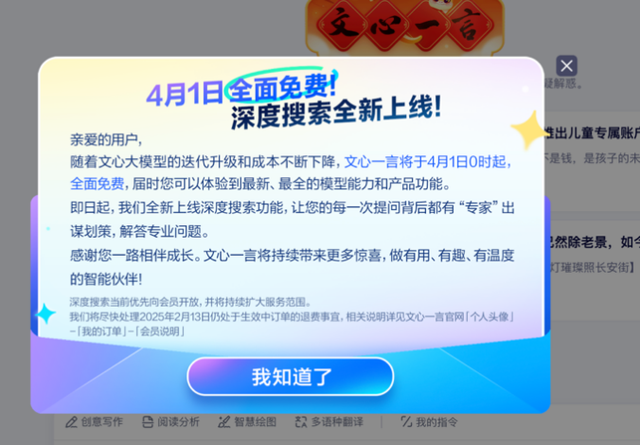

The most compelling evidence for this view is that Wenxin Yiyan and ChatGPT both announced free access on the same day, February 13.

With the iterative upgrades of the Wenxin large model and continuous cost reductions, the Wenxin Yiyan official website announced that starting from April 1 at 0:00, it will be completely free, allowing all PC and app users to experience the latest models in the Wenxin series, as well as features like long document processing, enhanced professional search, advanced AI drawing, and multilingual dialogue.

At the same time, Wenxin Yiyan has also launched a deep search function, enabling the large model to possess stronger planning and tool-calling capabilities. It can provide expert-level responses and handle multi-scenario tasks, achieving multimodal input and output.

Meanwhile, across the ocean, OpenAI announced its latest plans for GPT-4.5 and GPT-5, stating that the free version of ChatGPT will allow unlimited conversations with GPT-5 at the standard intelligence setting. Previously, OpenAI had already announced that ChatGPT Search is open to everyone without registration.

Earlier, Google also announced that its latest Gemini 2.0 models, in three versions (Flash, Pro Experimental, and Flash-Lite), are open to everyone.

Driven by the cost reduction cycle, democratization is evidently becoming a common choice for AI companies worldwide. Large models will soon be cheaper and easier to access, and their ubiquitous, low-threshold capabilities will enable imaginative applications of intelligence that, like the spread of the internet, change everything.

So, in the face of the wave of AI democratization and the trend of cost reduction, what new AI trends will enterprises, developers, and individuals encounter? I believe Baidu's actions have already constructed a reference blueprint.

As large models enter the cost reduction era, enterprises need to adjust their strategic direction promptly so that their development plans align with upcoming market demand and user expectations. What opportunities does this era offer? In a development cycle where cost reduction and democratization are the main themes, Baidu's actions point to three that are particularly crucial:

1. Continuous AI infrastructure construction.

The cost reduction of large models does not mean that investment in infrastructure can stagnate. On the contrary, declining costs will bring larger development space and a greater scale of users for large models. Effective infrastructure construction will be the cornerstone to ensure the continuous development of AI innovation and its reach to the public. Li Yanhong believes that we still need to continuously invest in chips, data centers, and cloud infrastructure to create better and smarter next-generation models. From Kunlun chips to the PaddlePaddle deep learning framework, foundational large models, and Baidu Smart Cloud, Baidu is making broader and more substantial investments in full-stack AI infrastructure.

2. Exploring AI-native applications.

Indeed, large language models themselves are a very valuable application form, but AI's capabilities go far beyond that. Exploring AI-native application forms and capabilities based on large models will be the real focus of the AI field moving forward. Users can look forward to a colorful array of AI-native applications in the near future. For enterprises and developers, investing large model capabilities into the application layer remains the core opportunity.

In terms of AI-native applications, Baidu has already achieved many successes, such as the Baidu app, Baidu Wenku, and the Wenxin Intelligent Agent platform, which have all demonstrated advantages. Li Yanhong believes that even at the current level, large language models have already created significant value across various scenarios, while attention needs to be paid to value creation at the application layer.

3. Building the next generation of large models.

The continuous decline in the training and inference costs of large models means that it is now possible to create more powerful and effective large models. The next generation of large models will be the vanguard driving the continuous evolution of the AI industry and will serve as the central axis connecting AI infrastructure and AI applications.

At this stage, AI giants in Europe and America, such as OpenAI, have already announced plans for the next generation of large models. According to a report by CNBC on February 12, Baidu plans to release its next-generation AI model, Ernie 5.0, in the second half of this year, which will have significant enhancements in multimodal capabilities.

Wenxin 5.0 (Ernie 5.0) is expected to meet the expectations of various sectors for the next generation of large models, both in strategic pacing and in technological upgrades. How can the upper limits of large model capabilities be pushed further during the cost reduction cycle? This is the question Wenxin 5.0 will need to answer.

Li Yanhong stated, "Technological progress is very rapid. Although we are satisfied with the achievements we have made so far, think about how much things will change in six months or two years."

With rapid advances in technology and infrastructure, AI may develop faster than anyone expects. Achieving better results at lower cost, and using a small lever to pry open a miracle of infinite possibilities: this may be the beautiful backdrop of the intelligent era.
