Huawei teams up with 15 universities to offer its strongest answer to the computing-power shortage.

Source: New Intelligence

Image source: Generated by Wujie AI

How can a machine learning PhD program at a top-5 U.S. university not have a single GPU capable of substantial computing power?

In mid-2024, a Reddit post asking exactly this quickly sparked heated discussion in the community:

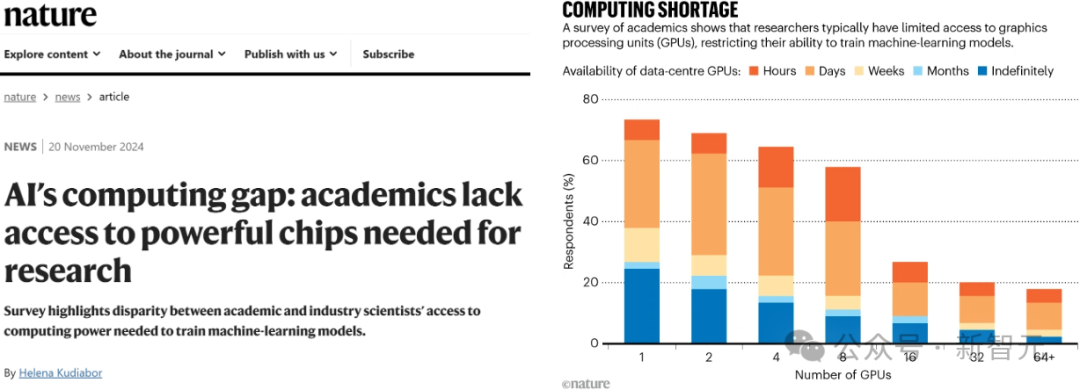

By the end of the year, a Nature report described how hard it has become for academia to obtain GPUs: researchers actually have to queue for time on their institutions' GPU clusters.

GPU scarcity is just as common in laboratories at our country's universities. Some universities have reportedly even required students to bring their own computing power to class, which is quite absurd.

Clearly, the computing-power bottleneck has turned AI itself into a course with a very high barrier to entry.

Shortage of AI Talent and Insufficient Computing Power

At the same time, the rapid development of cutting-edge technologies such as large models and embodied intelligence is causing a global talent shortage.

According to calculations by a professor at Oxford University, the share of job postings requiring AI skills in the U.S. has increased fivefold.

Globally, technical AI (Tech-AI) positions have grown ninefold, while broad AI (Broad-AI) positions have grown 11.3-fold.

Growth in Asia has been especially pronounced.

Universities around the world are trying to help students master key AI skills, but, as noted above, computing power has become a "luxury."

To bridge this gap, collaboration between enterprises and universities has become an important means.

Kunpeng Ascend Science and Education Innovation Incubation Centers: Laying Out Research in Universities

Fortunately, Huawei has already begun building a similar innovation system in our country's universities!

Huawei has now signed cooperation agreements with five top universities, Peking University, Tsinghua University, Shanghai Jiao Tong University, Zhejiang University, and the University of Science and Technology of China, to establish the "Kunpeng Ascend Science and Education Innovation Excellence Center."

In parallel, Huawei is working with ten other universities, Fudan University, Harbin Institute of Technology, Huazhong University of Science and Technology, Xi'an Jiaotong University, Nanjing University, Beihang University, Beijing Institute of Technology, the University of Electronic Science and Technology of China, Southeast University, and Beijing University of Posts and Telecommunications, to establish the "Kunpeng Ascend Science and Education Innovation Incubation Center."

Establishing the excellence and incubation centers is a model of industry-education integration:

By introducing the Ascend ecosystem, it makes up for universities' shortage of computing power and spurs more research output;

By reforming the curriculum around research, industry, and competition topics, it aims to cultivate top talent for the computing industry;

By tackling system architecture, compute acceleration, algorithms, and system capabilities, it strives to produce world-class innovations;

By creating numerous "AI+X" interdisciplinary fields, it leads the development of an intelligent ecosystem.

Building Fully Independent Domestic Computing Power for AI Research

Today, the significance of AI for Science is self-evident.

According to the latest survey by Google DeepMind, one in three postdoctoral researchers uses large language models to assist with literature reviews, programming, and article writing.

The 2024 Nobel Prizes in Physics and Chemistry both went to researchers working in AI.

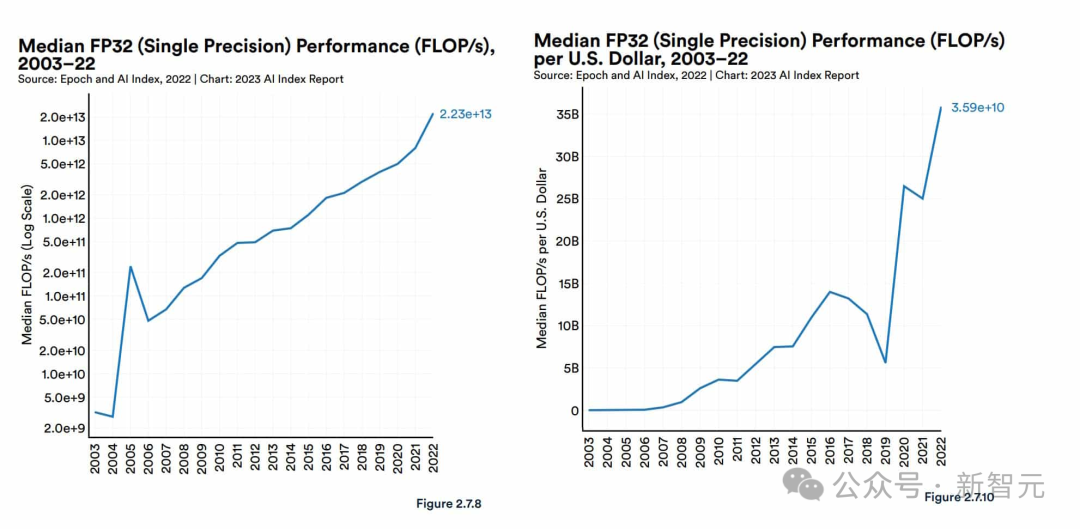

As AI transforms scientific research, GPUs, with their strong high-performance computing performance and their ability to train and serve LLMs, have become as precious as "gold," fiercely sought after by major companies such as Microsoft, xAI, and OpenAI.

However, the U.S. blockade on GPUs has made progress in AI and scientific research in our country extremely difficult.

To cross this chasm, we must build and grow a fully independent ecosystem.

At the computing power level, Huawei's Ascend series AI processors are tasked with reshaping our country's competitiveness.

Moreover, on top of computing power, we also need a self-developed computing framework to fully leverage the advantages of NPU/AI processors.

CUDA, the computing architecture NVIDIA designed specifically for its GPUs, is widely used throughout AI and data science.

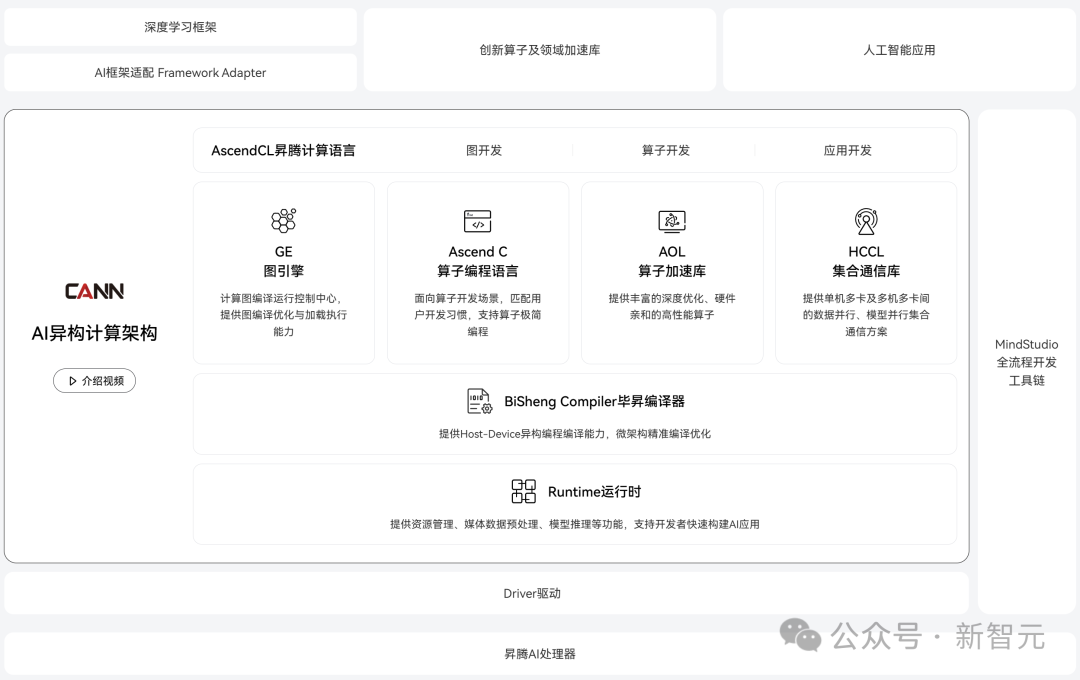

In our country, the only real contender that can substitute for it is CANN.

CANN (Compute Architecture for Neural Networks) is Huawei's heterogeneous computing architecture for AI scenarios. It supports mainstream AI frameworks such as PyTorch, TensorFlow, and MindSpore on top while driving Ascend AI processors underneath, making it the key platform for improving the processors' computing efficiency.

This position gives CANN several technical advantages, the most important being deeper hardware-software co-optimization for AI computing and a more open software stack:

First, it supports multiple AI frameworks: Huawei's own MindSpore as well as third-party frameworks such as PyTorch and TensorFlow;

Second, it provides multi-level programming interfaces tailored to different application scenarios, letting users quickly build AI applications and services on the Ascend platform;

Third, it offers model migration tools that help developers quickly move projects to the Ascend platform.

CANN has already built an initial ecosystem of its own. Technically, it includes a large number of applications, tools, and libraries, giving users a one-stop development experience. Meanwhile, the community of developers working with Ascend technology is steadily growing, laying fertile ground for future applications and innovation.

On top of the heterogeneous computing architecture CANN, we also need a deep learning framework for building AI models.

Almost every AI developer relies on a deep learning framework, and nearly all deep learning algorithms and applications are implemented through one.

Google's TensorFlow and Meta's PyTorch, the best-known mainstream frameworks, have each built a massive ecosystem.

In the era of large-model training, a deep learning framework must train efficiently at the scale of thousands of accelerators.

Huawei's all-scenario deep learning framework MindSpore, officially open-sourced in March 2020, fills this gap domestically, achieving true independence and controllability.

MindSpore offers all-scenario deployment across cloud, edge, and device, native support for large-model training, and support for AI + scientific computing. Together these form a streamlined native development environment that accelerates domestic research innovation and industrial application.

Notably, as the "best partner" for Ascend AI processors, MindSpore uses one unified architecture across device, edge, and cloud, so a model can be trained once and deployed anywhere.

Everything from large-scale Earth-system simulation and autonomous driving to small-scale protein structure prediction can be done with MindSpore.

An open-source deep learning framework can only mature and deliver its full value with a broad developer ecosystem.

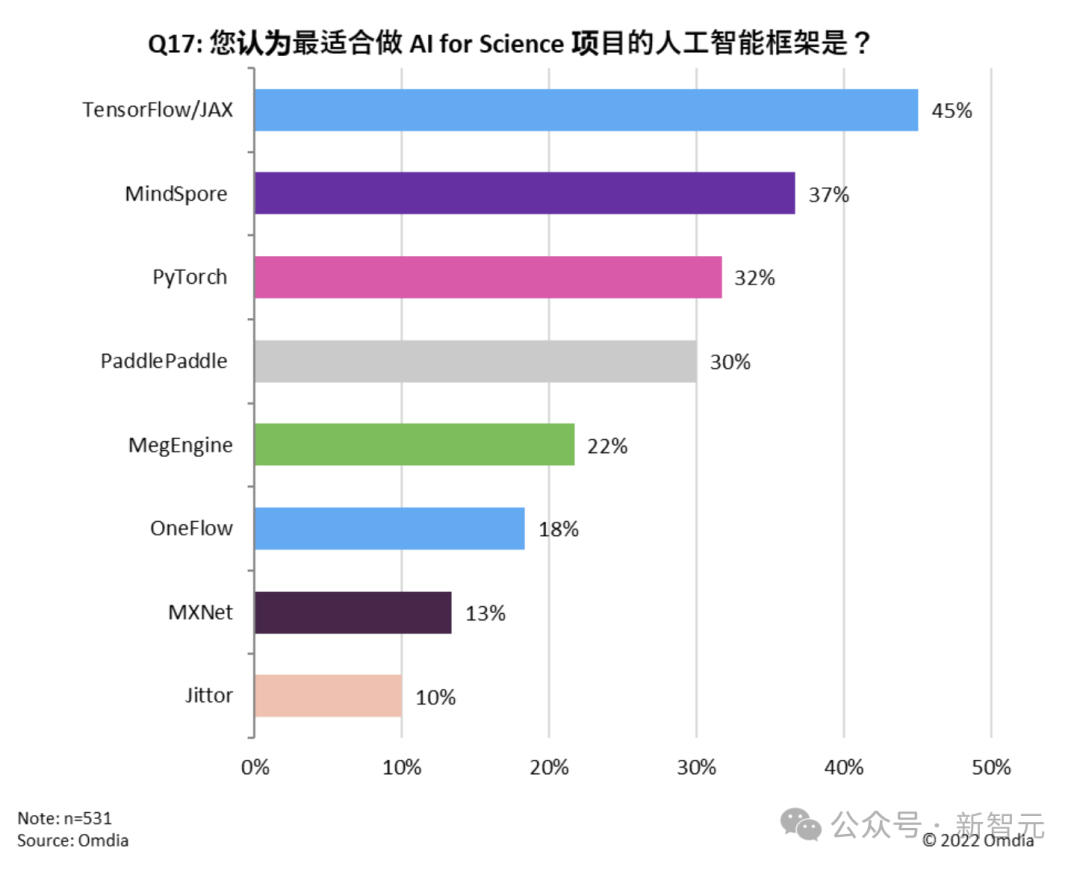

According to the "China Artificial Intelligence Framework Market Research Report" released by research institution Omdia in 2023, MindSpore has entered the first tier of AI framework usage rates, second only to TensorFlow.

Inference applications across industries are also key to unlocking the value of AI. As generative AI accelerates, universities and enterprises alike urgently need faster inference.

For example, NVIDIA's TensorRT, a high-performance inference optimizer, is an effective tool for boosting large-model inference performance. Using techniques such as quantization and sparsity, it reduces model complexity and speeds up deep learning inference. The catch is that it only supports NVIDIA GPUs.
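
To make "quantization and sparsity" concrete, here is a minimal, framework-free Python sketch of the two generic ideas: symmetric per-tensor int8 quantization (each weight stored as an 8-bit integer times one shared float scale) and unstructured magnitude pruning (zeroing the smallest weights). It is an illustration under simplifying assumptions, not TensorRT's or MindIE's implementation. The function names `quantize_int8`, `dequantize`, and `prune_by_magnitude` are hypothetical, and production engines typically calibrate scales on real data and favor hardware-friendly structured sparsity patterns.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127 so every code fits in a signed 8-bit integer.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


def prune_by_magnitude(weights, sparsity):
    """Unstructured sparsity: zero out the `sparsity` fraction of smallest-magnitude weights."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.02, 0.9, -0.01, 0.3]
    q, scale = quantize_int8(w)
    approx = dequantize(q, scale)
    print("int8 codes:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(w, approx)))
    print("50% sparse:", prune_by_magnitude(w, 0.5))
```

Storing int8 codes instead of 32-bit floats cuts weight memory roughly fourfold, at the cost of a rounding error of at most half a scale step per weight; pruning further reduces the work a sparsity-aware kernel has to do.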

Likewise, alongside the computing architecture and the deep learning framework, there is a matching inference engine: Huawei Ascend MindIE.

MindIE is an all-scenario AI inference acceleration engine that integrates the industry's most advanced inference acceleration technologies and inherits the features of open-source PyTorch.

Its design balances flexibility and practicality: it connects seamlessly to mainstream AI frameworks, supports different types of Ascend AI processors, and offers users multi-level programming interfaces.

Through full-stack joint optimization and layered, open AI capabilities, MindIE unleashes the full computing power of Ascend hardware and delivers fast, efficient deep learning inference. It tackles the high technical difficulty and many development steps involved in model inference and application development, improving model throughput, shortening time to launch, and supporting diverse AI business needs.

Home-grown technologies such as CANN, MindSpore, and MindIE not only fill the domestic computing-power gap but also deliver leapfrog progress in model training, framework usability, and inference performance, competing directly with advanced foreign technology stacks.

Building a World-Class Incubation Center

Beyond its technical advantages, Ascend computing power is also better aligned with national needs for the coming decades.

Only domestically developed computing power can free us from the unpredictable external environment, ensuring the stability of the research foundation.

With the platform in place, how do you teach university faculty and students to use it?

Since September 6 of last year, Huawei has held the first phase of its Ascend AI special training camp at four major universities in turn: Peking University, Shanghai Jiao Tong University, Zhejiang University, and the University of Science and Technology of China. Of the hundreds of students who registered, 90% are master's and doctoral students. The courses cover many parts of the Ascend field, including CANN, MindSpore, MindIE, MindSpeed, HPC, and Kunpeng development tools.

In the training camp, students not only gain a detailed understanding of the core technologies but also get hands-on practice. This arrangement matches how students absorb new knowledge, progressing step by step from simple to complex.

For example, at Shanghai Jiao Tong University, the first day focuses on migration: the basic hardware and software of Ascend AI, hands-on cases of native PyTorch model development on Ascend, the features of the MindIE inference solution, and migration case studies.

The second day focuses on optimization: the CANN heterogeneous computing architecture, Ascend C operator development, and hands-on optimization of long-sequence inference for large models.

Pairing migration with optimization is a well-considered choice.

Many hands-on university courses are built on CUDA/x86 setups, yet sanctions have made the shortage of computing power increasingly acute. Mastering migration methods lets projects move onto the Ascend platform, keeping academic work going.

Once they have the fundamentals, students move on to hands-on case studies. Huawei experts guide them step by step through the Ascend technology stack and the full large-model inference workflow, covering quantization, inference, and Codelabs code exercises.

Through this hands-on work, students gain a deeper, first-hand understanding of the Ascend ecosystem and lay a solid foundation for future work in technology.

Students are engaged in hands-on practice during the first phase of the training camp at Shanghai Jiao Tong University.

Beyond the courses, Huawei will also hold an operator challenge for university developers to discover elite operator developers.

The competition encourages developers to innovate and practice in depth on Ascend computing resources and CANN's foundational capabilities, accelerating the integration of AI with industry and raising developers' skills.

The incubation centers also place great importance on academic output.

Students whose research builds on key Kunpeng or Ascend technologies and tools can apply for graduate scholarships, and papers they publish during that period in top international conferences or domestic journals are rewarded accordingly.

Huawei has also launched a talent program together with its Kunpeng and Ascend ecosystem partners.

The program lets students move from theory to practice in real enterprise settings and helps outstanding students connect with companies early.

So far, the program has brought together more than 200 companies in 15 cities, offering over 2,000 technical positions and giving more than 10,000 university students the chance to apply.

In short, these teaching practices and incentives can greatly boost student participation. Students not only enrich their academic experience and produce research results but also build stronger resumes, giving them an edge in the job market and making it easier to attract the attention of top companies at home and abroad.

So, after mastering the latest technologies and their applications, how can we cultivate truly groundbreaking research results in today's rapidly changing AI landscape?

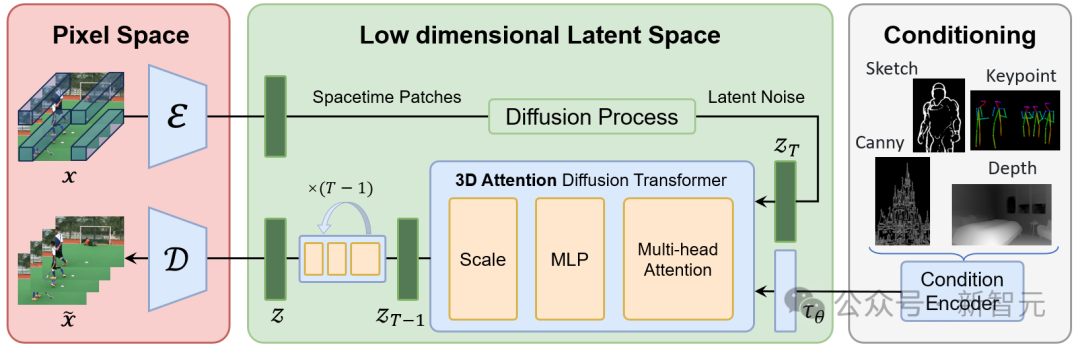

Since Sora set off a text-to-video wave in 2024, new text-to-video large models have kept emerging. Open-Sora Plan, an open-source text-to-video project from Peking University and Rabbitpre (Tuzhan), caused a stir in the industry.

In fact, the team had begun preparing an open-source version of Sora as soon as Sora was announced, but it lacked the necessary computing power and data, and the project was shelved. Fortunately, the Kunpeng Ascend Science and Education Innovation Excellence Center set up with Huawei quickly gave the team the computing support it needed.

The team had originally used NVIDIA A100 GPUs, but after migrating to the Ascend ecosystem it was pleasantly surprised on several fronts:

CANN enables efficient parallel computing that significantly speeds up processing of large-scale datasets; the Ascend C interface library simplifies AI application development; and the operator acceleration library further improves algorithm performance.

More importantly, the open Ascend ecosystem can quickly adapt large models and applications.

As a result, although team members started from scratch with the Ascend ecosystem, they were able to get up to speed in a very short time.

Training brought further surprises. For example, with torch_npu, the team's entire codebase could train and run inference on Ascend NPUs seamlessly.
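
The sketch below shows the kind of device selection that lets a single PyTorch script run on Ascend NPUs, NVIDIA GPUs, or the CPU. It is not the Open-Sora Plan team's code. It assumes, based on torch_npu's documented usage, that importing `torch_npu` registers an `"npu"` device type and a `torch.npu.is_available()` check; verify both against the Ascend documentation for your CANN and PyTorch versions. The helper `select_device` is hypothetical.

```python
import importlib.util


def select_device() -> str:
    """Pick an Ascend NPU if torch_npu is usable, else a CUDA GPU, else the CPU."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch itself is not installed
    import torch

    if importlib.util.find_spec("torch_npu") is not None:
        try:
            # Assumption: importing torch_npu registers the "npu" backend with PyTorch.
            import torch_npu  # noqa: F401
            if torch.npu.is_available():
                return "npu:0"
        except (ImportError, RuntimeError, AttributeError):
            pass  # e.g. the CANN runtime is missing; fall back to other backends
    if torch.cuda.is_available():
        return "cuda:0"
    return "cpu"


if __name__ == "__main__":
    device = select_device()
    print(f"using device: {device}")
    # With PyTorch installed, the rest of the script stays backend-agnostic, e.g.:
    #   model = model.to(device); batch = batch.to(device)
```

Keeping the device choice in one place means the rest of the training and inference code only ever calls `.to(device)`, which is what makes this kind of migration largely mechanical.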

When model partitioning is needed, the Ascend MindSpeed distributed acceleration suite provides a wealth of distributed algorithms and parallel strategies for large models.
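
MindSpeed's own APIs are not shown here. As a generic illustration of one common parallel strategy for partitioning a large model, the sketch below simulates column-parallel tensor parallelism (the Megatron-LM style of splitting a layer's weight matrix across devices) in a single process with plain Python lists. Each simulated "device" holds one column shard of the weights and computes its slice of the output independently; an all-gather then concatenates the slices. The function names are hypothetical, and a real system would run the shards on separate accelerators and use collective communication.

```python
def matmul(x, w):
    """Plain matrix product of x (m x k) and w (k x n), both lists of lists."""
    return [[sum(x[i][t] * w[t][j] for t in range(len(w))) for j in range(len(w[0]))]
            for i in range(len(x))]


def split_columns(w, parts):
    """Split w column-wise into `parts` equal shards, one per simulated device."""
    n = len(w[0])
    if n % parts != 0:
        raise ValueError("column count must be divisible by the number of devices")
    size = n // parts
    return [[row[p * size:(p + 1) * size] for row in w] for p in range(parts)]


def column_parallel_matmul(x, w, world_size):
    """Each device multiplies the full input by its weight shard; outputs are then gathered."""
    shards = split_columns(w, world_size)
    partial = [matmul(x, shard) for shard in shards]  # independent per-device work
    # Simulated all-gather: concatenate each row's partial outputs in device order.
    return [sum((p[i] for p in partial), []) for i in range(len(x))]


if __name__ == "__main__":
    x = [[1, 2], [3, 4]]
    w = [[1, 2, 3, 4], [5, 6, 7, 8]]
    print(column_parallel_matmul(x, w, world_size=2))  # [[11, 14, 17, 20], [23, 30, 37, 44]]
```

Because each device stores only 1/world_size of the weight matrix, layers too large for one accelerator's memory can still be trained; the price is the communication needed to gather (or, in row-parallel layers, sum) the partial results.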

In large-scale training, the team also found MindSpeed on Ascend hardware far more stable than other computing platforms, running uninterrupted for a week.

As a result, just one month later, Open-Sora Plan was officially launched and won wide recognition in the industry.

A "Black Myth: Wukong" scene generated by Open-Sora Plan rivals a blockbuster movie and stunned countless netizens.

Southeast University, for its part, has developed MT-GPT, a multimodal transportation large model built on Ascend computing power.

Deploying transportation large models had been very difficult because of data silos (different government departments each collect their own data), inconsistent data formats and standards, and heterogeneous multi-source traffic data.

To address these issues, the team designed a conceptual framework for a multimodal transportation large model called MT-GPT (Multimodal Transportation Generative Pre-trained Transformer), providing data-driven solutions to multi-faceted, multi-granularity decision-making problems in multimodal transportation systems.

Developing and training large models, however, demands substantial computing power.

To this end, the team chose to leverage Ascend AI capabilities to accelerate the development, training, tuning, and deployment of the transportation large model.

In the development phase, a Transformer large-model development suite combined a multi-source heterogeneous knowledge corpus with multimodal feature encoding to improve accuracy in understanding multimodal generative problems.

In the training phase, the Ascend MindSpeed distributed training acceleration suite provided multi-dimensional, multimodal acceleration algorithms for the transportation model.

In the tuning phase, the Ascend MindStudio full-process toolchain was used to fine-tune the model with traffic-domain knowledge.

In the deployment phase, the Ascend MindIE inference engine provides one-stop inference for the transportation model and supports cross-city migration as well as analysis, development, debugging, and tuning.

In short, Peking University's Open-Sora Plan is a migration project to reproduce Sora; because it is open source, it can empower developers worldwide to build applications for many scenarios.

Meanwhile, Southeast University's multimodal transportation model MT-GPT shows Ascend computing power delivering practical results, directly empowering urban transportation.

Together, these cases close the loop between industry, academia, and research.

These results show that the excellence and incubation centers can not only give universities fertile ground for academic research and scientific innovation but also cultivate large numbers of top AI talents, incubating world-leading research.

For instance, during the development of Open-Sora Plan, Professor Yuan Li had the Peking University students brainstorm daily with the Huawei Ascend team on code and algorithm development.

Feeling their way forward, many students on the Peking University team took part in high-quality research practice and showed great research creativity.

This team, with an average age of 23, has become a backbone force in advancing domestic AI video applications.

Along the way, the ranks of young people who have mastered the Kunpeng Ascend ecosystem keep growing.

Research at universities on domestic computing power and platforms thus draws on top intellectual talent while expanding Huawei's technology ecosystem and its applications.

What kind of innovation system should our country build?

With Huawei, a new paradigm of university-enterprise cooperation has officially set sail.

Huawei established its computing product line in 2019 and, in 2020, signed an intelligent-infrastructure cooperation project with the Ministry of Education, launching educational collaborations with 72 leading universities nationwide.

Even then, some Kunpeng/Ascend technical content had already been integrated into required courses at some universities.

Investing in universities, however, is a medium- to long-term effort: only if students and faculty get to know these technologies early can greater value be realized in the years ahead.

Huawei therefore plans to invest 1 billion yuan a year in the native ecosystem and talent for Kunpeng and Ascend. This strategy will give universities and developers richer resources and more room to grow. A plan to distribute 100,000 Kunpeng development boards and Ascend inference development boards is already under way, encouraging them to explore and apply Kunpeng and Ascend technologies in teaching experiments, competitions, and technological innovation.

Under this plan, faculty and students get hands-on access to the development boards and can use them to innovate and spark new ideas in both teaching and research experiments.

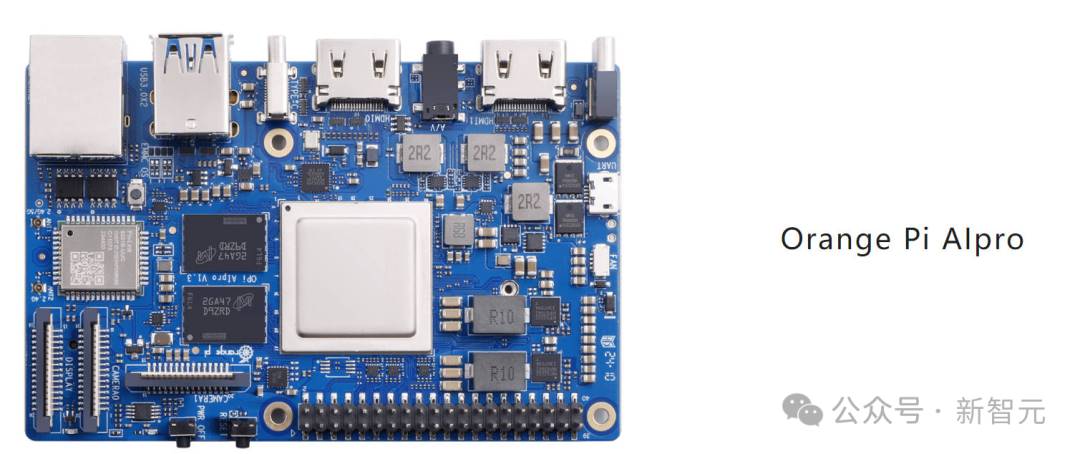

The OrangePi AIpro, a development board launched jointly by Orange Pi and Huawei Ascend, meets the needs of most AI algorithm prototyping and inference application development. It can be widely applied in AI edge computing, deep visual learning, drones, cloud computing, and other fields.

At the same time, our country's particular situation, facing technology blockades from abroad, means there is little time left: we must have an independent and controllable technology stack.

Native development is imperative for the future. Only "Made in China" truly fits China's path as a major country.

As localization becomes an unstoppable trend, domestic technology stacks such as Kunpeng/Ascend will spread into all kinds of IT infrastructure.

The launch of the excellence and incubation centers has also given the industry growing confidence.

After several years of incubation, researchers who have mastered domestic technology foundations can be expected to keep advancing the Kunpeng/Ascend technology route and produce enough research results to lead the world.
