Author: YBB Capital Researcher Zeke

I. The Novelty of Attention

Over the past year, application-layer narratives have failed to keep pace with the explosive growth of infrastructure, and the crypto space has gradually turned into a contest for attention. From Silly Dragon to Goat, from Pump.fun to Clanker, the pursuit of novelty has driven an intense race for attention. It began as the most clichéd way of monetizing eyeballs. It quickly evolved into platforms that unify those who seek attention and those who supply it, and now silicon-based entities have become the newest content providers. Among all the bizarre vehicles for Meme Coins, retail investors and VCs have finally reached a consensus: the AI Agent.

Attention is ultimately a zero-sum game, but speculation really can drive wild growth. In our previous article on UNI, we revisited the start of blockchain's last golden age, when DeFi's rapid growth grew out of the LP mining era launched by Compound Finance. The primitive on-chain game of that period was moving in and out of mining pools offering APYs in the thousands or even tens of thousands of percent, and those pools eventually collapsed. Yet the frenzied rush of gold miners left unprecedented liquidity on-chain, and DeFi ultimately moved beyond pure speculation into a mature track that serves users' financial needs in payments, trading, arbitrage, staking, and more. AI Agents are now going through a similarly wild phase, and we are exploring how crypto can integrate better with AI and ultimately lift the application layer to new heights.

II. How Agents Operate Autonomously

In our previous article, we briefly covered the origin of AI Memes, Truth Terminal, and our outlook on the future of AI Agents. This article first focuses on the AI Agent itself.

Let's start with the definition of an AI Agent. The term "Agent" is relatively old in the AI field but has never had a precise definition. It mainly emphasizes autonomy: any AI that can perceive its environment and react to it can be called an Agent. In today's usage, an AI Agent is closer to an intelligent entity, that is, a system built around a large model that mimics human decision-making. In academia, this kind of system is seen as the most promising path toward AGI (Artificial General Intelligence).

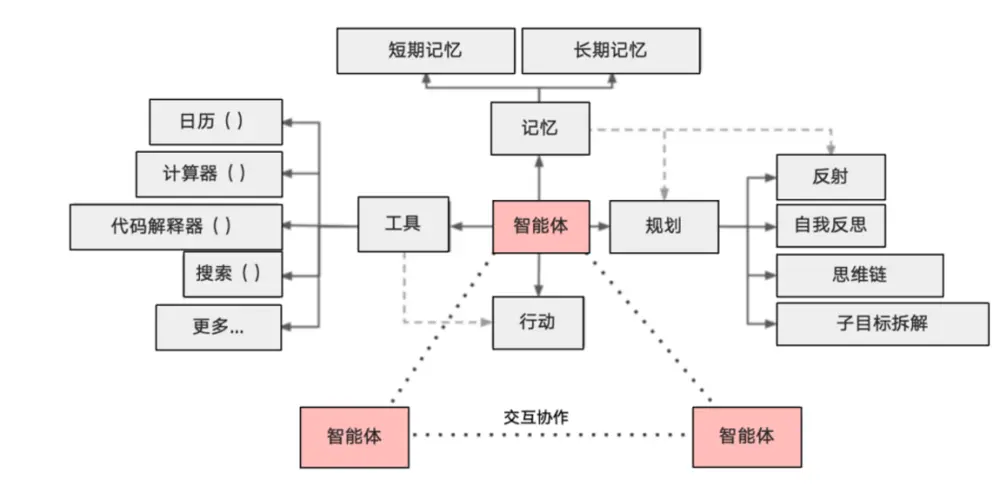

With early versions of GPT, we could clearly sense how human-like large models were, yet on many complex questions they could only give answers that merely sounded plausible. The fundamental reason is that the large models of that time worked on probability rather than causality, and they lacked human abilities such as using tools, memory, and planning. AI Agents fill these gaps. Summarized as a formula: AI Agent (intelligent entity) = LLM (large model) + Planning + Memory + Tools.
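To make the formula concrete, here is a minimal Python sketch of how the four terms might fit together. It is an illustration under stated assumptions, not how any particular agent framework works. The `Agent` class, its `plan` and `run` methods, the "tool_name: argument" step convention, and the `fake_llm` stub are all hypothetical. A real agent would call an actual model API where the stub sits.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical model interface: any function mapping a prompt string to a reply string.
LLM = Callable[[str], str]


@dataclass
class Agent:
    llm: LLM                                          # "LLM": the reasoning core
    tools: Dict[str, Callable[[str], str]]            # "Tools": name -> callable
    memory: List[str] = field(default_factory=list)   # "Memory": log of past steps

    def plan(self, goal: str) -> List[str]:
        """'Planning': ask the model to split the goal into steps, one per line."""
        reply = self.llm(f"Break this goal into steps, one per line: {goal}")
        return [line.strip() for line in reply.splitlines() if line.strip()]

    def run(self, goal: str) -> List[str]:
        results = []
        for step in self.plan(goal):
            # Steps written as "tool_name: argument" go to a registered tool;
            # any other step is answered by the model, with recent memory as context.
            name, sep, arg = step.partition(":")
            if sep and name.strip() in self.tools:
                out = self.tools[name.strip()](arg.strip())
            else:
                context = "\n".join(self.memory[-5:])
                out = self.llm(f"{context}\n{step}")
            self.memory.append(f"{step} -> {out}")    # remember what happened
            results.append(out)
        return results


# Stub model and stub tool, for illustration only (no real model or price feed is called).
def fake_llm(prompt: str) -> str:
    if prompt.startswith("Break this goal"):
        return "price: ETH\nsummarize the price"
    return "summary based on: " + prompt.splitlines()[0]


agent = Agent(llm=fake_llm, tools={"price": lambda sym: f"{sym} price (stub)"})
result = agent.run("report the ETH price")
print(result)
```

Keeping memory as a plain list of strings and feeding only the last few entries back as context is the simplest possible choice. A production agent would need a more deliberate memory strategy.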

Prompt-based large models are more like static individuals: they only come to life when we feed them input. The goal of an intelligent entity is to behave more like a real person. Currently, most intelligent entities in this space are fine-tuned from Meta's open-source Llama models (the 70B or 405B parameter versions) and are capable of memory and of calling API tools. In other respects they may still need human assistance or input, including for interaction and collaboration with other intelligent entities. This is why today's main intelligent entities still exist as KOLs on social networks. To become more human-like, intelligent entities need to integrate planning and action capabilities, and among these the chain of thought is a particularly crucial component.

III. Chain of Thought (CoT)

The concept of Chain of Thought (CoT) first appeared in the 2022 Google paper "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models," which pointed out that generating a series of intermediate reasoning steps can enhance a model's reasoning ability, helping it better understand and solve complex problems.

A typical CoT prompt consists of three parts: a clearly defined task description, the logical reasoning that supports the solution, and a worked example of a specific solution. This structure helps the model understand what the task requires and approach the answer gradually through logical reasoning, improving the efficiency and accuracy of problem-solving. CoT is particularly suited to tasks that require in-depth analysis and multi-step reasoning. On simple tasks it may offer no significant advantage, but on complex tasks its step-by-step strategy can markedly improve performance by lowering error rates and raising the quality of task completion.
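The three-part structure described above can be illustrated with a small prompt builder. This is a hedged sketch: `Demo` and `build_cot_prompt` are hypothetical names, and the arithmetic questions follow the grade-school word-problem style used in the 2022 paper. The function only assembles text. Sending the prompt to a model is left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Demo:
    """One worked example: a question, its intermediate reasoning steps, and the answer."""
    question: str
    steps: List[str]
    answer: str


def build_cot_prompt(task: str, demos: List[Demo], question: str) -> str:
    """Assemble a few-shot chain-of-thought prompt as plain text."""
    parts = [f"Task: {task}", ""]                     # part 1: task description
    for d in demos:                                   # parts 2 and 3: reasoning + worked solution
        parts.append(f"Q: {d.question}")
        parts.append(f"A: {' '.join(d.steps)} The answer is {d.answer}.")
        parts.append("")
    parts.append(f"Q: {question}")                    # the new problem; the model continues after "A:"
    parts.append("A:")
    return "\n".join(parts)


demo = Demo(
    question="Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
             "Each can has 3 tennis balls. How many tennis balls does he have now?",
    steps=["Roger started with 5 balls.",
           "2 cans of 3 tennis balls each is 6 tennis balls.",
           "5 + 6 = 11."],
    answer="11",
)

prompt = build_cot_prompt(
    task="Solve the arithmetic word problem. Reason step by step, then state the answer.",
    demos=[demo],
    question="A cafeteria had 23 apples. It used 20 to make lunch and bought 6 more. "
             "How many apples does it have?",
)
print(prompt)
```

Because the worked example spells out each intermediate step before the answer, the model is nudged to produce the same kind of step-by-step reasoning for the new question rather than jumping straight to a number.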

CoT plays a crucial role in building AI Agents. An Agent needs to understand the information it receives and make reasonable decisions based on it. CoT provides an orderly way of thinking that helps the Agent process and analyze its inputs and turn the results of that analysis into concrete action plans. Breaking a task into smaller steps lets the Agent consider each decision point carefully and reduces errors caused by information overload, which improves both the reliability and the efficiency of its decisions. It also makes the decision-making process more transparent, so the Agent's behavior becomes more predictable and traceable, and users can better understand the basis for its decisions. As the Agent interacts with its environment, CoT also lets it keep learning new information and adjusting its behavioral strategy.

As an effective strategy, CoT not only strengthens the reasoning ability of large language models but also plays an important role in building smarter, more reliable AI Agents. With CoT, researchers and developers can build intelligent systems that adapt better to complex environments and operate with a high degree of autonomy. In practice, its advantages are most visible on complex tasks. Decomposing a task into a series of small steps improves accuracy as well as the model's interpretability and controllability. It lowers the chance of wrong decisions caused by excessive or overly complex information, and it makes the entire solution easier to trace and verify.

The core function of CoT is to integrate planning, action, and observation, bridging the gap between reasoning and action. This mode of thinking lets an AI Agent anticipate potential anomalies and prepare effective countermeasures. It also lets the Agent accumulate new information while interacting with the external environment, which both validates its earlier predictions and provides a new basis for further reasoning. CoT acts like an engine of precision and stability, helping AI Agents stay efficient in complex environments.
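The plan, act, observe cycle described here is close to what is often called the ReAct pattern, in which the model's reasoning steps alternate with tool calls and each tool result is fed back as an observation. The sketch below is illustrative only. It assumes a hypothetical text protocol (`Action: tool[arg]` and `Final: answer` lines) and uses a hand-written `scripted_policy` in place of a real model. The tool names and balances are made-up stubs.

```python
import re
from typing import Callable, Dict, List

# Matches lines such as "Action: balance[alice]" (hypothetical action syntax).
ACTION_RE = re.compile(r"^Action: (\w+)\[(.*)\]$")


def react_loop(policy: Callable[[List[str]], str],
               tools: Dict[str, Callable[[str], str]],
               question: str,
               max_steps: int = 5) -> str:
    """Alternate reasoning (policy output) with actions (tool calls) and observations."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        reply = policy(transcript)          # plan: a thought plus an action or a final answer
        transcript.extend(reply.splitlines())
        last = transcript[-1]
        if last.startswith("Final: "):
            return last[len("Final: "):]
        match = ACTION_RE.match(last)
        if match is None:
            transcript.append("Observation: could not parse the action")
            continue
        name, arg = match.groups()
        tool = tools.get(name)
        # act, then observe: the result becomes new evidence for the next step
        obs = tool(arg) if tool else f"unknown tool '{name}'"
        transcript.append(f"Observation: {obs}")
    return "no answer within max_steps"


def scripted_policy(transcript: List[str]) -> str:
    """Stand-in for a model: picks the next step from the observations seen so far."""
    obs = [line.split(": ", 1)[1] for line in transcript if line.startswith("Observation: ")]
    if not obs:
        return "Thought: I need alice's balance first.\nAction: balance[alice]"
    if len(obs) == 1:
        return f"Thought: Check whether {obs[0]} covers 40.\nAction: can_afford[{obs[0]},40]"
    return f"Thought: The check is done.\nFinal: {obs[-1]}"


def make_tools(balances: Dict[str, int]) -> Dict[str, Callable[[str], str]]:
    """Stub tools over an in-memory balance table (made-up data)."""
    def can_afford(arg: str) -> str:
        have, need = (int(x) for x in arg.split(","))
        return "yes" if have >= need else "no"
    return {"balance": lambda who: str(balances.get(who, 0)), "can_afford": can_afford}


answer = react_loop(scripted_policy, make_tools({"alice": 100}), "Can alice pay 40?")
print(answer)
```

A failed parse or an unknown tool is not treated as a crash. It is written back into the transcript as an observation so that the next reasoning step can react to it, and `max_steps` bounds the loop in case the model never reaches an answer.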

IV. The Right Pseudo-Demand

Which parts of the AI technology stack should crypto integrate with? In last year's article, I argued that decentralizing computing power and data is a key step toward helping small businesses and individual developers cut costs. This year, Coinbase's detailed breakdown of the Crypto x AI sector offers a finer-grained classification:

(1) Computing Layer (focused on providing GPU resources for AI developers);

(2) Data Layer (supporting decentralized access, orchestration, and validation of AI data pipelines);

(3) Middleware Layer (platforms or networks supporting the development, deployment, and hosting of AI models or agents);

(4) Application Layer (user-facing products utilizing on-chain AI mechanisms, whether B2B or B2C).

Each of these four layers has a grand vision, and their goals can be summarized as resisting the dominance of Silicon Valley giants in the next era of the internet. As I asked last year, do we really have to accept Silicon Valley giants' exclusive control over computing power and data? Under their monopoly, closed-source large models are black boxes. Since science is humanity's most revered religion today, a significant portion of people will take every statement made by future large models as truth. But how can that truth be verified? In the vision of Silicon Valley giants, agents will ultimately hold unimaginable permissions, such as payment rights over your wallet and the right to use your terminals. How can we be sure that the people behind them harbor no malicious intent?

Decentralization is the only answer, but we also need to think realistically about how many people will actually pay for these grand visions. In the past, we could ignore the need for a closed commercial loop and use tokens to paper over the gaps left by idealism. The current environment, however, is very harsh, and Crypto x AI has to be designed with reality in mind. For example, how can the computing layer balance supply and demand despite performance loss and instability, and still compete with centralized clouds? How many real users will data layer projects have, how will they verify the authenticity and validity of the data they provide, and what kind of clients actually need that data? The same questions apply to the other two layers. In this era, we do not need so many seemingly correct pseudo-demands.

V. SocialFi Has Emerged from Memes

As I mentioned in the first section, Memes have rapidly evolved into a form of SocialFi that fits Web3. Friend.tech fired the first shot in this round of social applications but unfortunately failed because of hasty token design. Pump.fun validated the feasibility of pure platformization: it creates no tokens and sets no rules of its own. By unifying attention seekers and attention providers, it lets users post memes, live-stream, issue tokens, leave comments, and trade freely, while Pump.fun only charges a service fee. This is essentially the same attention-economy model as today's social media such as YouTube and Instagram, except that the party being charged is different, and in terms of gameplay Pump.fun is more Web3-native.

Base's Clanker is the all-rounder. It benefits from an integrated ecosystem that Base has built itself, with Base's own social Dapp as support, forming a complete internal closed loop. Agent Memes are the 2.0 form of Meme Coins: people are always chasing novelty, and Pump.fun happens to be at the forefront of that trend. Judging by the trend, it is only a matter of time before silicon-based entities replace the crude memes of carbon-based entities.

I have mentioned Base countless times, each time for a different reason. Looking at the timeline, Base has never been a first mover, yet it always ends up a winner.

VI. What Else Can Agents Be?

From a pragmatic standpoint, agents are unlikely to be decentralized for a long time to come. Judging from how agents are built in the traditional AI field, decentralization is not simply a matter of open-sourcing the reasoning process: agents need access to various APIs to retrieve Web2 content, and their operating costs are quite high. The design of chains of thought and the collaboration between multiple agents usually still rely on a human as mediator. We will go through a long transition period before a suitable form of integration emerges, perhaps something like UNI. Still, as in the previous article, I believe agents will have a significant impact on our industry, much like centralized exchanges (CEXs) in our field: ideologically incorrect, but very important.

Last month, Stanford and Microsoft released an overview paper on AI Agents that describes in detail the applications of agents in healthcare, intelligent machines, and virtual worlds. Its appendix already contains numerous experimental cases of GPT-4V acting as an agent in top-tier AAA games.

There is no need to rush the integration of agents with decentralization. I hope the first piece of the puzzle that agents complete is bottom-up capability and speed. We have so many narrative ruins and empty metaverses waiting to be filled, and at the appropriate stage we can consider how to make agents the next UNI.

