The AI track has become a core area in the current bull market.

Author: Viee, Core Contributor at Biteye

Editor: Crush, Core Contributor at Biteye

Community: @BiteyeCN

* The full text is approximately 2500 words, with an estimated reading time of 5 minutes

From the strong pairing of AI and DePIN in the first half of the year to the market-cap legends now being created by AI+Meme, AI Meme tokens such as $GOAT and $ACT have captured most of the market's attention. This shows that AI has become a core track of the current bull market.

If you are optimistic about the AI track, what can you do? Besides AI+Meme, what other AI sectors are worth paying attention to?

01 The AI+Meme Craze and Computing Power Infrastructure

Stay bullish on the explosive potential of AI+Meme

AI+Meme has recently swept through the whole market, from on-chain trading to centralized exchanges, and the trend is still going strong.

If you trade on-chain, look for tokens with new narratives, strong communities, and small market caps, as these tend to produce a significant wealth effect between the primary and secondary markets. If you do not trade on-chain, you can consider news arbitrage, trading on the announcement that a token will be listed on a major exchange such as Binance.

Look for high-quality projects in the AI infrastructure layer, especially computing power

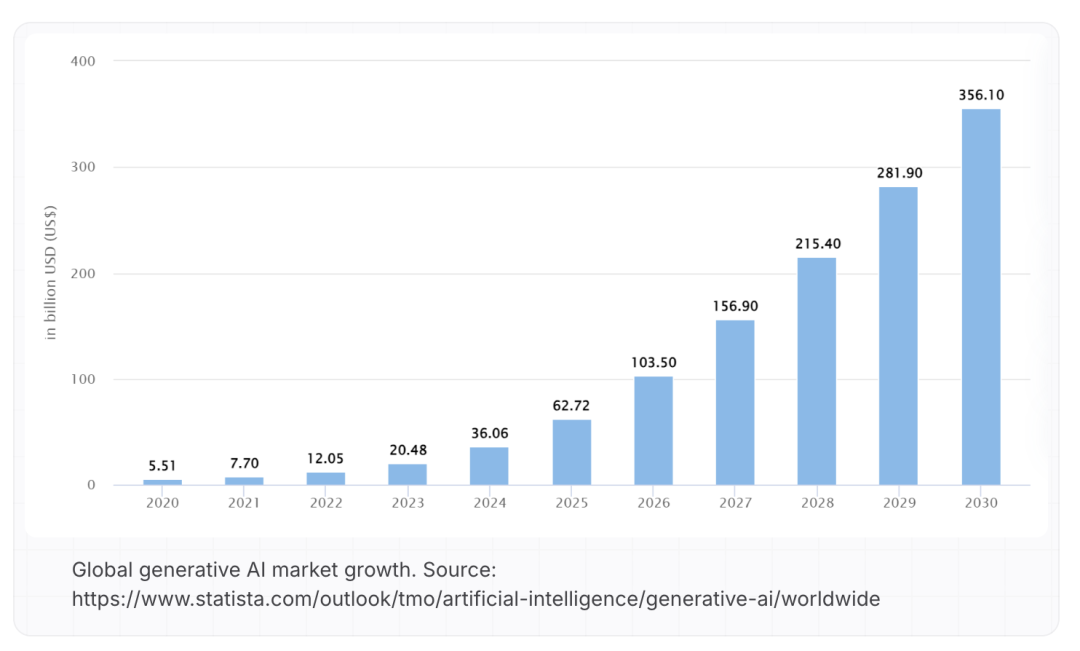

Computing power is a defining narrative for AI development, and cloud computing will raise the valuation floor. With the rise of technologies such as large language models (LLMs), demand for computing power and storage has grown almost exponentially.

AI development depends on strong computing capabilities, and this demand is a long-term, continuously growing trend rather than a short-term spike. Projects that provide computing infrastructure therefore address a fundamental need of AI development and have strong market appeal.

02 Why is Decentralized Computing So Important?

On the one hand, the explosive growth in AI computing demand comes with high costs

Data from OpenAI shows that since 2012, the amount of compute used to train the largest AI models has doubled roughly every 3 to 4 months, far outpacing Moore's Law, which implies a doubling roughly every two years.
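To put that gap in numbers, a doubling time of T months corresponds to an annual growth factor of 2^(12/T). The sketch below applies that formula; the 3.5-month input is simply the midpoint of the 3-to-4-month range above, and the 24-month period for Moore's Law is the commonly cited figure, both used here as illustrative assumptions.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """How many times a quantity multiplies over 12 months
    if it doubles every `doubling_months` months."""
    return 2 ** (12 / doubling_months)


# Illustrative inputs: midpoint of the "3 to 4 months" range,
# and the commonly cited ~24-month doubling period for Moore's Law.
ai_compute = annual_growth_factor(3.5)
moores_law = annual_growth_factor(24)

print(f"AI training compute: ~{ai_compute:.1f}x per year")
print(f"Moore's Law:         ~{moores_law:.2f}x per year")
```

With these inputs, training compute grows roughly tenfold per year (about 10.8x), versus about 1.41x per year under Moore's Law.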

As demand for high-end hardware such as GPUs has skyrocketed, the imbalance between supply and demand has pushed computing costs to record highs. For example, by some estimates a model like GPT-3 requires an initial GPU investment of nearly $800 million, with daily inference costs reaching as much as $700,000.
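A quick back-of-the-envelope calculation shows why ongoing inference, and not just upfront hardware, drives the cost problem. This sketch takes the $700,000-per-day and $800 million estimates at face value and assumes, as a simplification, that the daily cost stays flat for a full year.

```python
# Figures quoted above (industry estimates, not audited numbers).
UPFRONT_GPU_USD = 800_000_000
DAILY_INFERENCE_USD = 700_000

# Simplifying assumption: the daily inference cost is constant for 365 days.
annual_inference = DAILY_INFERENCE_USD * 365
ratio = annual_inference / UPFRONT_GPU_USD

print(f"Annualized inference cost: ${annual_inference:,}")
print(f"Share of upfront GPU investment: {ratio:.0%}")
```

Under that assumption, one year of inference comes to about $255.5 million, roughly a third of the upfront GPU outlay.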

On the other hand, traditional cloud computing cannot keep up with current demand for computing power

AI inference has become a core part of AI applications. It is estimated that 90% of the computing resources consumed over an AI model's lifecycle go to inference.

Traditional cloud platforms rely on centralized computing infrastructure, and as computing demand rises sharply, this model has clearly failed to keep pace with fast-changing market needs.

03 Project Analysis: Decentralized GPU Cloud Platform Heurist

@heurist_ai emerged against this backdrop, offering a new decentralized solution: a globally distributed GPU network that serves AI inference and other GPU-intensive tasks.

As a decentralized GPU cloud platform, Heurist is designed specifically for computing-intensive workloads like AI inference.

It follows the DePIN (Decentralized Physical Infrastructure Network) model: GPU owners can freely contribute their idle computing resources to the network, while users access those resources through a simple API/SDK to run demanding tasks such as AI models and ZK proofs.
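The contribute-and-consume model described above can be sketched as a simple node registry. This is a minimal illustration under assumed names (`NodeRegistry`, `join`, `leave`, `pick_node` and the node IDs are all hypothetical), not Heurist's actual node software or SDK: GPU owners add or withdraw nodes at will, and user tasks are routed only to nodes that are currently registered.

```python
import random
from typing import List, Optional


class NodeRegistry:
    """Hypothetical in-memory registry of GPU nodes (not a real Heurist API).
    Joining requires no approval step, mirroring permissionless participation."""

    def __init__(self) -> None:
        self._active: set = set()

    def join(self, node_id: str) -> None:
        """A GPU owner offers an idle GPU to the network."""
        self._active.add(node_id)

    def leave(self, node_id: str) -> None:
        """A GPU owner withdraws; discard() is a no-op if the node is already gone."""
        self._active.discard(node_id)

    def active_nodes(self) -> List[str]:
        return sorted(self._active)

    def pick_node(self, rng: random.Random) -> Optional[str]:
        """Pick a currently registered node for the next user task, or None if empty."""
        nodes = self.active_nodes()
        return rng.choice(nodes) if nodes else None


registry = NodeRegistry()
registry.join("alice-rtx4090")
registry.join("bob-a100")
registry.leave("alice-rtx4090")  # Alice takes her GPU offline
print(registry.active_nodes())  # only Bob's node remains
print(registry.pick_node(random.Random(0)))
```

Passing an explicit `random.Random` keeps node selection reproducible in tests; a real network would also weigh price, latency, and reliability.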

Unlike traditional GPU cloud platforms, Heurist spares users from managing virtual machines and scheduling resources themselves. It adopts a serverless computing architecture that simplifies how computing resources are used and managed.

04 AI Inference: Heurist's Core Advantage

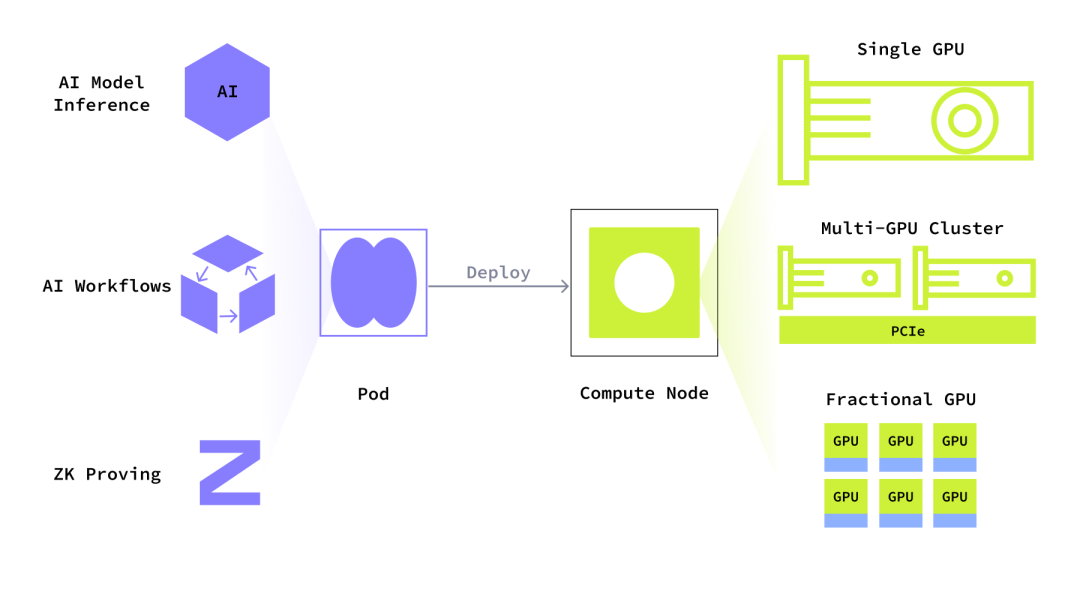

AI inference is the process of running a trained model on real-world inputs. Compared with training, inference requires fewer computing resources and can usually run efficiently on a single GPU or on one machine with several GPUs.
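A rough way to check the single-GPU claim is to estimate the memory needed just to hold a model's weights: parameter count multiplied by bytes per parameter. The sketch below assumes 16-bit weights (2 bytes each) and reserves 20% of GPU memory for activations and caches as a rule of thumb; the 7B/70B model sizes and 24 GB/80 GB GPU capacities are illustrative assumptions.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory (GB) to store model weights alone.
    bytes_per_param=2 corresponds to fp16/bf16 weights."""
    return num_params * bytes_per_param / 1e9


def fits_on_gpu(num_params: float, gpu_memory_gb: float,
                bytes_per_param: int = 2, headroom: float = 0.8) -> bool:
    """True if the weights fit within `headroom` of GPU memory, leaving
    the remainder for activations and caches (a rough rule of thumb)."""
    return weight_memory_gb(num_params, bytes_per_param) <= gpu_memory_gb * headroom


# Illustrative model sizes against a 24 GB consumer GPU and an 80 GB data-center GPU.
for params in (7e9, 70e9):
    for gpu_gb in (24, 80):
        print(f"{params / 1e9:.0f}B params on {gpu_gb} GB GPU:",
              fits_on_gpu(params, gpu_gb))
```

Under these assumptions, a 7B-parameter model (about 14 GB of weights) fits on a single 24 GB card, while a 70B model (about 140 GB) needs several GPUs in one machine or lower-precision weights.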

Heurist is built around this property: its globally distributed GPU network schedules computing resources efficiently so that AI inference tasks complete quickly.

Beyond AI inference, Heurist also supports training small language models, fine-tuning models, and other compute-intensive workloads. Because these tasks do not require heavy communication between nodes, Heurist can allocate resources more flexibly and economically, keeping resource utilization high.
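Because each of these jobs runs on a single node without talking to other nodes, scheduling reduces to choosing one suitable GPU per job. The sketch below is a hypothetical greedy scheduler, not Heurist's actual algorithm: it sends each job to the least-loaded node that has enough GPU memory. The `Node` and `Job` classes, node names, and memory figures are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str
    memory_gb: float
    queued: List[str] = field(default_factory=list)  # job ids assigned to this node


@dataclass
class Job:
    job_id: str
    memory_gb: float  # GPU memory the job needs on a single node


def schedule(jobs: List[Job], nodes: List[Node]) -> Dict[str, str]:
    """Greedily assign each independent job to the least-loaded node with
    enough memory. Jobs never span nodes, so no inter-node traffic is needed."""
    assignment: Dict[str, str] = {}
    for job in jobs:
        candidates = [n for n in nodes if n.memory_gb >= job.memory_gb]
        if not candidates:
            assignment[job.job_id] = "unschedulable"
            continue
        # min() returns the first node among ties, so ordering is deterministic.
        target = min(candidates, key=lambda n: len(n.queued))
        target.queued.append(job.job_id)
        assignment[job.job_id] = target.name
    return assignment


nodes = [Node("gpu-a", 24), Node("gpu-b", 24), Node("gpu-c", 80)]
jobs = [Job("img-1", 10), Job("llm-1", 40), Job("img-2", 10), Job("img-3", 10)]
print(schedule(jobs, nodes))
```

Here the 40 GB job can only land on the 80 GB node, while the small image jobs spread across whichever nodes are least busy. A production scheduler would also account for node reliability, latency, and pricing.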

Heurist's architecture follows a Platform-as-a-Service (PaaS) model, so users and developers do not have to manage complex infrastructure. They can focus on deploying and optimizing AI models without worrying about managing or scaling the underlying resources.

05 Core Features of Heurist

Heurist offers a range of powerful features designed to meet the needs of different users:

Serverless AI API: Users can run over 20 fine-tuned image generation models and large language models (LLMs) with just a few lines of code, significantly lowering the technical barrier.

Elastic Scaling: The platform dynamically adjusts computing resources based on user demand, ensuring stable service even during peak times.

Permissionless Mining: GPU owners can join or leave mining at any time, which attracts many owners of high-performance GPUs to participate.

Free AI Applications: Heurist offers various free applications, including image generation, chat assistants, and search engines, allowing ordinary users to directly experience AI technology.
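The elastic scaling feature listed above is often driven by a simple proportional rule: size the worker pool to the current request queue, within fixed bounds. The sketch below illustrates that general idea; the per-worker capacity of 8 requests and the 1 to 100 worker bounds are assumed values, and this is not a description of Heurist's own autoscaler.

```python
import math


def desired_workers(queued_requests: int, per_worker_capacity: int,
                    min_workers: int = 1, max_workers: int = 100) -> int:
    """Workers needed to serve the queue, clamped to [min_workers, max_workers]."""
    needed = math.ceil(queued_requests / per_worker_capacity)
    return max(min_workers, min(max_workers, needed))


# Assumed: each GPU worker can handle 8 queued requests at a time.
for load in (0, 20, 500, 5000):
    print(load, "requests ->", desired_workers(load, per_worker_capacity=8), "workers")
```

Keeping a minimum of one worker avoids cold starts when traffic returns, while the upper bound caps spending during extreme peaks.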

06 Heurist's Free AI Applications

The Heurist team has released several free AI applications suitable for everyday use by ordinary users. Here are some specific applications:

Heurist Imagine: A powerful AI image generator that allows users to easily create artwork without any design background. 🔗 https://imagine.heurist.ai/

Pondera: An intelligent chat assistant that provides a natural and smooth conversation experience, enabling users to easily obtain information or solve problems. 🔗 https://pondera.heurist.ai/

Heurist Search: An efficient AI search engine that helps users quickly find the information they need, improving work efficiency. 🔗 https://search.heurist.ai/

07 Latest Developments of Heurist

Heurist recently completed a $2 million funding round, with investors including well-known institutions like Amber Group, providing a solid foundation for its future development.

Additionally, Heurist is about to hold its token generation event (TGE) and plans to partner with OKX Wallet on an AIGC NFT minting event. The event will distribute 100,000 ZK tokens in rewards to 1,000 participants, creating more opportunities for community engagement.

Event link: https://app.galxe.com/quest/OKXWEB3/GC1JjtVfaM

Computing power is the core driver of AI development, and decentralized computing projects that supply it occupy a commanding position in the industry. They not only meet the growing demand for AI computing power but, thanks to the stability of their infrastructure and their market potential, have also become sought-after targets for capital.

As Heurist continues to expand its ecosystem and launch new features, we have reason to believe that decentralized AI computing platforms will play a significant role in the global AI industry.

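Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page infringes your rights, please send proof of rights and proof of identity by email to support@aicoin.com, and the platform's staff will review it.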