If we come to view AI models the way we view social media algorithms, we will be in serious trouble.

Written by: @lukedelphi

Compiled by: zhouzhou, BlockBeats

Editor’s note: As AI's influence in the crypto space has grown, the market has begun to focus on whether AI can be verified. In this article, several experts working in crypto and AI analyze how decentralization, blockchain, and zero-knowledge proofs can address the risk of AI models being misused. They also explore emerging directions such as verifiable inference, closed-source models, and inference on edge devices.

The following is the original content (edited for readability):

We recently recorded a roundtable for Delphi Digital's monthly AI event, inviting four founders working at the intersection of crypto and AI to discuss verifiable AI. Here are some key takeaways.

Guests: colingagich, ryanmcnutty33, immorriv, and Iridium Eagleemy.

In the future, AI models will become a form of soft power: the more widely and centrally they are used in economic applications, the greater the opportunity for misuse. Whether or not model outputs are actually manipulated, the mere perception that they could be is already damaging.

If we come to view AI models the way we view social media algorithms, we will be in serious trouble. Decentralization, blockchain, and verifiability are key to preventing this. Because AI is essentially a black box, we need ways to make the AI process provable or verifiable, so that we can be sure it has not been tampered with.

This is precisely the problem that verifiable inference aims to solve. Although the guests agreed on the problem, they took different approaches to solving it.

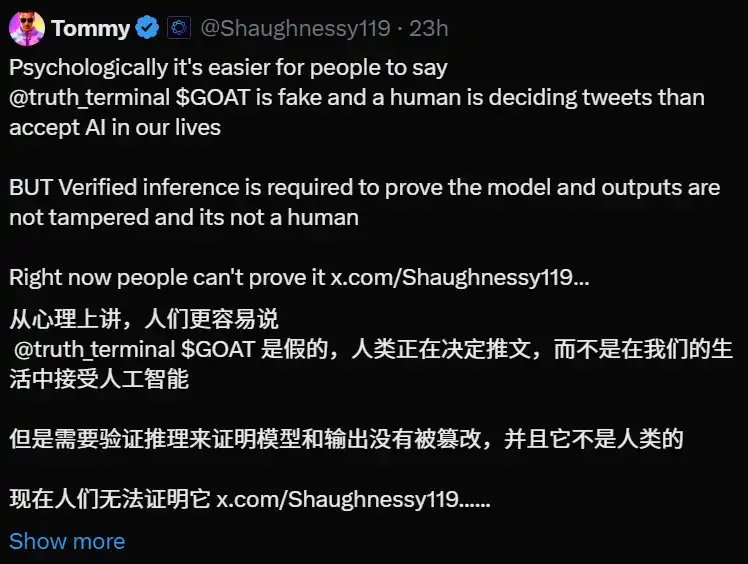

More specifically, verifiable inference means three things: my question or input has not been tampered with; the model being run is the one that was committed to; and the output is delivered as is, without modification. This definition actually comes from @Shaughnessy119, but I like its simplicity.
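To make those three properties concrete, here is a minimal Python sketch that uses SHA-256 hashes as commitments and a hypothetical `InferenceRecord` that a provider would publish alongside each response (both the record and the function names are illustrative, not any project's API). It only checks that the record is consistent with what the user sent, the model they expected, and the output they received. It does not prove that the committed model actually produced that output from that input; that binding is what zero-knowledge proofs or TEE attestation would have to supply.

```python
import hashlib
from dataclasses import dataclass


def commit(data: bytes) -> str:
    """SHA-256 hex digest, used here as a simple binding commitment."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class InferenceRecord:
    """Hypothetical record a provider publishes with each response."""
    input_hash: str
    model_hash: str
    output_hash: str


def check_record(record: InferenceRecord, sent_input: bytes,
                 committed_model_hash: str, received_output: bytes) -> dict:
    """Compare the record against what the user actually sent and received.

    This checks consistency only; it does NOT show that the committed model
    really produced this output from this input.
    """
    return {
        "input_untampered": record.input_hash == commit(sent_input),
        "model_as_committed": record.model_hash == committed_model_hash,
        "output_unmodified": record.output_hash == commit(received_output),
    }


if __name__ == "__main__":
    weights = b"stand-in for serialized model weights"
    model_hash = commit(weights)  # published ahead of time by the provider
    prompt, answer = b"Allocate 100 USDC", b"60% ETH, 40% BTC"
    record = InferenceRecord(commit(prompt), model_hash, commit(answer))

    print(check_record(record, prompt, model_hash, answer))        # all checks pass
    print(check_record(record, prompt, model_hash, b"100% MEME"))  # output was altered
```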

This would be very helpful in cases like the current Truth Terminal situation.

Using zero-knowledge proofs to verify model outputs is undoubtedly the most secure approach. However, it comes with trade-offs, increasing computational cost by 100x to 1000x. In addition, not everything translates easily into circuits, so some functions (such as sigmoid) must be approximated, which can introduce floating-point approximation error.
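ZK circuits operate on integers in a finite field rather than on floats, so one common workaround is to quantize values to fixed-point and replace nonlinear functions with circuit-friendly pieces. The Python sketch below only simulates that kind of arithmetic outside any real proving system. It assumes 16 fractional bits and an illustrative piecewise-linear sigmoid whose slopes are powers of two, so each segment needs only a comparison, a shift, and an add, and then measures how far the result drifts from the exact sigmoid.

```python
import math

FRAC_BITS = 16
ONE = 1 << FRAC_BITS  # 1.0 in fixed-point (assumed 16 fractional bits)


def to_fixed(x: float) -> int:
    """Quantize a float to a signed fixed-point integer."""
    return round(x * ONE)


def from_fixed(q: int) -> float:
    """Convert a fixed-point integer back to a float."""
    return q / ONE


def sigmoid_fixed(q: int) -> int:
    """Illustrative piecewise-linear sigmoid using only integer compares, shifts, and adds."""
    a = abs(q)
    if a >= 5 * ONE:
        y = ONE                               # saturate to 1.0
    elif a >= to_fixed(2.375):
        y = (a >> 5) + to_fixed(0.84375)      # slope 1/32
    elif a >= ONE:
        y = (a >> 3) + to_fixed(0.625)        # slope 1/8
    else:
        y = (a >> 2) + to_fixed(0.5)          # slope 1/4
    # Symmetry: sigmoid(-x) = 1 - sigmoid(x).
    return y if q >= 0 else ONE - y


def max_abs_error(lo: float = -8.0, hi: float = 8.0, steps: int = 1601) -> float:
    """Worst-case gap between the fixed-point approximation and the exact sigmoid."""
    worst = 0.0
    for i in range(steps):
        x = lo + (hi - lo) * i / (steps - 1)
        exact = 1.0 / (1.0 + math.exp(-x))
        approx = from_fixed(sigmoid_fixed(to_fixed(x)))
        worst = max(worst, abs(exact - approx))
    return worst


if __name__ == "__main__":
    print(f"max |error| on [-8, 8]: {max_abs_error():.4f}")
```

Almost all of the error here comes from the piecewise approximation rather than from fixed-point rounding; more segments or a higher-degree polynomial would typically shrink it, at the cost of more constraints in a real circuit.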

As for computational overhead, many teams are pushing state-of-the-art ZK techniques to reduce it significantly. And while large language models are massive, most financial use cases, such as capital allocation models, may involve relatively small models, in which case the overhead becomes negligible. Trusted execution environments (TEEs) suit applications that can accept somewhat weaker security guarantees but are more sensitive to cost or model size.
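A back-of-envelope calculation shows why model size matters so much here. This Python sketch assumes the common rule of thumb that a dense forward pass costs about 2 FLOPs per parameter, applies the 100x to 1000x overhead range mentioned earlier, and compares a hypothetical 50,000-parameter allocation model with a 7-billion-parameter LLM. Both sizes are illustrative choices, not figures from the discussion, and real proving costs depend heavily on the proof system.

```python
# Rule-of-thumb assumption: a dense forward pass costs about 2 FLOPs per
# parameter (one multiply and one add per weight).
FLOPS_PER_PARAM = 2

SMALL_ALLOCATOR = 50_000      # hypothetical small capital-allocation model
LLM_7B = 7_000_000_000        # hypothetical 7B-parameter language model


def forward_flops(num_params: int) -> int:
    """Approximate FLOPs for one unproven forward pass (one token for an LLM)."""
    return FLOPS_PER_PARAM * num_params


def proving_flops(num_params: int, overhead: int) -> int:
    """Approximate work to prove that forward pass, given a ZK overhead multiplier."""
    return forward_flops(num_params) * overhead


if __name__ == "__main__":
    for overhead in (100, 1000):
        print(f"{overhead}x overhead: "
              f"allocator proof ~{proving_flops(SMALL_ALLOCATOR, overhead):.1e} FLOPs, "
              f"LLM proof ~{proving_flops(LLM_7B, overhead):.1e} FLOPs per token")
    print(f"unproven LLM forward pass ~{forward_flops(LLM_7B):.1e} FLOPs per token")
```

Under these assumptions, proving the small model even at 1000x overhead takes less work than a single unproven forward pass of the 7B model.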

Travis from Ambient explained how they plan to verify inference on a very large sharded model. Rather than a general-purpose solution, it is tailored to one specific model. Because Ambient is still in stealth, the details are confidential for now, so keep an eye out for their upcoming paper.

The guests raised some objections to the optimistic approach, in which no proof is generated at inference time; instead, the nodes performing inference stake tokens and are slashed if a challenge shows they misbehaved.
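The optimistic flow can be sketched in a few lines of Python. Everything here is hypothetical: `toy_model` stands in for sampled inference, `Claim` for what a node would post, and `challenge` for a dispute that re-executes the computation and moves the node's stake to the challenger on a mismatch. Re-execution only proves anything because the claim pins the random seed; without it, an honest node and a checker could legitimately get different outputs.

```python
import random
from dataclasses import dataclass


def toy_model(prompt: str, seed: int) -> str:
    """Stand-in for sampled inference: the output depends on the prompt and the seed."""
    rng = random.Random(f"{prompt}|{seed}")
    return " ".join(rng.choice(["buy", "sell", "hold"]) for _ in range(5))


@dataclass
class Claim:
    """What a node posts: the input, the seed it sampled with, its output, and its stake."""
    node: str
    prompt: str
    seed: int
    output: str
    stake: int


def challenge(claim: Claim, stakes: dict, challenger: str) -> bool:
    """Re-execute with the claimed seed; on mismatch, slash the stake to the challenger.

    Returns True if the claim is upheld.
    """
    if toy_model(claim.prompt, claim.seed) == claim.output:
        return True
    stakes[claim.node] -= claim.stake
    stakes[challenger] = stakes.get(challenger, 0) + claim.stake
    return False


if __name__ == "__main__":
    stakes = {"node_a": 1_000}
    honest = Claim("node_a", "rebalance?", 42, toy_model("rebalance?", 42), 1_000)
    forged = Claim("node_a", "rebalance?", 42, "sell everything now", 1_000)
    print(challenge(honest, stakes, "watcher"), stakes)  # upheld, stake untouched
    print(challenge(forged, stakes, "watcher"), stakes)  # slashed to the watcher
```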

First, this approach requires deterministic outputs, which means making some compromises, such as having every node use the same random seed. Second, if $10 billion is at risk, how much stake is enough to guarantee economic security? That question went unanswered, which underscores the importance of letting users choose whether they are willing to pay the full cost of proofs.
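The staking question can be framed with a deliberately simplified model, which is an assumption for illustration and not something the guests proposed: a node that cheats gains G if the fraud goes unchallenged, and with probability p it is caught, the fraud is reverted, and its stake S is slashed. Cheating stops paying when (1 - p)·G ≤ p·S, i.e. S ≥ (1 - p)/p · G. The Python sketch below computes that minimum stake for the $10 billion example.

```python
def min_stake(value_at_risk: float, p_caught: float) -> float:
    """Smallest stake S such that (1 - p) * G <= p * S.

    Simplified model (assumption): an uncaught cheat keeps G; a caught cheat
    is reverted and loses S. Ignores collusion, challenger costs, and delays.
    """
    if not 0 < p_caught <= 1:
        raise ValueError("p_caught must be in (0, 1]")
    return (1 - p_caught) / p_caught * value_at_risk


if __name__ == "__main__":
    VALUE_AT_RISK = 10e9  # the $10 billion example from the discussion
    for p in (0.5, 0.9, 0.99):
        print(f"p_caught={p}: stake >= ${min_stake(VALUE_AT_RISK, p):,.0f}")
```

Even in this toy model, the answer hinges on a detection probability that is hard to pin down in practice, which helps explain why the question resists a clean answer.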

For closed-source models, Inference Labs and Aizel Network can provide support. This sparked some philosophical debate. One view is that trustlessness requires knowing which model is being run, so private models are undesirable and at odds with verifiable AI. On the other hand, in some cases knowing a model's internal workings can enable people to game it, and sometimes the only way to prevent that is to keep the model closed-source. If a closed-source model has been verified 100 or 1,000 times and remains reliable, that can be enough to establish confidence even without access to its weights.
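One way to quantify "verified 100 or 1,000 times" is the standard zero-failure binomial bound: if n independent checks all pass, the one-sided upper bound p_max on the model's failure rate solves (1 - p_max)^n = 1 - confidence, giving p_max = 1 - (1 - confidence)^(1/n), roughly 3/n at 95% confidence (the "rule of three"). This Python sketch assumes the checks are independent and indistinguishable from real traffic; a provider that can tell when it is being tested would defeat the bound.

```python
def failure_rate_upper_bound(n_checks: int, confidence: float = 0.95) -> float:
    """Upper bound on the per-query failure rate after n_checks passes and zero failures.

    Solves (1 - p_max) ** n_checks == 1 - confidence for p_max.
    Assumes checks are independent and look like ordinary traffic to the provider.
    """
    if n_checks < 1:
        raise ValueError("need at least one check")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be in (0, 1)")
    return 1 - (1 - confidence) ** (1 / n_checks)


if __name__ == "__main__":
    for n in (100, 1000):
        bound = failure_rate_upper_bound(n)
        print(f"{n} clean checks: failure rate < {bound:.2%} (rule of three: {3 / n:.2%})")
```

With 100 clean checks the bound is about 3%, and with 1,000 it is about 0.3%, so confidence grows roughly in proportion to the number of verifications.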

Finally, we discussed whether AI inference will move to edge devices (such as smartphones and laptops) because of privacy, latency, and bandwidth concerns. The consensus was that this shift is coming, but it will take several more iterations.

For large models, storage, compute, and network requirements are all obstacles. But models are getting smaller and devices more powerful, so the shift does appear to be underway, just not yet complete. In the meantime, if we can keep the inference process private, we can still capture many of the benefits of local inference without running into its failure modes.
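The "models are getting smaller" side of this shift is easy to quantify for the weights alone: memory is roughly parameters × bits per parameter / 8 bytes. This Python sketch assumes a hypothetical 8 GB device budget and ignores activations, KV cache, and the operating system's own memory use, so real requirements are higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Gigabytes needed to hold the weights alone (no activations or KV cache)."""
    return num_params * bits_per_param / 8 / 1e9


DEVICE_BUDGET_GB = 8  # hypothetical phone/laptop memory budget

if __name__ == "__main__":
    for params in (1e9, 7e9, 70e9):
        for bits in (16, 4):
            need = weight_memory_gb(params, bits)
            verdict = "fits" if need <= DEVICE_BUDGET_GB else "too large"
            print(f"{params / 1e9:.0f}B params at {bits}-bit: {need:.1f} GB, {verdict}")
```

Under these assumptions, 4-bit quantization brings a 7B-parameter model within the device budget while a 70B model stays out of reach, which matches the view that the shift is happening but not yet complete.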
