Author: Geekcartel

As the narrative around AI continues to heat up, more and more attention is being focused on this sector. Geekcartel has conducted an in-depth analysis of the technical logic, application scenarios, and representative projects in the Web3-AI sector, providing you with a comprehensive view of the landscape and development trends in this field.

1. Web3-AI: Analysis of Technical Logic and Emerging Market Opportunities

1.1 The Fusion Logic of Web3 and AI: How to Define the Web3-AI Sector

Over the past year, the AI narrative has been exceptionally popular in the Web3 industry, and AI projects have proliferated. Many of these projects involve AI technology, but some use AI only in certain parts of their products, and their underlying token economics have no substantial connection to the AI products. Such projects are therefore excluded from this article's discussion of Web3-AI projects.

This article focuses on projects that use blockchain to solve production-relationship issues and AI to address productivity issues. These projects provide AI products while using Web3 economic models as tools for production relationships, so that the two complement each other. We categorize these projects as part of the Web3-AI sector. To help readers better understand the sector, Geekcartel will introduce the development process and challenges of AI, and then explain how combining Web3 and AI can address those challenges and create new application scenarios.

1.2 The Development Process and Challenges of AI: From Data Collection to Model Inference

AI is a technology that enables computers to simulate, extend, and enhance human intelligence. It allows computers to perform a variety of complex tasks, from language translation and image classification to facial recognition and autonomous driving, and it is changing the way we live and work.

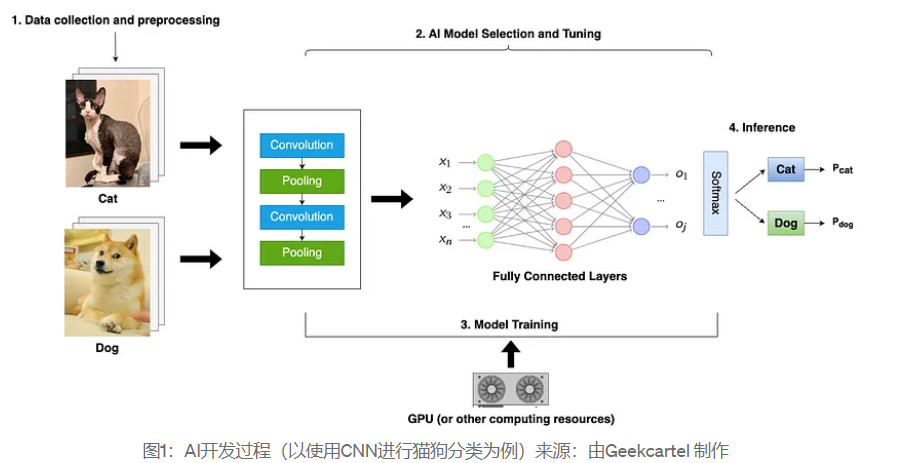

The process of developing an artificial intelligence model typically includes the following key steps: data collection and preprocessing, model selection and tuning, model training, and inference. As a simple example, to develop a model that classifies images of cats and dogs, you need to:

- Data Collection and Preprocessing: Collect a dataset containing images of cats and dogs, which can be done using public datasets or by collecting real data yourself. Then label each image with its category (cat or dog), ensuring the labels are accurate. Convert the images into a format that the model can recognize, and divide the dataset into training, validation, and test sets.

- Model Selection and Tuning: Choose an appropriate model, such as a Convolutional Neural Network (CNN), which is well-suited for image classification tasks. Tune the model parameters or architecture based on different needs. Generally, the network depth of the model can be adjusted according to the complexity of the AI task. In this simple classification example, a shallower network may suffice.

- Model Training: Use GPUs, TPUs, or high-performance computing clusters to train the model, with training time influenced by model complexity and computational power.

- Model Inference: The trained model file is usually referred to as model weights. The inference process involves using the trained model to predict or classify new data. During this process, the test set or new data can be used to evaluate the model's classification performance, typically using metrics such as accuracy, recall, and F1-score to assess the model's effectiveness.
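The dataset split and the evaluation metrics mentioned in the steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a training pipeline: the 80/10/10 split ratio, the function names, and the toy labels are assumptions chosen for the example.

```python
import random

def split_dataset(samples, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle samples and split them into training, validation, and test sets."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]

def classification_metrics(y_true, y_pred, positive="cat"):
    """Compute accuracy, recall, and F1-score, treating `positive` as the target class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "recall": recall, "f1": f1}

# Hypothetical labels, for illustration only.
y_true = ["cat", "cat", "dog", "dog", "cat"]
y_pred = ["cat", "dog", "dog", "cat", "cat"]
metrics = classification_metrics(y_true, y_pred)

# Stand-in for 100 labeled images.
train, val, test = split_dataset(range(100))
```

In practice the test set is held back until the end, so that the reported metrics reflect data the model has never seen during training or tuning.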

As shown in the figure, after data collection and preprocessing, model selection and tuning, and training, performing inference on the trained model using the test set will yield predicted values P (probability) for cats and dogs, indicating the model's probability of predicting whether an image is a cat or a dog.
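To make the predicted values P concrete, the sketch below turns raw model scores into class probabilities. It assumes the model's final layer emits one raw score (logit) per class and that softmax is used to normalize them, which is common for classifiers but is not stated in the text; the scores themselves are made up.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(logits)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

classes = ["cat", "dog"]
logits = [2.0, 0.5]  # hypothetical raw scores for one image
probs = softmax(logits)

# The predicted class is the one with the highest probability.
predicted = classes[probs.index(max(probs))]
```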

The trained AI model can be further integrated into various applications to perform different tasks. In this example, the cat-dog classification AI model can be integrated into a mobile application, allowing users to upload images of cats or dogs and receive classification results.

However, the centralized AI development process faces several issues in the following scenarios:

- User Privacy: In centralized scenarios, the AI development process is often opaque. Users' data may be taken without their knowledge and used for AI training.

- Data Source Acquisition: Small teams or individuals may be unable to obtain data for specific domains (such as medical data) because it is not openly available.

- Model Selection and Tuning: Small teams may struggle to access domain-specific model resources, or may incur significant costs for model tuning.

- Computational Power Acquisition: For individual developers and small teams, the high costs of purchasing GPUs and renting cloud computing power can pose a significant economic burden.

- AI Asset Income: Data labeling workers often cannot earn income commensurate with their efforts, and AI developers find it difficult to match their research results with buyers who need them.

The challenges present in centralized AI scenarios can be addressed through integration with Web3. As a new type of production relationship, Web3 is naturally suited to AI, which represents a new form of productivity, so the two can advance technology and production capacity together.

1.3 The Synergistic Effect of Web3 and AI: Role Transformation and Innovative Applications

The combination of Web3 and AI can enhance user sovereignty, providing users with an open AI collaboration platform that transforms them from AI users in the Web2 era into participants, creating AI that everyone can own. Additionally, the integration of the Web3 world with AI technology can spark more innovative application scenarios and use cases.

Based on Web3 technology, the development and application of AI will usher in a brand new collaborative economic system. People's data privacy can be protected, and a crowdsourced data model can promote the advancement of AI models. Numerous open-source AI resources will be available for users, and shared computational power can be acquired at lower costs. With the help of decentralized collaborative crowdsourcing mechanisms and open AI markets, a fair income distribution system can be realized, thereby incentivizing more people to drive the advancement of AI technology.

In the Web3 scenario, AI can have a positive impact across multiple sectors. For example, AI models can be integrated into smart contracts to enhance work efficiency in various application scenarios, such as market analysis, security detection, social clustering, and more. Generative AI can allow users to experience the role of "artist," such as using AI technology to create their own NFTs, and can also create rich and diverse gaming scenarios and interesting interactive experiences in GameFi. A rich infrastructure provides a smooth development experience, allowing both AI experts and newcomers wanting to enter the AI field to find suitable entry points in this world.

2. Web3-AI Ecological Project Landscape and Architecture Interpretation

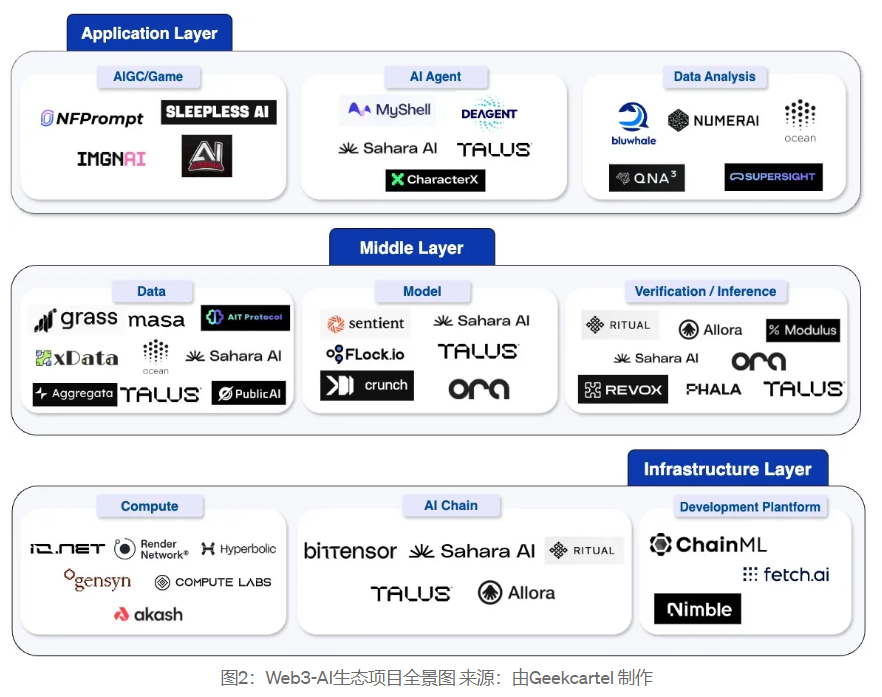

We primarily studied 41 projects in the Web3-AI sector and categorized these projects into different tiers. The logic for dividing each tier is illustrated in the figure above, including the infrastructure layer, middle layer, and application layer, with each layer further divided into different segments. In the next chapter, we will conduct an in-depth analysis of some representative projects.

The infrastructure layer encompasses the computing resources and technical architecture that support the entire AI lifecycle, while the middle layer includes data management, model development, and verification inference services that connect the infrastructure and applications. The application layer focuses on various applications and solutions directly aimed at users.

Infrastructure Layer:

The infrastructure layer is the foundation of the AI lifecycle. In this article, we classify computing power, AI Chain, and development platforms as part of the infrastructure layer. It is the support of these infrastructures that enables the training and inference of AI models, presenting powerful and practical AI applications to users.

- Decentralized Computing Network: It can provide distributed computing power for AI model training, ensuring efficient and economical utilization of computing resources. Some projects offer decentralized computing power markets where users can rent computing power at low costs or share computing power for profit, with representative projects like IO.NET and Hyperbolic. Additionally, some projects have introduced new models, such as Compute Labs, which proposes a tokenization protocol where users can purchase NFTs representing physical GPUs and participate in computing power leasing in various ways for profit.

- AI Chain: Utilizing blockchain as the foundation for the AI lifecycle enables seamless interaction between on-chain and off-chain AI resources, promoting the development of the industry ecosystem. The decentralized AI market on-chain can trade AI assets such as data, models, and agents, and provide AI development frameworks and supporting development tools, with representative projects like Sahara AI. AI Chain can also promote advancements in AI technology across different fields, such as Bittensor, which encourages competition among different AI types through innovative subnet incentive mechanisms.

- Development Platform: Some projects provide AI agent development platforms and facilitate the trading of AI agents, such as Fetch.ai and ChainML. One-stop tools help developers create, train, and deploy AI models more conveniently, with representative projects like Nimble. These infrastructures promote the widespread application of AI technology in the Web3 ecosystem.

Middle Layer:

This layer involves AI data, models, as well as inference and verification, achieving higher work efficiency through Web3 technology.

- Data: The quality and quantity of data are key factors affecting model training effectiveness. In the Web3 world, crowdsourced data and collaborative data processing can optimize resource utilization and reduce data costs. Users can have autonomy over their data and sell it under privacy protection to avoid data being stolen and profited from by unscrupulous businesses. For data demanders, these platforms provide a wide range of choices at extremely low costs. Representative projects like Grass utilize user bandwidth to scrape web data, while xData collects media information through user-friendly plugins and supports users in uploading tweet information.

Additionally, some platforms allow domain experts or ordinary users to perform data preprocessing tasks, such as image labeling and data classification. These tasks may require specialized knowledge for financial and legal data processing, and users can tokenize their skills to achieve collaborative crowdsourcing for data preprocessing. Representative projects like Sahara AI's AI market offer various data tasks across different fields, covering multi-domain data scenarios, while AIT Protocol uses human-machine collaboration for data labeling.

- Models: As previously mentioned in the AI development process, different types of requirements need to be matched with suitable models. Common models for image tasks include CNNs and GANs, while the YOLO series can be chosen for object detection tasks. For text-related tasks, common models include RNNs and Transformers, along with some specific or general large models. The model depth required for tasks of varying complexity also differs, and sometimes model tuning is necessary.

Some projects support users in providing different types of models or collaboratively training models through crowdsourcing. For example, Sentient allows users to place trusted model data in the storage layer and distribution layer for model optimization through modular design. Sahara AI provides development tools that come with advanced AI algorithms and computing frameworks, along with collaborative training capabilities.

- Inference and Verification: After training, the model generates model weight files that can be used for direct classification, prediction, or other specific tasks, a process known as inference. The inference process is usually accompanied by a verification mechanism to validate the source of the inference model, checking for correctness and malicious behavior. In Web3, inference can typically be integrated into smart contracts, calling models for inference. Common verification methods include ZKML, OPML, and TEE technologies. Representative projects like the ORA on-chain AI oracle (OAO) have introduced OPML as a verifiable layer for AI oracles, and their official website also mentions their research on ZKML and opp/ai (ZKML combined with OPML).
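The optimistic idea behind OPML can be illustrated with a toy example: a claimed inference result is assumed valid unless a challenger re-executes the model and shows a mismatch. The sketch below is only that idea in miniature. It is not ORA's protocol; the class, the function names, and the stand-in model are hypothetical, and real systems add challenge windows, dispute games, and economic penalties.

```python
import hashlib
import json

def toy_model(inputs):
    """Deterministic stand-in for an ML model (hypothetical)."""
    return [round(2 * value + 1, 6) for value in inputs]

def commit(value):
    """Hash a JSON-serializable value so it can be referenced compactly."""
    return hashlib.sha256(json.dumps(value, sort_keys=True).encode()).hexdigest()

class OptimisticClaim:
    """A claimed inference result that stays pending until someone challenges it."""

    def __init__(self, inputs, claimed_output):
        self.inputs = inputs
        self.output_commitment = commit(claimed_output)
        self.status = "pending"

    def challenge(self, model):
        """Re-run the model on the same inputs and compare against the commitment."""
        recomputed = commit(model(self.inputs))
        self.status = "upheld" if recomputed == self.output_commitment else "rejected"
        return self.status

honest = OptimisticClaim([1.0, 2.0], toy_model([1.0, 2.0]))
cheating = OptimisticClaim([1.0, 2.0], [0.0, 0.0])
```

Re-execution only works as a check when inference is deterministic, which is one reason verifiable inference is harder than it first appears.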

Application Layer:

This layer primarily consists of applications directly aimed at users, combining AI with Web3 to create more interesting and innovative use cases. This article mainly outlines projects in the areas of AIGC (AI-generated content), AI agents, and data analysis.

- AIGC: AIGC can be extended to NFT, gaming, and other sectors within Web3. Users can directly generate text, images, and audio through prompts (user-provided keywords), and even create custom gameplay in games based on their preferences. NFT projects like NFPrompt allow users to generate NFTs through AI for trading in the market; games like Sleepless enable users to shape the personality of virtual companions through dialogue to match their preferences.

- AI Agents: These are artificial intelligence systems capable of autonomously executing tasks and making decisions. AI agents typically possess capabilities for perception, reasoning, learning, and action, allowing them to perform complex tasks in various environments. Common AI agent tasks include language translation, language learning, and image-to-text conversion. In the Web3 scenario, agents can act as trading bots, create meme images, and conduct on-chain security checks. For instance, MyShell serves as an AI agent platform, offering various types of agents, including educational learning, virtual companions, and trading agents, along with user-friendly agent development tools that require no coding to build personalized agents.

- Data Analysis: By integrating AI technology with relevant databases, data analysis, judgment, and prediction can be achieved. In Web3, market data analysis and smart money dynamics can assist users in making investment decisions. Token prediction is also a unique application scenario in Web3, with representative projects like Ocean, which has set up long-term challenges for token prediction and releases various themed data analysis tasks to incentivize user participation.

3. Comprehensive Analysis of Cutting-Edge Projects in the Web3-AI Sector

Some projects are exploring the possibilities of combining Web3 and AI. Geekcartel will guide you through representative projects in this sector, showing the appeal of Web3-AI and how these projects integrate Web3 and AI to create new business models and economic value.

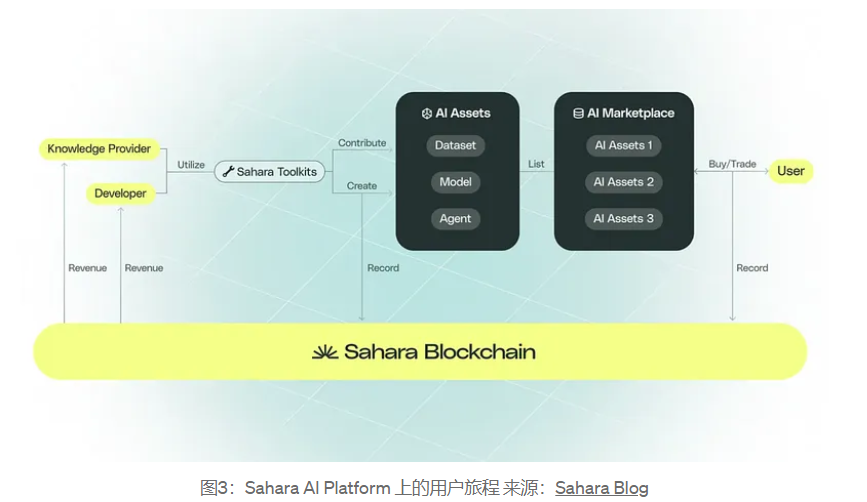

Sahara AI: An AI Blockchain Platform Dedicated to Collaborative Economy

Sahara AI is highly competitive in the entire sector, committed to building a comprehensive AI blockchain platform that encompasses AI data, models, agents, and computing power, providing all-encompassing AI resources, with the underlying architecture safeguarding the collaborative economy of the platform. By leveraging blockchain technology and unique privacy techniques, it ensures decentralized ownership and governance of AI assets throughout the AI development cycle, achieving fair incentive distribution. The team has a strong background in AI and Web3, perfectly merging these two fields and gaining favor from top investors, showcasing immense potential in the sector.

Sahara AI is not limited to Web3, as it breaks the unequal distribution of resources and opportunities in the traditional AI field. Through decentralization, key AI elements such as computing power, models, and data are no longer monopolized by centralized giants. Everyone has the opportunity to find their suitable position in this ecosystem to benefit, stimulating creativity and collaborative enthusiasm.

As shown in the figure, users can contribute or create their datasets, models, AI agents, and other assets using the toolkit provided by Sahara AI, profiting from these assets in the AI market while also receiving platform incentives. Consumers can trade AI assets on demand. Additionally, all transaction information will be recorded on the Sahara Chain, with blockchain technology and privacy protection measures ensuring the traceability of contributions, data security, and fairness of rewards.

In the economic system of Sahara AI, in addition to the roles of developers, knowledge providers, and consumers mentioned above, users can also act as investors, providing funds and resources (GPUs, cloud servers, RPC nodes, etc.) to support the development and deployment of AI assets. They can also serve as Operators to maintain network stability and as Validators to uphold the security and integrity of the blockchain. Regardless of how users participate in the Sahara AI platform, they will receive rewards and income based on their contributions.

The Sahara AI blockchain platform is built on a layered architecture, with on-chain and off-chain infrastructures enabling users and developers to effectively contribute to and benefit from the entire AI development cycle. The architecture of the Sahara AI platform is divided into four layers:

Application Layer

The application layer serves as the user interface and primary interaction point, providing natively built toolkits and applications to enhance user experience.

Functional Components:

- Sahara ID — Ensures secure access to AI assets and tracks user contributions;

- Sahara Vault — Protects the privacy and security of AI assets from unauthorized access and potential threats;

- Sahara Agent — Features role alignment (interactions that match user behavior patterns), lifelong learning, multimodal perception (capable of handling multiple types of data), and multi-tool execution capabilities;

Interactive Components:

- Sahara Toolkit — Supports both technical and non-technical users in creating and deploying AI assets;

- Sahara AI Market — Used for publishing, monetizing, and trading AI assets, providing flexible licensing and various monetization options.

Transaction Layer

The transaction layer of Sahara AI utilizes the Sahara blockchain, an L1 equipped with protocols for managing ownership, attribution, and AI-related transactions on the platform, playing a key role in maintaining the sovereignty and provenance of AI assets. The Sahara blockchain integrates innovative Sahara AI native precompiled functions (SAP) and Sahara blockchain protocols (SBP) to support fundamental tasks throughout the AI lifecycle.

- SAP is a built-in function at the blockchain native runtime level, focusing on the AI training/inference process. SAP helps to call, record, and verify off-chain AI training/inference processes, ensuring the credibility and reliability of AI models developed within the Sahara AI platform, while guaranteeing the transparency, verifiability, and traceability of all AI inferences. Additionally, SAP enables faster execution speeds, lower computational overhead, and reduced gas costs.

- SBP implements AI-specific protocols through smart contracts, ensuring that AI assets and computational results are processed transparently and reliably. This includes functions such as AI asset registration, licensing (access control), ownership, and attribution (contribution tracking).
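The SBP functions listed above (registration, licensing, ownership, attribution) can be pictured as a small registry. The following is a plain-Python sketch under assumed names, not Sahara AI's contract code: `AssetRegistry`, its methods, and the rule that contribution shares sum to 1 are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class AssetRecord:
    owner: str
    contributors: dict  # contributor name -> share of credit
    licensees: set = field(default_factory=set)

class AssetRegistry:
    """Toy registry covering registration, licensing, ownership, and attribution."""

    def __init__(self):
        self._assets = {}

    def register(self, asset_id, owner, contributors):
        if asset_id in self._assets:
            raise ValueError("asset already registered")
        if abs(sum(contributors.values()) - 1.0) > 1e-9:
            raise ValueError("contribution shares must sum to 1")
        self._assets[asset_id] = AssetRecord(owner, dict(contributors))

    def grant_license(self, asset_id, caller, licensee):
        record = self._assets[asset_id]
        if caller != record.owner:  # access control: only the owner may license
            raise PermissionError("only the owner can grant licenses")
        record.licensees.add(licensee)

    def can_access(self, asset_id, user):
        record = self._assets[asset_id]
        return user == record.owner or user in record.licensees

    def attribution(self, asset_id):
        return dict(self._assets[asset_id].contributors)

registry = AssetRegistry()
registry.register("model-1", "alice", {"alice": 0.7, "bob": 0.3})
registry.grant_license("model-1", "alice", "carol")
```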

Data Layer

The data layer of Sahara AI is designed to optimize data management throughout the AI lifecycle. It serves as a crucial interface connecting the execution layer to various data management mechanisms, seamlessly integrating on-chain and off-chain data sources.

- Data Components: Include both on-chain and off-chain data, with on-chain data encompassing metadata, ownership, commitments, and proofs of AI assets, while datasets, AI models, and supplementary information are stored off-chain.

- Data Management: Sahara AI's data management solution provides a set of security measures, using its own encryption schemes to protect data both in transit and at rest. Working together with the SBP for AI licensing, it enforces strict access control and verifiability while providing private domain storage, strengthening the protection of users' sensitive data.
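The division between on-chain commitments and off-chain data described above can be sketched as follows. Two dictionaries stand in for the chain and for off-chain storage, and SHA-256 is used as the commitment; both are assumptions for illustration, since the text does not specify Sahara AI's actual commitment scheme.

```python
import hashlib

off_chain_store = {}   # stand-in for off-chain storage of datasets and models
on_chain_records = {}  # stand-in for on-chain metadata, ownership, and commitments

def content_commitment(data: bytes) -> str:
    """Fingerprint the data; any change to the bytes changes the digest."""
    return hashlib.sha256(data).hexdigest()

def publish(asset_id, owner, data):
    """Store the payload off-chain and only a small record on-chain."""
    off_chain_store[asset_id] = data
    on_chain_records[asset_id] = {
        "owner": owner,
        "size_bytes": len(data),
        "commitment": content_commitment(data),
    }

def verify(asset_id):
    """Check that the off-chain payload still matches the on-chain commitment."""
    data = off_chain_store[asset_id]
    return content_commitment(data) == on_chain_records[asset_id]["commitment"]

publish("dataset-1", "alice", b"cat,dog,cat\n")
ok_before = verify("dataset-1")

off_chain_store["dataset-1"] = b"cat,dog,dog\n"  # simulate tampering off-chain
ok_after = verify("dataset-1")
```

Keeping only the digest on-chain keeps storage costs low while still letting anyone detect that the off-chain copy was altered.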

Execution Layer

The execution layer is the off-chain AI infrastructure of the Sahara AI platform, seamlessly interacting with the transaction layer and data layer to execute and manage protocols related to AI computation and functionality. Depending on the execution tasks, it securely extracts data from the data layer and dynamically allocates computational resources for optimal performance. A set of specially designed protocols coordinates complex AI operations, facilitating efficient interactions between various abstractions, with the underlying infrastructure supporting high-performance AI computation.

- Infrastructure: The execution layer infrastructure of Sahara AI is designed to support high-performance AI computation, characterized by speed, efficiency, elasticity, and high availability. It ensures that the system remains stable and reliable under high traffic and failure conditions through efficient coordination of AI computation, automatic scaling mechanisms, and fault-tolerant designs.

- Abstractions: The core abstractions are the fundamental components that form the basis of AI operations on the Sahara AI platform, including datasets, models, and computational resources; higher-level abstractions are built on top of core abstractions, such as the execution interfaces behind Vaults and agents, enabling more advanced functionalities.

- Protocols: Abstract execution protocols are used to execute interactions with Vaults, coordinate agent interactions, and facilitate collaborative computation; among them, collaborative computation protocols enable joint AI model development and deployment among multiple participants, supporting contributions of computational resources and model aggregation. The execution layer also includes low-cost computational technology modules (PEFT), privacy-preserving computation modules, and anti-fraud computation modules.
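As an illustration of the model aggregation step in collaborative computation, the sketch below averages the parameters submitted by several participants, weighting each by the number of samples it trained on. This is a federated-averaging-style scheme swapped in for illustration; the text does not say which aggregation method Sahara AI uses, and the function name and numbers are hypothetical.

```python
def aggregate_models(updates):
    """Average parameter vectors, weighted by each participant's sample count.

    `updates` is a list of (weights, num_samples) pairs, where `weights`
    is a flat list of floats of the same length for every participant.
    """
    total_samples = sum(n for _, n in updates)
    if total_samples <= 0:
        raise ValueError("need at least one training sample")
    dim = len(updates[0][0])
    aggregated = [0.0] * dim
    for weights, num_samples in updates:
        if len(weights) != dim:
            raise ValueError("all updates must have the same shape")
        for i, w in enumerate(weights):
            aggregated[i] += w * num_samples / total_samples
    return aggregated

# Two hypothetical participants; the second trained on three times more data.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
global_weights = aggregate_models(updates)
```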

Sahara AI is building an AI blockchain platform dedicated to achieving a comprehensive AI ecosystem. This grand vision will inevitably encounter numerous challenges during its realization, requiring strong technology, resource support, and continuous optimization and iteration. If successful, it could become a cornerstone of the Web3-AI field and an attractive destination for Web2 AI practitioners.

Team Information:

The Sahara AI team consists of a group of outstanding and creative members. Co-founder Sean Ren is a professor at the University of Southern California and has received honors such as Samsung's Annual AI Researcher, MIT TR 35 Innovator Under 35, and Forbes 30 Under 30. Co-founder Tyler Zhou graduated from the University of California, Berkeley, has an in-depth understanding of Web3, and leads a global talent team with experience in AI and Web3.

Since the establishment of Sahara AI, the team has generated millions of dollars in revenue from top companies including Microsoft, Amazon, MIT, Snapchat, and Character AI. Currently, Sahara AI serves over 30 enterprise clients and has more than 200,000 AI trainers worldwide. The rapid growth of Sahara AI allows more participants to contribute and benefit from the shared economy model.

Funding Information:

As of August this year, Sahara Labs has successfully raised $43 million. The latest funding round was co-led by Pantera Capital, Binance Labs, and Polychain Capital. Additionally, it has received support from pioneers in the AI field such as Motherson Group, Anthropic, Nous Research, and Midjourney.

Bittensor: A New Play Under Subnet Competitive Incentives

Bittensor itself is not an AI product, nor does it produce or provide any AI products or services. Bittensor is an economic system that provides a highly competitive incentive structure for AI product producers, enabling them to continuously optimize the quality of AI. As an early project in Web3-AI, Bittensor has garnered widespread attention in the market since its launch. According to CoinMarketCap data, as of October 17, its market capitalization has exceeded $4.26 billion, with an FDV (Fully Diluted Valuation) of over $12 billion.

Bittensor has built a network architecture composed of many subnetworks (Subnets), where AI product producers can create subnets with custom incentives and different use cases. Different subnets are responsible for different tasks, such as machine translation, image recognition and generation, and large language models. For example, Subnet 5 can create AI images similar to Midjourney. When excellent tasks are completed, rewards in TAO (Bittensor's token) will be granted.

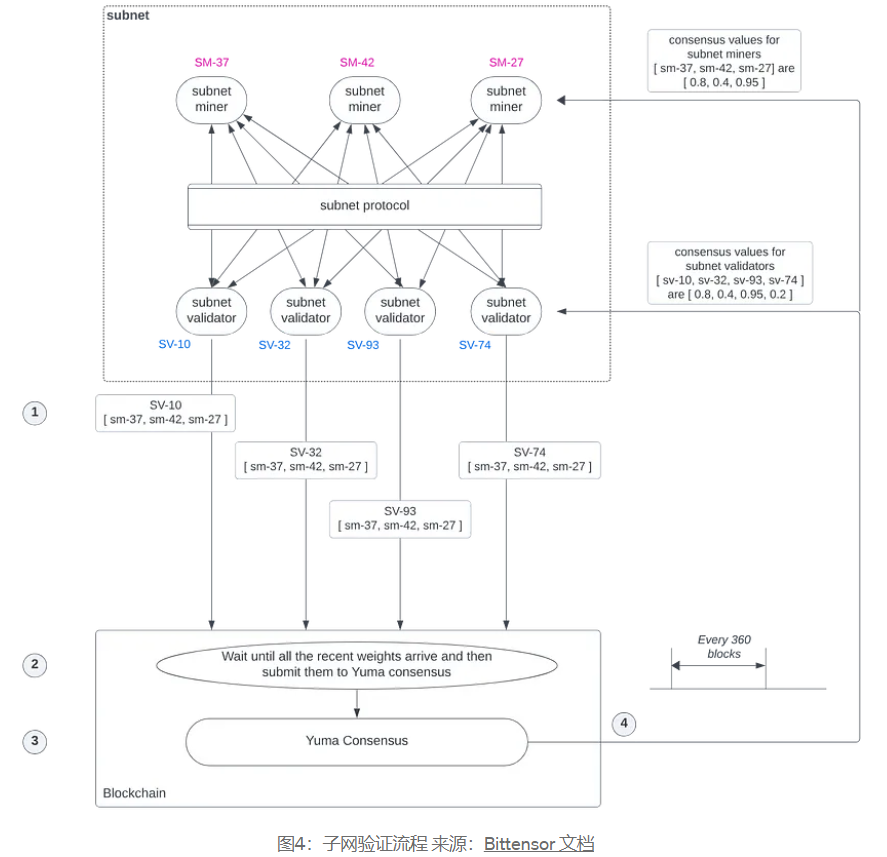

The incentive mechanism is a fundamental component of Bittensor. It drives the behavior of subnet miners and controls the consensus among subnet validators. Each subnet has its own incentive mechanism, with subnet miners responsible for executing tasks and validators scoring the results of subnet miners.

As shown in the figure, we will demonstrate the workflow between subnet miners and subnet validators with an example:

In the figure, the three subnet miners correspond to UID37, 42, and 27; the four subnet validators correspond to UID10, 32, 93, and 74.

- Each subnet validator maintains a weight vector. Each element of the vector represents the weight assigned to the subnet miners, determined by the validator's evaluation of the miners' task completion. Each subnet validator ranks all subnet miners using this weight vector and operates independently, transmitting its miner ranking weight vector to the blockchain. Typically, each subnet validator transmits updated ranking weight vectors to the blockchain every 100–200 blocks.

- The blockchain (subtensor) waits for the latest ranking weight vectors from all subnet validators of the given subnet to arrive at the blockchain. Then, the ranking weight matrix formed by these ranking weight vectors will be provided as input to the on-chain Yuma consensus module.

- The on-chain Yuma consensus uses this weight matrix along with the amount of stake associated with the UID on that subnet to calculate rewards.

- The Yuma consensus calculates the consensus distribution of TAO and allocates the newly minted reward TAO to the accounts associated with the UID.
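The steps above can be sketched with a heavily simplified, stake-weighted calculation. This is not the on-chain Yuma consensus implementation: it only models miner rewards, it omits validator rewards and other mechanisms, and the stakes, weights, and function names are hypothetical. The idea it illustrates is that a weight counts only to the extent that validators holding at least half of the stake support it, so a single outlier validator cannot inflate a miner's reward.

```python
def stake_supported_weight(weights, stakes, kappa=0.5):
    """Largest weight that validators holding at least `kappa` of the stake
    assign (or exceed) for a single miner."""
    threshold = kappa * sum(stakes)
    cumulative = 0.0
    for weight, stake in sorted(zip(weights, stakes), reverse=True):
        cumulative += stake
        if cumulative >= threshold:
            return weight
    return 0.0

def simplified_miner_rewards(weight_matrix, stakes, emission):
    """Split `emission` among miners from a validators-by-miners weight matrix."""
    n_miners = len(weight_matrix[0])
    ranks = []
    for j in range(n_miners):
        column = [row[j] for row in weight_matrix]
        consensus = stake_supported_weight(column, stakes)
        clipped = [min(w, consensus) for w in column]  # cap outlier weights
        ranks.append(sum(s * w for s, w in zip(stakes, clipped)))
    total = sum(ranks)
    return [emission * r / total if total else 0.0 for r in ranks]

# Rows: validators UID10, UID32, UID93, UID74. Columns: miners UID37, UID42, UID27.
weight_matrix = [
    [0.5, 0.3, 0.2],
    [0.5, 0.3, 0.2],
    [0.4, 0.4, 0.2],
    [0.0, 0.0, 1.0],  # outlier validator that favors one miner
]
stakes = [40.0, 30.0, 20.0, 10.0]  # hypothetical stake per validator
rewards = simplified_miner_rewards(weight_matrix, stakes, emission=90.0)
```

In this example the last validator's weight of 1.0 for miner UID27 is clipped to 0.2, the level supported by half of the stake, so that miner ends up with the smallest reward rather than the boost the outlier tried to give it.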

Subnet validators can transmit their ranking weight vectors to the blockchain at any time. However, the Yuma consensus cycle for the subnet begins every 360 blocks (i.e., 4320 seconds or 72 minutes, assuming 12 seconds per block) using the latest weight matrix. If a subnet validator's ranking weight vector arrives after the 360-block cycle, that weight vector will be used in the next Yuma consensus cycle. Each cycle ends with the distribution of TAO rewards.
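The timing arithmetic above is easy to check in code. The sketch assumes, for simplicity, that cycles start at block numbers that are exact multiples of 360 and that a weight vector is picked up at the first cycle start after the block in which it arrives; the function names are hypothetical.

```python
BLOCKS_PER_CYCLE = 360   # from the text
SECONDS_PER_BLOCK = 12   # block time assumed in the text

def cycle_duration_seconds():
    """Length of one Yuma consensus cycle in seconds."""
    return BLOCKS_PER_CYCLE * SECONDS_PER_BLOCK

def next_cycle_start(submission_block):
    """First cycle start strictly after the block in which weights arrived."""
    return (submission_block // BLOCKS_PER_CYCLE + 1) * BLOCKS_PER_CYCLE

duration = cycle_duration_seconds()  # 360 * 12 = 4320 seconds
minutes = duration / 60              # 4320 / 60 = 72 minutes
```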

Yuma consensus is the core algorithm for achieving fair distribution among nodes in Bittensor, combining elements of PoW and PoS in a hybrid consensus mechanism. Similar to Byzantine fault-tolerant consensus mechanisms, it can ultimately reach a correct decision as long as honest validators form a majority of the network.

The root network (Root Network) is a special subnet, namely Subnet 0. By default, among the subnet validators in all subnets, the 64 validators with the highest stakes are the validators in the root network. Root network validators evaluate and rank the quality of output from each Subnet, and the evaluation results of the 64 validators are aggregated, with the final emission results obtained through the Yuma Consensus algorithm, allocating newly issued TAO to each Subnet.
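The selection rule for root network validators amounts to a top-N ranking by stake. The sketch below shows that ranking; breaking ties by the lower UID is an assumption made only to keep the example deterministic, and the stake figures are invented.

```python
def select_root_validators(stake_by_uid, limit=64):
    """Return the UIDs of the `limit` validators with the highest stake."""
    ranked = sorted(stake_by_uid.items(), key=lambda item: (-item[1], item[0]))
    return [uid for uid, _ in ranked[:limit]]

# Hypothetical stakes for four validators.
stake_by_uid = {10: 500.0, 32: 1200.0, 93: 300.0, 74: 1200.0}
top_two = select_root_validators(stake_by_uid, limit=2)
```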

Although Bittensor's subnet competitive model enhances the quality of AI products, it also faces some challenges. First, the incentive mechanisms set by subnet owners determine the miners' earnings, which may directly affect their work motivation. Another issue is that validators decide the token allocation for each subnet but lack clear incentives to choose subnets that benefit Bittensor's long-term productivity. This design may lead validators to favor subnets related to them or those offering additional benefits. To address this issue, contributors to the Opentensor Foundation proposed BIT001: Dynamic TAO Solution, suggesting that the allocation of subnet tokens for all TAO stakers be determined through market mechanisms.

Team Information:

Co-founder Ala Shaabana is a postdoctoral researcher at the University of Waterloo with an academic background in computer science. Another co-founder, Jacob Robert Steeves, graduated from Simon Fraser University in Canada and has nearly 10 years of experience in machine learning research, having previously worked as a software engineer at Google.

Funding Information:

In addition to receiving funding support from the Opentensor Foundation, a non-profit organization supporting Bittensor, a Bittensor community announcement stated that the well-known crypto VCs Pantera and Collab Currency have become holders of the TAO token and will provide more support for the project's ecosystem development. Other major investors include well-known investment institutions and market makers such as Digital Currency Group, Polychain Capital, and FirstMark Capital.

Talus: On-Chain AI Agent Ecosystem Based on Move

Talus Network is an L1 blockchain built on MoveVM, specifically designed for AI agents. These AI agents can make decisions and take actions based on predefined goals, enabling smooth inter-chain interactions while ensuring verifiability. Users can quickly build AI agents using the development tools provided by Talus and integrate them into smart contracts. Talus also offers an open AI market for resources such as AI models, data, and computing power, allowing users to participate in various forms and tokenize their contributions and assets.

Two of Talus's key features are parallel execution and secure execution. With the influx of capital into the Move ecosystem and the expansion of quality projects, Talus's dual highlights of secure execution based on Move and AI agent-integrated smart contracts are expected to attract widespread attention in the market. Additionally, the multi-chain interactions supported by Talus can enhance the efficiency of AI agents and promote AI prosperity on other chains.

According to official Twitter information, Talus recently launched Nexus — the first fully on-chain autonomous AI agent framework, giving Talus a first-mover advantage in the decentralized AI technology field and providing significant competitiveness in the rapidly evolving blockchain AI market. Nexus empowers developers to create AI-driven digital assistants on the Talus network, ensuring censorship resistance, transparency, and composability. Unlike centralized AI solutions, Nexus allows consumers to enjoy personalized intelligent services, securely manage digital assets, automate interactions, and enhance their daily digital experiences.

As the first developer toolkit for on-chain agents, Nexus provides the foundation for building the next generation of consumer-oriented crypto AI applications. Nexus offers a range of tools, resources, and standards to help developers create agents that can execute user intentions and communicate with each other on the Talus chain. Among them, the Nexus Python SDK bridges the gap between AI and blockchain development, allowing AI developers to easily get started without learning smart contract programming. Talus provides user-friendly development tools and a range of infrastructure, making it an ideal platform for developer innovation.

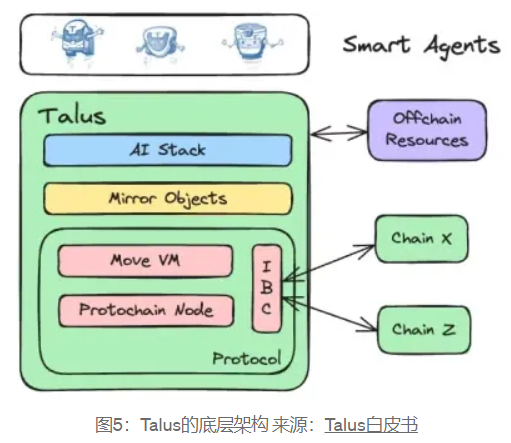

As shown in Figure 5, Talus's underlying architecture is modular, combining flexible use of off-chain resources with multi-chain interaction. This design is the basis for its on-chain intelligent agent ecosystem.

The protocol is the core of Talus, providing the foundation for consensus, execution, and interoperability, on which on-chain intelligent agents can be built, utilizing off-chain resources and cross-chain functionalities.

- Protochain Node: A PoS blockchain node based on Cosmos SDK and CometBFT. The Cosmos SDK is modular and highly scalable. CometBFT implements a Byzantine fault-tolerant consensus algorithm with high performance and low latency, so the network keeps operating normally even when some nodes fail or behave maliciously.

- Sui Move and MoveVM: Talus uses Sui Move as its smart contract language. Move's design improves security by ruling out several critical classes of vulnerability (such as reentrancy attacks, missing access control checks on object ownership, and unexpected arithmetic overflow/underflow). The MoveVM architecture supports efficient parallel processing, enabling Talus to scale by processing multiple transactions simultaneously without sacrificing security or integrity.
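The fault tolerance attributed to CometBFT above can be made concrete with a little arithmetic. BFT consensus of this family stays safe as long as less than one third of the voting power is Byzantine. The sketch below assumes every validator has equal voting power, and the function names are illustrative rather than part of any Talus or CometBFT API.

```python
def max_byzantine_validators(n: int) -> int:
    """Largest f such that n >= 3f + 1 (equal voting power assumed)."""
    if n < 1:
        raise ValueError("need at least one validator")
    return (n - 1) // 3


def quorum_size(n: int) -> int:
    """Smallest validator count holding strictly more than 2/3 of the votes."""
    return (2 * n) // 3 + 1


for n in (4, 10, 100):
    print(n, max_byzantine_validators(n), quorum_size(n))
```

With 100 equally weighted validators, for example, up to 33 can be faulty and at least 67 must agree before a block is committed.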

IBC (Inter-Blockchain Communication Protocol):

- Interoperability: IBC facilitates seamless interoperability between different blockchains, allowing intelligent agents to interact across multiple chains and utilize data or assets.

- Cross-Chain Atomicity: IBC supports cross-chain atomic transactions, which are crucial for maintaining the consistency and reliability of operations performed by intelligent agents, especially in financial applications or complex workflows.

- Scalability through Sharding: By enabling intelligent agents to operate across multiple blockchains, IBC indirectly supports scalability through sharding. Each blockchain can be viewed as processing a portion of transactions, thereby reducing the load on any single chain. This allows intelligent agents to manage and execute tasks in a more distributed and scalable manner.

- Customizability and Specialization: Through IBC, different blockchains can focus on specific functionalities or optimizations. For example, an intelligent agent might use a chain that can process payments quickly, while another chain is dedicated to secure data storage.

- Security and Isolation: IBC maintains security and isolation between chains, which is beneficial for intelligent agents handling sensitive operations or data. Since IBC ensures secure validation of inter-chain communications and transactions, intelligent agents can operate confidently across different chains without compromising security.

Mirror Object:

Mirror objects represent the off-chain world in the on-chain architecture. They are primarily used to validate and link AI resources, for example to represent and prove a resource's uniqueness, to make off-chain resources tradable, and to represent or verify ownership.

There are three types of mirror objects: model objects, data objects, and computation objects.

- Model Object: Model owners can bring their AI models into the ecosystem through a dedicated model registry, which turns an off-chain model into an on-chain object. A model object encapsulates the essence and capabilities of the model, and ownership, management, and monetization frameworks are built directly on top of it. Model objects are flexible assets: their capabilities can be enhanced through additional fine-tuning or, when necessary, completely reshaped through extensive training to meet specific needs.

- Data Object: Data (or dataset) objects exist as a digital form of a unique dataset owned by someone. This object can be created, transferred, authorized, or converted into an open data source.

- Computation Object: Buyers propose computation tasks to the owner of the object, who then provides the computation results along with corresponding proofs. Buyers hold keys that can be used to decrypt commitments and verify results.
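One way to picture a mirror object is as an on-chain record that binds an owner to a fingerprint of an off-chain resource. The sketch below is an assumption-laden illustration, not Talus's actual data model: the `MirrorObject` and `Registry` classes, their fields, and the use of SHA-256 as the fingerprint are all hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class MirrorObject:
    kind: str           # "model", "data", or "computation"
    owner: str          # hypothetical account identifier
    resource_hash: str  # fingerprint of the off-chain resource

    def transfer(self, sender: str, new_owner: str) -> None:
        # Only the current owner may hand the object to someone else.
        if sender != self.owner:
            raise PermissionError("only the current owner can transfer")
        self.owner = new_owner


class Registry:
    """Toy registry: one mirror object per unique off-chain resource."""

    def __init__(self) -> None:
        self._objects = {}

    def register(self, kind: str, owner: str, resource: bytes) -> MirrorObject:
        digest = hashlib.sha256(resource).hexdigest()
        if digest in self._objects:
            raise ValueError("resource already registered")  # uniqueness
        obj = MirrorObject(kind, owner, digest)
        self._objects[digest] = obj
        return obj

    def verify(self, resource: bytes, claimed_owner: str) -> bool:
        # Ownership check: the resource must be registered to this owner.
        obj = self._objects.get(hashlib.sha256(resource).hexdigest())
        return obj is not None and obj.owner == claimed_owner


registry = Registry()
model = registry.register("model", "alice", b"model-weights")
model.transfer("alice", "bob")
print(registry.verify(b"model-weights", "bob"))
```

The registry rejects duplicate fingerprints (uniqueness), lets only the owner transfer an object (tradability), and answers ownership queries (verifiability), which are the three roles the text assigns to mirror objects.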

AI Stack:

Talus provides an SDK and integrated components that support the development of intelligent agents and interaction with off-chain resources. The AI stack also includes integration with Oracles, ensuring that intelligent agents can leverage off-chain data for decision-making and responses.

On-Chain Intelligent Agents:

- Talus offers an economic system for intelligent agents that can operate autonomously, make decisions, execute transactions, and interact with both on-chain and off-chain resources.

- Intelligent agents possess autonomy, social capabilities, reactivity, and proactivity. Autonomy allows them to operate without human intervention, social capabilities enable them to interact with other agents and humans, reactivity allows them to perceive environmental changes and respond in a timely manner (Talus supports agent responses to on-chain and off-chain events through listeners), and proactivity enables them to take actions based on goals, predictions, or anticipated future states.
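The reactivity and proactivity described above can be sketched as an agent that registers listeners and acts when an event moves it closer to its goal. This is a minimal illustration in plain Python: the `Agent` class, the `price_update` event, and the buy rule are hypothetical and do not reflect the Nexus SDK.

```python
from collections import defaultdict
from typing import Callable


class Agent:
    def __init__(self, goal_price: float) -> None:
        self.goal_price = goal_price          # the agent's predefined goal
        self.actions = []                     # actions taken so far
        self._listeners = defaultdict(list)   # event type -> handlers

    def on(self, event_type: str, handler: Callable[[dict], None]) -> None:
        """Register a listener for on-chain or off-chain events."""
        self._listeners[event_type].append(handler)

    def emit(self, event_type: str, payload: dict) -> None:
        """Deliver an event to every listener registered for its type."""
        for handler in self._listeners[event_type]:
            handler(payload)


agent = Agent(goal_price=100.0)


def handle_price(event: dict) -> None:
    # Reactive: runs on every price event. Proactive: acts only toward the goal.
    if event["price"] <= agent.goal_price:
        agent.actions.append(f"buy at {event['price']}")


agent.on("price_update", handle_price)
agent.emit("price_update", {"price": 120.0})  # above the goal, ignored
agent.emit("price_update", {"price": 95.0})   # at or below the goal, acts
print(agent.actions)
```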

In addition to the development frameworks and infrastructure that Talus provides for intelligent agents, AI agents built on Talus support several kinds of verifiable AI inference (opML, zkML, etc.), which keeps AI inference transparent and credible. This agent-specific infrastructure enables multi-chain interaction and maps resources between on-chain and off-chain environments.

The on-chain AI agent ecosystem launched by Talus is of significant importance for the technological development of the integration of AI and blockchain, but it still presents certain challenges in implementation. Talus's infrastructure provides flexibility and interoperability for developing AI agents, but whether the interoperability and efficiency among these agents running on the Talus chain can meet user needs remains to be seen. Currently, Talus is still in the private testnet phase and is continuously undergoing development and updates. We look forward to Talus further promoting the development of the on-chain AI agent ecosystem in the future.

Team Information:

Mike Hanono is the founder and CEO of Talus Network. He holds a bachelor's degree in Industrial and Systems Engineering and a master's degree in Applied Data Science from the University of Southern California, and has participated in the Wharton School program at the University of Pennsylvania, possessing extensive experience in data analysis, software development, and project management.

Funding Information:

In February of this year, Talus completed its first round of financing, raising $3 million, led by Polychain Capital, with participation from Dao5, Hash3, TRGC, WAGMI Ventures, Inception Capital, and angel investors primarily from Nvidia, IBM, Blue7, Symbolic Capital, and Render Network.

ORA: The Cornerstone of On-Chain Verifiable AI

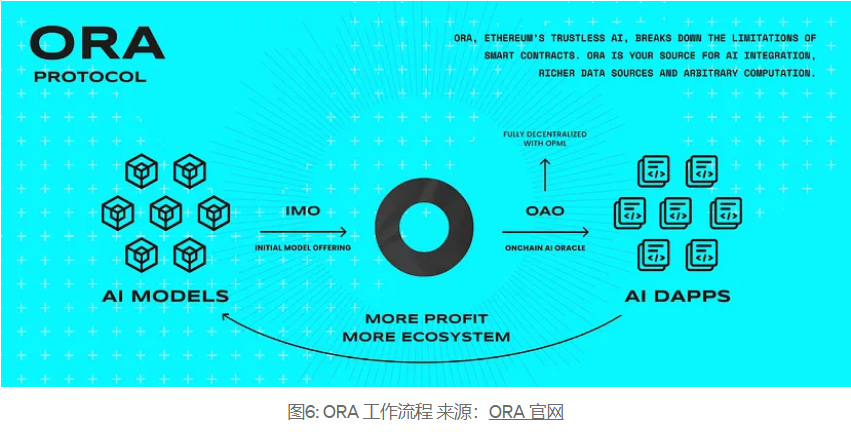

ORA's product OAO (On-Chain AI Oracle) is the world's first AI oracle that uses opML, and it can bring the results of off-chain AI inference onto the chain. This means that smart contracts can gain AI functionality on-chain by interacting with OAO. Additionally, ORA's AI oracle integrates with Initial Model Issuance (IMO), providing an end-to-end on-chain AI service.

ORA has a first-mover advantage both technically and in the market. As a trustless AI oracle on Ethereum, it is expected to have a significant impact on Ethereum's broad user base, with more innovative AI application scenarios likely to emerge. Developers can now use the models provided by ORA for decentralized inference in smart contracts and can build verifiable AI dApps on Ethereum, Arbitrum, Optimism, Base, Polygon, Linea, and Manta. In addition to verification services for AI inference, ORA also offers model issuance services (IMO) to encourage contributions to open-source models.

ORA's two main products are Initial Model Issuance (IMO) and On-Chain AI Oracle (OAO), which complement each other: IMO brings AI models on-chain, and OAO verifies AI inference.

- IMO incentivizes long-term open-source contributions by tokenizing the ownership of open-source AI models, with token holders receiving a portion of the revenue generated from the on-chain use of the model. ORA also provides funding for AI developers, incentivizing the community and open-source contributors.

- OAO brings verifiable AI inference on-chain. ORA introduces opML as the verification layer for the AI oracle. As in an Optimistic Rollup workflow, validators or any other network participants can check results during the challenge period, and if a challenge succeeds, the erroneous result is corrected on-chain. After the challenge period ends, results are finalized and immutable.
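The revenue-sharing idea behind IMO can be illustrated with a pro-rata split. This is only a sketch: the text says token holders receive "a portion" of usage revenue without giving a figure, so the `holder_share_bps` parameter, the function name, and the sample balances are all assumptions.

```python
def distribute_revenue(balances: dict, revenue: int, holder_share_bps: int) -> dict:
    """Split a share of model revenue among token holders, pro rata.

    balances: holder -> token balance (integer units)
    revenue: revenue from on-chain model usage (integer units)
    holder_share_bps: assumed share routed to holders, in basis points
    """
    total = sum(balances.values())
    if total == 0:
        raise ValueError("no tokens outstanding")
    pool = revenue * holder_share_bps // 10_000
    # Integer division rounds down, so tiny remainders stay undistributed.
    return {holder: pool * bal // total for holder, bal in balances.items()}


payouts = distribute_revenue({"alice": 600, "bob": 400}, revenue=1_000, holder_share_bps=5_000)
print(payouts)
```

Here half of 1,000 units of revenue goes to holders, and the 500-unit pool is split 60/40 in line with token balances.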

To establish a verifiable and decentralized oracle network, ensuring the computational validity of results on the blockchain is crucial. This process involves a proof system that ensures computations are reliable and authentic.

To this end, ORA provides three proof system frameworks:

- opML for AI Oracle (currently, ORA's AI oracle already supports opML)

- zkML from keras2circom (a mature and high-performance zkML framework)

- zk+opML, combining the privacy of zkML with the scalability of opML, achieving future on-chain AI solutions through opp/ai

opML:

opML (Optimistic Machine Learning) was invented and developed by ORA and combines machine learning with blockchain technology. By applying principles similar to those of Optimistic Rollups, opML ensures the validity of computations in a decentralized manner. The framework allows AI computations to be verified on-chain, enhancing transparency and fostering trust in machine learning inference.

To ensure security and correctness, opML uses the following fraud-proof mechanism:

- Result Submission: Service providers (submitters) execute machine learning computations off-chain and submit the results to the blockchain.

- Verification Period: Validators (or challengers) have a predefined period (challenge period) to verify the correctness of the submitted results.

- Dispute Resolution: If validators find the results incorrect, they initiate an interactive dispute game. This dispute game effectively determines the exact computational steps where the error occurred.

- On-Chain Verification: Only the disputed computational steps are verified on-chain through the Fraud Proof Virtual Machine (FPVM), minimizing resource usage.

- Finalization: If no disputes are raised during the challenge period, or if disputes are resolved, the results are finalized on the blockchain.
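The dispute steps above can be sketched as a bisection game over an execution trace. This is a simplified illustration, not ORA's implementation: the computation is a toy list of integer functions, the traces are compared in plain memory rather than through commitments, and `find_disputed_step` and `arbitrate` are hypothetical names. The `arbitrate` function stands in for the single-step check that the FPVM would perform on-chain.

```python
from typing import Callable, List

Step = Callable[[int], int]


def run_trace(steps: List[Step], x: int) -> List[int]:
    """Execute every step off-chain and record each intermediate state."""
    trace = [x]
    for step in steps:
        trace.append(step(trace[-1]))
    return trace


def find_disputed_step(claimed: List[int], honest: List[int]) -> int:
    """Bisect to an index i where the traces agree at i but differ at i + 1.

    Assumes both traces start from the same input and end differently.
    """
    lo, hi = 0, len(claimed) - 1  # invariant: agree at lo, differ at hi
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if claimed[mid] == honest[mid]:
            lo = mid
        else:
            hi = mid
    return lo


def arbitrate(steps: List[Step], claimed: List[int], i: int) -> bool:
    """Re-execute only step i; True means the submitter's claim holds."""
    return steps[i](claimed[i]) == claimed[i + 1]


steps = [lambda v: v + 1, lambda v: v * 2, lambda v: v - 3, lambda v: v * v]
honest = run_trace(steps, 3)   # [3, 4, 8, 5, 25]
claimed = [3, 4, 8, 6, 36]     # submitter's trace with a wrong third step

i = find_disputed_step(claimed, honest)
print(i, arbitrate(steps, claimed, i))
```

Only one step is re-executed by the arbiter regardless of how long the computation is, which is why the on-chain cost of a dispute stays small.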

The opML launched by ORA allows computations to be executed off-chain in an optimized environment, with only minimal data processed on-chain during disputes. This avoids the expensive proof generation required by zero-knowledge machine learning (zkML), reducing computational costs. This approach can handle large-scale computations that traditional on-chain methods find difficult to achieve.

keras2circom (zkML):

zkML is a proof framework that uses zero-knowledge proofs to verify machine learning inference results on-chain. Its privacy properties protect private data and model parameters during training and inference, which addresses privacy concerns. Because zkML's actual computation is performed off-chain and only the validity of the result is verified on-chain, the computational load on the chain is reduced.

Keras2Circom, built by ORA, is the first battle-tested high-level zkML framework. According to the benchmarking of leading zkML frameworks funded by the Ethereum Foundation's ESP proposal [FY23–1290], Keras2Circom and its underlying circomlib-ml have been proven to outperform other frameworks.

opp/ai (opML + zkML):

ORA has also proposed OPP/AI (Optimistic Privacy-Preserving AI on Blockchain), which integrates zero-knowledge machine learning (zkML) for privacy with optimistic machine learning (opML) for efficiency, creating a hybrid model tailored for on-chain AI. By strategically partitioning machine learning (ML) models, opp/ai balances computational efficiency and data privacy, enabling secure and efficient on-chain AI services.

opp/ai divides ML models into multiple sub-models based on privacy requirements: zkML sub-models handle components involving sensitive data or proprietary algorithms, executing using zero-knowledge proofs to ensure the confidentiality of data and models; opML sub-models handle components where efficiency is prioritized over privacy, executed using the optimistic approach of opML for maximum efficiency.
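The partitioning described above can be sketched as tagging each layer by its privacy requirement and grouping consecutive layers that share a backend. This is an illustrative assumption about how such a split might be expressed, not the opp/ai implementation: the `Layer` type, the `sensitive` flag, and the layer names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    sensitive: bool  # True if the layer touches private data or weights


def partition(layers):
    """Group consecutive layers into sub-models tagged 'zkML' or 'opML'."""
    submodels = []
    for layer in layers:
        backend = "zkML" if layer.sensitive else "opML"
        if submodels and submodels[-1][0] == backend:
            submodels[-1][1].append(layer.name)  # extend the current sub-model
        else:
            submodels.append((backend, [layer.name]))  # start a new sub-model
    return submodels


layers = [
    Layer("embed", True),
    Layer("conv1", False),
    Layer("conv2", False),
    Layer("head", True),
]
plan = partition(layers)
print(plan)
```

In this toy plan only the first and last layers pay the cost of zero-knowledge proving, while the middle of the model runs under the cheaper optimistic scheme.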

In summary, ORA has innovatively proposed three proof frameworks: opML, zkML, and opp/ai (a combination of opML and zkML). The diverse proof frameworks enhance data privacy and computational efficiency, bringing greater flexibility and security to blockchain applications.

As a pioneering AI oracle, ORA has considerable potential. ORA has published a wealth of research and results demonstrating its technological advantages. However, AI model inference is complex and costly to verify, which raises the question of whether on-chain AI inference can be fast enough to meet user demand. If the approach proves itself over time and the user experience keeps improving, such AI products may become a powerful tool for making on-chain dApps more efficient.

Team Information:

Co-founder Kartin graduated from the University of Arizona with a degree in Computer Science and previously served as a technical leader at TikTok and as a software engineer at Google.

Chief Scientist Cathie holds a master's degree in Computer Science from the University of Southern California and a PhD in Psychology and Neuroscience from the University of Hong Kong. She was previously a zkML researcher at the Ethereum Foundation.

Funding Information:

On June 26 of this year, ORA announced the completion of a $20 million funding round, with investors including Polychain Capital, HF0, Hashkey Capital, SevenX Ventures, and Geekcartel.

Grass: The Data Layer for AI Models

Grass focuses on transforming public network data into AI datasets. Grass's network uses users' excess bandwidth to scrape data from the internet without collecting users' personal information. This type of network data is essential for the development of AI models and for the operations of many other industries. Users can run nodes and earn Grass points, and running a node on Grass is as simple as registering and installing a Chrome extension.

Grass connects AI demanders with data providers, creating a "win-win" situation. Simple installation and the expectation of a future airdrop significantly boost user participation, which in turn gives demanders more data sources. As data providers, users need no complex setup or actions: scraping, cleaning, and other operations run in the background without requiring their attention. There are also no special device requirements, which lowers the participation threshold, and the invitation mechanism encourages more users to join quickly.

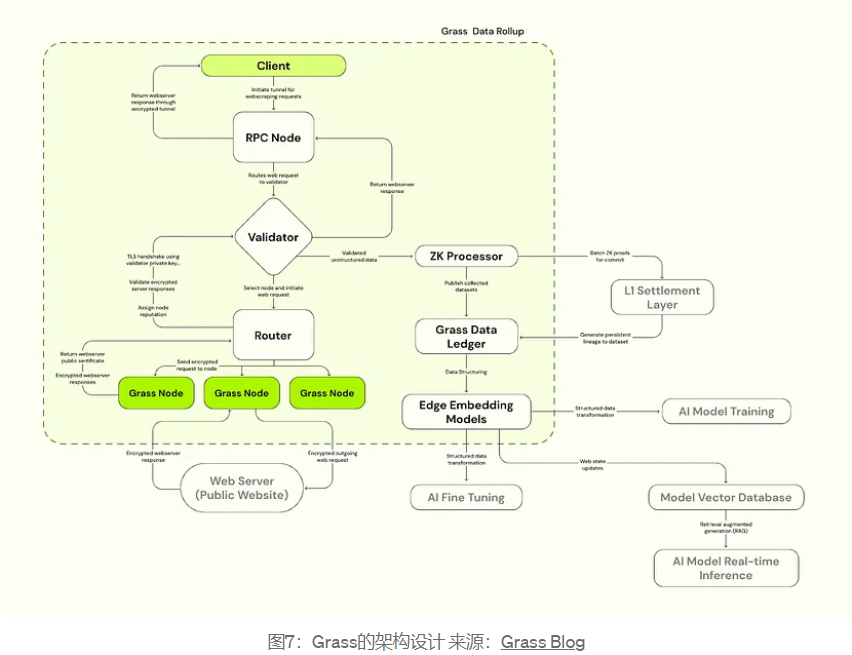

Grass's data scraping generates tens of millions of web requests per minute, all of which need to be verified, and this requires more throughput than any L1 can provide. In March, the Grass team announced plans to build a rollup so that users and builders can verify data sources. The plan is to batch metadata for verification by a ZK processor and to store a proof of each dataset's metadata on Solana's settlement layer, producing a data ledger.

As shown in the diagram, clients issue web requests, which are routed through validators and ultimately to Grass nodes. The servers of the websites respond to the web requests, allowing their data to be scraped and returned. The purpose of the ZK processor is to help record the sources of the datasets scraped on the Grass network. This means that whenever a node scrapes the network, it can earn its reward without revealing any information about its identity. After being recorded in the data ledger, the collected data is cleaned and structured using graph embedding models (Edge Embedding) for AI training.
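The idea of batching request metadata and recording a compact commitment for each batch can be sketched with a Merkle root. Note the swap: Grass describes ZK proofs, whereas this sketch uses a plain SHA-256 Merkle tree as a stand-in, so it shows only the batching-and-commitment pattern and none of the zero-knowledge properties. The record fields and function names are hypothetical.

```python
import hashlib
import json


def leaf_hash(record: dict) -> bytes:
    """Hash one metadata record in a canonical (sorted-key) encoding."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).digest()


def merkle_root(leaves: list) -> bytes:
    """Fold a batch of leaf hashes into a single 32-byte commitment."""
    if not leaves:
        raise ValueError("empty batch")
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last node on odd levels
        level = [
            hashlib.sha256(level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0]


batch = [
    {"url": "https://example.com/a", "node": "node-1", "ts": 1},
    {"url": "https://example.com/b", "node": "node-2", "ts": 2},
    {"url": "https://example.com/c", "node": "node-1", "ts": 3},
]
root = merkle_root([leaf_hash(r) for r in batch])
print(root.hex())
```

Only the root would need to be written to the settlement layer; changing any record in the batch changes the root, which is what makes the ledger entry a usable record of provenance.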

In summary, Grass allows users to contribute excess bandwidth to scrape network data and earn passive income while protecting personal privacy. This design not only brings economic benefits to users but also provides AI companies with a decentralized way to obtain large amounts of real data.

Grass significantly lowers the participation threshold, which helps increase user engagement. However, the project team needs to consider that, alongside real users, an influx of airdrop farmers (the "wool party") may bring a large amount of junk data and increase the data-processing burden. The team therefore needs reasonable incentive mechanisms that price data so that truly valuable data is obtained. This matters to both the project team and users: if users find airdrop allocations confusing or unfair, they may come to distrust the project team, which would affect the project's consensus and development.

Team Information:

Founder Andrej holds a PhD in Computational and Applied Mathematics from York University in Canada. Chief Technology Officer Chris Nguyen has years of experience in data processing, and the data company he founded has received multiple honors, including the IBM Cloud Embedded Excellence Award, Enterprise Technology Top 30, and Forbes Cloud 100 Rising Stars.

Funding Information:

Grass is the first product launched by the Wynd Network team. In December 2023, Wynd Network completed a $3.5 million seed round led by Polychain Capital and Tribe Capital, with participation from Bitscale, Big Brain, Advisors Anonymous, Typhon V, Mozaik, and others. No Limit Holdings had previously led the pre-seed round, bringing the total raised to $4.5 million.

In September of this year, Grass completed its Series A financing, led by Hack VC, with participation from Polychain, Delphi Digital, Brevan Howard Digital, Lattice Fund, and others, with the amount undisclosed.

IO.NET: A Decentralized Computing Resource Platform

IO.NET aggregates global idle network computing resources by building a decentralized GPU network on Solana. This enables AI engineers to access the required GPU computing resources at lower costs, with easier access and greater flexibility. ML teams can build model training and inference service workflows on the distributed GPU network.

IO.NET not only provides income for users with idle computing power but also significantly reduces the computing burden for small teams or individuals. Solana's high throughput and efficient execution also give IO.NET an inherent advantage in scheduling its GPU network.

Since its launch, IO.NET has received significant attention and favor from top institutions. According to CoinMarketCap, as of October 17, its token's market capitalization has exceeded $220 million, with an FDV exceeding $1.47 billion.

One of IO.NET's core technologies is IO-SDK, which is based on a dedicated fork of Ray. (Ray is an open-source framework, used by OpenAI, that scales machine learning and other AI applications across clusters to handle large amounts of computation.) Using Ray's native parallelism, IO-SDK can parallelize Python functions and integrates with mainstream ML frameworks such as PyTorch and TensorFlow. Its in-memory storage allows rapid data sharing between tasks, eliminating serialization delays.
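The pattern of parallelizing ordinary Python functions can be illustrated with the standard library. This sketch deliberately does not use IO-SDK or Ray: `concurrent.futures` stands in for them so the example runs anywhere, and the `score` function and sample batches are hypothetical. In Ray itself, the analogous pattern is to decorate a function with `@ray.remote`, call it with `.remote(...)`, and collect results with `ray.get(...)`.

```python
from concurrent.futures import ThreadPoolExecutor


def score(batch: list) -> float:
    """Stand-in for an expensive per-batch computation."""
    return sum(x * x for x in batch)


batches = [[1.0, 2.0], [3.0], [4.0, 5.0]]

# Submit every batch as an independent task; map() returns results in input order.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(score, batches))

print(results)
```

A cluster framework applies the same idea across machines instead of local threads, which is where scheduling and data sharing between tasks start to matter.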

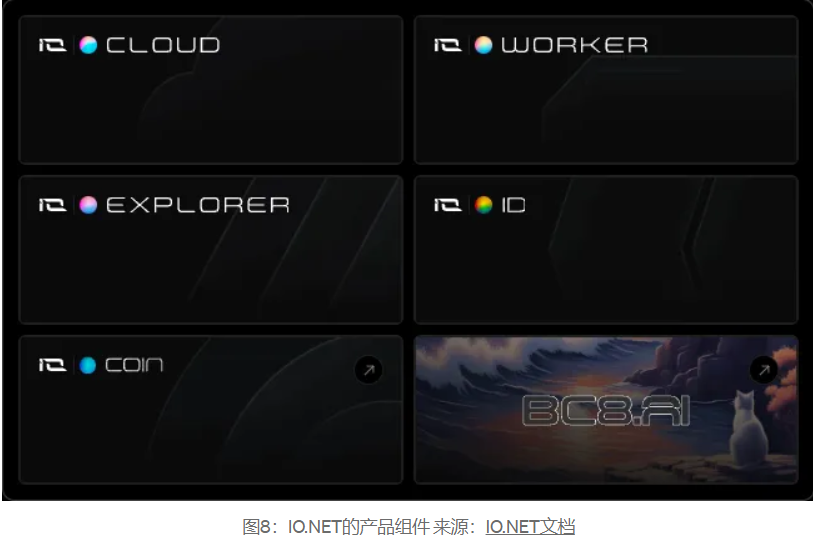

Product Components:

- IO Cloud: Designed for on-demand deployment and management of decentralized GPU clusters, seamlessly integrated with IO-SDK, providing a comprehensive solution for scaling AI and Python applications. It offers computing power while simplifying the deployment and management of GPU/CPU resources. Potential risks are reduced through firewalls, access control, and modular design, isolating different functionalities to enhance security.

- IO Worker: Users can manage their GPU node operations through this web application interface. It includes features such as monitoring computational activities, tracking temperature and power consumption, installation assistance, security measures, and income status.

- IO Explorer: Primarily provides users with comprehensive statistics and visualizations of various aspects of the GPU cloud, allowing users to view network activity, key statistics, data points, and reward transactions in real-time.

- IO ID: Users can view their personal account status, including wallet address activity, wallet balance, and claim earnings.

- IO Coin: Supports users in viewing the status of IO.NET's tokens.

- BC8.AI: This is an AI image generation website supported by IO.NET, where users can achieve text-to-image AI generation processes.

IO.NET aggregates over one million GPU resources from cryptocurrency miners, projects like Filecoin and Render, and other idle computing power, allowing AI engineers or teams to customize and purchase GPU computing services according to their needs. By leveraging global idle computing resources, users providing computing power can tokenize their earnings. IO.NET not only optimizes resource utilization but also reduces high computing costs, promoting broader AI and computing applications.

As a decentralized computing power platform, IO.NET should focus on user experience, the richness of computing resources, and resource scheduling and monitoring, which are crucial factors in the competitive landscape of decentralized computing power. However, there have been previous controversies regarding resource scheduling, with some questioning the mismatch between resource scheduling and user orders. While we cannot ascertain the truth of this matter, it serves as a reminder for related projects to pay attention to these aspects of optimization and user experience enhancement. Without user support, even the most polished products can become mere decorations.

Team Information:

Founder Ahmad Shadid was previously a quantitative systems engineer at WhalesTrader and a contributor and mentor at the Ethereum Foundation. Chief Technology Officer Gaurav Sharma was a senior development engineer at Amazon, served as an architect at eBay, and worked in the engineering department at Binance.

Funding Information:

On May 1, 2023, IO.NET announced the completion of a $10 million seed round.

On March 5, 2024, it announced the completion of a $30 million Series A round led by Hack VC, with participation from Multicoin Capital, 6th Man Ventures, M13, Delphi Digital, Solana Labs, Aptos Labs, Foresight Ventures, Longhash, SevenX, ArkStream, Animoca Brands, Continue Capital, MH Ventures, Sandbox Games, and others.

MyShell: An AI Agent Platform Connecting Consumers and Creators

MyShell is a decentralized AI consumer layer that connects consumers, creators, and open-source researchers. Users can utilize the AI agents provided by the platform or build their own AI agents or applications on MyShell's development platform. MyShell offers an open marketplace for users to freely trade AI agents, with various types of AI agents available in MyShell's AIpp store, including virtual companions, trading assistants, and AIGC-type agents.

As a low-threshold alternative to various AI chatbots like ChatGPT, MyShell provides a broad AI functionality platform that lowers the barriers for users to utilize AI models and agents, enabling them to gain a comprehensive AI experience. For example, a user might want to use Claude for literature organization and writing optimization while using Midjourney to generate high-quality images. Typically, this would require users to register multiple accounts on different platforms and pay for some services. MyShell offers a one-stop service, providing free AI quotas daily, so users do not need to repeatedly register and pay fees.

Additionally, some AI products are restricted in certain regions, but on the MyShell platform users can typically use a range of AI services without such obstacles. Together, these advantages give MyShell a convenient, efficient, and seamless user experience.

The MyShell ecosystem is built on three core components:

- Self-developed AI Models: MyShell has developed several open-source AI models, including AIGC and large language models, which users can use directly; more open-source models can also be found on the official GitHub.

- Open AI Development Platform: Users can easily build AI applications. The MyShell platform allows creators to leverage different models and integrate external APIs. With native development workflows and modular toolkits, creators can quickly turn their ideas into functional AI applications, accelerating innovation.

- Fair Incentive Ecosystem: MyShell's incentive model encourages users to create content that meets their personal preferences. Creators can earn native platform rewards when using their self-built applications and can also receive funding from consumers.

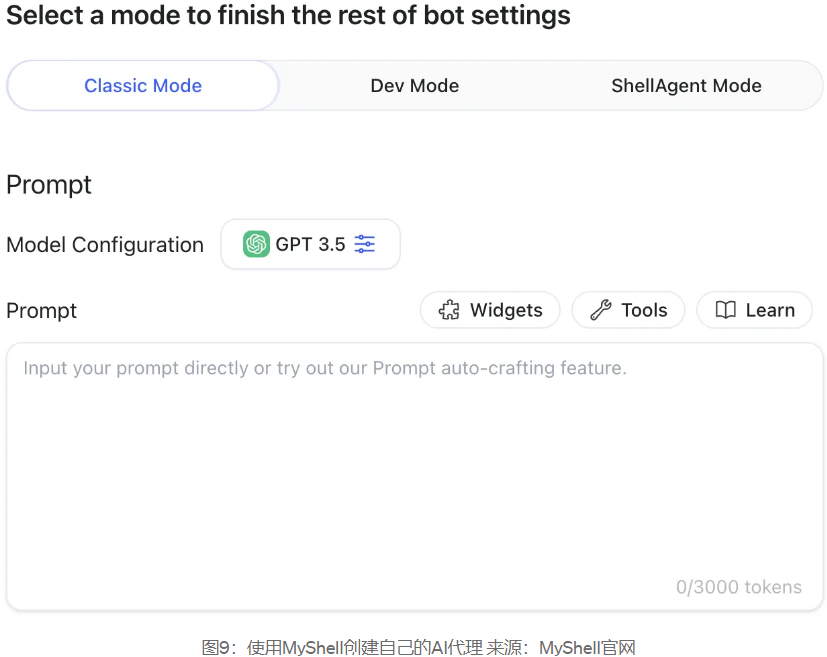

MyShell's Workshop supports three modes for building AI bots, so both professional developers and ordinary users can find a mode that suits them. The classic mode lets users set model parameters and instructions, and the resulting bot can be integrated into social media software; the development mode requires users to upload their own model files; the ShellAgent mode lets users build AI bots without writing code.

MyShell combines the principles of decentralization with AI technology, aiming to provide an open, flexible, and fairly incentivized ecosystem for consumers, creators, and researchers. Through self-developed AI models, an open development platform, and various incentive methods, it offers users a rich set of tools and resources to realize their ideas and needs.

MyShell integrates various high-quality models, and the team is continuously developing numerous AI models to enhance user experience. However, MyShell still faces some challenges during use. For example, some users have reported that support for Chinese in certain models needs improvement. Nevertheless, by reviewing MyShell's code repository, it is evident that the team is continuously updating and optimizing, actively listening to community feedback. It is believed that with ongoing improvements, future user experiences will be even better.

Team Information:

Co-founder Zengyi Qin focuses on speech algorithm research and holds a PhD from MIT. During his undergraduate studies at Tsinghua University, he published several papers at top conferences. He also has professional experience in robotics, computer vision, and reinforcement learning. Another co-founder, Ethan Sun, graduated from the University of Oxford with a degree in Computer Science and has years of experience in the AR+AI field.

Funding Information:

In October 2023, MyShell completed a seed round financing of $5.6 million, led by INCE Capital, with participation from Hashkey Capital, Folius Ventures, SevenX Ventures, OP Crypto, and others.

In March 2024, MyShell secured $11 million in its latest Pre-A round of financing. This round was led by Dragonfly, with participation from Delphi Digital, Bankless Ventures, Maven11 Capital, Nascent, Nomad, Foresight Ventures, Animoca Ventures, OKX Ventures, GSR, and other investment institutions. Additionally, this round of financing received support from angel investors such as Balaji Srinivasan, Illia Polosukhin, Casey K. Caruso, and Santiago Santos.

In August of this year, Binance Labs announced an investment in MyShell through its sixth incubation program, with the specific amount undisclosed.

Challenges and Considerations That Need to Be Addressed

Although this sector is still in its infancy, industry participants should consider several important factors that influence project success. The following aspects need to be taken into account:

Balancing Supply and Demand for AI Resources: For Web3-AI ecosystem projects, it is crucial to balance the supply of and demand for AI resources and to attract more participants with genuine needs and a willingness to contribute. Users who need models, data, and computing power may already be accustomed to obtaining AI resources on Web2 platforms. The industry therefore faces the challenge of attracting AI resource providers to contribute to the Web3-AI ecosystem while also drawing in enough demanders that resources can be matched sensibly.

Data Challenges: Data quality directly affects how well models train. Ensuring data quality during collection and preprocessing, and filtering out the large amounts of junk data generated by airdrop farmers (the "wool party"), will be a significant challenge for data-related projects. Project teams can improve data credibility by applying rigorous data quality control methods and by transparently showing the results of data processing, which will also attract more data demanders.

Security Issues: In the Web3 industry, AI assets interact on-chain and off-chain through blockchain and privacy technologies. Projects must prevent malicious actors from degrading the quality of AI assets and must secure data, models, and other AI resources. Some project teams have proposed solutions, but this field is still in the construction phase. As the technology continues to improve, higher and verified security standards are expected.

User Experience:

- Web2 users are typically accustomed to traditional product experiences, while Web3 projects often involve complex smart contracts, decentralized wallets, and other technologies, which can present a high barrier for ordinary users. The industry should consider how to further improve user experience and educational resources to attract more Web2 users into the Web3-AI ecosystem.

- For Web3 users, establishing effective incentive mechanisms and a sustainable economic system is key to promoting long-term user retention and healthy ecosystem development. At the same time, we should think about how to maximize the use of AI technology to improve efficiency in the Web3 field and innovate more application scenarios and gameplay that combine with AI. These are all critical factors affecting the healthy development of the ecosystem.

As the trend of Internet + continues to evolve, we have witnessed countless innovations and transformations. Many fields are already integrating with AI, and looking ahead, the era of AI+ may flourish everywhere, fundamentally changing our way of life. The integration of Web3 and AI means that data ownership and control will return to users, giving AI higher transparency and trust. This trend of integration is expected to create a fairer and more open market environment and promote efficiency improvements and innovative development across various industries. We look forward to industry builders working together to create better AI solutions.

References

https://ieeexplore.ieee.org/abstract/document/9451544

https://docs.ora.io/doc/oao-onchain-ai-oracle/introduction

https://saharalabs.ai/

https://saharalabs.ai/blog/sahara-ai-raise-43m

https://bittensor.com/

https://docs.bittensor.com/yuma-consensus

https://docs.bittensor.com/emissions#emission

https://twitter.com/myshell_ai

https://twitter.com/SubVortexTao

https://foresightnews.pro/article/detail/49752

https://www.ora.io/

https://docs.ora.io/doc/imo/introduction

https://github.com/ora-io/keras2circom

https://arxiv.org/abs/2401.17555

https://arxiv.org/abs/2402.15006

https://x.com/OraProtocol/status/1805981228329513260

https://x.com/getgrass_io

https://www.getgrass.io/blog/grass-the-first-ever-layer-2-data-rollup

https://wynd-network.gitbook.io/grass-docs/architecture/overview#edge-embedding-models

http://IO.NET

https://www.ray.io/

https://www.techflowpost.com/article/detail_17611.html

https://myshell.ai/

https://www.chaincatcher.com/article/2118663

Acknowledgments

In this emerging infrastructure paradigm, there is still much research and work to be done, and many areas not covered in this article. If you are interested in related research topics, please contact Chloe.

Special thanks to Severus and JiaYi for their insightful comments and feedback on this article. Finally, thanks to JiaYi for the cameo appearance of their cat.
