What is the Surge phase? DA, data compression, Generalized Plasma, improvements in cross-L2 interoperability, scaling execution on L1, etc…

Written by: Vitalik Buterin

Compiled by: Karen, Foresight News

Special thanks to Justin Drake, Francesco, Hsiao-wei Wang, @antonttc, and Georgios Konstantopoulos.

Initially, Ethereum's roadmap included two scaling strategies. One (see an early paper from 2015) was "sharding": each node only needs to verify and store a small portion of the transactions, rather than verifying and storing every transaction in the chain. Other peer-to-peer networks (like BitTorrent) work this way, so surely blockchains can be made to work in the same manner. The other was Layer 2 protocols: networks that sit on top of Ethereum, fully benefiting from its security while keeping most data and computation off the main chain. Layer 2 protocols meant state channels in 2015, Plasma in 2017, and then Rollups in 2019. Rollups are more powerful than state channels or Plasma, but they require a significant amount of on-chain data bandwidth. Fortunately, by 2019, sharding research had solved the problem of verifying "data availability" at scale. As a result, the two paths merged, and we got the Rollup-centric roadmap, which remains Ethereum's scaling strategy today.

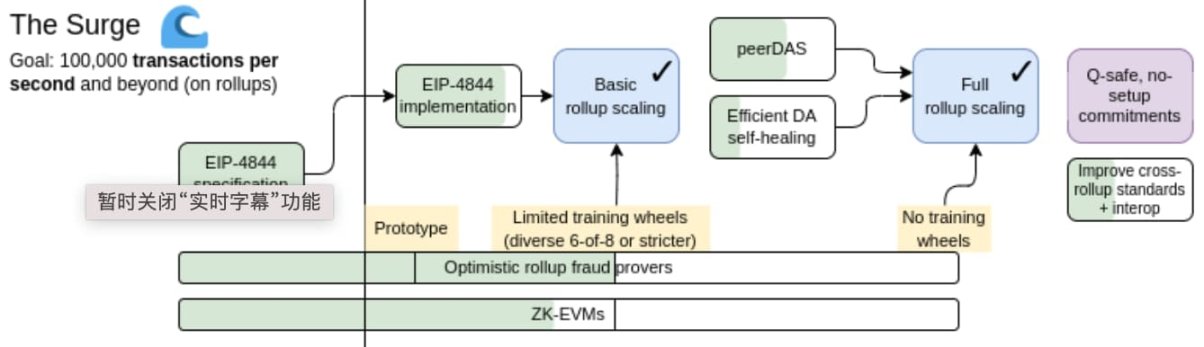

The Surge, 2023 roadmap version

The Rollup-centric roadmap proposes a simple division of labor: Ethereum L1 focuses on being a robust and decentralized base layer, while L2 takes on the task of helping the ecosystem scale. This model is ubiquitous in society: the existence of the court system (L1) is not to pursue ultra-speed and efficiency, but to protect contracts and property rights, while entrepreneurs (L2) build on this solid foundation, leading humanity towards (both literally and metaphorically) Mars.

This year, the Rollup-centric roadmap has achieved significant milestones: with the launch of EIP-4844 blobs, Ethereum L1's data bandwidth has increased dramatically, and multiple Ethereum Virtual Machine (EVM) Rollups have reached Stage 1. Each L2 exists as a "shard" with its own internal rules and logic, and a diverse, pluralistic implementation of sharding has now become a reality. However, as we have seen, pursuing this path also brings some unique challenges. Therefore, our current task is to complete the Rollup-centric roadmap and address these issues while maintaining the robustness and decentralization unique to Ethereum L1.

The Surge: Key Objectives

- Future Ethereum can achieve over 100,000 TPS through L2;

- Maintain the decentralization and robustness of L1;

- At least some L2s fully inherit Ethereum's core attributes (trustlessness, openness, censorship resistance);

- Ethereum should feel like a unified ecosystem, rather than 34 different blockchains.

Chapter Contents

- The Scalability Triangle Paradox

- Further Progress on Data Availability Sampling

- Data Compression

- Generalized Plasma

- Mature L2 Proof Systems

- Improvements in Cross-L2 Interoperability

- Scaling Execution on L1

The Scalability Triangle Paradox

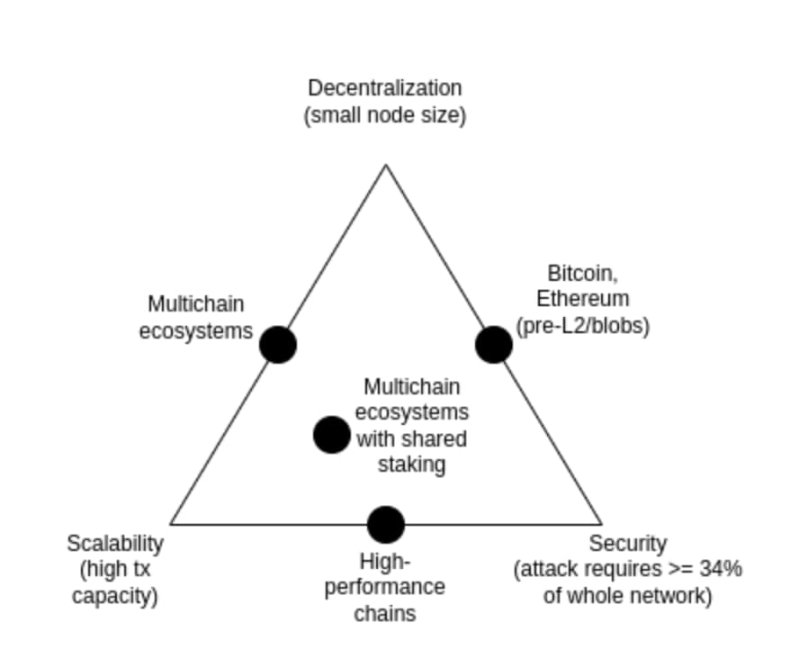

The Scalability Triangle Paradox is an idea proposed in 2017, which posits that there is a contradiction between three characteristics of blockchains: decentralization (more specifically: low cost of running nodes), scalability (high number of transactions processed), and security (attackers need to compromise a large portion of nodes in the network to fail a single transaction).

It is worth noting that the triangle paradox is not a theorem, and the posts introducing the triangle paradox do not come with mathematical proofs. It does provide a heuristic mathematical argument: if a decentralization-friendly node (like a consumer-grade laptop) can verify N transactions per second, and you have a chain that can process k*N transactions per second, then (i) each transaction can only be seen by 1/k nodes, meaning an attacker only needs to compromise a few nodes to succeed with a malicious transaction, or (ii) your nodes will become powerful while your chain will not be decentralized. The purpose of this article is not to prove that breaking the triangle paradox is impossible; rather, it aims to show that breaking the triangle paradox is difficult and requires stepping outside the thinking framework implied by the argument.

Over the years, some high-performance chains have often claimed to solve the triangle paradox without fundamentally changing their architecture, usually by applying software engineering techniques to optimize nodes. This is always misleading, as running a node on these chains is much more difficult than running a node on Ethereum. This article will explore why this is the case and why scaling Ethereum cannot be achieved solely through L1 client software engineering.

However, the combination of data availability sampling and SNARKs does indeed solve the triangle paradox: it allows clients to verify that a certain amount of data is available and that a certain number of computational steps are correctly executed, while only downloading a small amount of data and performing minimal computation. SNARKs are trustless. Data availability sampling has a subtle few-of-N trust model, but it retains the fundamental characteristics of a non-scalable chain, meaning that even a 51% attack cannot force bad blocks to be accepted by the network.

Another approach to solving the triangle paradox is the Plasma architecture, which cleverly incentivizes users to take on the responsibility of monitoring data availability. Back in 2017-2019, when we only had fraud proofs as a means to scale computational capacity, Plasma was very limited in what it could execute securely, but with the proliferation of SNARKs (succinct non-interactive arguments of knowledge), the Plasma architecture has become viable for a wider range of use cases than ever before.

Further Progress on Data Availability Sampling

What Problem Are We Solving?

As of March 13, 2024, when the Dencun upgrade went live, the Ethereum blockchain has 3 blobs of approximately 125 kB each per 12-second slot, or about 375 kB of data availability bandwidth per slot. Assuming transaction data is published directly on-chain, an ERC20 transfer is about 180 bytes, so the maximum TPS for Rollups on Ethereum is: 375000 / 12 / 180 = 173.6 TPS.

If we add Ethereum's calldata (theoretical maximum: 30 million gas per slot / 16 gas per byte = 1,875,000 bytes per slot), it becomes 607 TPS. With PeerDAS, the number of blobs could increase to 8-16, providing 463-926 TPS from blob data.

This is a significant improvement for Ethereum L1, but it's not enough. We want more scalability. Our medium-term goal is 16 MB per slot, which, combined with improvements in Rollup data compression, would bring about 58,000 TPS.
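The throughput figures in this section all follow from one division. The sketch below reproduces them; the constant and function names are illustrative, and the 180-byte transfer size and 125 kB blob size are the approximations used in the text.

```python
SLOT_SECONDS = 12           # length of one Ethereum slot
BYTES_PER_TRANSFER = 180    # approximate on-chain size of an ERC20 transfer
BLOB_BYTES = 125_000        # approximate size of one blob


def rollup_tps(bytes_per_slot, bytes_per_tx=BYTES_PER_TRANSFER):
    """Maximum transactions per second that fit in the given data budget."""
    return bytes_per_slot / SLOT_SECONDS / bytes_per_tx


print(round(rollup_tps(3 * BLOB_BYTES), 1))   # 3 blobs today      -> 173.6
print(round(rollup_tps(8 * BLOB_BYTES)))      # 8 blobs            -> 463
print(round(rollup_tps(16 * BLOB_BYTES)))     # 16 blobs           -> 926
print(round(rollup_tps(16_000_000)))          # 16 MB, no compression -> 7407
```

The gap between the last figure and the 58,000 TPS goal has to come from shrinking `bytes_per_tx`, which is what the data compression section addresses.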

What Is It? How Does It Work?

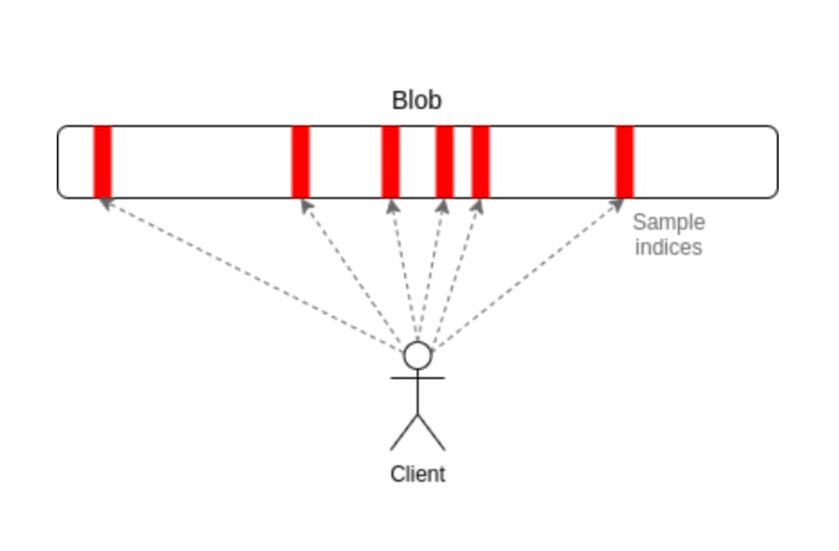

PeerDAS is a relatively simple implementation of "1D sampling." In Ethereum, each blob is a 4096-degree polynomial over a 253-bit prime field. We broadcast shares of the polynomial, where each share contains 16 evaluation values from 16 adjacent coordinates out of a total of 8192 coordinates. Among these 8192 evaluation values, any 4096 (according to the currently proposed parameters: any 64 out of 128 possible samples) can reconstruct the blob.
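The "any half of the evaluations can reconstruct the blob" property is ordinary polynomial erasure coding. The following is a toy sketch only: it assumes a small prime modulus (2^31 - 1 instead of the 253-bit field), 8 data values instead of 4096, data stored as polynomial coefficients, and plain Lagrange interpolation with no KZG commitments. All names are illustrative.

```python
P = 2**31 - 1   # toy prime modulus (assumption; the real field is ~253 bits)
K = 8           # toy data size (assumption; real blobs use 4096)


def evaluate(coeffs, x):
    """Evaluate the polynomial with these coefficients at x (mod P), Horner style."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def interpolate_at(points, x):
    """Lagrange-interpolate through points [(xi, yi)] and evaluate at x (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


coeffs = [3, 1, 4, 1, 5, 9, 2, 6]                      # K data values
extended = [evaluate(coeffs, x) for x in range(2 * K)]  # 2K evaluations

# Pretend half of the evaluations were lost: keep only the odd coordinates.
surviving = [(x, extended[x]) for x in range(1, 2 * K, 2)]

# Any K surviving evaluations pin down the degree < K polynomial,
# so every one of the 2K evaluations can be recomputed.
recovered = [interpolate_at(surviving, x) for x in range(2 * K)]
assert recovered == extended
```

Which half survives does not matter: any `K` distinct coordinates give the same result, which is why a client that sees enough random samples can be confident the whole blob is recoverable.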

PeerDAS works by having each client listen to a small number of subnets, where the i-th subnet broadcasts the i-th sample of any blob, and requests the additional blobs it needs from peers in the global p2p network (who will listen to different subnets). A more conservative version, SubnetDAS, only uses the subnet mechanism without additional peer layer inquiries. The current proposal is to have nodes participating in proof of stake use SubnetDAS, while other nodes (i.e., clients) use PeerDAS.

Theoretically, we can scale "1D sampling" quite large: if we increase the maximum number of blobs to 256 (targeting 128), we can reach the 16MB goal, where each node in data availability sampling has 16 samples * 128 blobs * 512 bytes per sample per blob = 1 MB of data bandwidth per slot. This is just barely within our tolerance: it is feasible, but it means that bandwidth-constrained clients cannot sample. We can optimize this to some extent by reducing the number of blobs and increasing the size of the blobs, but this will raise the cost of reconstruction.
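The per-node bandwidth figure above is a simple product. As a quick check (the function and parameter names are illustrative, and the defaults are the values quoted in the paragraph):

```python
def sampling_bytes_per_slot(samples_per_blob=16, blobs=128, bytes_per_sample=512):
    """Data each sampling node downloads per slot under 1D sampling."""
    return samples_per_blob * blobs * bytes_per_sample


print(sampling_bytes_per_slot())           # 1048576 bytes, i.e. 1 MiB per slot
print(sampling_bytes_per_slot(blobs=64))   # halving the blob count halves the load
```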

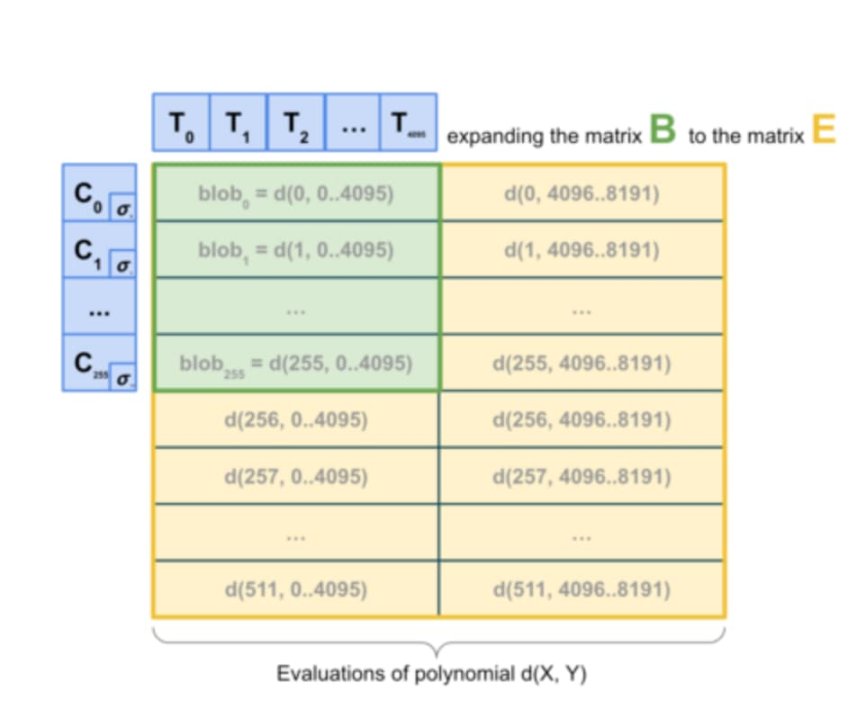

Therefore, we ultimately want to go further and implement 2D sampling, which not only samples randomly within blobs but also samples randomly between blobs. By leveraging the linear properties of KZG commitments, we can expand the set of blobs in a block with a new list of virtual blobs that redundantly encode the same information.

2D Sampling. Source: a16z crypto

Crucially, computing the extension of the commitments does not require having the blobs, so this scheme is fundamentally friendly to distributed block construction. Nodes that actually build blocks only need to possess the blob KZG commitments, and they can rely on data availability sampling (DAS) to verify the availability of the blobs. One-dimensional data availability sampling (1D DAS) is also inherently friendly to distributed block construction.

What are the links to existing research?

- Original post introducing data availability (2018): https://github.com/ethereum/research/wiki/A-note-on-data-availability-and-erasure-coding

- Follow-up paper: https://arxiv.org/abs/1809.09044

- Explanatory article on DAS, paradigm: https://www.paradigm.xyz/2022/08/das

- 2D availability with KZG commitments: https://ethresear.ch/t/2d-data-availability-with-kate-commitments/8081

- PeerDAS on ethresear.ch: https://ethresear.ch/t/peerdas-a-simpler-das-approach-using-battle-tested-p2p-components/16541 and paper: https://eprint.iacr.org/2024/1362

- EIP-7594: https://eips.ethereum.org/EIPS/eip-7594

- SubnetDAS on ethresear.ch: https://ethresear.ch/t/subnetdas-an-intermediate-das-approach/17169

- Nuances of data recoverability in data availability sampling: https://ethresear.ch/t/nuances-of-data-recoverability-in-data-availability-sampling/16256

What still needs to be done? What are the trade-offs?

The next step is to complete the implementation and launch of PeerDAS. After that, the number of blobs on PeerDAS can be increased gradually while carefully monitoring the network and improving the software to ensure security; this is an incremental process. At the same time, we hope for more academic work to formalize PeerDAS and other versions of DAS and their interactions with issues such as fork choice rule security.

In the further future, we need to do more work to determine the ideal version of 2D DAS and prove its security properties. We also hope to eventually transition from KZG to a quantum-safe alternative that does not require a trusted setup. Currently, it is unclear which candidates are friendly to distributed block construction. Even using expensive "brute force" techniques, such as using recursive STARKs to generate validity proofs for reconstructing rows and columns, is insufficient to meet the demand, because while technically a STARK's size is O(log(n) * log(log(n))) hashes (using STIR), in practice, a STARK is almost as large as the entire blob.

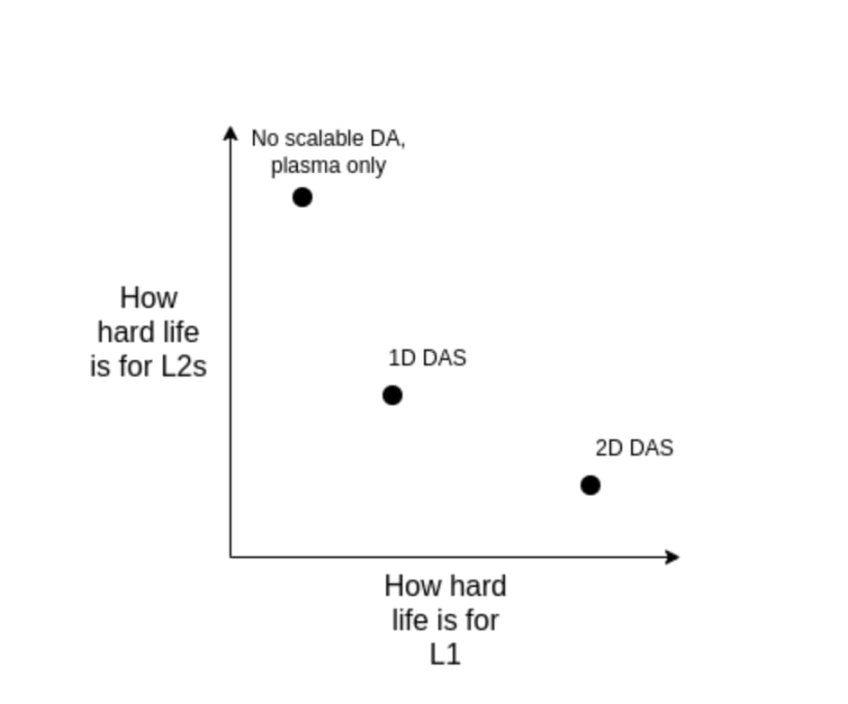

The realistic long-term paths I see are:

- Implement the ideal 2D DAS;

- Stick with 1D DAS, sacrificing sampling bandwidth efficiency for simplicity and robustness, accepting a lower data ceiling;

- (Hard pivot) Abandon DA and fully embrace Plasma as our primary Layer 2 architecture.

Note that this choice exists even if we decide to scale execution directly on L1. This is because if L1 is to handle a large amount of TPS, L1 blocks will become very large, and clients will want an efficient way to verify their correctness, so we will have to use the same technologies on L1 that power Rollups (such as ZK-EVM and DAS).

How does it interact with other parts of the roadmap?

If data compression is implemented, the demand for 2D DAS will decrease, or at least be delayed; if Plasma is widely used, the demand will further decrease. DAS also poses challenges to distributed block construction protocols and mechanisms: while DAS is theoretically friendly to distributed reconstruction, in practice this needs to be combined with inclusion list proposals and their surrounding fork choice mechanisms.

Data Compression

What problem are we solving?

Each transaction in a Rollup takes up a significant amount of on-chain data space: an ERC20 transfer requires about 180 bytes. Even with ideal data availability sampling, this limits the scalability of Layer 2 protocols. With 16 MB per slot, we get:

16000000 / 12 / 180 = 7407 TPS

What if we could not only solve the numerator problem but also the denominator problem, allowing each transaction in a Rollup to occupy fewer bytes on-chain?

What is it, and how does it work?

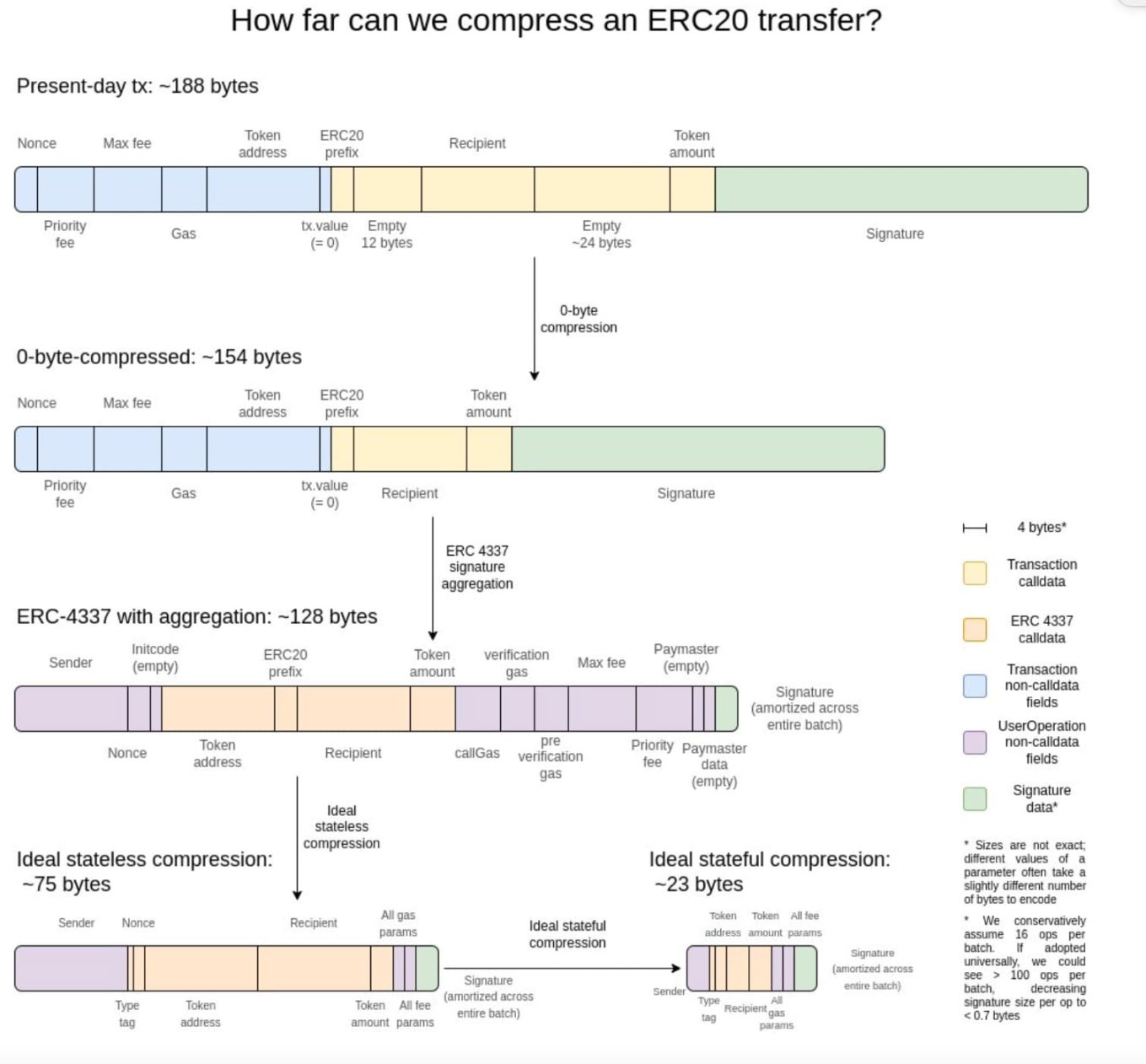

In my view, the best explanation is this diagram from two years ago:

In zero-byte compression, each long sequence of zero bytes is replaced with two bytes indicating how many zero bytes there are. Furthermore, we leverage specific properties of transactions:

Signature aggregation: We switch from ECDSA signatures to BLS signatures, which have the property that multiple signatures can be combined into a single signature that can prove the validity of all original signatures. At the L1 layer, due to the high computational cost of verification even with aggregation, BLS signatures are not considered. However, in a data-scarce environment like L2, using BLS signatures makes sense. The aggregation feature of ERC-4337 provides a pathway to achieve this.

Replacing addresses with pointers: If an address has been used before, we can replace the 20-byte address with a 4-byte pointer pointing to a location in the history.

Custom serialization of transaction values: Most transaction values have very few significant digits; for example, 0.25 ETH is represented as 250,000,000,000,000,000 wei. The maximum base fee and priority fee are similar. Therefore, we can use a custom decimal floating-point format to represent most currency values.
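Two of the techniques above are simple enough to sketch directly: zero-byte compression and a decimal floating-point encoding for values. The wire formats here are assumptions chosen for illustration (a `0x00` marker followed by a one-byte run length, and a plain mantissa/exponent pair); real Rollups define their own formats, and all function names are hypothetical.

```python
def compress_zero_runs(data):
    """Replace each run of zero bytes with two bytes: 0x00 and the run length (1-255)."""
    out = bytearray()
    i = 0
    while i < len(data):
        if data[i] == 0:
            run = 1
            while i + run < len(data) and data[i + run] == 0 and run < 255:
                run += 1
            out += bytes([0, run])
            i += run
        else:
            out.append(data[i])
            i += 1
    return bytes(out)


def decompress_zero_runs(blob):
    """Inverse of compress_zero_runs."""
    out = bytearray()
    i = 0
    while i < len(blob):
        if blob[i] == 0:
            out += bytes(blob[i + 1])   # bytes(n) is n zero bytes
            i += 2
        else:
            out.append(blob[i])
            i += 1
    return bytes(out)


def encode_value(wei):
    """Split a value into (mantissa, exponent) with wei == mantissa * 10**exponent."""
    if wei == 0:
        return 0, 0
    exponent = 0
    while wei % 10 == 0:
        wei //= 10
        exponent += 1
    return wei, exponent


def decode_value(mantissa, exponent):
    return mantissa * 10**exponent


# ABI-style ERC20 transfer calldata: selector, padded address, 32-byte amount.
selector = bytes.fromhex("a9059cbb")
recipient = bytes(12) + bytes(range(1, 21))        # placeholder 20-byte address
amount = (25 * 10**16).to_bytes(32, "big")         # 0.25 ETH worth of units
calldata = selector + recipient + amount

compressed = compress_zero_runs(calldata)
assert decompress_zero_runs(compressed) == calldata
print(len(calldata), len(compressed))              # 68 36
print(encode_value(25 * 10**16))                   # (25, 16)
```

Note that a single isolated zero byte grows from one byte to two under this format, so the scheme only pays off because calldata is dominated by long zero runs from padding.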

What are the links to existing research?

- Exploring sequence.xyz: https://sequence.xyz/blog/compressing-calldata

- L2 Calldata optimization contracts: https://github.com/ScopeLift/l2-optimizoooors

- Validity proof-based Rollups (also known as ZK rollups) publish state differences instead of transactions: https://ethresear.ch/t/rollup-diff-compression-application-level-compression-strategies-to-reduce-the-l2-data-footprint-on-l1/9975

- BLS wallet - implementing BLS aggregation via ERC-4337: https://github.com/getwax/bls-wallet

What still needs to be done, and what are the trade-offs?

The next major task is to actually implement the above solutions. The main trade-offs include:

Switching to BLS signatures requires significant effort and will reduce compatibility with trusted hardware chips that can enhance security. A ZK-SNARK wrapper around other signature schemes can be used as an alternative.

Dynamic compression (e.g., replacing addresses with pointers) will complicate client code.

Publishing state differences on-chain instead of transactions will reduce auditability and render many software tools (e.g., block explorers) inoperable.

How does it interact with other parts of the roadmap?

Adopting ERC-4337 and eventually incorporating some of its content into the L2 EVM can significantly accelerate the deployment of aggregation techniques. Placing some of ERC-4337's content on L1 can speed up its deployment on L2.

Generalized Plasma

What problem are we solving?

Even with 16 MB blobs and data compression, 58,000 TPS may not be sufficient to fully meet the demands of consumer payments, decentralized social networking, or other high-bandwidth areas, especially when we start considering privacy factors, which could reduce scalability by 3-8 times. For high transaction volume, low-value use cases, one current option is to use Validium, which keeps data off-chain and employs an interesting security model: operators cannot steal users' funds, but they may temporarily or permanently freeze all users' funds. However, we can do better.

What is it, and how does it work?

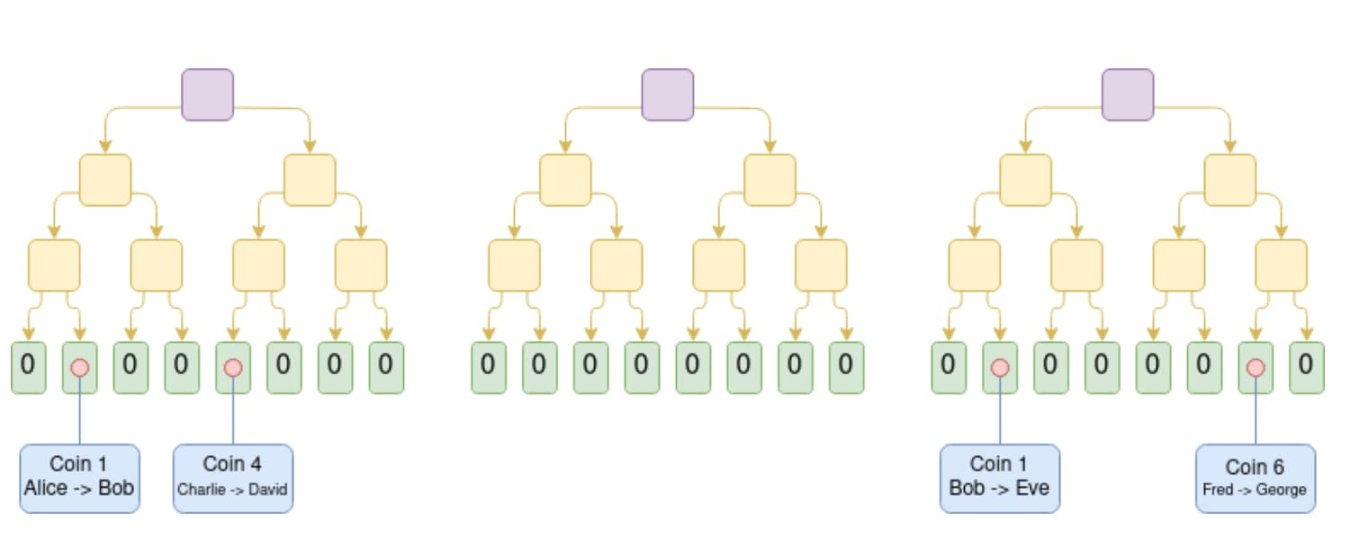

Plasma is a scaling solution that involves an operator publishing blocks off-chain and placing the Merkle root of these blocks on-chain (unlike Rollup, which places the complete block on-chain). For each block, the operator sends a Merkle branch to each user to prove what changes have occurred to that user's assets, or that no changes have occurred. Users can withdraw their assets by providing the Merkle branch. Importantly, this branch does not have to be rooted in the latest state. Therefore, even if there are issues with data availability, users can still recover their assets by withdrawing from the latest state that is available to them. If a user submits an invalid branch (for example, attempting to withdraw assets they have already sent to someone else, or if the operator has created an asset out of thin air), the legitimate ownership of the asset can be determined through the on-chain challenge mechanism.

Plasma Cash chain diagram. Transactions spending coin i are placed in the i-th position in the tree. In this example, we assume all previous trees are valid, and we know that Eve currently owns token 1, David owns token 4, and George owns token 6.
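The Merkle branches that the operator hands to users can be illustrated with a minimal sketch. It assumes SHA-256 and a perfectly balanced tree with a power-of-two number of leaves; the function names and leaf contents are hypothetical and do not reflect any particular Plasma implementation.

```python
import hashlib


def hash_pair(left, right):
    return hashlib.sha256(left + right).digest()


def build_tree(leaves):
    """Return all tree levels, from leaf hashes up to [root]. Needs 2**n leaves."""
    level = [hashlib.sha256(leaf).digest() for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        level = [hash_pair(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def merkle_branch(levels, index):
    """Sibling hashes on the path from leaf `index` to the root."""
    branch = []
    for level in levels[:-1]:
        branch.append(level[index ^ 1])   # sibling differs in the lowest bit
        index //= 2
    return branch


def verify_branch(leaf, index, branch, root):
    """Recompute the root from a leaf and its branch; this is the on-chain check."""
    node = hashlib.sha256(leaf).digest()
    for sibling in branch:
        node = hash_pair(node, sibling) if index % 2 == 0 else hash_pair(sibling, node)
        index //= 2
    return node == root


# Position i holds the transaction spending coin i (empty if the coin is unspent).
leaves = [b"", b"coin 1 -> Eve", b"", b"", b"coin 4 -> David", b"", b"coin 6 -> George", b""]
levels = build_tree(leaves)
root = levels[-1][0]                      # only this value is posted on-chain

branch = merkle_branch(levels, 4)
assert verify_branch(b"coin 4 -> David", 4, branch, root)
assert not verify_branch(b"coin 4 -> Mallory", 4, branch, root)
```

The branch has only `log2(n)` hashes, so a user stores a small amount of data per block yet can prove their position against a root that was published long ago.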

Early versions of Plasma could only handle payment use cases and were not effective for further scaling. However, if we require each root to be verified with SNARKs, then Plasma becomes much more powerful. Each challenge game can be significantly simplified because we eliminate most potential paths for operator cheating. At the same time, new paths are opened up, allowing Plasma technology to scale to a broader range of asset classes. Finally, when operators do not cheat, users can immediately withdraw funds without waiting for a week-long challenge period.

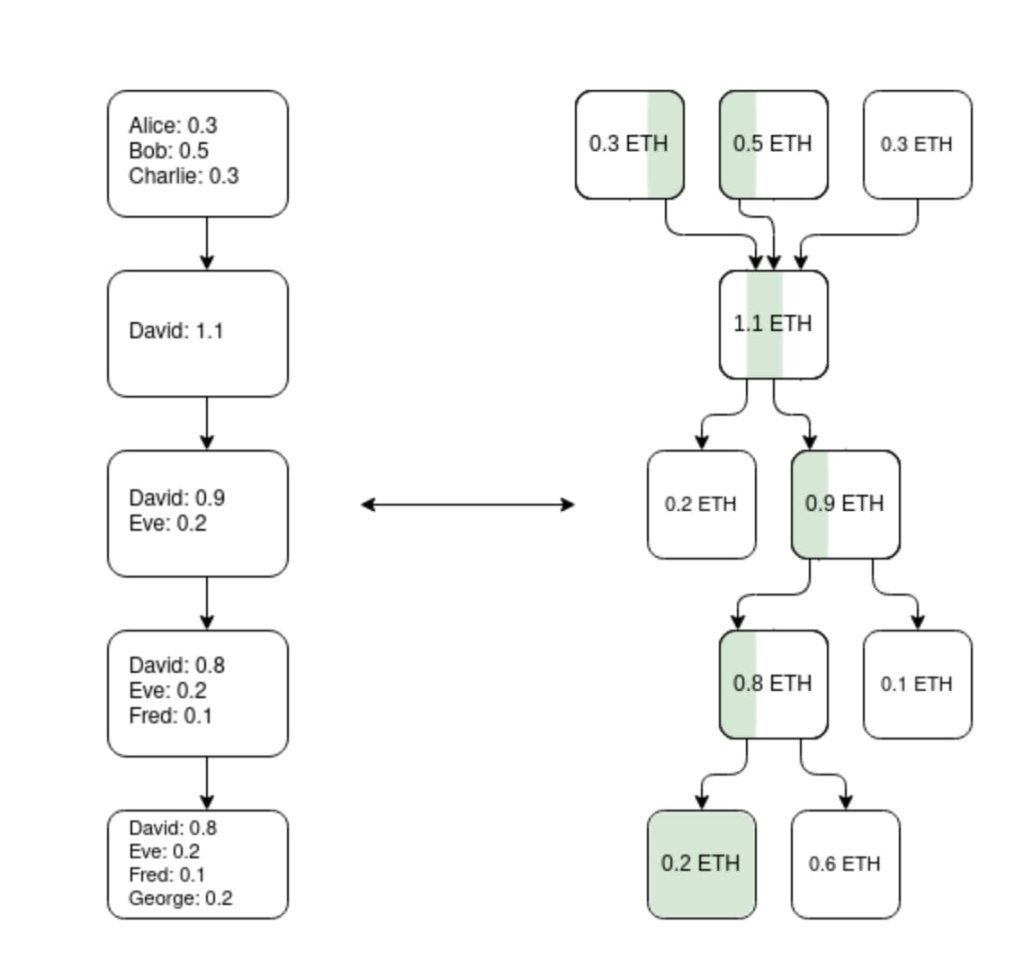

One way to create an EVM Plasma chain (not the only way): build a parallel UTXO tree using ZK-SNARKs that reflects the balance changes made by the EVM and defines a unique mapping of the "same token" at different historical points in time. Plasma structures can then be built on top of it.

A key insight is that the Plasma system does not need to be perfect. Even if you can only protect a subset of assets (for example, only tokens that have not moved in the past week), you have already significantly improved the current state of highly scalable EVMs (i.e., Validium).

Another class of structures is hybrid Plasma/Rollup, such as Intmax. These constructs place a minimal amount of data for each user on-chain (e.g., 5 bytes), thereby gaining certain characteristics that lie between Plasma and Rollup: in the case of Intmax, you can achieve very high scalability and privacy, although even with a capacity of 16 MB, it is theoretically limited to about 16,000,000 / 12 / 5 = 266,667 TPS.

What are the links to existing research?

- Original Plasma paper: https://plasma.io/plasma-deprecated.pdf

- Plasma Cash: https://ethresear.ch/t/plasma-cash-plasma-with-much-less-per-user-data-checking/1298

- Plasma Cashflow: https://hackmd.io/DgzmJIRjSzCYvl4lUjZXNQ?view#🚪-Exit

- Intmax (2023): https://eprint.iacr.org/2023/1082

What still needs to be done? What are the trade-offs?

The main remaining task is to put the Plasma system into practical production use. As mentioned, Plasma and Validium are not an either-or choice: any Validium can enhance its security properties to some extent by incorporating Plasma features into its exit mechanism. The focus of research is on obtaining the best properties for the EVM (considering trust requirements, worst-case L1 gas costs, and resistance to DoS attacks, etc.), as well as alternative specific application structures. Additionally, Plasma is conceptually more complex than Rollup, which requires addressing this directly through research and building better general frameworks.

The main trade-off of Plasma designs is that they rely more on operators and are harder to make "based", although hybrid Plasma/Rollup designs can often avoid this weakness.

How does it interact with other parts of the roadmap?

The more effective the Plasma solution, the less pressure there is on L1 to have high-performance data availability features. Moving activity to L2 can also reduce MEV pressure on L1.

Mature L2 Proof Systems

What problem are we solving?

Currently, most Rollups are not truly trustless. There exists a security committee that has the ability to override the behavior of the (optimistic or validity) proof system. In some cases, the proof system may not even run at all, or if it does run, it may only have an "advisory" function. The most advanced Rollups include: (i) some trustless application-specific Rollups, such as Fuel; (ii) as of the time of writing this article, Optimism and Arbitrum, two full EVM Rollups that have reached the partial-trustlessness milestone known as "Stage 1". The reason Rollups have not made greater progress is concern about bugs in the code. We need trustless Rollups, so we must confront and resolve this issue.

What is it, and how does it work?

First, let's review the "stage" system, which was originally introduced in a separate article.

Stage 0: Users must be able to run nodes and synchronize the chain. If verification is completely trusted/centralized, that is also acceptable.

Stage 1: There must be a (trustless) proof system that ensures only valid transactions are accepted. A security committee that can overturn the proof system is allowed, but it must have a 75% threshold vote. Additionally, the quorum-blocking portion of the committee (i.e., 26%+) must be outside the main company building the Rollup. A weaker upgrade mechanism (e.g., DAO) is allowed, but it must have a sufficiently long delay so that if it approves a malicious upgrade, users can withdraw their funds before the upgrade goes live.

Stage 2: There must be a (trustless) proof system that ensures only valid transactions are accepted. The security committee is only allowed to intervene when there are provable errors in the code, for example, if two redundant proof systems are inconsistent with each other, or if one proof system accepts two different post-state roots for the same block, or if nothing is accepted for a sufficiently long time (e.g., a week). An upgrade mechanism is allowed, but it must have a long delay.

Our goal is to reach Stage 2. The main challenge in reaching Stage 2 is to gain sufficient confidence that the proof system is actually trustworthy enough. There are two main methods to achieve this:

- Formal verification: We can use modern mathematics and computational techniques to prove that (optimistic and validity) proof systems only accept blocks that conform to the EVM specification. These techniques have existed for decades, but recent advances (such as Lean 4) have made them more practical, and advancements in AI-assisted proofs may further accelerate this trend.

- Multi-provers: Create multiple proof systems and place the funds in a 2-of-3 (or larger) multisig among these proof systems and a security committee (or other gadgets with trust assumptions, such as TEEs). If the proof systems agree, the security committee has no power; if they disagree, the security committee can only choose one of their answers, and it cannot unilaterally impose its own.

A diagram of multi-provers, combining an optimistic proof system, a validity proof system, and a security committee.
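The multi-prover rule can be read as a 2-of-3 decision between two proof systems and the security committee. The following is a minimal sketch of that logic under this reading; the function name, argument names, and the use of strings for state roots are all illustrative assumptions rather than any Rollup's actual contract interface.

```python
def finalize_state_root(optimistic_root, validity_root, committee_choice=None):
    """Return the state root to finalize, or None if nothing can be finalized yet.

    - If both proof systems agree, their answer wins and the committee is ignored.
    - If they disagree, the committee may only pick one of the two answers.
    """
    if optimistic_root == validity_root:
        return optimistic_root
    if committee_choice in (optimistic_root, validity_root):
        return committee_choice
    return None


# Provers agree: the committee cannot override them.
print(finalize_state_root("0xaaa", "0xaaa", committee_choice="0xbbb"))   # 0xaaa

# Provers disagree: the committee breaks the tie, but only between the two.
print(finalize_state_root("0xaaa", "0xbbb", committee_choice="0xbbb"))   # 0xbbb
print(finalize_state_root("0xaaa", "0xbbb", committee_choice="0xccc"))   # None
```

This merging logic is small enough to audit line by line, which is the point: confidence in the overall system then rests on the proof systems failing independently rather than on trusting the committee.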

What are the links to existing research?

- EVM K Semantics (formal verification work from 2017): https://github.com/runtimeverification/evm-semantics

- Talk on the multi-prover concept (2022): https://www.youtube.com/watch?v=6hfVzCWT6YI

- Taiko plans to use multi-proofs: https://docs.taiko.xyz/core-concepts/multi-proofs/

What still needs to be done? What are the trade-offs?

For formal verification, the workload is substantial. We need to create a formally verified version of the entire SNARK prover for the EVM. This is an extremely complex project, although we have already begun work on it. There is a trick that can greatly simplify this task: we can create a formally verified SNARK prover for a minimized virtual machine (e.g., RISC-V or Cairo) and then implement the EVM within that minimized virtual machine (and formally prove its equivalence to other Ethereum virtual machine specifications).

For multi-provers, there are still two main parts that are not yet complete. First, we need to have sufficient confidence in at least two different proof systems, ensuring that each is reasonably secure and that if they have issues, those issues are different and unrelated (so they do not fail simultaneously). Second, we need a very high level of assurance in the underlying logic that merges the proof systems. This part of the code is much smaller. There are ways to make it very small, such as storing funds in a Safe multisig contract whose signers are contracts representing the individual proof systems, but this will increase on-chain gas costs. We need to find some balance between efficiency and security.

How does it interact with other parts of the roadmap?

Moving activity to L2 can reduce MEV pressure on L1.

Improvements in Cross-L2 Interoperability

What problem are we solving?

A major challenge facing the current L2 ecosystem is that users find it difficult to navigate. Additionally, the simplest methods often reintroduce trust assumptions: centralized bridges, RPC clients, etc. We need to make using the L2 ecosystem feel like using a unified Ethereum ecosystem.

What is it? How does it work?

Improvements in cross-L2 interoperability come in many categories. Theoretically, an Ethereum centered around Rollup is the same as an execution-sharded L1. The current Ethereum L2 ecosystem still has these shortcomings in practice:

Specific chain addresses: Addresses should contain chain information (L1, Optimism, Arbitrum, etc.). Once this is achieved, cross-L2 sending processes can be implemented simply by placing the address in the "send" field, allowing wallets to handle how to send in the background (including using cross-chain protocols).

Payment requests for specific chains: It should be easy and standardized to create messages in the form of "send me X amount of Y type tokens on chain Z." This mainly has two application scenarios: (i) payments between individuals or between individuals and merchant services; (ii) DApp requests for funds.

Cross-chain exchanges and gas payments: There should be a standardized open protocol to express cross-chain operations, such as "I will send 1 Ether (on Optimism) to the person who sends me 0.9999 Ether on Arbitrum," and "I will send 0.0001 Ether (on Optimism) to the person who includes this transaction on Arbitrum." ERC-7683 is an attempt at the former, while RIP-7755 is an attempt at the latter, although both have a broader application scope than these specific use cases.

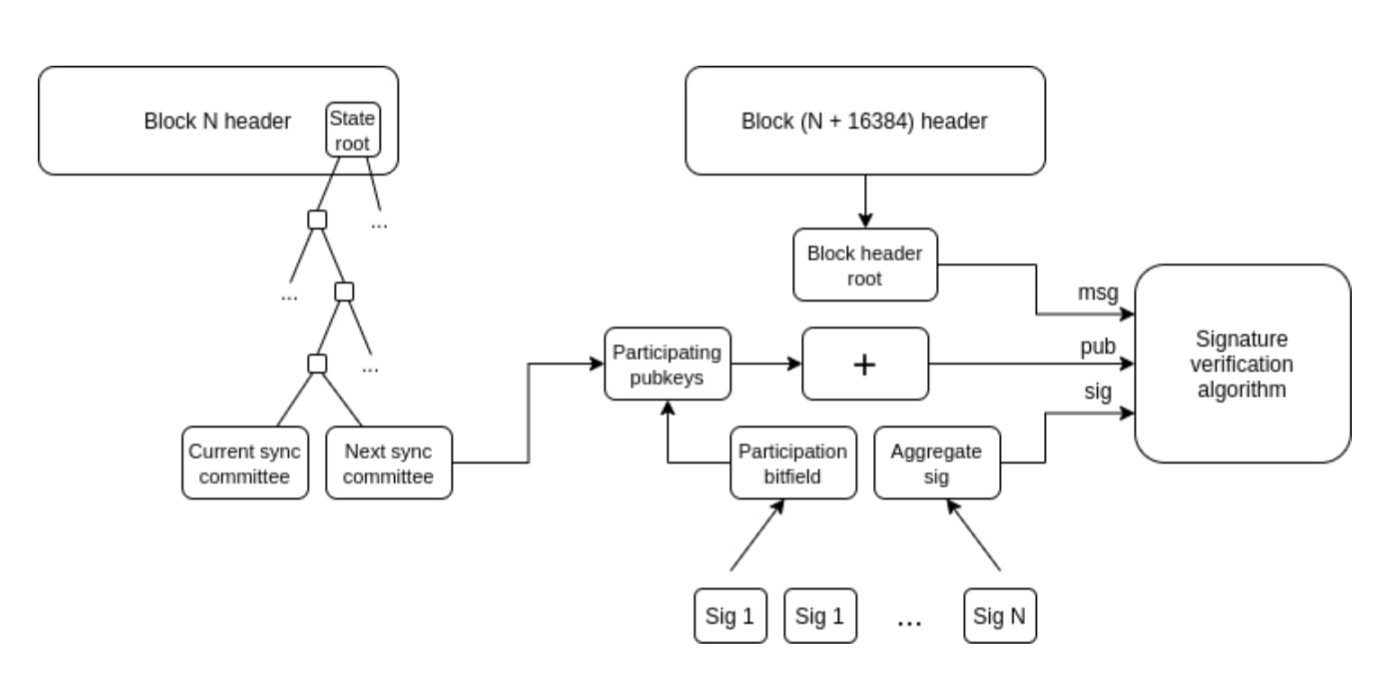

Light clients: Users should be able to actually verify the chain they are interacting with, rather than just trusting RPC providers. Helios from a16z crypto can do this (for Ethereum itself), but we need to extend this trustlessness to L2. ERC-3668 (CCIP-read) is one strategy to achieve this.

How light clients update their view of the Ethereum header chain. Once the header chain is obtained, Merkle proofs can be used to verify any state object. Once you have the correct L1 state object, you can use Merkle proofs (and signatures if you want to check pre-confirmations) to verify any state object on L2. Helios has already accomplished the former. Extending to the latter is a standardization challenge.

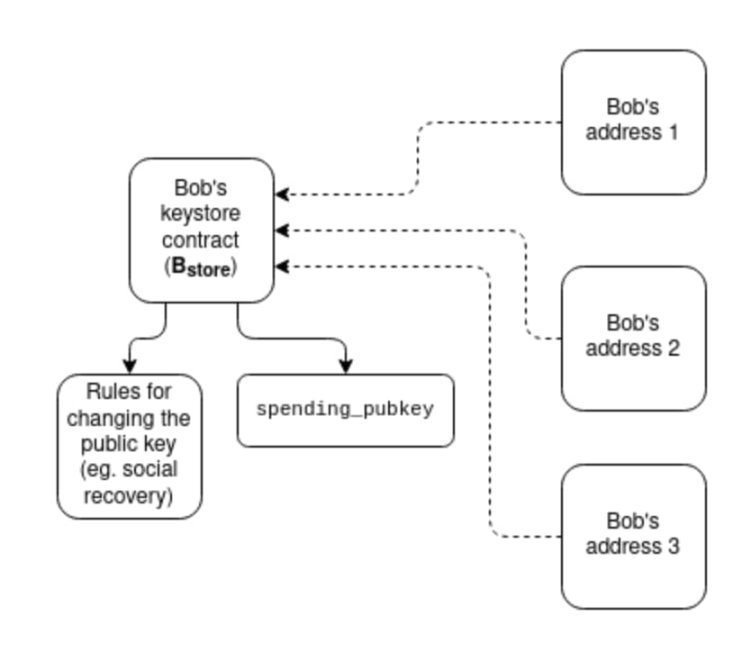

Keystore wallets: Nowadays, if you want to update the keys controlling your smart contract wallet, you must update them on all N chains where that wallet exists. A keystore wallet is a technology that allows keys to exist in only one place (either on L1 or potentially later on L2), and then any L2 that has a copy of the wallet can read the keys from it. This means updates only need to be done once. To improve efficiency, keystore wallets require L2 to have a standardized way to read information from L1 at no cost; there are two proposals for this, namely L1SLOAD and REMOTESTATICCALL.

How keystore wallets work

A more radical "shared token bridge" concept: Imagine a world where all L2s are validity proof Rollups that commit to Ethereum every slot. Even in such a world, transferring an asset from one L2 to another in its native state still requires withdrawals and deposits, which incurs significant L1 gas fees. One way to solve this problem is to create a shared minimalist Rollup whose sole function is to maintain which L2 owns each type of token and how much balance each has, allowing these balances to be updated in bulk through a series of cross-L2 sending operations initiated by any L2. This would enable cross-L2 transfers without paying L1 gas fees for each transfer, and without using liquidity provider-based technologies like ERC-7683.

Synchronous composability: Allowing synchronous calls to occur between a specific L2 and L1, or among multiple L2s. This helps improve the financial efficiency of DeFi protocols. The former can be achieved without any cross-L2 coordination; the latter requires shared sequencing. Based Rollups are automatically friendly to all of these techniques.
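To make the chain-specific address idea concrete, here is a minimal sketch of how a wallet might parse such an address and decide whether a transfer needs a cross-chain route. It assumes the `shortName:address` text form proposed in ERC-3770; the short names used in the example (`eth`, `arb1`, `oeth`), the placeholder addresses, and the function names are assumptions for illustration only.

```python
import re

# "shortName:0x..." with a 20-byte hex address (assumed ERC-3770-style format).
_CHAIN_ADDRESS = re.compile(r"^([A-Za-z0-9_-]+):(0x[0-9a-fA-F]{40})$")


def parse_chain_address(text):
    """Split a chain-specific address into (chain short name, hex address)."""
    match = _CHAIN_ADDRESS.match(text)
    if match is None:
        raise ValueError(f"not a chain-specific address: {text!r}")
    return match.group(1), match.group(2)


def plan_transfer(sender, recipient):
    """Decide in the background whether a send is same-chain or cross-chain."""
    source_chain, _ = parse_chain_address(sender)
    destination_chain, destination = parse_chain_address(recipient)
    route = "direct" if source_chain == destination_chain else "cross-chain"
    return {"route": route, "destination_chain": destination_chain, "to": destination}


alice = "oeth:0x" + "11" * 20   # placeholder address on one L2
bob = "arb1:0x" + "22" * 20     # placeholder address on another L2

print(plan_transfer(alice, bob)["route"])     # cross-chain
print(plan_transfer(alice, alice)["route"])   # direct
```

The user only ever pastes one string into the "send" field; choosing a bridge or intent protocol for the `cross-chain` case is left to the wallet.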

What are the links to existing research?

- Chain-specific address: ERC-3770: https://eips.ethereum.org/EIPS/eip-3770

- ERC-7683: https://eips.ethereum.org/EIPS/eip-7683

- RIP-7755: https://github.com/wilsoncusack/RIPs/blob/cross-l2-call-standard/RIPS/rip-7755.md

- Scroll keystore wallet design patterns: https://hackmd.io/@haichen/keystore

- Helios: https://github.com/a16z/helios

- ERC-3668 (sometimes referred to as CCIP read): https://eips.ethereum.org/EIPS/eip-3668

- Justin Drake's proposal on "based (shared) pre-confirmations": https://ethresear.ch/t/based-preconfirmations/17353

- L1SLOAD (RIP-7728): https://ethereum-magicians.org/t/rip-7728-l1sload-precompile/20388

- REMOTESTATICCALL in Optimism: https://github.com/ethereum-optimism/ecosystem-contributions/issues/76

- AggLayer, which includes the idea of a shared token bridge: https://github.com/AggLayer

What still needs to be done? What are the trade-offs?

Many of the examples above face the dilemma of when to standardize and which layers to standardize. If standardization occurs too early, it may entrench a suboptimal solution. If it occurs too late, it may lead to unnecessary fragmentation. In some cases, there exists a short-term solution that has weaker properties but is easier to implement, as well as an "ultimately correct" long-term solution that may take years to realize.

These tasks are not just technical issues; they are also (and may primarily be) social issues that require cooperation between L2, wallets, and L1.

How does it interact with other parts of the roadmap?

Most of these proposals are "higher-level" structures, so they have little impact on L1-level considerations. One exception is shared sequencing, which has a significant impact on maximum extractable value (MEV).

Scaling Execution on L1

What problem are we solving?

If L2s become very scalable and successful while L1 can still process only a very small volume of transactions, Ethereum faces several risks:

- The economic position of ETH as an asset becomes riskier, which in turn affects the long-term security of the network.

- Many L2s benefit from a close connection to the highly developed financial ecosystem on L1; if that ecosystem weakens significantly, the incentive to be an L2 (rather than an independent L1) diminishes.

- It will take a long time before L2s offer the same level of security guarantees as L1.

- If an L2 fails (for example, because its operator acts maliciously or disappears), users will still need to go through L1 to recover their assets. L1 therefore needs to be strong enough to at least occasionally handle the highly complex and chaotic wind-down of an L2.

For these reasons, continuing to scale L1 itself and ensuring it can accommodate an increasing number of use cases is very valuable.

What is it? How does it work?

The simplest way to scale is to raise the gas limit directly. However, this risks centralizing L1 and thereby undermining another property that makes Ethereum L1 so valuable: its credibility as a robust base layer. How far a plain gas limit increase can sustainably go is still debated, and the answer depends on which other technologies are implemented to make larger blocks easier to verify (e.g., history expiry, statelessness, L1 EVM validity proofs). Another area that needs continued improvement is the efficiency of Ethereum client software, which is already far better optimized today than it was five years ago. An effective strategy for raising the L1 gas limit will involve accelerating the development of these verification technologies.
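As a rough worked example of what a gas limit increase buys, the sketch below converts a block gas limit into simple transfers per second. The 12-second slot time and the 21,000-gas cost of a plain ETH transfer are protocol constants that the text above does not state, and the gas limit values are illustrative assumptions rather than proposals from the article.

```python
# Back-of-the-envelope throughput estimate. Assumptions (not from the article):
# a 12-second slot, 21,000 gas per plain ETH transfer, and the sample limits below.
SLOT_SECONDS = 12
TRANSFER_GAS = 21_000


def transfers_per_second(gas_limit: int) -> float:
    """Upper bound on plain transfers per second if blocks held nothing else."""
    transfers_per_block = gas_limit / TRANSFER_GAS
    return transfers_per_block / SLOT_SECONDS


for limit in (30_000_000, 60_000_000, 300_000_000):
    print(f"{limit:>11,} gas -> {transfers_per_second(limit):7.1f} transfers/s")
```

Throughput grows only linearly with the limit, while every verifying node has to process proportionally larger blocks, which is why the limit is tied to progress on verification technology.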

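A second strategy is to identify specific features and types of computation that can be made cheaper without harming the network's decentralization or security. Examples include:

- EOF: A new EVM bytecode format that is friendlier to static analysis and allows faster implementations. Given these efficiency gains, EOF bytecode could be assigned lower gas costs.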

- Multi-dimensional gas pricing: Setting different base fees and limits for computation, data, and storage can increase the average capacity of Ethereum L1 without increasing the maximum capacity (thus avoiding new security risks).

- Reducing gas costs for specific opcodes and precompiles: historically, gas costs for certain underpriced operations have been raised several times to prevent denial-of-service attacks. Much less has been done, and much more could be, to lower the gas costs of overpriced operations. For example, addition is much cheaper to compute than multiplication, yet the ADD and MUL opcodes are priced close together today (3 and 5 gas, respectively). ADD could be made cheaper, and even simpler opcodes such as PUSH cheaper still. EOF as a whole is better optimized in this respect.

- EVM-MAX and SIMD: EVM-MAX is a proposal to support more efficient native big-number modular arithmetic as a separate module of the EVM. Unless deliberately exported, values computed by EVM-MAX can only be accessed by other EVM-MAX opcodes, which leaves more room to optimize the format in which these values are stored. SIMD (single instruction, multiple data) is a proposal to let the same instruction execute efficiently over an array of values. Together, the two can form a powerful coprocessor alongside the EVM for implementing cryptographic operations more efficiently. This is particularly useful for privacy protocols and L2 proof systems, so it helps both L1 and L2 scaling.
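The multi-dimensional gas pricing item above can be illustrated with a toy block builder. This is a minimal sketch under simplifying assumptions: only two resources, made-up unit costs, and greedy inclusion. The names `Tx`, `fill_single_limit`, and `fill_per_resource` are hypothetical and do not come from EIP-7706.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    compute: int  # units of execution the transaction consumes
    data: int     # units of data the transaction consumes


def fill_single_limit(txs, gas_limit):
    """One shared limit: compute and data compete for the same budget."""
    included, used = [], 0
    for tx in txs:
        cost = tx.compute + tx.data
        if used + cost <= gas_limit:
            included.append(tx)
            used += cost
    return included


def fill_per_resource(txs, compute_limit, data_limit):
    """Separate limits: each resource is capped independently."""
    included, compute_used, data_used = [], 0, 0
    for tx in txs:
        fits_compute = compute_used + tx.compute <= compute_limit
        fits_data = data_used + tx.data <= data_limit
        if fits_compute and fits_data:
            included.append(tx)
            compute_used += tx.compute
            data_used += tx.data
    return included


# Hypothetical mempool: three compute-only and three data-only transactions.
mempool = [Tx(compute=10, data=0), Tx(compute=0, data=10)] * 3
one_dim = fill_single_limit(mempool, gas_limit=30)
multi_dim = fill_per_resource(mempool, compute_limit=30, data_limit=30)
print(len(one_dim), len(multi_dim))  # prints "3 6"
```

In both cases a block can never contain more than 30 units of compute or 30 units of data, so the worst case a node must handle is unchanged, yet the per-resource block carries twice as many transactions when demand is mixed.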

These improvements will be discussed in more detail in a future article on the Splurge.

Finally, the third strategy is native rollups (or enshrined rollups): essentially, creating many copies of the EVM that run in parallel, which yields a model equivalent to what rollups provide but integrated much more natively into the protocol.
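To make the EVM-MAX and SIMD ideas above more concrete, here is a small sketch of a modular arithmetic module that keeps values in an internal format and only converts on explicit export. Montgomery form is used purely as an example of such a format; it is an assumption of this sketch, not something the EVM-MAX proposal is stated to require, and the `ModContext` class and its method names are hypothetical.

```python
class ModContext:
    """Toy modular-arithmetic module with an opaque internal representation."""

    def __init__(self, modulus: int):
        if modulus % 2 == 0:
            raise ValueError("Montgomery form needs an odd modulus")
        self.n = modulus
        self.k = modulus.bit_length()
        self.r_mask = (1 << self.k) - 1
        # n' = -n^{-1} mod 2^k (three-argument pow with -1 needs Python 3.8+)
        self.n_prime = (-pow(modulus, -1, 1 << self.k)) & self.r_mask
        self.r2 = pow(1 << self.k, 2, modulus)

    def _redc(self, t: int) -> int:
        """Montgomery reduction: returns t * 2^-k mod n for 0 <= t < n * 2^k."""
        m = ((t & self.r_mask) * self.n_prime) & self.r_mask
        u = (t + m * self.n) >> self.k
        return u - self.n if u >= self.n else u

    def load(self, x: int) -> int:
        """Import an ordinary integer into the internal format."""
        return self._redc((x % self.n) * self.r2)

    def mulmod(self, a: int, b: int) -> int:
        return self._redc(a * b)

    def addmod(self, a: int, b: int) -> int:
        s = a + b
        return s - self.n if s >= self.n else s

    def store(self, a: int) -> int:
        """Explicitly export a value back to an ordinary integer."""
        return self._redc(a)


# Sample odd modulus (the secp256k1 field prime), chosen only for illustration.
P = 2**256 - 2**32 - 977
ctx = ModContext(P)
xs = [ctx.load(v) for v in (3, 5, 7)]
ys = [ctx.load(v) for v in (11, 13, 17)]
# SIMD-style step: the same operation applied across arrays of values.
products = [ctx.mulmod(a, b) for a, b in zip(xs, ys)]
total = products[0]
for p in products[1:]:
    total = ctx.addmod(total, p)
result = ctx.store(total)  # 3*11 + 5*13 + 7*17 = 217
```

Because intermediate values never leave the module, the conversion cost is paid only at `load` and `store`, and every operation in between works on the cheaper internal format.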

What are the links to existing research?

- Polynya's Ethereum L1 scaling roadmap: https://polynya.mirror.xyz/epju72rsymfB-JK52uYI7HuhJ-WzM735NdP7alkAQ

- Multi-dimensional gas pricing: https://vitalik.eth.limo/general/2024/05/09/multidim.html

- EIP-7706: https://eips.ethereum.org/EIPS/eip-7706

- EOF: https://evmobjectformat.org/

- EVM-MAX: https://ethereum-magicians.org/t/eip-6601-evm-modular-arithmetic-extensions-evmmax/13168

- SIMD: https://eips.ethereum.org/EIPS/eip-616

- Native rollups: https://mirror.xyz/ohotties.eth/P1qSCcwj2FZ9cqo3_6kYI4S2chW5K5tmEgogk6io1GE

- Max Resnick's interview on the value of scaling L1: https://x.com/BanklessHQ/status/1831319419739361321

- Justin Drake discusses scaling using SNARKs and native rollups: https://www.reddit.com/r/ethereum/comments/1f81ntr/comment/llmfi28/

What still needs to be done? What are the trade-offs?

There are three strategies for L1 scaling that can be pursued individually or in parallel:

- Improve technology (e.g., client code, stateless clients, history expiry) to make L1 easier to verify, then raise the gas limit.

- Reduce the costs of specific operations to increase average capacity without increasing worst-case risks.

- Native rollups (i.e., creating N parallel copies of the EVM).

Each of these techniques carries its own trade-offs. For example, native rollups share many of the composability weaknesses of regular rollups: you cannot send a single transaction that synchronously executes operations across several of them, as you can with contracts on the same L1 (or L2). Raising the gas limit takes away from other benefits that easier L1 verification could otherwise deliver, such as a larger share of users running verifying nodes and more solo stakers. Depending on how it is done, making specific operations cheaper in the EVM may increase the EVM's overall complexity.
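The composability limitation can be shown with a toy model in which each parallel EVM copy applies a transaction atomically to its own state only. This is a deliberately simplified sketch, not a description of any native rollup design; `EvmCopy` and its `execute` method are hypothetical names.

```python
class EvmCopy:
    """One of N parallel EVM instances, each with its own isolated state."""

    def __init__(self, balances):
        self.balances = dict(balances)

    def execute(self, transfers):
        """Apply every transfer atomically: all succeed, or state is untouched."""
        staged = dict(self.balances)
        for sender, receiver, amount in transfers:
            if staged.get(sender, 0) < amount:
                return False  # revert: staged changes are discarded
            staged[sender] -= amount
            staged[receiver] = staged.get(receiver, 0) + amount
        self.balances = staged
        return True


# Same copy: a two-leg swap whose second leg fails reverts as a whole.
single = EvmCopy({"alice": 5, "bob": 3})
swap_ok = single.execute([("alice", "bob", 5), ("bob", "alice", 9)])

# Two copies: each leg is a separate transaction, so the first leg stays
# committed on copy A even though the second leg fails on copy B.
copy_a = EvmCopy({"alice": 5})
copy_b = EvmCopy({"bob": 3})
leg_a_ok = copy_a.execute([("alice", "bob", 5)])
leg_b_ok = copy_b.execute([("bob", "alice", 9)])
```

On the single copy nothing changes when the swap fails, whereas across two copies Alice has already paid on copy A when Bob's leg fails on copy B. Real cross-rollup protocols work around this with asynchronous messaging or escrow, which is exactly the loss of synchronous composability described above.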

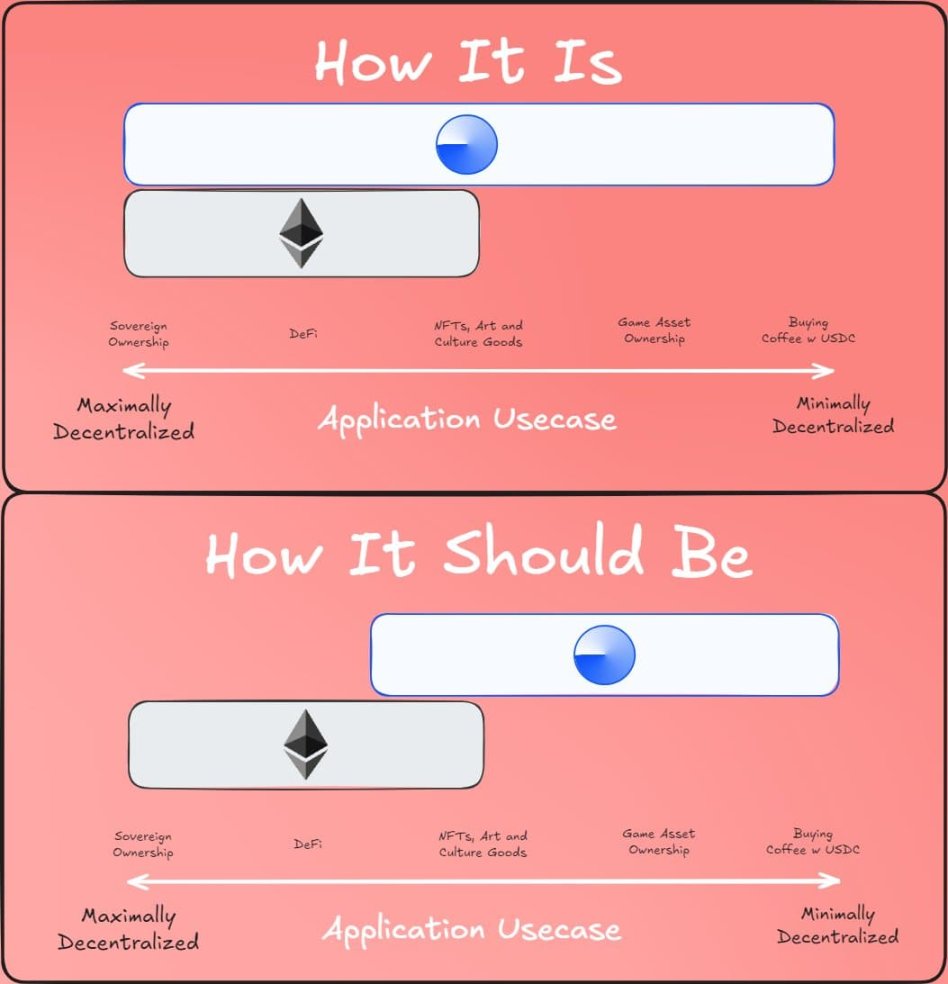

A significant question that any L1 scaling roadmap needs to address is: what is the ultimate vision for L1 and L2? Clearly, putting everything on L1 is absurd: potential use cases could involve hundreds of thousands of transactions per second, which would render L1 completely unmanageable (unless we adopt a native rollup approach). However, we do need some guiding principles to ensure we do not end up in a situation where the gas limit is increased tenfold, severely damaging the decentralization of Ethereum L1.

A perspective on the division of labor between L1 and L2

How does it interact with other parts of the roadmap?

Bringing more users onto L1 means improving not just scalability but other aspects of L1 as well. More MEV will stay on L1 (rather than becoming a problem only for L2s), so the need to handle it explicitly becomes more urgent. Fast slot times on L1 become much more valuable. All of this also depends heavily on verification of L1 (the Verge) going smoothly.

