When will any company surpass OpenAI? This question has likely puzzled many readers over the past year.

If any company in the world can surpass OpenAI, Google is the most promising contender.

As a North American AI giant, Google shares OpenAI's AGI goal and, like OpenAI, has world-class technical talent and global financial resources. Even the Transformer architecture at the core of OpenAI's large models was originally invented by Google.

However, since 2023, amid constant upheaval in the AI field, OpenAI has always stayed one step ahead of Google. Every time Google unveils a "revenge weapon" to regain the spotlight, OpenAI overshadows it.

For example, Google's latest blockbuster multimodal large model, Gemini 1.5, topped the tech trending lists for only a few hours before OpenAI's release of Sora drew far more attention and left it largely unnoticed.

The AI field is undoubtedly hosting the world's most exciting display of "speed and passion," with front-runner OpenAI winning handsomely and close pursuer Google losing respectably. Their situation, I found, is captured precisely by the hit films of this year's Spring Festival, the Year of the Dragon.

If OpenAI is as stunning and exciting as "YOLO," then Google is like the middle-aged racer in "Pegasus 2," charging bravely forward only to end up in a big crash instead of victory.

Who will claim the holy grail of AGI at the finish line remains unknown. But over the past year, simply watching the opening stages of this long-distance race has been exhilarating.

The AI showdown between Google and OpenAI has been full of twists and turns. Let's use this "duel of the titans" to take an overall look at the industrial confrontation among the North American AI giants.

Google's three consecutive defeats, the heated confrontation among North American AI giants

Currently, three North American AI giants are racing for the holy grail of AGI: OpenAI, Google, and Meta.

Meta has taken the open-source route, and its LLaMA large model series has built the most active open-source AI community in the world. OpenAI and Google, by contrast, share the same track, mainly building "closed-source" large models.

Although OpenAI has been mocked for no longer being "open," and a Google employee boldly wrote that "we have no moat, and neither does OpenAI," a closed-source business strategy, seen from another angle, must deliver high-quality models with irreplaceable competitive advantages to convince users to pay. This in turn pushes model makers to keep innovating to maintain their edge, making closed-source development an indispensable commercial force in the AI industry.

The confrontation among the three North American AI giants therefore comes down to Meta competing on its ecosystem while OpenAI and Google compete on models.

So how is the competition on the model track going?

Throughout 2023, Google, running on the same track as OpenAI, felt the full pressure of head-to-head competition.

This race can be divided into three stages:

Round 1: ChatGPT VS Bard.

The result goes without saying. In this round, OpenAI "picked the peaches" Google had planted, reaping the fruits of Google's work, and Google has trailed in OpenAI's wake ever since.

In November 2022, OpenAI released ChatGPT, setting off a global large language model boom.

The Transformer, the foundational technology behind ChatGPT, was introduced by Google, and the emergent abilities of large language models were identified by Google researcher Jason Wei (who later moved to OpenAI). By using Google's own technology to challenge Google's leadership in AI, OpenAI effectively "slapped Google in the face."

Google's response was to "get angry."

In March 2023, Google rushed out Bard. However, the model itself performed relatively poorly: at launch its functionality was limited, it supported only English, and it was open to only a small group of users, leaving it no match for ChatGPT.

Round 2: GPT-4 VS PaLM 2.

Some say Google adopted a "Tian Ji's horse racing" strategy, the classic Chinese tactic of sacrificing your weakest horse against the opponent's strongest, by deliberately fielding the relatively weak Bard in the first round. That makes sense, but it is hard to compete when every one of OpenAI's horses is a good one.

OpenAI quickly released its upgraded model, GPT-4, and opened up the GPT-4 API, pulling further ahead of Google.

At the Google I/O 2023 conference in May, Google introduced PaLM 2 to compete with GPT-4, but it too was a "transitional product." Zoubin Ghahramani, a vice president at Google Research, described PaLM 2 as an improvement on earlier models; it only slightly narrowed the gap between Google and OpenAI and did not surpass GPT-4 overall.

In this round, Google still lagged behind. Clearly aware of this, Google announced at the same conference that it was training PaLM's successor, Gemini, betting billions on it and preparing to stage a "prince's revenge" at the end of the year.

Round 3: Gemini family VS Sora+GPT-5.

In December 2023, Google's Gemini "arrived late." It is currently Google's most powerful and versatile AI model, dubbed the "revenge weapon" by the media. Meanwhile, OpenAI was caught up in its own palace drama, a "Zhen Huan returns to the palace" saga in which Sam Altman was ousted and then reinstated, and released no particularly explosive products. Could Google finally reclaim everything that belonged to it?

Unfortunately, Google failed to stage a "return of the dragon king" in AI.

Gemini comes in three sizes: Nano, Pro, and Ultra. Gemini Pro lagged behind OpenAI's GPT models on commonsense reasoning tasks, and Gemini Ultra held only a few percentage points' advantage over GPT-4, a model OpenAI had launched nearly a year earlier. Moreover, the multimodal demo video Google used to claim victory over GPT-4 was found to have been edited in post-production, and Gemini was also reported to have been trained on Chinese-language data generated by a Chinese model while being presented as original.

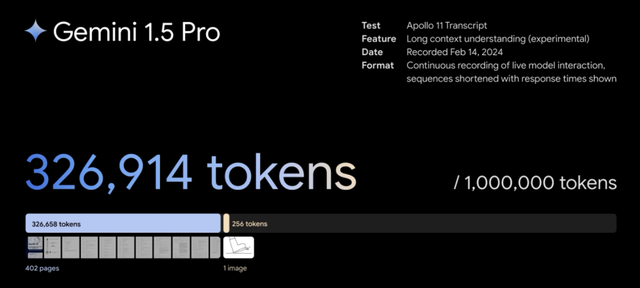

Google then rallied and quickly released Gemini 1.5, a multimodal large model that can reliably process up to 1 million tokens, setting a record for the longest context window.

It would have been an exciting achievement, had it not been for Sora.

Just a few hours later, OpenAI released the text-to-video generation model Sora, once again stunning the world with unprecedented video generation quality and its promise as a "world model," stealing the spotlight that should have belonged to Gemini 1.5 and reinforcing OpenAI's leadership in AI. The prevailing view is that OpenAI remains one step ahead of Google.

There had already been speculation that GPT-5 was largely trained, and faced with Gemini 1.5, Google's strongest model yet, some have even called on Sam Altman to say when he will release GPT-5.

For now, this roughly year-long North American round of "Tian Ji's horse racing" has ended with Google losing three times in a row.

Divergent paths to AGI, Google's difficult journey

AGI is a long race. On a longer timeline, one year of confrontation between Google and OpenAI, with its temporary wins and losses, may not mean much. Simply qualifying for the top-level track is itself proof of Google's AI strength.

More worth discussing than who won or lost is why Google, the "model king," has consistently been left behind by OpenAI no matter how hard it tries.

In a horse race, losing once may be a tactical mistake, but losing repeatedly may point to problems at the source, such as the horse's breed, the stable, or the fodder.

Going back to the source, Google and OpenAI have taken different paths toward the same goal.

The goal is the same: to achieve artificial general intelligence and win the holy grail of AGI.

The paths differ. OpenAI has treated general language capability as the foundation for AGI, so it adopted the Transformer, the architecture that proved crucial in NLP, and built the GPT series of models, culminating in the stunning debut of ChatGPT.

Google, on the other hand, has spent many years using reinforcement learning and deep learning to tackle a wide range of AI problems, accumulating broad technical expertise: DeepMind's groundbreaking AlphaGo and biology-changing AlphaFold, for example, as well as NLP technologies such as the Transformer, which came out of Google Brain.

It is like two drivers preparing cars for a race. OpenAI picked one class for the AGI race, say Formula racing, and built its models around language, engineering every part of the car (the model), from its structure, length, and width to its engine and cylinders. Google's DeepMind, unsure which vehicle will win the AGI race, tried Formula cars, sports cars, and motorcycles alike.

Initially, neither path was clearly better. But as large language models emerged as the most promising route to AGI, OpenAI's chosen path came to look more likely to succeed, and the path taken by Google's DeepMind revealed obvious shortcomings:

1. Diverse directions, high costs. Broad innovation across many technical directions consumed large sums and deepened the tension over commercialization between DeepMind and Google's parent company, Alphabet. While OpenAI received substantial funding and accelerated, Google resorted to layoffs to cut costs so it could invest more in AI.

2. Too many choices, hard to focus. Google pioneered many technologies, but its attention and depth were spread thin, so few of them turned into successes for Google. The most typical example is the Transformer architecture, invented by Google but popularized by OpenAI. Likewise, the emergent abilities of large language models were identified by a Google researcher, but the work received little attention inside Google and was only pushed forward after he left for OpenAI.

3. Slow execution, slow results. Google's conservatism in AI is well known, and it has been slow to turn advanced research into products. One former Google employee complained that Google projects are typically hyped up, then never released, and scrapped a year later. Sora's success illustrates the point: Google had the relevant technical groundwork and research results on diffusion models, the technique used to train Sora, yet failed to produce a product like it.

In short, because Google initially bet on the wrong track, OpenAI's lead was already established by the time large language models became the most promising path to AGI. Switching to OpenAI's technological track at that point naturally left Google at a disadvantage.

One misstep after another, persistence is everything

To be fair, Google has been actively addressing its problems, including mistakes in strategic technology choices, inefficient internal management, overstaffing, and the loss of AI talent.

In April last year, Google merged its two AI "heavyweight" teams, Google Brain and DeepMind, to jointly develop Gemini. The result performs well: version 1.5 is currently among the most advanced large models in the world. Internal resources have also tilted heavily toward AI, and some AI talent that had left has returned to Google.

These actions show that once the track is clear, Google can pursue OpenAI with first-rate determination and speed.

But its continued lag illustrates one point: your own failure is frightening, but a rival's success is even more painful.

Despite Google's efforts to fix its problems and push forward on large models, it has been unable to match OpenAI's acceleration.

On the one hand, OpenAI's research team has gone all out, while Google's newly merged team is still adjusting. Bill Peebles, one of Sora's core developers, said the team worked intensely, barely sleeping, almost every day for a year. After the merger of Google Brain and DeepMind, by contrast, many employees had to give up familiar software and projects to develop Gemini, and the resulting internal reshuffling, with its project delays and stalls, is bound to hinder Google's efforts to catch up with OpenAI.

In addition, while Google is trying to win talent back after the fact, OpenAI's pull on top AI talent worldwide is overwhelming. In February, Altman publicly stated on social media, "All key resources are in place, very focused on AGI," while actively recruiting. Ultimately, competition in AI comes down to competition for talent, because AGI depends above all on intellectual resources and the pool of top talent is limited, which leaves observers anxious about whether Google can catch up with OpenAI.

In the movie "Pegasus 2," after the protagonist makes another run and crashes, he does not keep chasing victory on the track; as a racer who deeply loves the sport, he stepped onto the track simply to prove himself.

The confrontation between Google and OpenAI cannot be simply categorized as a win or a loss. Just as Google said in "Why We Focus on AI (and to what end)": "We believe that AI can become a foundational technology that fundamentally changes the lives of people all over the world—this is our goal and our passion!"

All the AI "racers" who are brave enough to step onto the track deserve applause. And this AGI race, full of speed and passion, will surely bring even more excitement to us in the audience.

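Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page infringes your rights, please email proof of the relevant rights and proof of identity to support@aicoin.com, and the platform's staff will review it.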