Article Source: New Wisdom

This comprehensive research report critically analyzes the current status and future direction of generative AI, and explores how innovative achievements such as Google's Gemini and the highly anticipated OpenAI Q* will change the practical applications in multiple fields.

Image Source: Generated by Wujie AI

Recently, researchers from institutions such as the Australasian Institute of Technology, Massey University, and Royal Melbourne Institute of Technology conducted a comprehensive review, delving into the evolving landscape of generative AI.

The study specifically focused on the revolutionary impact of the Mixture of Experts (MoE) and multimodal learning, as well as the speculative progress towards Artificial General Intelligence (AGI).

Paper Link: https://arxiv.org/abs/2312.10868

The review critically examines the current state and future trajectory of generative artificial intelligence (AI), exploring how innovations such as Google's Gemini and the anticipated OpenAI Q* project are reshaping research priorities and applications in various fields, including an analysis of their impact on the classification of generative AI research.

It evaluates the computational challenges, scalability, and real-world impact of these technologies, while emphasizing their potential to drive significant progress in fields such as healthcare, finance, and education.

It discusses the emerging academic challenges brought about by the proliferation of AI-themed and AI-generated preprints, examining their impact on the peer review process and scholarly communication.

Finally, it emphasizes the importance of integrating ethical and human-centered approaches in AI development, ensuring alignment with societal norms and well-being, and outlines a strategic vision for future AI research focused on the balanced and prudent use of MoE, multimodal learning, and AGI in generative AI.

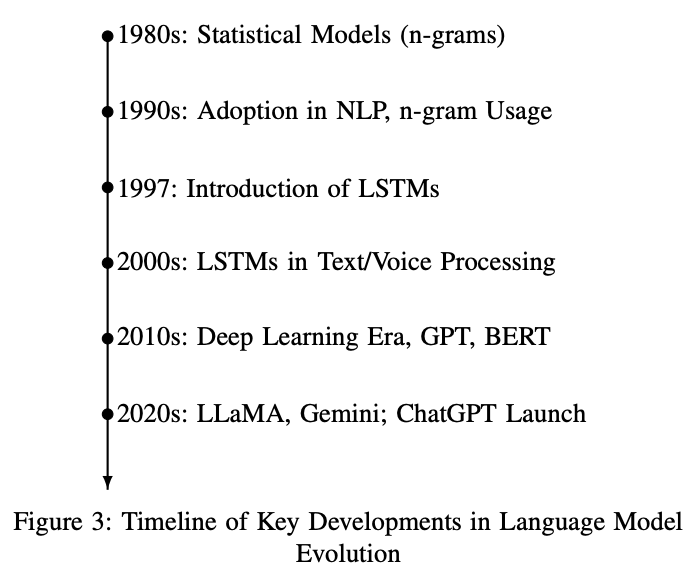

The historical background of artificial intelligence (AI) can be traced back to Alan Turing's "imitation game," early computational theory, and the development of the first neural networks and machine learning, laying the foundation for today's advanced models.

This evolution has been marked by key moments such as the rise of deep learning and reinforcement learning, which are crucial in shaping contemporary AI trends, including the emergence of complex Mixture of Experts (MoE) and multimodal AI systems. These advancements illustrate the dynamic and evolving nature of AI technology.

The evolution of artificial intelligence (AI) reached a pivotal turning point with the emergence of Large Language Models (LLMs), particularly ChatGPT, developed by OpenAI, and the more recent Gemini from Google. This technology has not only fundamentally transformed industry and academia but also reignited critical discussions about AI consciousness and its potential threats to humanity.

The development of such advanced AI systems has reshaped the research landscape. They include significant competitors such as Anthropic's Claude and, now, Gemini, which is described as offering several advances over GPT-3 and Google's own LaMDA.

Gemini's ability to learn through bidirectional dialogue and its "spike-and-slab" attention mechanism, which lets it focus on the relevant parts of the context in multi-turn conversations, represent a significant leap toward models better suited to multi-domain dialogue applications. These innovations in LLMs, including the Mixture of Experts approach adopted by Gemini, signal a shift towards models that can handle diverse inputs and support multimodal approaches.

In this context, speculation about OpenAI's project named Q* (Q-Star) has surfaced, reportedly combining the powerful capabilities of LLM with complex algorithms such as Q-learning and A* (A-Star algorithm), further fostering a dynamic research environment.
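Since Q*'s design has not been published, nothing concrete can be said about how it would use Q-learning. As a reminder of what classical Q-learning itself does, the following is a minimal tabular sketch; the states, actions, reward, and hyperparameters (`alpha`, `gamma`) are hypothetical values chosen for illustration and have no connection to OpenAI's system.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the new value.

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    # Value of the best action available in the next state.
    best_next = max(q[(next_state, a)] for a in actions)
    # Temporal-difference target: immediate reward plus discounted future value.
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Hypothetical toy transition: taking "right" in state 0 leads to state 1
# and yields a reward of 1.0. All Q-values start at 0.0.
q = defaultdict(float)
actions = ["left", "right"]
new_value = q_learning_update(q, 0, "right", 1.0, 1, actions)
print(new_value)  # 0.5
```

A*, the other algorithm named in the speculation, is a best-first graph search guided by a heuristic estimate of the remaining cost; it is not shown here.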

Shifts in AI Research Popularity

With the continued development of Large Language Models (LLMs), reflected in innovations such as Gemini and Q*, a large number of studies have emerged that aim to chart future research directions. These studies range from identifying emerging trends to highlighting areas of rapid progress.

The dichotomy between established methods and early adoption is becoming increasingly evident, with "hot topics" in LLM research shifting towards multimodal capabilities and conversation-driven learning, as demonstrated by Gemini.

The rapid dissemination of research through preprints accelerates knowledge sharing but also brings the risk of reduced academic scrutiny. Inherent bias issues highlighted by Retraction Watch, as well as concerns about plagiarism and fabrication, pose significant obstacles.

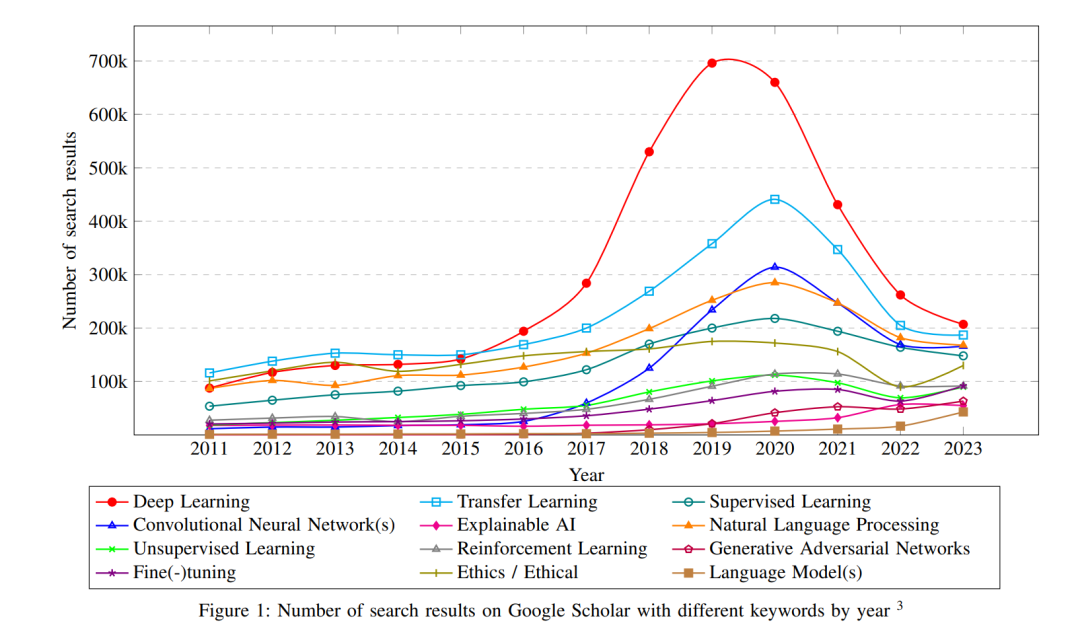

Therefore, the academic community stands at a crossroads, requiring unified efforts to refine research directions in line with the rapidly evolving landscape of the field, a change that seems partially traceable through the fluctuating popularity of different research keywords over time.

The release of generative models such as GPT and the widespread commercial success of ChatGPT have had a significant impact.

As shown in Figure 1, the rise and fall of certain keywords seem to be related to significant industry milestones, such as the release of the "Transformer" model in 2017, the release of the GPT model in 2018, and the commercialization of ChatGPT-3.5 in December 2022.

For example, the search peak for "deep learning" is consistent with breakthroughs in neural network applications, while the surge in interest in "natural language processing" aligns with models like GPT and LLaMA redefining the possibilities of language understanding and generation.

Despite some fluctuations, the sustained focus on "ethics/morality" in AI research reflects a persistent and deeply rooted concern for the ethical dimension of AI, emphasizing that ethical considerations are not just reactive measures but an inseparable and enduring dialogue in AI discussions.

From an academic perspective, it is quite interesting to consider whether these trends imply a causal relationship, i.e., technological advancements driving research focus, or the flourishing research itself propelling technological development.

The article also explores the profound social and economic impacts of AI progress. The authors examine how AI technology reshapes various industries, alters employment patterns, and influences socio-economic structures. This analysis highlights the opportunities and challenges posed by AI in the modern world, emphasizing its role in driving innovation and economic growth, while also considering the ethical implications and potential disruptive effects on society.

Future research may provide clearer insights, but the synchronous interaction between innovation and academic curiosity remains a hallmark of AI progress.

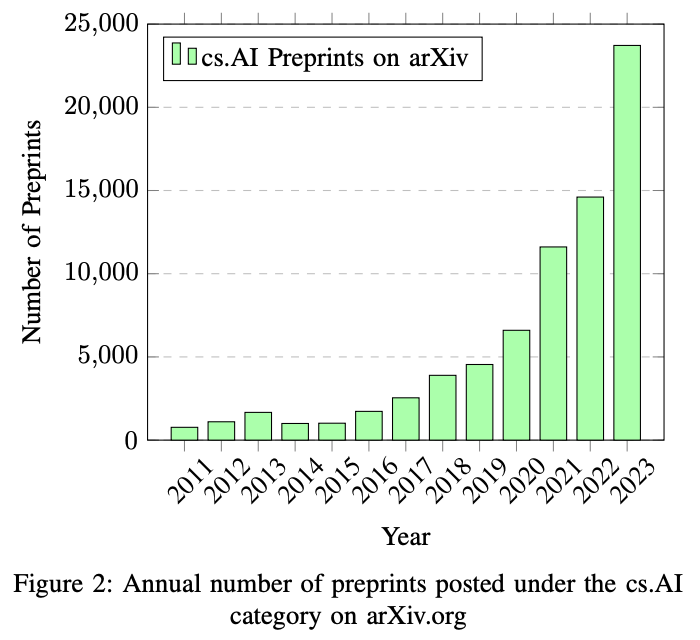

Meanwhile, as shown in Figure 2, the exponential growth in the number of preprints published under the category of computer science > artificial intelligence (cs.AI) on arXiv seems to signal a paradigm shift in research dissemination within the AI community.

While rapid dissemination of research findings facilitates swift knowledge exchange, it also raises concerns about information verification.

The surge in preprints may lead to the spread of unverified or biased information, as these studies have not undergone the typical rigorous review and potential retractions associated with peer-reviewed publications.

This trend underscores the need for the academic community to scrutinize and critique such work carefully, especially given the potential for these unreviewed studies to be cited and their findings to be disseminated.

Research Objectives

The motivation for this review is the formal unveiling of Gemini and the speculative discussions surrounding the Q* project, prompting a timely examination of the mainstream trends in generative artificial intelligence (AI) research.

The paper specifically contributes to understanding how the Mixture of Experts (MoE), multimodal, and Artificial General Intelligence (AGI) impact generative AI models, providing detailed analysis and future directions for these three key areas.

The paper aims to critically evaluate which existing research themes may become outdated or irrelevant, while delving into the emerging prospects in the rapidly changing landscape of LLMs.

The anticipated progress in AI will not only enhance capabilities in language analysis and knowledge synthesis but will also pioneer breakthroughs in areas such as Mixture of Experts (MoE), multimodal learning, and Artificial General Intelligence (AGI), signaling the obsolescence of traditional, statistically driven natural language processing techniques in many fields.

However, the enduring requirement for AI to align with human ethics and values remains a fundamental principle, and the speculative Q* project provides an unprecedented opportunity to spark discussions on how these advancements reshape the terrain of LLM research.

In this context, the insights of Jim Fan, a senior research scientist at NVIDIA, particularly regarding the fusion of learning and search algorithms for Q*, provide valuable perspectives on the potential technical architecture and capabilities of this effort.

The research methodology of the paper involves structured literature searches using key terms such as "Large Language Models" and "Generative AI."

The authors used filters in several academic databases, including IEEE Xplore, Scopus, ACM Digital Library, ScienceDirect, Web of Science, and ProQuest Central, to identify relevant articles published from 2017 (the release of the Transformer model) to 2023 (the time of writing this article).

The paper aims to analyze the technological impact of Gemini and Q*, and how they (as well as inevitable similar technologies) are changing research trajectories and opening up new perspectives in the field of AI.

In the process, three emerging research areas (MoE, multimodal learning, and AGI) are identified, which will profoundly reshape the research landscape of generative AI.

This investigation adopts a review-style approach, systematically mapping out a comprehensive and analytical research roadmap of the current and emerging trends in generative AI.

The primary contributions of this study are as follows:

1) A detailed examination of the evolving landscape of generative AI, emphasizing the advancements and innovations of technologies like Gemini and Q* and their widespread impact in the field of AI.

2) Analysis of the transformative effects of advanced generative AI systems on academic research, exploring how these developments are changing research methodologies, establishing new trends, and potentially rendering traditional methods obsolete.

3) Comprehensive evaluation of the ethical, social, and technical challenges arising from the integration of generative AI in academia, emphasizing the importance of aligning these technologies with ethical norms, ensuring data privacy, and formulating comprehensive governance frameworks.

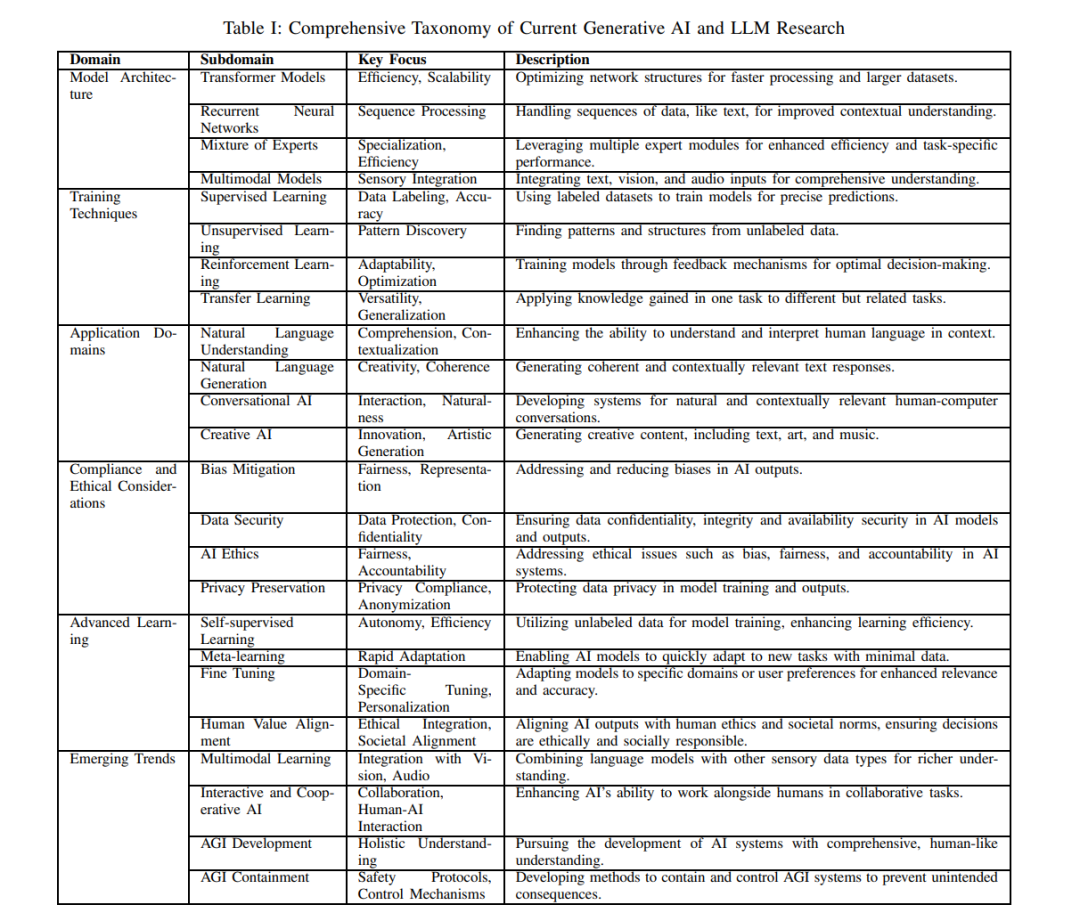

The Current Classification of Generative AI Research

The field of generative artificial intelligence (AI) is rapidly evolving, necessitating a comprehensive classification that covers the breadth and depth of research within the field.

As detailed in Table I, this classification categorizes the primary research and innovation areas of generative AI, serving as the foundational framework for understanding the current state of the field, guiding us through the evolving landscape of model architectures, advanced training methods, diverse application domains, ethical implications, and the complexity of emerging technological frontiers.

The architecture of generative AI models has undergone significant developments, with four key areas being particularly prominent:

- Transformer Models: Transformer models have revolutionized the field of AI, particularly in natural language processing (NLP), due to their higher efficiency and scalability. They employ advanced attention mechanisms to achieve enhanced context processing, enabling nuanced understanding and interaction. These models have also made significant advances in computer vision, as seen in vision Transformers such as EfficientViT and in work on detectors such as YOLOv8. These innovations illustrate the extensibility of Transformer models to domains such as object detection, improving not only performance but also computational efficiency.

- Recurrent Neural Networks (RNNs): RNNs excel in the field of sequence modeling, particularly for tasks involving language and temporal data, as their architecture is specifically designed to handle data sequences such as text, allowing them to effectively capture the context and order of inputs. This ability to process sequential information makes them indispensable in applications requiring a deep understanding of the temporal dynamics of data, such as natural language tasks and time series analysis. The ability of RNNs to maintain continuity over sequences is a key asset in a wider range of AI domains, especially in scenarios where context and historical data play crucial roles.

- Mixture of Experts Models (MoE): MoE models improve efficiency by distributing computation across multiple specialized expert modules. Transformer-based MoE layers route each token dynamically to a subset of experts, which lets these models scale to trillions of parameters and which the review credits with reducing memory footprint and computational costs. Because each expert focuses on different aspects of the data, the computational load is spread across experts, allowing more efficient and more specialized handling of complex tasks.

- Multimodal Models: Multimodal models integrate various sensory inputs such as text, vision, and audio, crucial for comprehensive understanding of complex datasets, particularly in transformative fields like medical imaging. These models achieve accurate and data-efficient analysis through the use of multi-view pipelines and cross-attention modules. The integration of diverse sensory inputs allows for more nuanced and comprehensive data interpretation, enhancing the models' ability to accurately analyze and understand various types of information. The simultaneous processing of different data types enables these models to provide a comprehensive view, particularly suitable for applications requiring in-depth and multi-faceted understanding of complex scenarios.
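The dynamic token routing mentioned in the MoE item above can be sketched as top-k gating: a gate scores every expert for a token, only the k best experts are evaluated, and their outputs are mixed using renormalized gate weights. This is a toy illustration under stated assumptions, not the routing used by Gemini or any specific model; the scalar "experts", the hard-coded `gate_logits`, and the function names are all hypothetical (in a real model the logits come from a learned layer and experts are neural sub-networks).

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(gate_logits, k=2):
    """Return [(expert_index, weight)] for the k highest-scoring experts.

    Weights are a softmax over the selected logits only, so they sum to 1.
    """
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, gate_logits, experts, k=2):
    """Evaluate only the selected experts and mix their outputs."""
    return sum(w * experts[i](x) for i, w in route_token(gate_logits, k))


# Hypothetical toy experts operating on a scalar "token".
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x, lambda x: x * x]
# Hypothetical gate scores for one token (normally produced by a learned gate).
gate_logits = [0.1, 2.0, -1.0, 2.0]

routing = route_token(gate_logits, k=2)
output = moe_forward(3.0, gate_logits, experts, k=2)
print(routing)  # experts 1 and 3, each with weight 0.5
print(output)   # 7.5
```

Only two of the four experts run for this token, which is the source of the per-token compute savings: total parameter count grows with the number of experts, while the work done per token grows only with k.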

Emerging trends in generative AI research are shaping the future of technology and human interaction, indicating a dynamic shift towards more integrated, interactive, and intelligent AI systems, pushing the boundaries of possibilities in the AI field. Key developments in this field include:

- Multimodal Learning: Multimodal learning in AI is a rapidly developing subfield focused on integrating language understanding, computer vision, and audio processing to achieve richer, multisensory contextual awareness. Recent developments, such as the Gemini model, have set new benchmarks by demonstrating state-of-the-art performance across various multimodal tasks, including natural image, audio and video understanding, and mathematical reasoning. Gemini's inherent multimodal design reflects seamless integration and operation across different types of information. Despite progress, the field of multimodal learning still faces ongoing challenges, such as improving architectures to effectively handle diverse data types, developing comprehensive datasets that accurately represent multifaceted information, and establishing benchmarks for evaluating the performance of these complex systems.

- Interactive and Collaborative AI: This subfield aims to enhance the ability of AI models to collaborate effectively with humans on complex tasks, thereby improving user experience and efficiency across applications including productivity and healthcare. Core aspects of this subfield involve improving interpretability, understanding human intent and behavior (theory of mind), and enabling scalable coordination between AI systems and humans. This collaborative approach is crucial for creating more intuitive and interactive AI systems that can assist and enhance human capabilities in diverse contexts.

- AGI Development: AGI represents the visionary goal of creating AI systems with comprehensive and diverse human-like cognitive capabilities, focusing on developing AI with deep and broad understanding capabilities closely related to human cognitive abilities. AGI involves not only replicating human intelligence but also creating systems capable of autonomously performing multiple tasks and demonstrating adaptability and learning abilities similar to humans. The pursuit of AGI is a long-term vision that continually pushes the boundaries of AI research and development.

- AGI Containment: Work on AGI safety and containment acknowledges the potential risks associated with highly advanced AI systems, focusing on ensuring that these systems not only excel technically but also align ethically with human values and societal norms. As we move towards developing superintelligent systems, establishing rigorous safety protocols and control mechanisms becomes crucial. Core areas of focus include mitigating representational biases, addressing distributional shifts, and correcting spurious correlations in AI models. The goal is to prevent unintended social consequences by aligning AI development with responsible and ethical standards.

Q*'s Reasoning Capabilities

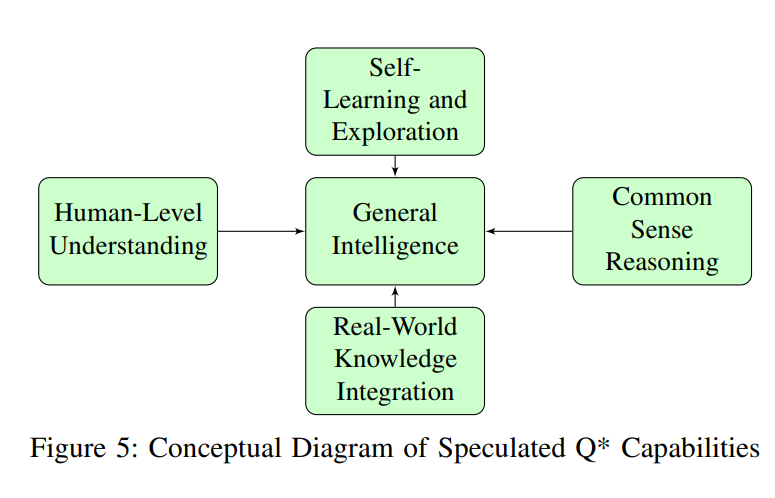

In the thriving field of AI, the highly anticipated Q* project is seen as a potential breakthrough, heralding advancements that may redefine the landscape of AI capabilities (see Figure 5).

A. Enhanced General Intelligence

Q*'s development in the field of general intelligence represents a paradigm shift from specialized to holistic AI, indicating an expansion of the model's cognitive capabilities toward something resembling human intelligence. This advanced form of general intelligence involves integrating various neural network architectures and machine learning technologies, enabling AI to seamlessly process and synthesize multifaceted information. The universal adapter approach, mimicking models like T0, may give Q* the ability to rapidly absorb knowledge from various domains. This approach would allow Q* to learn adaptive module plugins, enhancing its ability to handle new data types while retaining existing skills, and so combine narrow specializations into a comprehensive, adaptive, and versatile reasoning system.

B. Advanced Self-Learning and Exploration

In the field of advanced artificial intelligence (AI) development, Q* is expected to represent a significant evolution in self-learning and exploration capabilities. It is speculated that it will use sophisticated policy neural networks (NNs), similar to those in AlphaGo, but substantially enhanced to handle the complexity of language and reasoning tasks. These networks are expected to employ advanced reinforcement learning techniques, such as Proximal Policy Optimization (PPO), which stabilizes policy updates and improves sample efficiency, a key factor in autonomous learning. Combining these NNs with cutting-edge search algorithms, possibly including new iterations of Tree of Thoughts or Graph of Thoughts, is predicted to enable Q* to autonomously navigate and absorb complex information. This approach may leverage graph neural networks to enhance meta-learning capabilities, enabling Q* to rapidly adapt to new tasks and environments while retaining previously acquired knowledge.
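To make concrete how PPO stabilizes policy updates, the sketch below computes its clipped surrogate objective: the probability ratio between the new and old policy is clipped to the range [1 - eps, 1 + eps], so a single update cannot be rewarded for moving the policy too far. This illustrates the published PPO objective only; whether or how Q* uses it is speculation, and the log-probabilities, advantages, and `clip_eps` value here are hypothetical inputs.

```python
import math


def ppo_clipped_objective(new_logps, old_logps, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective of PPO (to be maximized).

    For each sample: min(r * A, clip(r, 1 - eps, 1 + eps) * A),
    where r = pi_new(a|s) / pi_old(a|s), computed from log-probabilities.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logps, old_logps, advantages):
        ratio = math.exp(new_lp - old_lp)
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Taking the minimum makes the objective a pessimistic bound.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Hypothetical batch of two samples:
#  - ratio 1.5 with advantage +1: the gain is capped at 1.2
#  - ratio 0.5 with advantage -1: the clipped term (-0.8) is the minimum
new_logps = [math.log(1.5), math.log(0.5)]
old_logps = [0.0, 0.0]
advantages = [1.0, -1.0]
objective = ppo_clipped_objective(new_logps, old_logps, advantages)
print(round(objective, 6))  # approximately 0.2
```

In the first sample the policy gains nothing from pushing the ratio beyond 1.2, which is the mechanism that discourages overly large policy steps.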

C. Superior Human-Level Understanding

It is speculated that Q*'s aspiration to achieve superior human-level understanding may depend on the high-level integration of multiple neural networks, including a value neural network (VNN), similar to the evaluation components in systems like AlphaGo. This network would not only assess accuracy and relevance in language and reasoning processes but also delve into the subtleties of human communication. The model's deep understanding capabilities could be enhanced through advanced natural language processing algorithms and technologies, such as those found in Transformer architectures like DeBERTa. These algorithms would enable Q* to interpret not only text but also subtle social and emotional aspects, including intent, emotion, and underlying meanings. By combining sentiment analysis and natural language reasoning, Q* could explore various social and emotional insights, including empathy, irony, and attitudes.

D. Advanced Common-Sense Reasoning

It is predicted that Q*'s development in advanced common-sense reasoning will integrate complex logic and decision-making algorithms, possibly combining elements of symbolic AI and probabilistic reasoning. This integration aims to endow Q* with an intuitive understanding of everyday logic and a comprehension similar to human common sense, bridging the significant gap between artificial intelligence and natural intelligence. The enhancement of Q*'s reasoning capabilities may involve world knowledge structured in graphs, including physical and social engines, similar to the engines in the CogSKR model. This physics-based approach is expected to capture and explain the everyday logic frequently lacking in contemporary artificial intelligence systems. By leveraging large-scale knowledge bases and semantic networks, Q* could effectively address complex social and real-world scenarios, making its reasoning and decision-making more aligned with human experience and expectations.

E. Extensive Integration of Real-World Knowledge

It is speculated that Q*'s approach to integrating extensive real-world knowledge may involve the use of advanced formal verification systems, providing a solid foundation for verifying its logical and factual reasoning. When combined with complex neural network architectures and dynamic learning algorithms, this approach would enable Q* to deeply engage with the complexity of the real world, surpassing the limitations of traditional artificial intelligence. Additionally, Q* may use mathematical theorem proving techniques for verification, ensuring that its reasoning and outputs are not only accurate but also ethically grounded. The inclusion of an ethical classifier in this process would further enhance its ability to provide reliable and responsible understanding of, and interaction with, real-world scenarios.

Conclusion

This review survey has set out to explore transformative trends in generative AI research, with particular focus on speculative advancements like Q* and the march towards Artificial General Intelligence (AGI).

The analysis highlights a crucial paradigm shift driven by innovations such as Mixture of Experts (MoE), multimodal learning, and the pursuit of AGI. These advancements foreshadow a future where AI systems may significantly enhance their capabilities in reasoning, contextual understanding, and creative problem-solving.

Despite these advancements, there are still unresolved issues and research gaps.

These include ensuring the ethical alignment of advanced AI systems with human values and societal norms, a challenge that becomes increasingly complex due to their growing autonomy.

The safety and robustness of AGI systems in diverse environments also remain significant research gaps. Addressing these challenges requires a multidisciplinary approach, integrating ethical, social, and philosophical perspectives.

This survey emphasizes the critical areas of interdisciplinary research in the future of AI, highlighting the integration of ethical, social, and technological perspectives. This approach will promote collaborative research, bridging the gap between technological advancements and societal needs, ensuring that AI development aligns with human values and global well-being.

As we continue to progress, the balance between AI advancement and human creativity is not only a goal but a necessity, ensuring that the role of AI is a complementary force, enhancing our innovation and problem-solving capabilities.

Our responsibility is to guide these advancements, enrich human experiences, and ensure that technological progress aligns with ethical standards and societal well-being.

Reference: https://arxiv.org/abs/2312.10868
