Source: AIGC Open Community

Image Source: Generated by Wujie AI

When large language models, diffusion models, and other AI models are introduced, their descriptions often carry figures such as 100 billion, 500 billion, or 2,000 billion parameters. You may wonder what these parameters actually represent: the model's size, its memory limit, or perhaps its usage rights?

To mark ChatGPT's first anniversary, "AIGC Open Community" explains what these parameters mean in plain, accessible terms. Since OpenAI has not disclosed GPT-4's parameter details, we will use GPT-3 and its 175 billion parameters as the example.

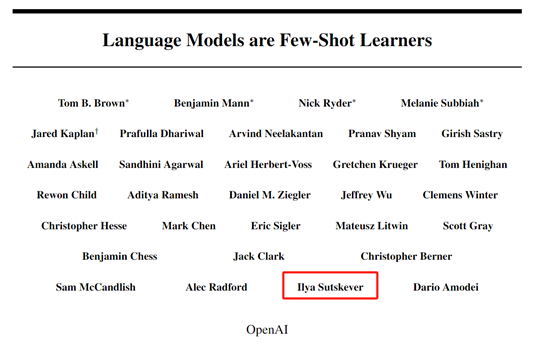

On May 28, 2020, OpenAI released the GPT-3 paper, "Language Models are Few-Shot Learners," which describes the model's parameters, architecture, and capabilities in detail.

Paper link: https://arxiv.org/abs/2005.14165

Meaning of Parameters in Large Models

According to the paper, GPT-3 has 175 billion parameters, compared with just 1.5 billion for GPT-2, an increase of more than 100 times.
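Where does a figure like 175 billion come from? A GPT-style Transformer's parameter count can be roughly estimated from its shape. The sketch below is a rule of thumb, not OpenAI's exact accounting: it assumes about 12·d² weights per layer (attention plus feed-forward), ignores biases and layer norms, and plugs in the published shapes of GPT-3 (96 layers, width 12,288, 2,048-token context) and the largest GPT-2 (48 layers, width 1,600, 1,024-token context).

```python
def estimate_transformer_params(n_layers: int, d_model: int, vocab_size: int, n_ctx: int) -> int:
    """Rough parameter count for a GPT-style decoder-only Transformer (rule of thumb).

    Per layer: ~4*d^2 for the attention projections (Q, K, V, output)
    plus ~8*d^2 for the feed-forward block (d -> 4d -> d), i.e. ~12*d^2.
    Biases and layer norms are ignored because they are comparatively tiny.
    """
    per_layer = 12 * d_model ** 2
    token_embeddings = vocab_size * d_model
    position_embeddings = n_ctx * d_model
    return n_layers * per_layer + token_embeddings + position_embeddings

gpt3 = estimate_transformer_params(n_layers=96, d_model=12288, vocab_size=50257, n_ctx=2048)
gpt2 = estimate_transformer_params(n_layers=48, d_model=1600, vocab_size=50257, n_ctx=1024)

print(f"GPT-3 estimate: {gpt3 / 1e9:.1f}B parameters")
print(f"GPT-2 estimate: {gpt2 / 1e9:.2f}B parameters")
print(f"ratio: {gpt3 / gpt2:.0f}x")
```

The estimates land close to the published 175B and 1.5B, and their ratio comes out above 100×, matching the paper's comparison.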

This jump in parameters brought across-the-board gains in the model's ability to store, learn, remember, understand, and generate, which helps explain why ChatGPT is so versatile.

In a large model, then, "parameters" are the numerical values inside the model that store its knowledge and learned abilities. They can be thought of as the model's "memory cells": together they determine how the model processes input, makes predictions, and generates text.

In a neural network, these parameters are mainly weights and biases, and training refines them over many iterations. Weights determine how strongly each input influences the next layer's computation, while biases are added to shift the resulting values.

Weights are the core parameters of a neural network, representing the strength or importance of the relationship between input features and outputs. Every connection between two network layers has its own weight, which determines how much an input node (neuron) contributes to the output computed in the next layer.
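To make "one weight per connection" concrete, here is a minimal pure-Python sketch of a single fully connected layer. The weight and bias values are hypothetical, picked by hand so the influence of each input is easy to read off; in a real model they would be learned during training.

```python
def dense_forward(x, W, b):
    """Fully connected layer: y[j] = sum_i W[j][i] * x[i] + b[j]."""
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]

def count_params(W, b):
    # One weight per input-output connection plus one bias per output neuron.
    return sum(len(row) for row in W) + len(b)

# Hypothetical values: 3 input neurons feeding 2 output neurons.
W = [
    [0.8, 0.1, 0.0],   # output 0 depends mostly on input 0
    [0.0, 0.2, -1.5],  # output 1 depends mostly (negatively) on input 2
]
b = [0.0, 0.5]
x = [1.0, 2.0, 0.5]

y = dense_forward(x, W, b)
print(y)                   # approximately [1.0, 0.15]
print(count_params(W, b))  # 8 (6 weights + 2 biases)
```

A layer with n inputs and m outputs always has n·m weights and m biases, which is why parameter counts grow so quickly as layers get wider.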

Biases are the other type of network parameter. A bias is added to each node's weighted sum as an offset, which shifts the point at which the activation function responds instead of tying it to zero, giving the network finer control over how strongly each node activates.
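The effect of a bias is easiest to see with a single neuron. In this sketch, the sigmoid activation and all numbers are illustrative choices (Transformers such as GPT-3 typically use other activation functions). With an input of zero the weight contributes nothing, so the bias alone decides whether the neuron is mostly off, half on, or mostly on; it also moves the input value at which the neuron switches on.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x: float, w: float, b: float) -> float:
    # Weighted input plus bias, passed through a sigmoid activation.
    return sigmoid(w * x + b)

def switch_point(w: float, b: float) -> float:
    # The sigmoid output crosses 0.5 where w * x + b == 0, i.e. at x = -b / w.
    return -b / w

w = 2.0
for b in (-3.0, 0.0, 3.0):
    # x = 0, so only the bias determines the output.
    print(f"b = {b:+.1f}: output at x = 0 is {neuron(0.0, w, b):.3f}")

print(switch_point(w, -3.0))  # 1.5: a negative bias delays activation
```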

As a loose analogy, picture GPT-3 as an assistant working in an enormous office with 175 billion drawers (parameters), which together hold information about words, phrases, grammar rules, punctuation, and so on.

When you give ChatGPT a request such as "Write marketing copy for a pair of shoes for social media," the assistant draws on the drawers related to marketing, copywriting, shoes, and so on, then recombines that information into text that fits your request.

During pre-training, GPT-3 learns languages and narrative structures by reading vast amounts of text, somewhat as a human does.

Whenever it processes new input or generates text, it opens these drawers and looks for the combination of information that best answers the question or produces coherent text.

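During training, whenever GPT-3's output is wrong, the training process adjusts the contents of the drawers (updates the parameters) so that it does better next time. Once training is finished, the parameters stay fixed while you chat with the model.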
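Adjusting the drawers during training is done with gradient descent: each parameter is nudged in the direction that reduces the prediction error. Below is a minimal sketch on a two-parameter model (one weight, one bias) fitted to four hypothetical points generated by y = 2x + 1. GPT-3 applies the same idea, via backpropagation and the Adam optimizer, to all 175 billion parameters at once.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w * x + b by plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start from "empty drawers"
    n = len(xs)
    for _ in range(epochs):
        preds = [w * x + b for x in xs]
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Step each parameter against its gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # hypothetical data from y = 2x + 1
w, b = train_linear(xs, ys)
print(f"w = {w:.4f}, b = {b:.4f}")
```

After training, w and b settle very close to 2 and 1. Once the loop ends nothing changes them, which is the sense in which a deployed model's parameters are fixed.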

Each parameter can thus be seen as a small decision point in the model's computation. More parameters give the model more decision-making capacity and finer control, letting it capture more complex patterns and subtler details in language.

Do more parameters always mean better performance?

In terms of performance, for large language models like ChatGPT, a higher number of parameters usually means the model has stronger learning, understanding, generation, and control capabilities.

However, more parameters also bring problems such as high computational costs, diminishing marginal returns, and overfitting. These weigh especially heavily on small and medium-sized enterprises and on individual developers who lack engineering capacity and computing resources.

Higher computational costs: The more parameters a model has, the more computing resources it consumes, so training a larger model takes more time and more expensive hardware.
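A widely used rule of thumb, assumed here rather than taken from this article, puts training compute at roughly 6 FLOPs per parameter per training token. With GPT-3's 175 billion parameters and its roughly 300 billion training tokens, this gives about 3.15 × 10^23 FLOPs, in line with the roughly 3.14 × 10^23 reported in the GPT-3 paper. The 1 petaFLOP/s cluster below is hypothetical.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    # Rule of thumb: ~6 FLOPs per parameter per training token
    # (~2 for the forward pass, ~4 for the backward pass).
    return 6 * n_params * n_tokens

TOKENS = 300e9  # GPT-3 was trained on roughly 300 billion tokens

gpt3 = training_flops(175e9, TOKENS)
small = training_flops(1.5e9, TOKENS)  # a GPT-2-sized model on the same data
print(f"175B model: {gpt3:.2e} FLOPs")
print(f"1.5B model: {small:.2e} FLOPs")
print(f"ratio: {gpt3 / small:.0f}x")

# Wall-clock time on a hypothetical cluster sustaining 1 petaFLOP/s (1e15 FLOP/s).
seconds = gpt3 / 1e15
print(f"about {seconds / 86400:.0f} days at 1 petaFLOP/s")
```

With the data held fixed, compute grows in direct proportion to the parameter count, so a model roughly 117 times larger costs roughly 117 times more to train.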

Diminishing marginal returns: As a model grows, each additional parameter contributes less to performance. Sometimes adding parameters brings no significant performance gain, only a heavier operating-cost burden.
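Diminishing returns can be illustrated with an empirical power law. This sketch borrows the loss-versus-size fit from Kaplan et al., "Scaling Laws for Neural Language Models" (2020), L(N) = (N_c / N)^α with N_c ≈ 8.8 × 10^13 and α ≈ 0.076. Those constants come from that study's setup and are used here only to show the shape of the curve, not to predict any real model's quality.

```python
# Assumed constants from the Kaplan et al. (2020) fit for non-embedding
# parameter count N; illustrative only.
N_C = 8.8e13
ALPHA_N = 0.076

def loss(n_params: float) -> float:
    return (N_C / n_params) ** ALPHA_N

sizes = [1.5e9 * 2 ** k for k in range(8)]  # keep doubling, starting from 1.5B
losses = [loss(n) for n in sizes]
gains = [a - b for a, b in zip(losses, losses[1:])]  # improvement bought by each doubling

for n, l in zip(sizes, losses):
    print(f"{n / 1e9:7.1f}B parameters -> predicted loss {l:.3f}")
for i, g in enumerate(gains, start=1):
    print(f"doubling #{i}: loss improves by {g:.4f}")
```

Because every doubling multiplies the predicted loss by the same factor, 2^-0.076 ≈ 0.949, the absolute improvement shrinks with each doubling, even though each doubling adds twice as many parameters as the one before.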

Difficulty in optimization: When a model has an extremely large number of parameters, it may run into the "curse of dimensionality": good solutions become harder to find, and performance can even degrade in certain areas. This appears to be the case in some areas for OpenAI's GPT-4 model.

Inference latency: Models with many parameters usually respond more slowly during inference, because generating each token involves computation across a huge number of parameters. GPT-4 shows this issue compared with GPT-3.
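The latency cost can be estimated with two rules of thumb, which are assumptions rather than measurements: a dense model spends about 2 FLOPs per parameter on each generated token, and when serving a single user, generation is often limited by how fast the weights can be read from memory. The 2 TB/s bandwidth and 16-bit (2-byte) weights below are hypothetical.

```python
def flops_per_token(n_params: float) -> float:
    # Rule of thumb: ~2 FLOPs (one multiply, one add) per parameter per generated token.
    return 2 * n_params

def min_seconds_per_token(n_params: float, bytes_per_param: float, bandwidth_bytes_per_s: float) -> float:
    # Lower bound when generation is memory-bound: every weight is read once per token.
    return n_params * bytes_per_param / bandwidth_bytes_per_s

BANDWIDTH = 2e12  # hypothetical: 2 TB/s of memory bandwidth

for name, n in [("1.5B model", 1.5e9), ("175B model", 175e9)]:
    t = min_seconds_per_token(n, bytes_per_param=2, bandwidth_bytes_per_s=BANDWIDTH)
    print(f"{name}: {flops_per_token(n):.1e} FLOPs/token, at least {t * 1000:.1f} ms/token")
```

Under these assumptions, the 175B model needs at least 175 ms per token versus 1.5 ms for the 1.5B model, before any other overhead.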

Therefore, if you are a small or medium-sized enterprise deploying a large model locally, you can choose a smaller model that still performs well thanks to high-quality training data, such as Llama 2, the open-source large language model released by Meta.
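Whether a model fits local hardware is largely arithmetic: parameter count times bytes per parameter. This sketch assumes a hypothetical 24 GB GPU, uses the nominal Llama 2 sizes (7B, 13B, 70B), and treats int8/int4 as 1 and 0.5 bytes per weight, ignoring quantization overhead as well as the extra memory needed for activations and the KV cache.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}

def weights_gb(n_params: float, precision: str) -> float:
    # Memory for the weights alone, in decimal GB; activations and the KV cache need extra room.
    return n_params * BYTES_PER_PARAM[precision] / 1e9

def fitting_precisions(n_params: float, gpu_gb: float) -> list:
    # Precisions at which the weights alone fit in the given GPU memory.
    return [p for p in BYTES_PER_PARAM if weights_gb(n_params, p) <= gpu_gb]

GPU_MEMORY_GB = 24  # hypothetical: a single 24 GB consumer GPU

models = {"Llama 2 7B": 7e9, "Llama 2 13B": 13e9, "Llama 2 70B": 70e9, "GPT-3 175B": 175e9}
for name, n in models.items():
    fits = fitting_precisions(n, GPU_MEMORY_GB)
    print(f"{name}: {weights_gb(n, 'fp16'):.0f} GB in fp16; fits in {GPU_MEMORY_GB} GB as: {', '.join(fits) or 'none'}")
```

The 7B model fits even in 16-bit precision, the 13B model only after quantization, and neither Llama 2 70B nor a 175B-parameter model fits on a single card of this size.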

If you lack local resources and prefer cloud services, you can access models through APIs, such as OpenAI's latest GPT-4 Turbo, Baidu's Wenxin large model, or Microsoft's Azure OpenAI.
