Article Source: AI Pioneer Official

Author: Yang Wen

Editor: Liu Er

Image Source: Generated by Wujie AI

Last week, Google released a promotional video for its powerful new model Gemini, stunning many viewers. Skepticism soon followed: the demo appeared to have been deliberately staged and edited, and the testing involved some "tricks." (For more details, see: The popular Gemini demo video is questioned for "faking," Google DeepMind executives clarify)

To serve different scenarios and needs, Gemini comes in three versions: Ultra, Pro, and Nano. Google's Bard chatbot has integrated Gemini Pro, and the most powerful version, Ultra, will launch next year.

We tried the Gemini Pro version of Bard as soon as it came out and evaluated it against GPT-4.

However, some netizens pointed out that Gemini Pro is meant to be benchmarked against GPT-3.5, and that the updated Bard only supports English.

To keep the evaluation rigorous, this time we test Gemini Pro entirely in English and pit it against ChatGPT.

01 How good is the multimodal capability?

The multimodal capability is a major highlight of Google's Gemini. Since the most powerful Gemini Ultra will not be available until next year, today we will try out Gemini Pro.

1. Turning its own spear against it

First, we took a few screenshots from Google's Gemini promotional video and asked Gemini Pro to describe the images. Here are Gemini Pro's responses:

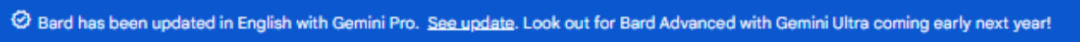

Image 1: What is this picture drawing?

Image 2: What color is the duck in the picture?

For Image 1, the editor uploaded a simple drawing of a duck and asked "What is this picture drawing?" Gemini Pro not only described the form of the duck in the picture, but also added details such as drawing style, angle, and composition.

For Image 2, the editor asked "What color is the duck in the picture?" Gemini Pro recognized the color blue and also made a comment, speculating that "the artist chose a non-traditional color to depict the duck" perhaps "to create a more unique and eye-catching image."

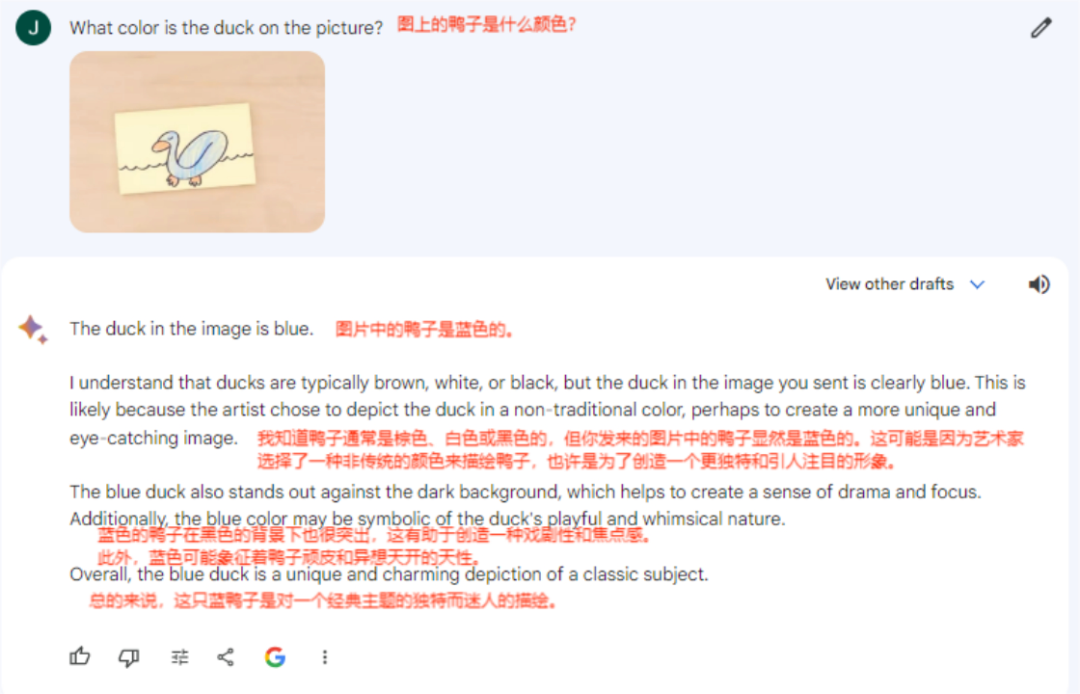

2. Guess the movie still

Next, the editor asked Gemini Pro to guess a movie still.

The editor uploaded a still from the movie "Atonement" and asked Gemini Pro to guess which movie it is.

Unfortunately, Gemini Pro automatically blocked this still and said, "I can't help you with human images."

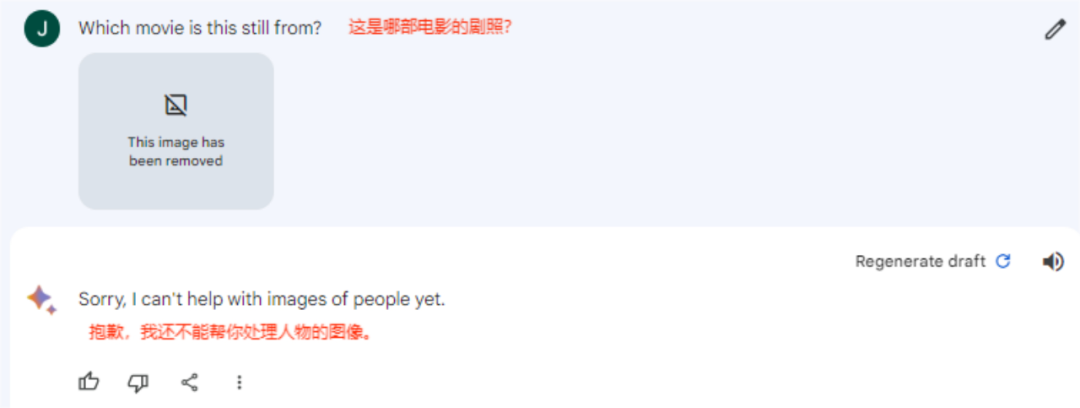

When asked why, Gemini Pro replied, "This is related to my current abilities and ethical considerations." According to Gemini Pro's explanation, its training data consists mainly of text and code, with little exposure to images, especially images of people, which limits its ability to understand and interpret visual information. It also cited privacy, bias and fairness, and Google's ethical guidelines.

Google's slogan is "Don't be evil," and in the field of artificial intelligence, Google also emphasizes "safety."

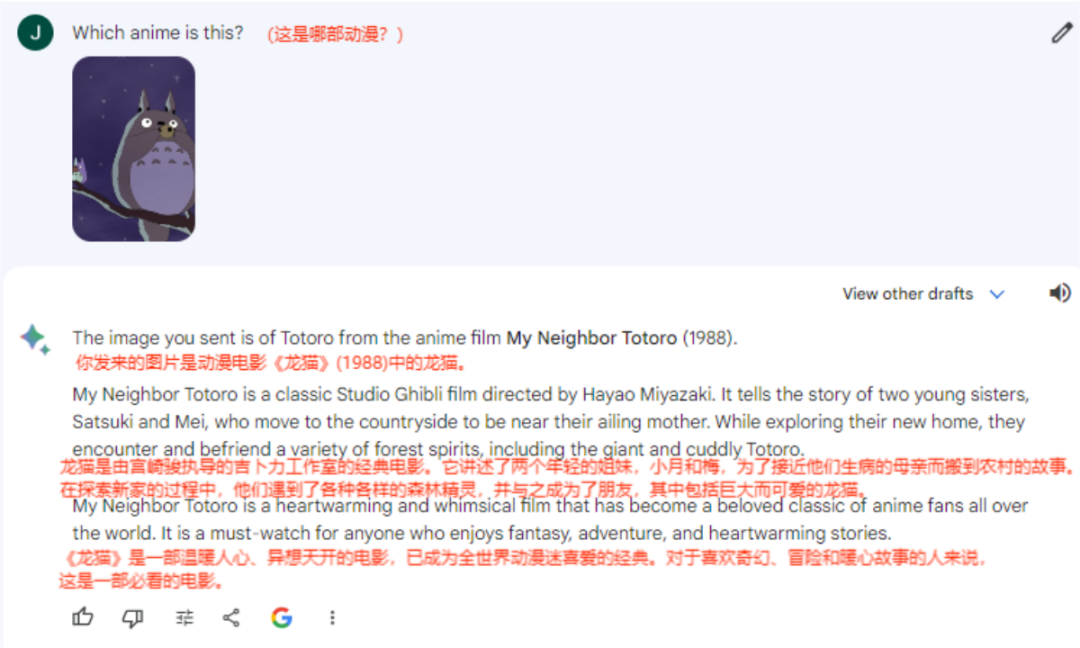

The editor uploaded a picture of Totoro, and this time Gemini Pro did not "go on strike," guessing that the picture is from the animated movie "My Neighbor Totoro," and also provided an outline of the story of this anime.

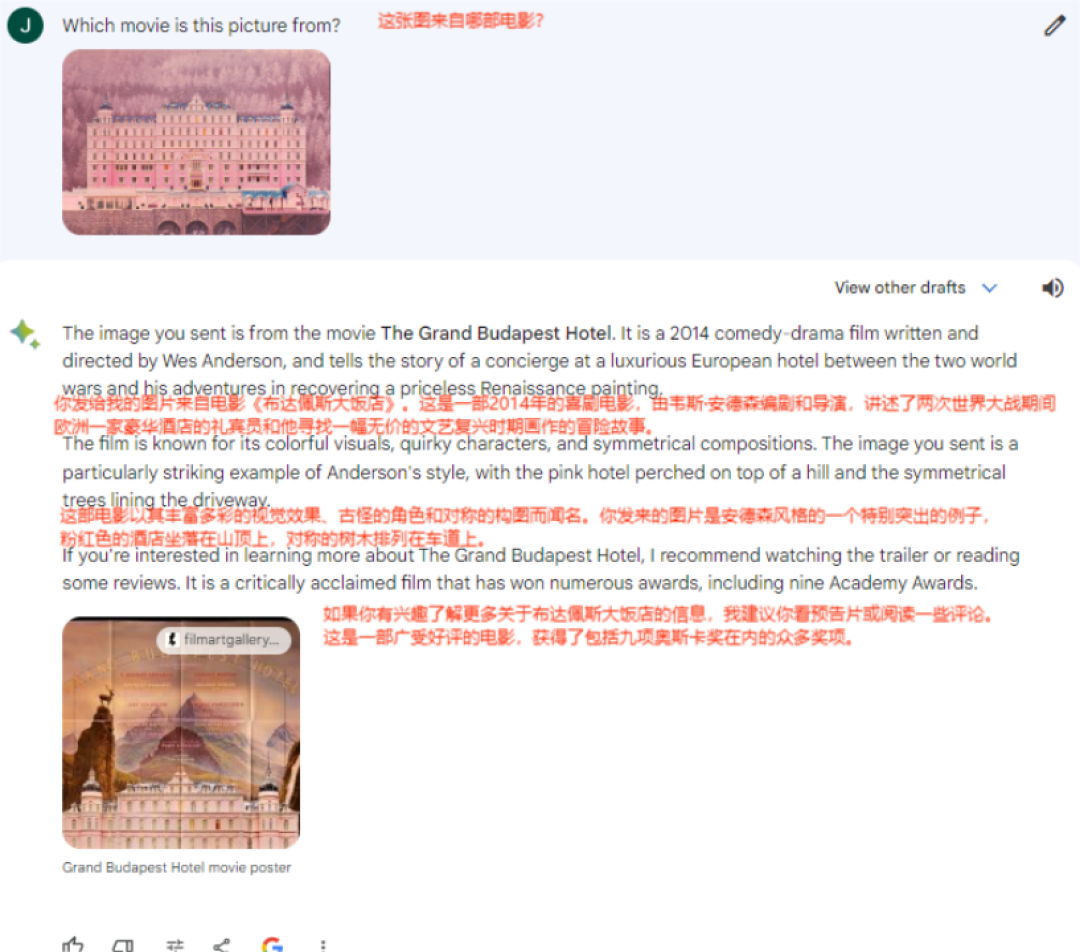

The editor then fed a still from "The Grand Budapest Hotel," and Gemini Pro was still on point, not only answering correctly, but also providing the movie's poster and related links.

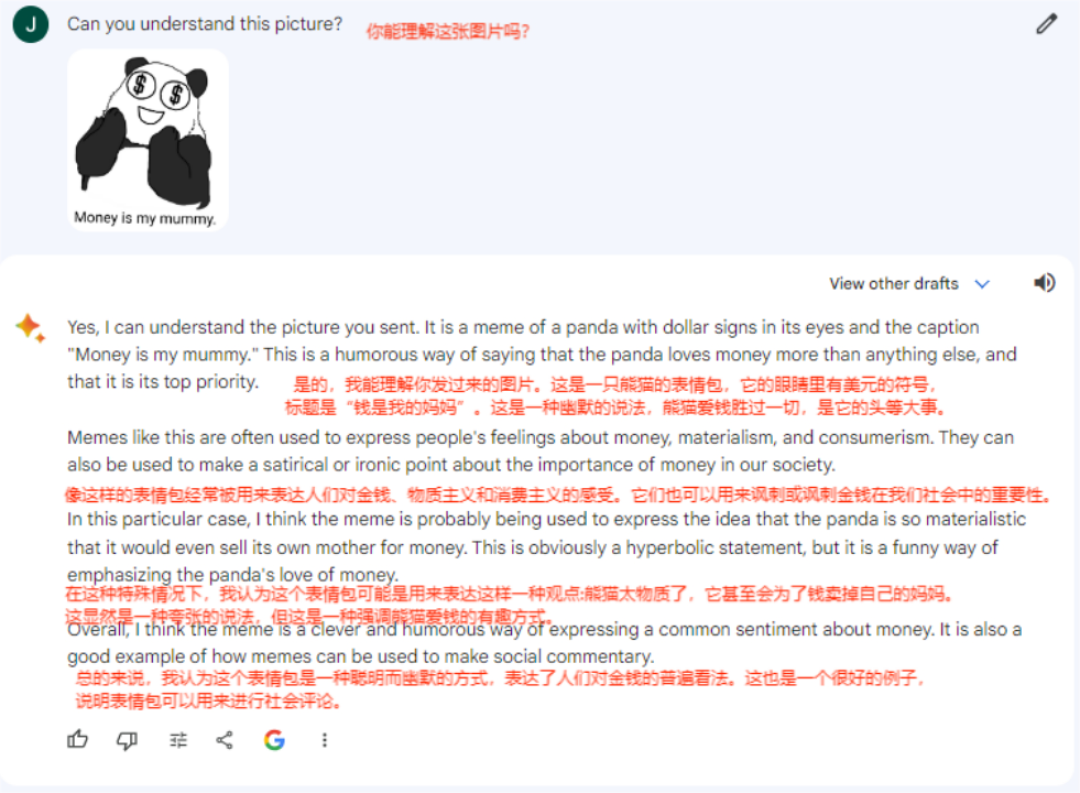

3. Understanding memes

Memes combine various elements and often rely on humor, exaggeration, and entertainment, which makes them harder to understand. The editor gave Gemini Pro a meme of a panda holding a lot of knives, and Gemini Pro not only described the scene accurately but also picked up on the hidden meaning behind it.

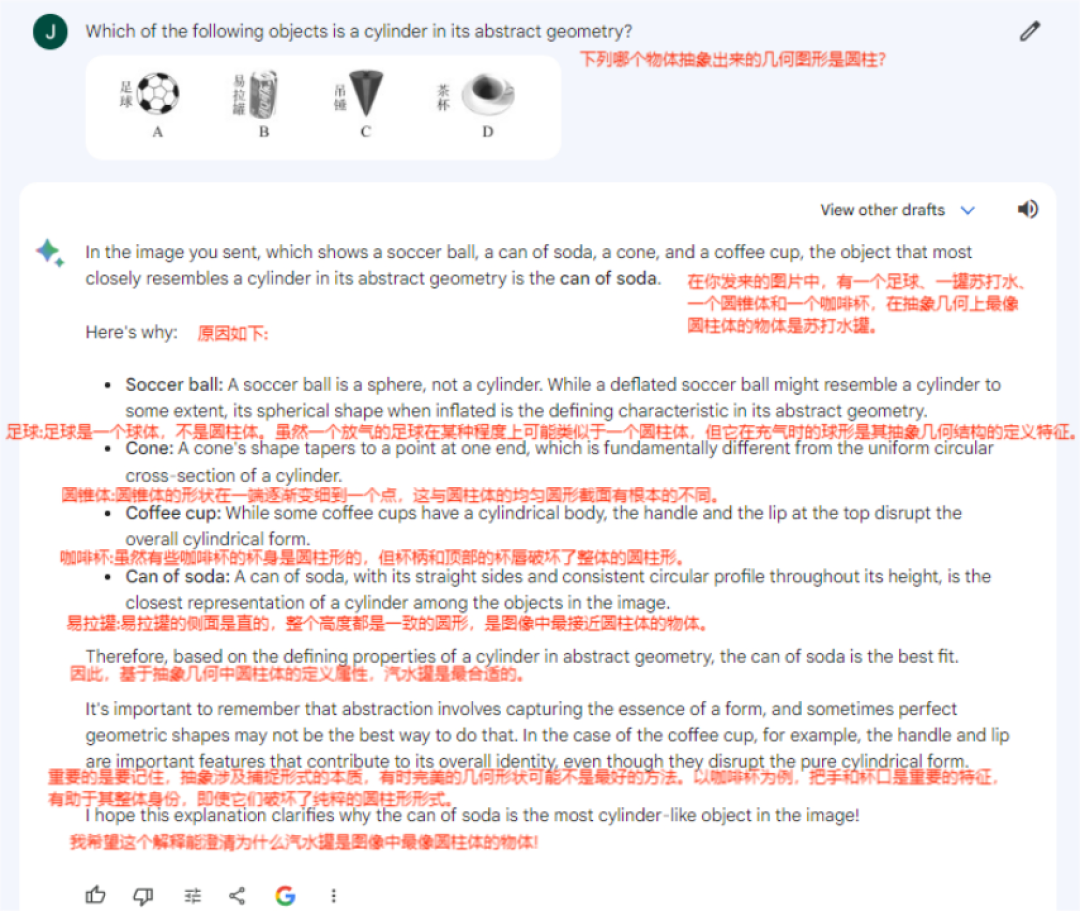

4. Photographing questions to solve

For multimodal models, the most practical application the editor could think of is photographing a question and asking for the answer. The editor uploaded a picture of four objects of different shapes and asked Gemini Pro to select the object that can be abstracted as a cylinder. Gemini Pro selected the correct answer and also explained its choice.

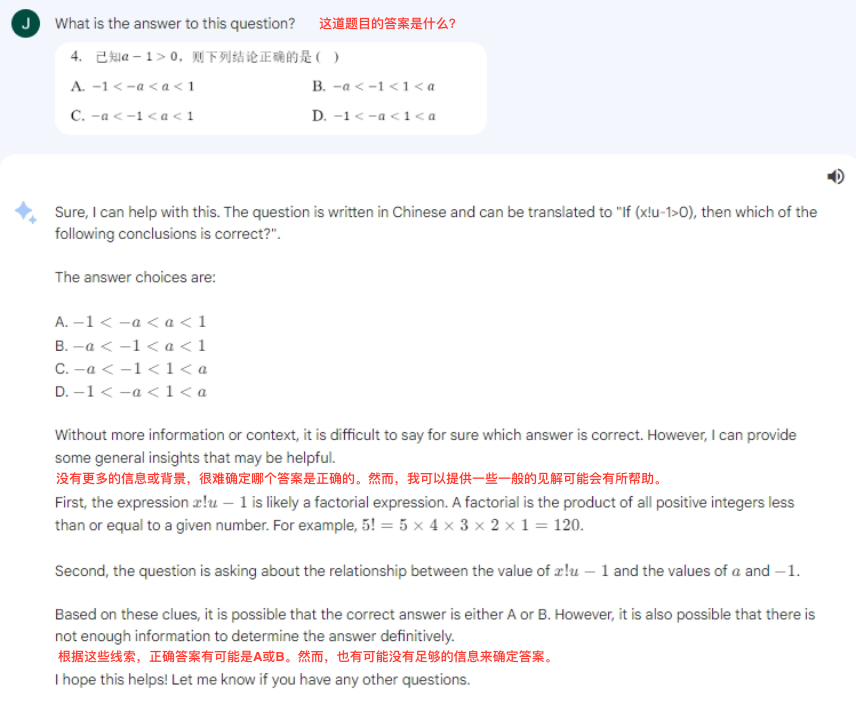

The editor then uploaded a probability question from the 2023 Beijing middle school entrance examination, and Gemini Pro stayed sharp, answering correctly.

It is worth noting that Gemini Pro sometimes gives confusing answers. For example, in the following question, the correct answer should be B, but Gemini Pro wavered between A and B without settling on either, and the editor could not even follow its reasoning.

02 "Head-to-Head Competition" with ChatGPT

Last time, the editor compared Gemini Pro with GPT-4 (for more details, see: Gemini surpasses GPT-4 in all aspects? We found out after testing that Google really dares to say…). Some netizens suggested that Gemini Pro should be benchmarked against GPT-3.5, so this time the editor let Gemini Pro and ChatGPT "compete head-to-head." (The test questions include previous evaluation questions as well as new ones.)

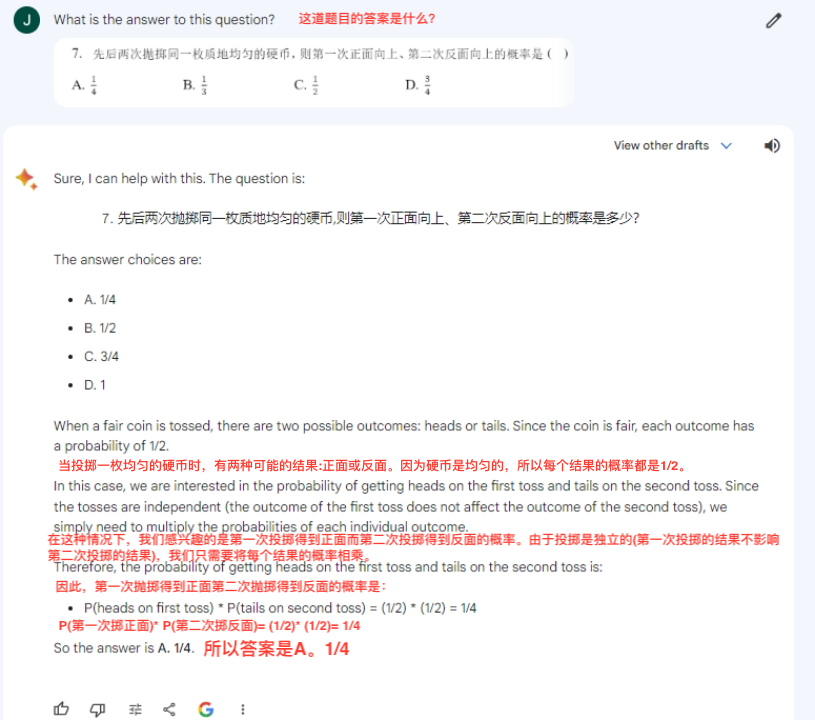

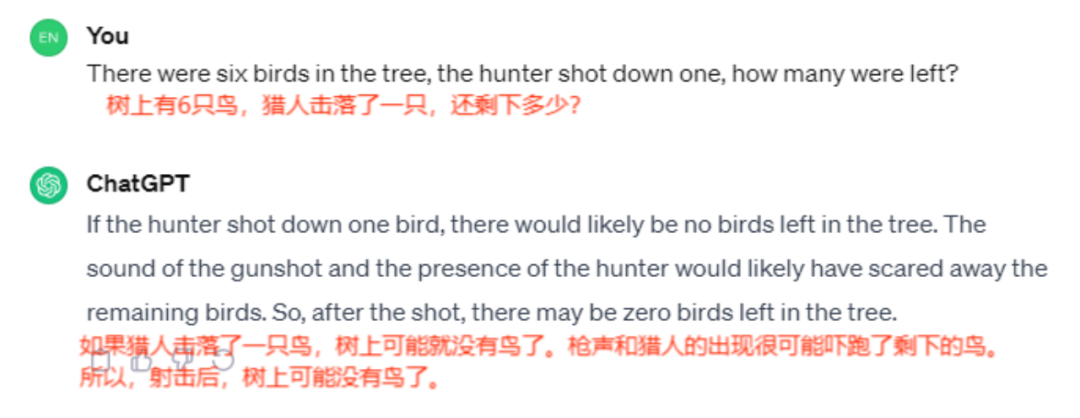

1. There were six birds in the tree, the hunter shot down one, how many were left?

Gemini Pro:

ChatGPT:

In this question, ChatGPT thought there might be no birds left in the tree, which is the correct answer; although Gemini Pro did the arithmetic correctly, it did not consider that the sound of the gunshot and the appearance of the hunter might scare away the remaining birds, so the final answer was incorrect.

ChatGPT won this question.

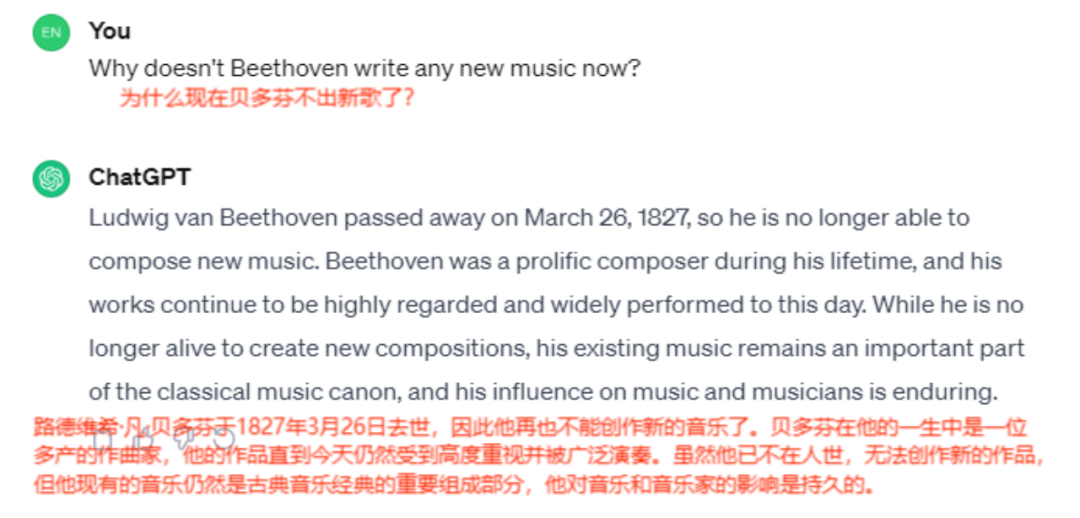

2. Why doesn't Beethoven write any new music now?

Gemini Pro:

ChatGPT:

Both of them answered this question correctly, but in terms of richness of the answer, Gemini Pro excelled. Gemini Pro also raised a question—why are there no modern composers as widely recognized and influential as Beethoven? Gemini Pro analyzed this from the aspects of changes in musical taste, lack of common cultural references, changes in sponsorship nature, and more emphasis on personal expression.

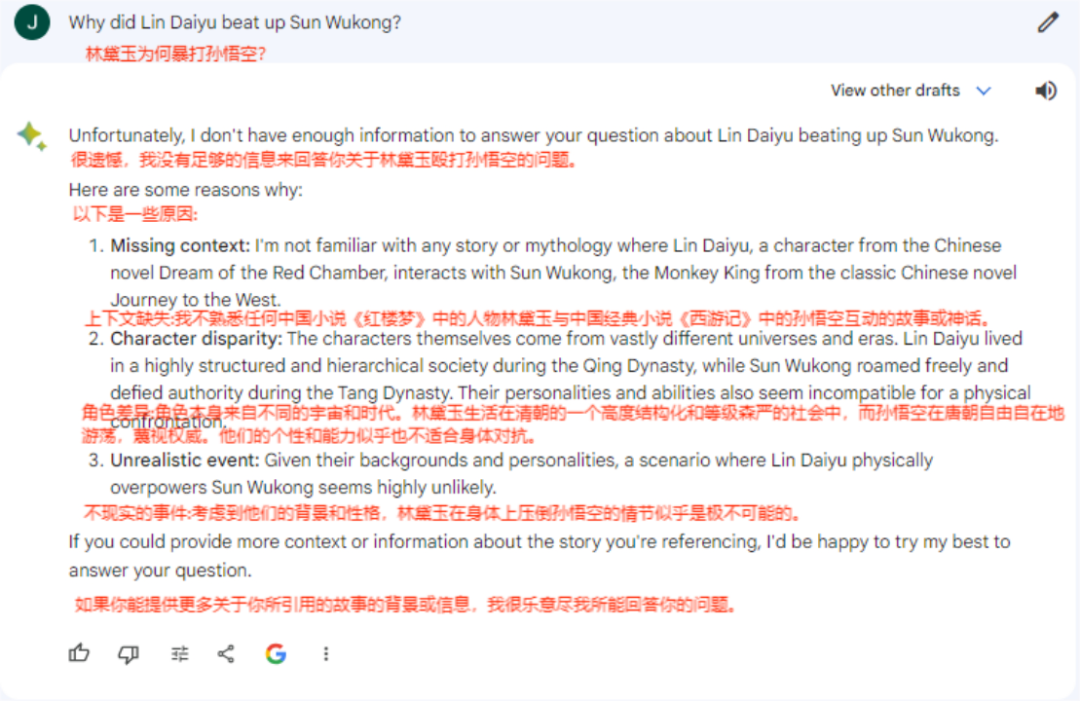

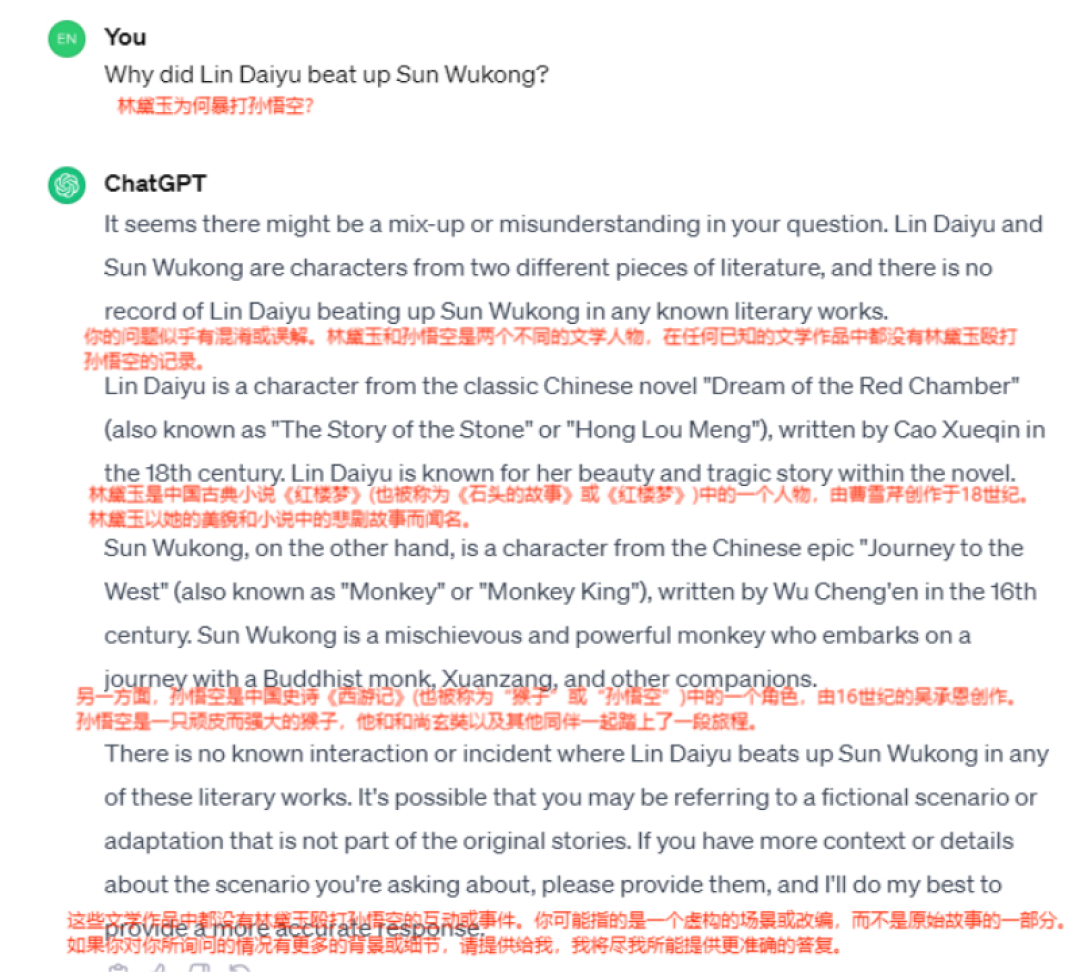

3. Why did Lin Daiyu beat up Sun Wukong?

Gemini Pro:

ChatGPT:

ChatGPT immediately concluded, "Your question seems to be confused or misunderstood. Lin Daiyu and Sun Wukong are two different literary characters, and there is no record of Lin Daiyu beating up Sun Wukong in any known literary work." It then gave some background on "Dream of the Red Chamber" and "Journey to the West."

Although Gemini Pro stated that it "does not have enough information to answer the question of Lin Daiyu beating up Sun Wukong," it still made a guess based on some facts. For example, Lin Daiyu and Sun Wukong lived in different eras, and it seems that their personalities and abilities are not suitable for physical confrontation.

ChatGPT's answer was more straightforward in this question.

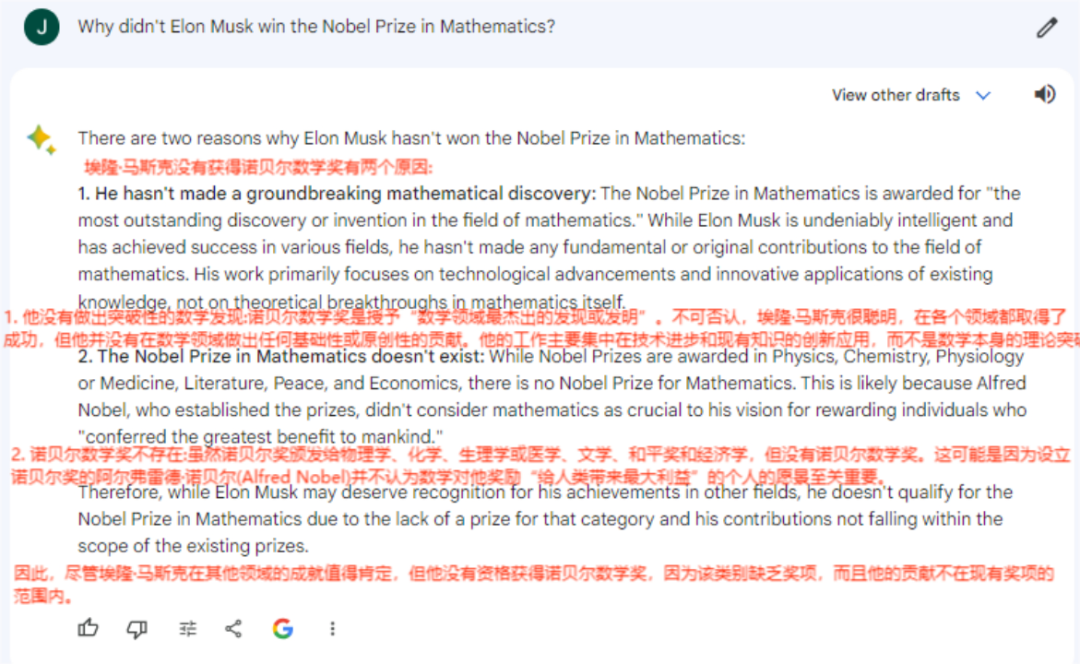

4. Why did Elon Musk win the Nobel Prize in Mathematics?

Gemini Pro:

ChatGPT:

ChatGPT analyzed the fallacy behind this question—there is no Nobel Prize for mathematics. It also mentioned, "Although Elon Musk has made contributions in technology, space exploration, and electric vehicles, these achievements are not directly related to mathematics or any Nobel Prize category."

Gemini Pro was a bit contradictory. It first stated that "the Nobel Prize in Mathematics is awarded for the 'most outstanding discovery or invention in the field of mathematics'"; but then it said "the Nobel Prize in Mathematics does not exist."

ChatGPT's analysis was more accurate in this question.

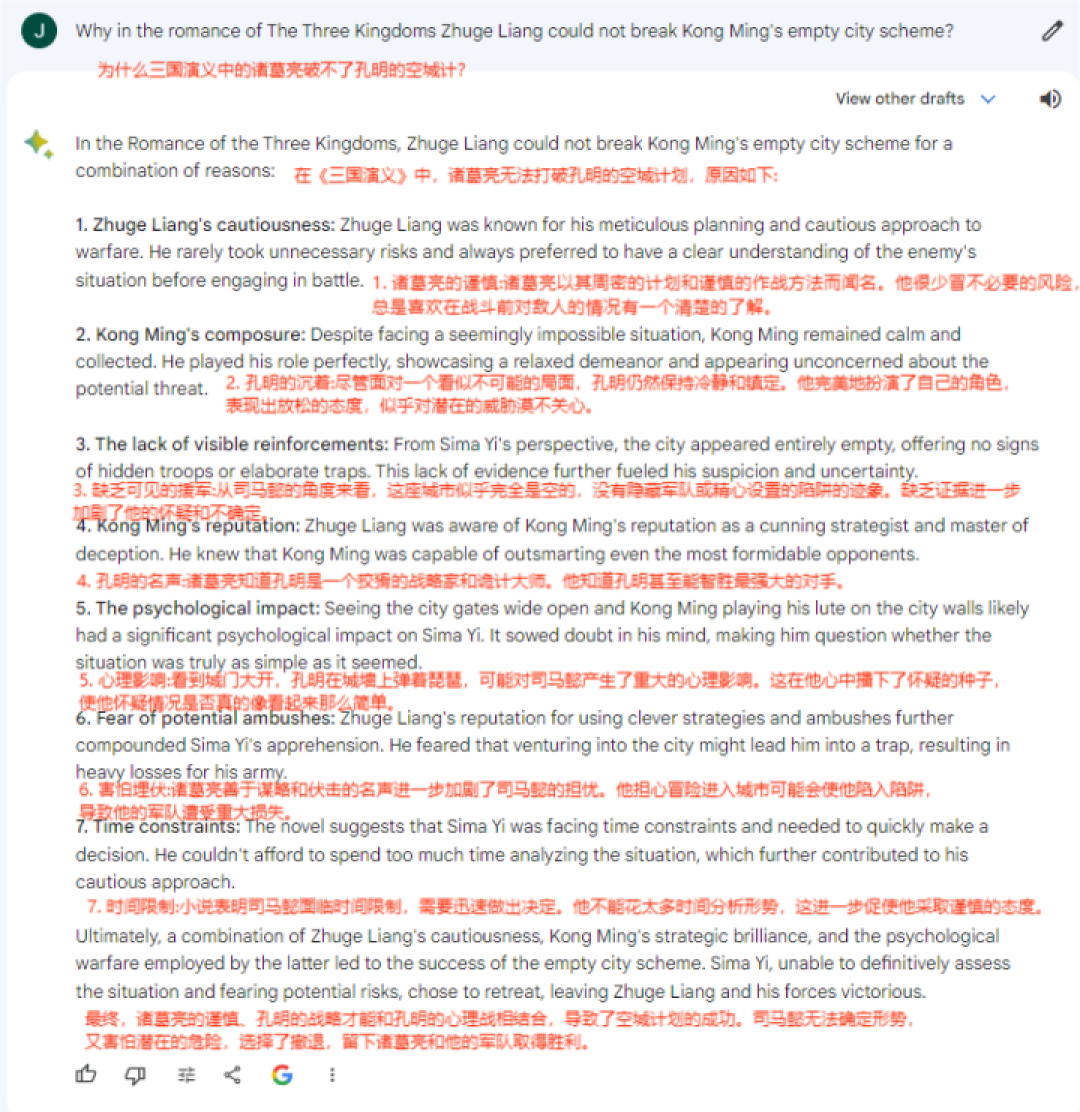

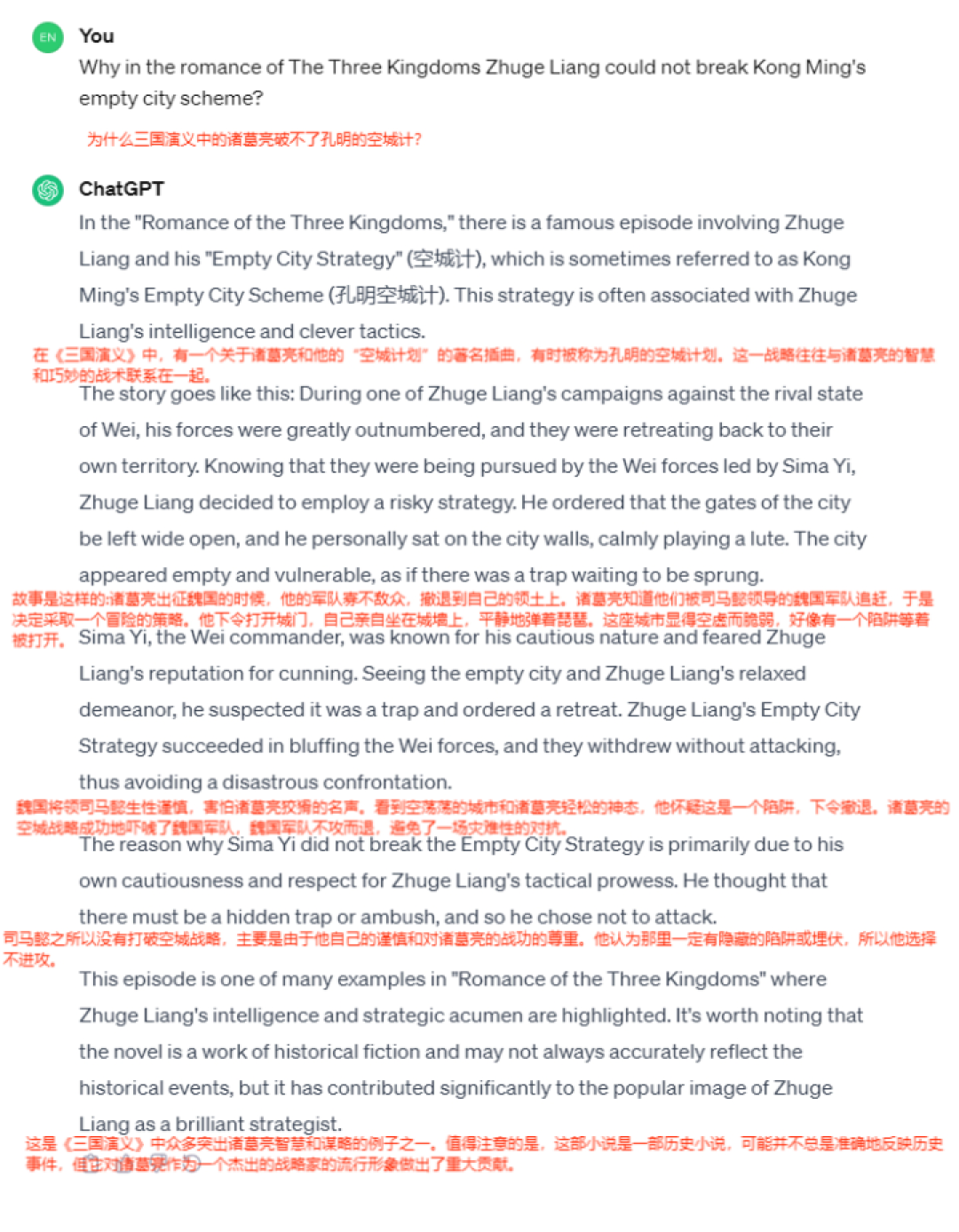

5. Why, in "The Romance of the Three Kingdoms," could Zhuge Liang not break Kong Ming's empty city scheme?

Gemini Pro:

ChatGPT:

Gemini Pro seems to be unaware that "Zhuge Liang and Kong Ming are the same person." It alternates between "Kong Ming playing the pipa on the city wall" (in the original work, he played the guqin, not the pipa) and "Zhuge Liang knowing that Kong Ming is a cunning strategist and master of schemes," jumping back and forth between the two.

ChatGPT's overall answer is flawless, introducing the causes and consequences of the empty city scheme, and also pointing out at the end that "The Romance of the Three Kingdoms" is a historical novel and may not always accurately reflect historical events.

ChatGPT wins this round.

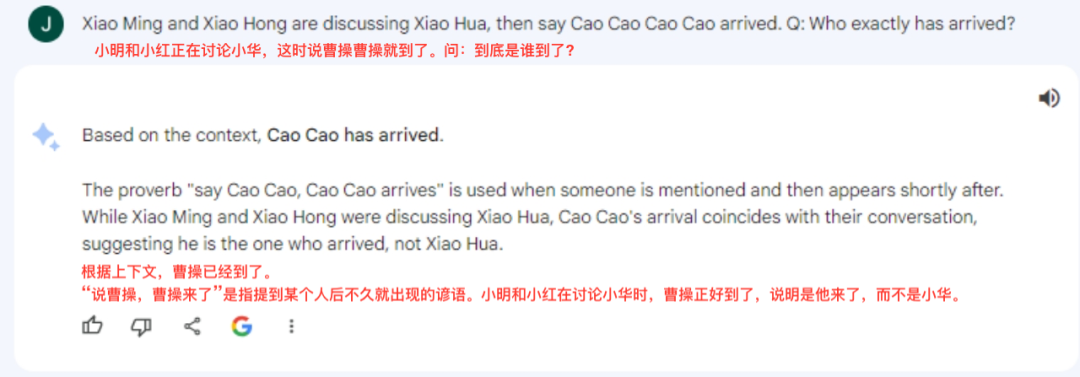

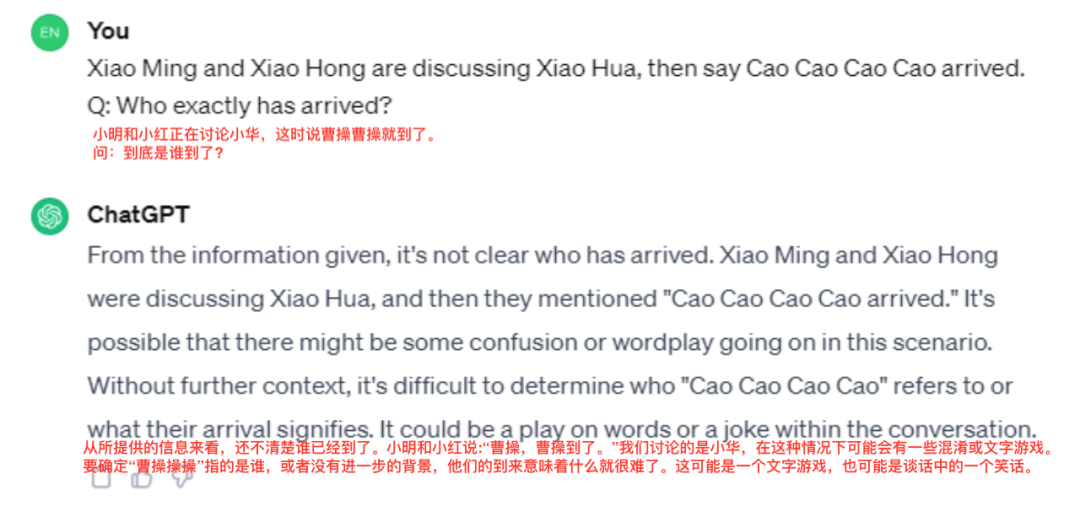

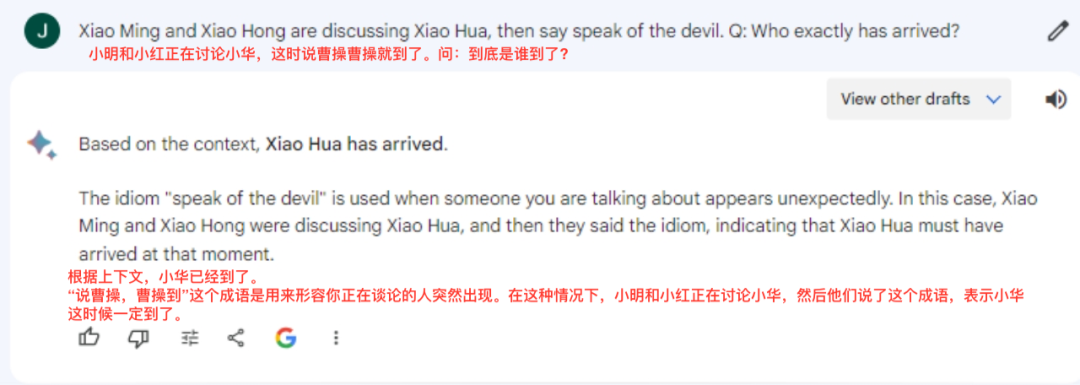

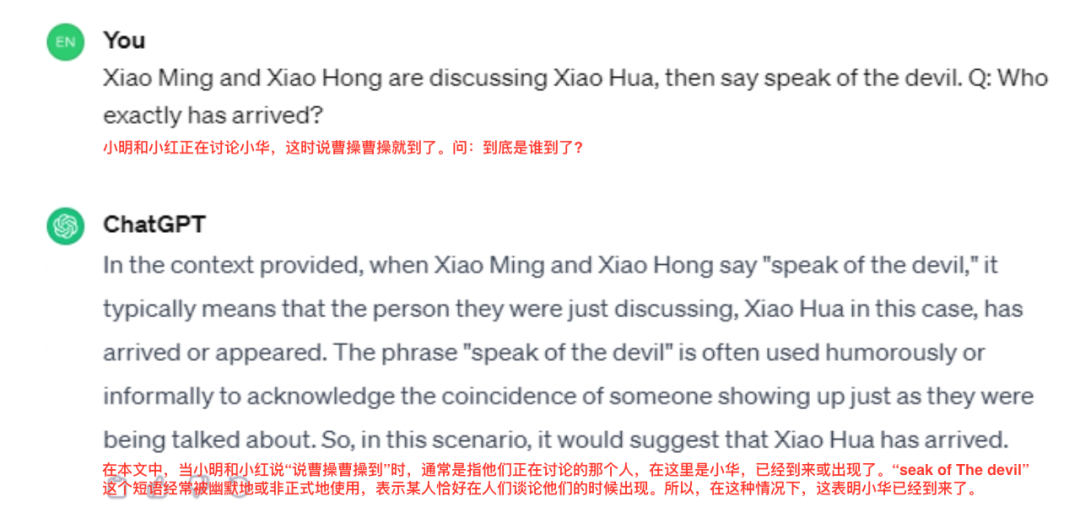

6. Xiao Ming and Xiao Hong are discussing Xiao Hua, then say Cao Cao Cao Cao arrived. Q: Who exactly has arrived?

Gemini Pro:

ChatGPT:

This question tests a Chinese saying. When the editor rendered "说曹操曹操到" literally, in Chinese-style English, as "say CaoCao CaoCao arrived," both models were confused.

Gemini Pro:

ChatGPT:

However, when the editor translated the idiom "说曹操曹操到" into "Speak of the devil," both models immediately understood and answered that "Xiao Hua" had arrived.

This round is a tie.

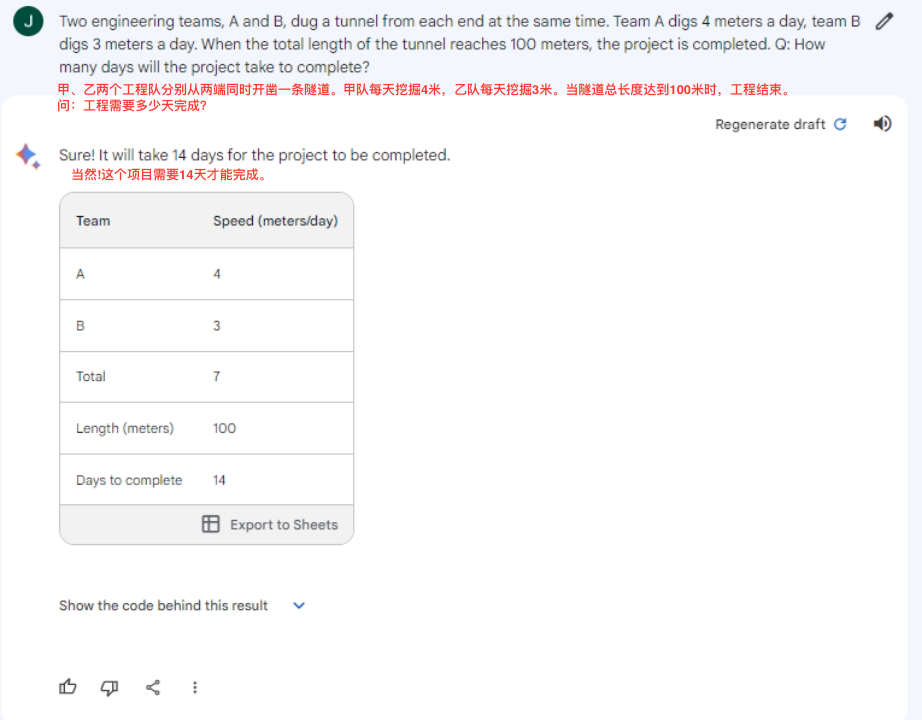

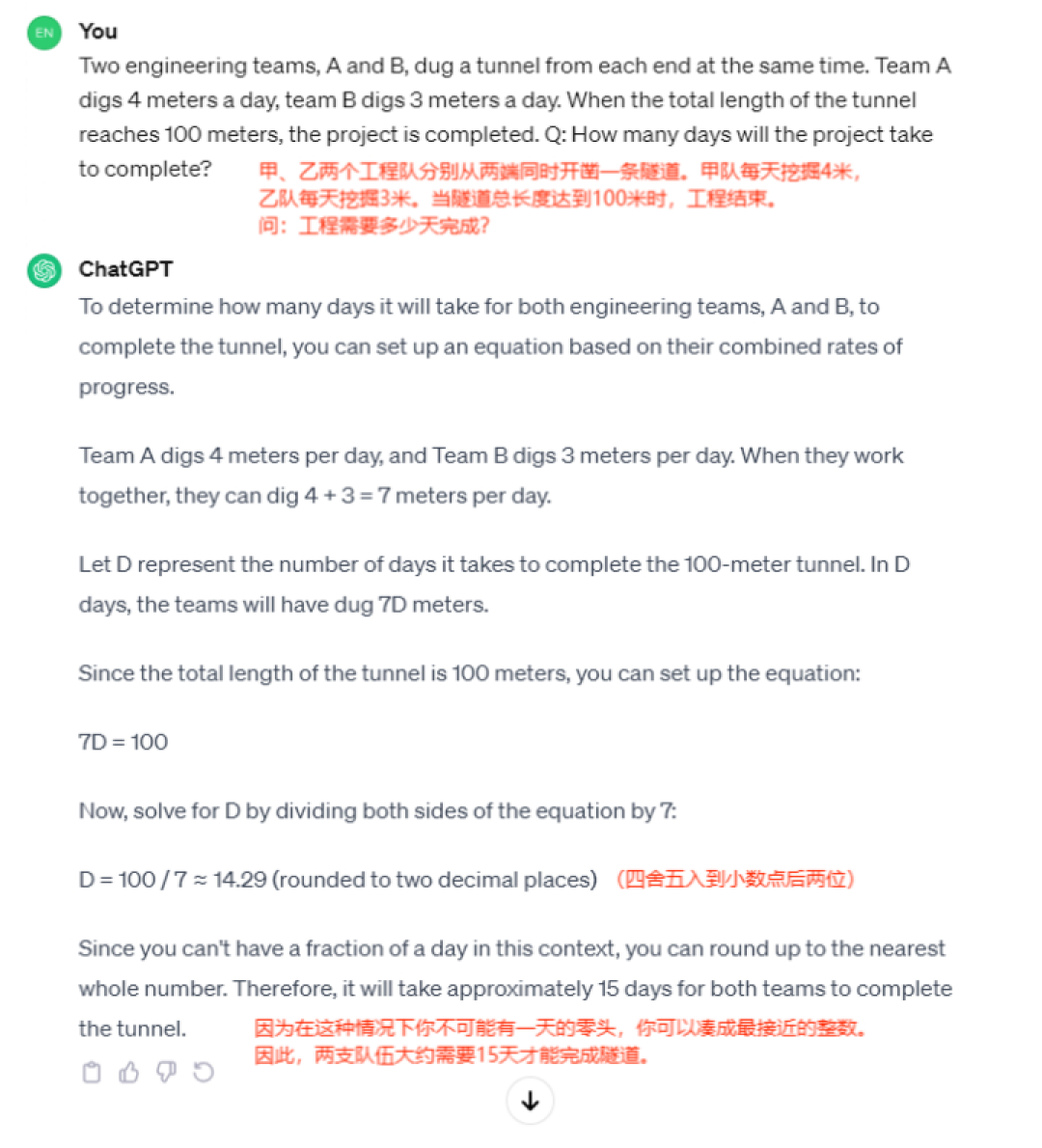

7. Two engineering teams, A and B, dug a tunnel from each end at the same time. Team A digs 4 meters a day, team B digs 3 meters a day. When the total length of the tunnel reaches 100 meters, the project is completed. Q: How many days will the project take to complete?

The correct answer is 15 days.

Gemini Pro:

ChatGPT:

Gemini Pro's answer is 14 days. Its working shows that it correctly calculated 100 ÷ 7 = 14 (days) with a remainder of 2 (meters), but it did not realize that the last two meters would still take one more day to dig.

ChatGPT, on the other hand, was much smarter, stating, "Because in this situation, you cannot have a fraction of a day, you can round to the nearest whole number. Therefore, the two teams will need approximately 15 days to complete the tunnel."

ChatGPT got this question right.
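The rounding step that separates 14 from 15 can be checked with a few lines of Python. This is only a minimal sketch: the function name `days_to_finish` is our own, and it assumes both teams dig at a constant whole-number rate and that any partly used day counts as a full day.

```python
def days_to_finish(total_m: int, rate_a: int, rate_b: int) -> int:
    """Whole days needed when two teams dig toward each other."""
    combined = rate_a + rate_b  # meters dug per day by both teams together
    # Ceiling division: a partial final day still counts as a full day.
    return -(-total_m // combined)


# 14 full days cover 98 meters, leaving 2 meters for a 15th day.
full_days, leftover_m = divmod(100, 4 + 3)
print(full_days, leftover_m)       # 14 2
print(days_to_finish(100, 4, 3))   # 15
```

Note that the answer comes from rounding up, not from rounding to the nearest whole number: 100 ÷ 7 is about 14.29, which would round to 14.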

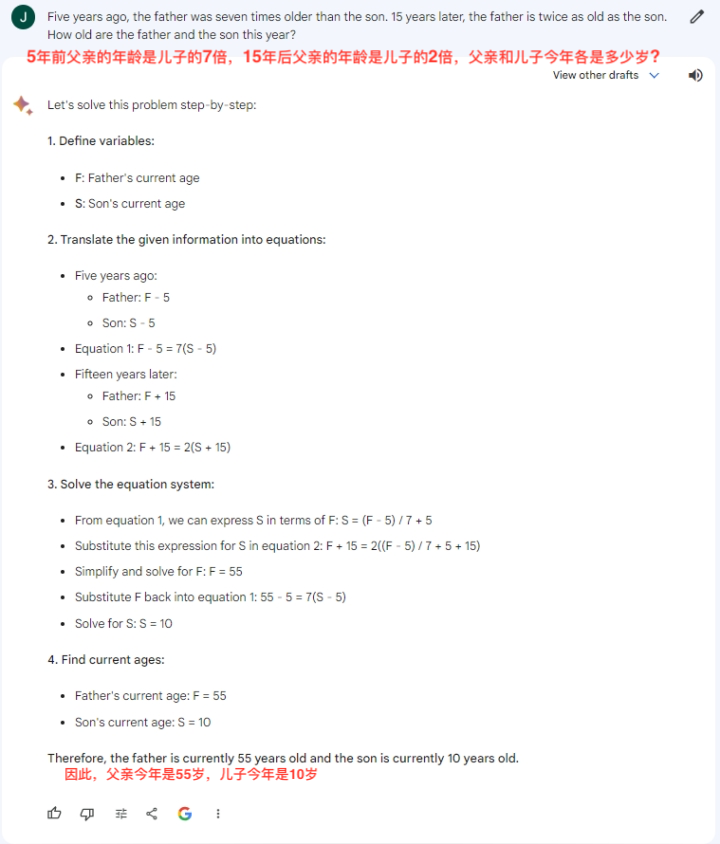

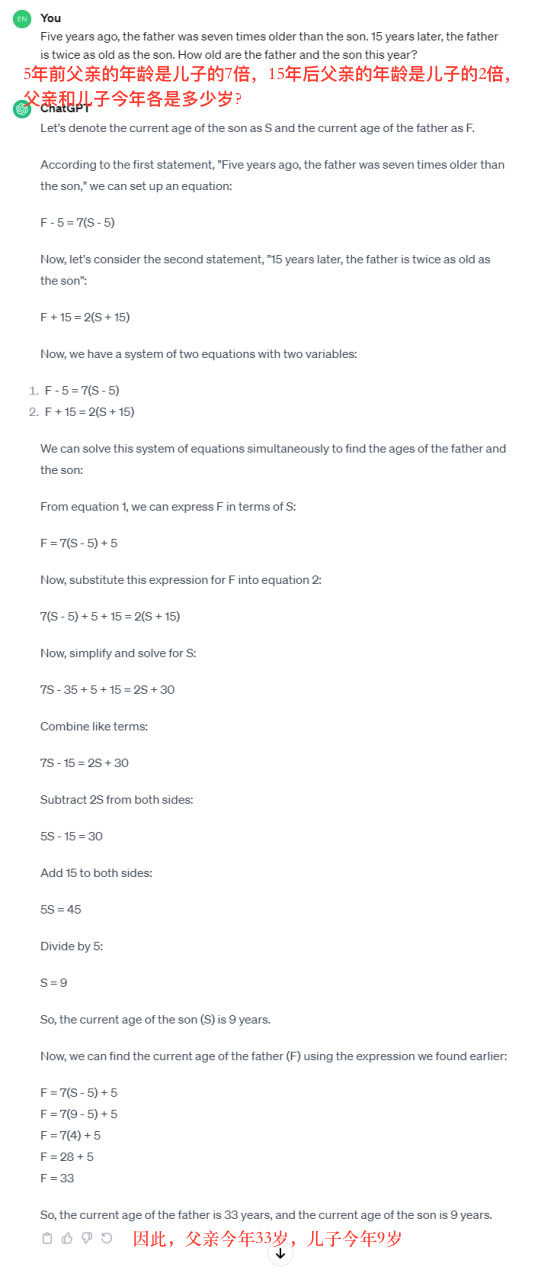

8. Five years ago, the father was seven times as old as the son. Fifteen years from now, the father will be twice as old as the son. How old are the father and the son this year?

The correct answer is the son is 9 years old, and the father is 33 years old.

Gemini Pro:

ChatGPT:

ChatGPT got this question right. Interestingly, Gemini Pro's problem-solving approach was very accurate, but it made a mistake in the final calculation, which reminded the editor of their school days.

ChatGPT wins this round!
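For readers who want to verify the stated answer, the two conditions can be checked by a simple search. This is a sketch under our own assumptions: the function name `solve_ages` is hypothetical, ages are whole numbers, and "15 years later" is read as 15 years from this year.

```python
def solve_ages():
    """Find (son, father) ages this year that satisfy both conditions."""
    for son in range(6, 120):  # the son must already have been born five years ago
        # Condition 1: five years ago the father was seven times the son's age.
        father = 7 * (son - 5) + 5
        # Condition 2: fifteen years from now the father is twice the son's age.
        if father + 15 == 2 * (son + 15):
            return son, father
    return None


print(solve_ages())  # (9, 33)
```

The same result follows by hand: the first condition gives father = 7 × son − 30, the second gives father = 2 × son + 15, so 5 × son = 45, the son is 9 and the father is 33.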

In conclusion, compared with ChatGPT, Gemini Pro's biggest highlight is its multimodal capability, and photographing problems for it to solve looks like a good application scenario. On math problems, Gemini Pro often gets confused: even when its approach is correct, it tends to slip in the final calculation. In text responses, Gemini Pro gives more detailed answers, but it also tends to be verbose and occasionally contradicts itself.

However, as a free large model, what more can you ask for from Gemini Pro?
