Source: GenAI New World

Microsoft's long-rumored first self-developed AI chip was finally unveiled to the public today.

On the morning of November 15, Pacific Time, the 2023 Microsoft Ignite conference opened at the Seattle Convention Center. Ignite is Microsoft's annual technology conference for IT professionals, business decision-makers, and developers. It mainly covers the latest developments across the company's product range, including Azure cloud services, enterprise solutions, Windows, Microsoft 365, and Copilot, and also offers technical training, product demonstrations, and trend discussions.

According to the official announcement, this year's focus is on how artificial intelligence empowers everyday applications. But what people clearly cared about most was the mysterious AI chip project that Microsoft started several years ago, and what major product moves Microsoft, as OpenAI's backer, would make after the OpenAI developer conference earlier this month reshuffled the generative AI industry.

Microsoft CEO Satya Nadella answered these questions one by one in his keynote speech at the conference:

Two self-developed AI chips make a grand debut

Nadella opened by saying that more than 100 updates would be announced at the conference, covering AI infrastructure, models, data chains, and other areas, to help people make the most of their productivity and creativity in the "Copilot era."

Microsoft currently has over 300 data centers in more than 60 regions worldwide, and its breakthrough hollow-core fiber technology will increase network speed by 47%. The new Azure Boost system offloads networking, storage, and other virtualization processes onto dedicated hardware, improving overall performance and efficiency.

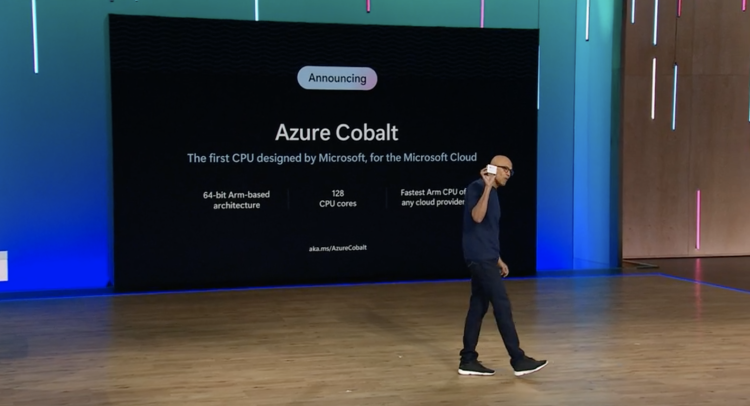

"After all the accumulation, we hope to go further," Nadella raised an Azure Cobalt 100 chip and said, "I am very pleased to introduce our first-ever customized CPU series Azure Cobalt, designed for Microsoft's universal cloud services. This 64-bit 128-core chip based on ARM architecture is the fastest among all cloud service providers and has already provided support for Microsoft Teams, Azure communication services, and part of SQL."

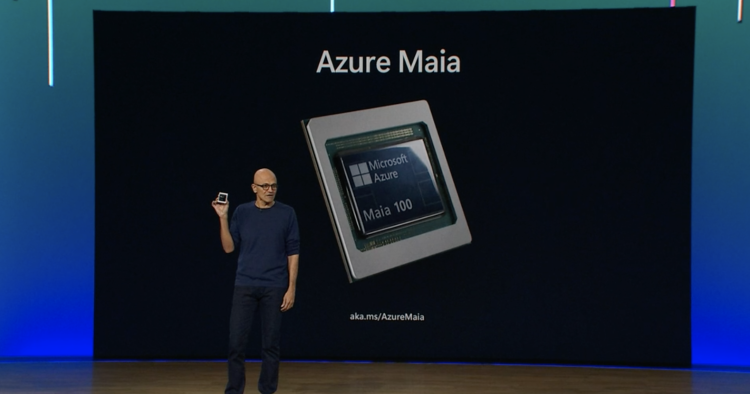

Microsoft's second chip is named Azure Maia, after a bright blue star. Maia is positioned as an AI accelerator. It is manufactured on TSMC's 5-nanometer process, packs 105 billion transistors, and is designed for cloud-based training and inference tasks.

Going forward, Azure Maia will mainly support some of the largest AI workloads on the Azure platform, including OpenAI models, Bing, GitHub Copilot, and ChatGPT. It is worth noting that Microsoft provides the computing power for all OpenAI workloads. Microsoft, a giant of the software industry, has also been working closely with OpenAI to jointly design and test the Maia chip so that it supports these advanced AI applications efficiently.

Both chips are scheduled to launch in early 2024. Microsoft in fact has a long history in silicon development: more than 20 years ago it took part in chip development for the Xbox, and it has also co-designed chips for its Surface devices. The launch of custom AI chips now is a response to the surging market demand for the NVIDIA H100.

In this year's AI gold rush, NVIDIA, the "shovel seller," has made a fortune, and the H100 has become hard currency in the AI industry, with its price on eBay soaring to over $40,000. By introducing its own chips, Microsoft aims not only to reduce its reliance on NVIDIA but also to train its models more efficiently and at lower cost. It also plans to offer the chips to Azure cloud customers and partners to expand its market share.

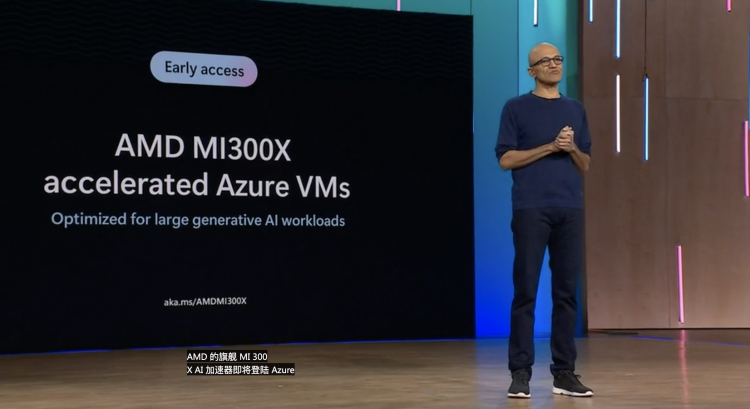

At the same time, Microsoft is also expanding its cooperation with other chip suppliers.

For example, Azure is adding virtual machines accelerated by the latest AMD Instinct MI300X chip, which offers industry-leading memory speed and capacity. They are intended to speed up AI workloads for high-end AI model training and inference.

Microsoft also announced plans to add the latest NVIDIA H200 chip in the ND H200 v5 virtual machine series to expand the Microsoft Copilot experience. In addition, it is working with NVIDIA on the NCCv5 virtual machines, now in preview, to provide higher performance, reliability, and efficiency when running AI models on sensitive cloud data.

Nadella said that Microsoft will further deepen its partnership with NVIDIA, and he invited NVIDIA CEO Jensen Huang (Huang Renxun), nicknamed the "Leather Jacket Master," on stage to announce the AI Foundry service that NVIDIA is about to launch on the Azure platform. The service combines NVIDIA AI Foundation Models, the NVIDIA NeMo framework and tools, and NVIDIA DGX Cloud AI supercomputing to give enterprises a one-stop solution for creating custom generative AI models.

After praising what the two teams had achieved over the past year, Huang said, "I am proud of our two teams. Generative artificial intelligence is the most important platform transformation in computing history. Nothing in the past 40 years compares to it. It is bigger than personal computers, bigger than mobile communications, and will even surpass the internet."

Updates related to Copilot

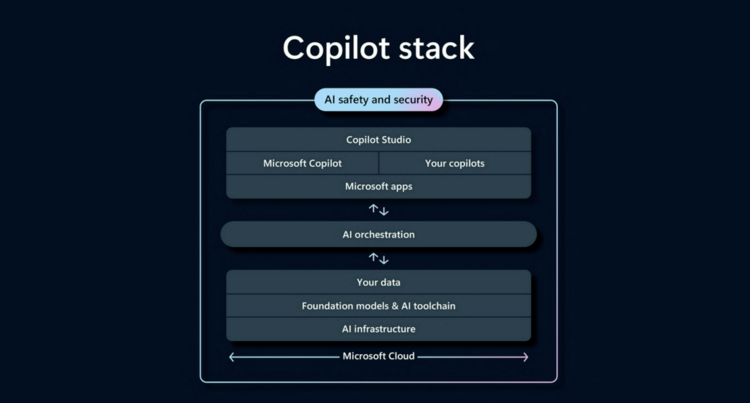

This time, Microsoft repeatedly described itself as the "Copilot company." Frank X. Shaw, the company's chief communications officer, said, "We are expanding the Microsoft Copilot product line into solutions that help transform productivity and business processes for every role and function. We believe that in the future there will be a Copilot for everything everyone does."

The most noticeable (even somewhat familiar) update in this regard is Copilot Studio.

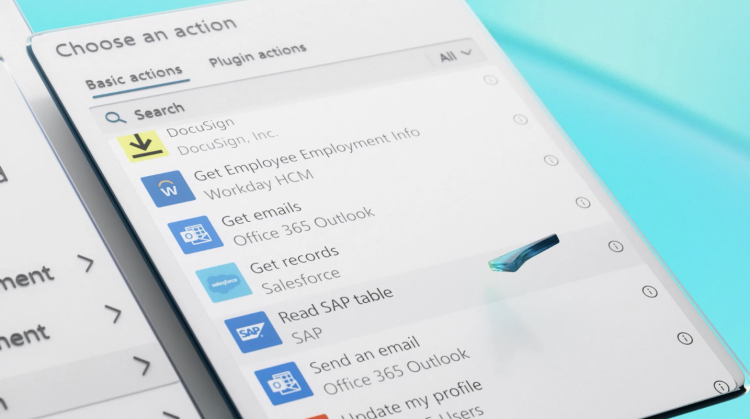

Just last week, OpenAI announced GPTs and the GPT Store, which allow anyone to create a custom version of ChatGPT using natural language. Following suit, Microsoft launched the similar Copilot Studio, which lets enterprise users customize their own Copilot assistant without writing code. This includes integrating custom GPTs and generative AI plugins, and connecting to the latest data from internal systems and from partners such as Workday and ServiceNow. The resulting assistants can be built and published in Microsoft Copilot for on-demand use, covering employee needs ranging from vacation management to financial analysis.

In addition, Copilot is becoming more personalized.

For example, Microsoft has made chatbot output more flexible, allowing users to adjust its format and tone through commands to suit their personal preferences.

In PowerPoint, employees can instruct the chatbot to pull images from the company's image library and creatively modify them using Microsoft Designer, which integrates DALL-E 3.

To improve team collaboration, starting next spring Copilot will help users prepare for meetings efficiently by pulling together information from Outlook emails, calendar invitations, and related documents.

During meetings, Copilot in Microsoft Teams has added whiteboard and note-taking functions to ensure that all participants can keep information synchronized in real time. In addition, participants can choose not to save interaction records with Copilot to ensure privacy.

Opening the whiteboard function during a meeting

In addition, Microsoft's 3D virtual avatars have also arrived.

Starting next January, selecting the Immersive Space option in the "View" menu of a Teams meeting will turn the 2D meeting into a 3D immersive experience. This new feature is called Microsoft Mesh. Users can create virtual avatars, choose meeting scenes, assign seats, hold multiple group conversations at once, and even roast marshmallows or play icebreaker games in designated areas. The development team believes that Mesh will bring a completely new way of working to the 320 million monthly active users of Teams, building trust and unlocking their full potential.

Strengthening the connection between data and artificial intelligence

When discussing Microsoft's latest developments in the field of artificial intelligence, Nadella also mentioned, "Everyone wants to build their applications with better models. With the continuous updates from OpenAI, we will bring all the latest features to Microsoft users."

To take a bigger step in supporting open source, Microsoft has launched "Model as a Service," which allows professional developers to easily integrate the latest AI models, such as Meta's Llama 2 and the upcoming Mistral and Jais models, and to customize and fine-tune them with their own data.

Microsoft also aims to unlock more value for developers through Azure AI. As part of this effort, Microsoft Fabric brings all team members together on a single AI-driven enterprise platform that integrates all data assets and opens up new paths for data-driven decision-making and innovation.

The Ignite conference runs for four days on a packed schedule, with more to come on specific products, cloud deployment, security and compliance, and other topics.

Judging from Satya Nadella's keynote alone, competition among the tech giants is fierce. Each company is striving to find its own foothold and expand its network of partners, leaving no stone unturned in the race for the high ground of the AI ecosystem.

Whether this conference holds further surprises, and whether Microsoft's self-developed AI chips can shake NVIDIA's position in the future, remains to be seen. We will keep watching!
