Original Source: Silicon Research Lab

Image Source: Generated by Wujie AI

On October 17, the Bureau of Industry and Security (BIS) of the US Department of Commerce issued new export restrictions on chips, further tightening China's access to key high-end chips.

Restricting China's imports of high-end chips is clearly intended to hold back the development of its technology industry. Previous research has shown that each 1-point increase in the computing power index raises the digital economy by 3.5‰ and GDP by 1.8‰.

However, tightening external restrictions have not stalled China's computing power industry, which has already passed the trillion-yuan mark. According to the China Academy of Information and Communications Technology (CAICT), by the end of 2021 the core computing power industry in China exceeded 1.5 trillion yuan, and related industries exceeded 8 trillion yuan.

Behind this trillion-yuan market are the joint efforts of enterprises and government to seize the AI era.

On the one hand, since the launch of ChatGPT, domestic companies and research institutes have released more than 130 large models in just over half a year, and leading players have begun applying large models to specific scenarios to build popular applications.

On the other hand, to lay a foundation of computing power, local governments have launched intelligent computing centers one after another. These centers pave the information expressway of the big data era, drive industrial innovation and upgrading, and lower the cost for enterprises to access technologies such as large models.

As external chip trade cools, the domestic computing power market is booming. These two opposing trends naturally raise several questions:

How far has China's computing power industry advanced in its fight for a breakthrough? Where can the industry chain break through? Which companies have taken the lead in this process?

01 NVIDIA Cuts Off Supply: What Is the Impact?

"If a large language model is used as the foundation and processes the reasoning requests of 1.4 billion people in China, the required computing power exceeds the total computing power of China's data centers by three orders of magnitude."

At the 2023 World Artificial Intelligence Conference (WAIC) in Shanghai this July, Professor Wang Yu of the Department of Electronic Engineering at Tsinghua University described the scale of China's computing power gap.

Nor is it only large models: the spread of applications in 5G, smart cities, the Internet of Things, and other areas keeps accelerating the pace at which data is generated.

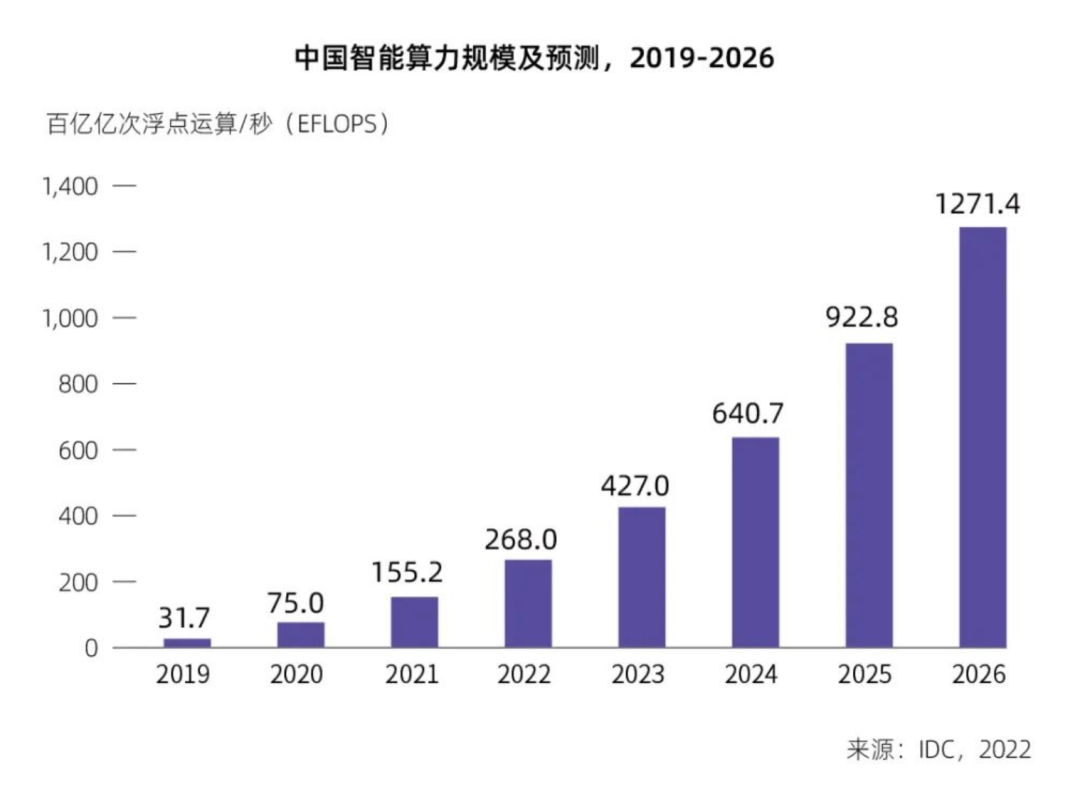

IDC predicts that China's intelligent computing power will reach 1,271 EFLOPS by 2026, a compound annual growth rate of 69.45%. By contrast, the "2023 White Paper on the Development of Intelligent Computing Power", jointly compiled by New H3C Group and CAICT, puts China's total computing power at only 180 EFLOPS as of the end of 2022. (Note: FLOPS means floating-point operations per second. 1 EFLOPS is 10^18 FLOPS, so 1,271 EFLOPS is about 1.27 × 10^21 operations per second.)
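
To make the units and the growth rate concrete, here is a minimal Python sketch. It assumes only the standard SI prefix (1 EFLOPS = 10^18 FLOPS) and the textbook compound-growth formula; the function names are illustrative, and the code makes no assumption about which base year IDC used for its 69.45% figure.

```python
EXA = 10**18  # SI prefix: 1 EFLOPS = 10^18 floating-point operations per second


def eflops_to_flops(eflops: float) -> float:
    """Convert a figure in EFLOPS to plain FLOPS."""
    return eflops * EXA


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


def growth_multiple(rate: float, years: int) -> float:
    """How many times larger a quantity becomes after compounding at `rate` for `years` years."""
    return (1 + rate) ** years


if __name__ == "__main__":
    print(f"1,271 EFLOPS = {eflops_to_flops(1271):.3e} FLOPS")
    # Sustained for four years, a 69.45% annual growth rate multiplies the start value about 8.2x.
    print(f"4-year multiple at 69.45%: {growth_multiple(0.6945, 4):.2f}x")
```

Compounding is what makes the forecast so steep: at this rate, capacity grows roughly eightfold in four years.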

To address the current shortage, the state has issued a series of documents supporting and guiding local governments to accelerate the construction of computing power infrastructure.

Among them, the "Action Plan for the High-Quality Development of Computing Power Infrastructure", released in October, explicitly states that China's computing power will exceed 300 EFLOPS by 2025, with intelligent computing power (the kind that can be used for large model training) accounting for 35% of the total.

Currently, there are about 31 government-funded intelligent computing centers, with a combined planned capacity of 10.13 EFLOPS and total investment of nearly 47 billion yuan. That is still far short of the planned targets: 105 EFLOPS of intelligent computing power in total, 50 intelligent computing centers, and 2.1 EFLOPS per center.
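
The size of that gap can be worked out directly from the figures in the paragraph above. The short Python sketch below does the arithmetic; the function name is illustrative, and it treats all figures as planned capacity, as the paragraph does.

```python
def center_gap(current_eflops, current_centers, target_eflops, target_centers):
    """Compare existing capacity with a target, both in total and per center."""
    current_avg = current_eflops / current_centers
    target_avg = target_eflops / target_centers
    return {
        "shortfall_eflops": target_eflops - current_eflops,
        "current_avg_eflops": current_avg,
        "target_avg_eflops": target_avg,
        "avg_ratio": target_avg / current_avg,  # how much larger the target center is
    }


if __name__ == "__main__":
    # 31 centers / 10.13 EFLOPS today vs. 50 centers / 105 EFLOPS planned.
    for key, value in center_gap(10.13, 31, 105, 50).items():
        print(f"{key}: {value:.2f}")
```

On these figures, the average existing center is about 0.33 EFLOPS, roughly one-sixth of the 2.1 EFLOPS per-center target, and the total shortfall is about 95 EFLOPS.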

In fact, the shortage of computing power is not unique to China; it is worldwide. According to OpenAI's estimates, there is a thousandfold gap between the growth rate of the computing power models require and that of AI hardware.

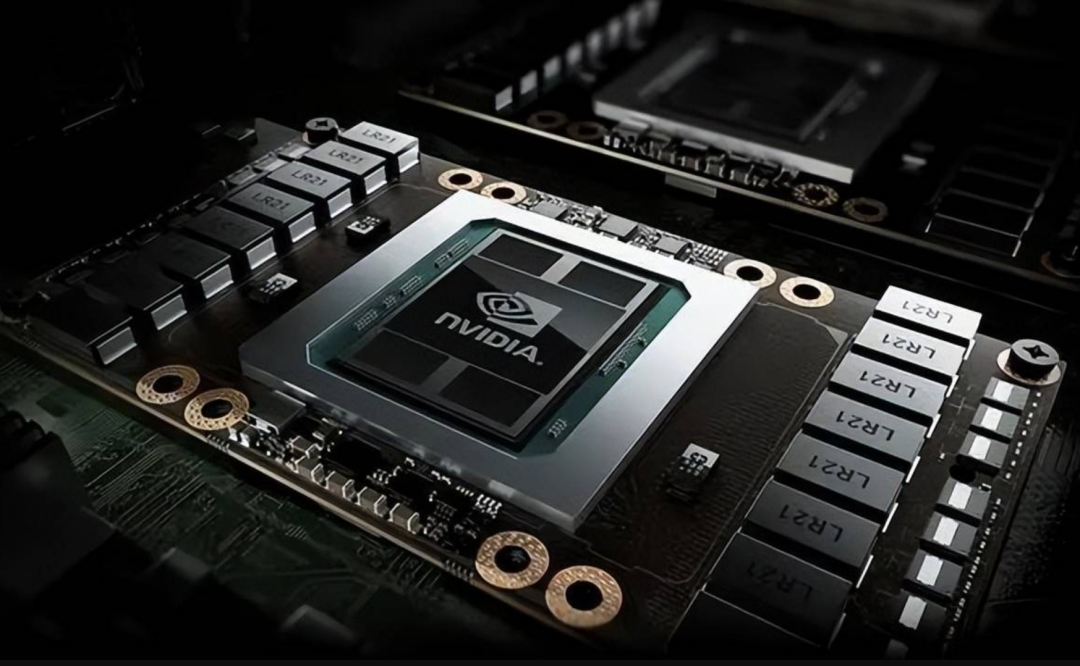

The shortage of computing power has driven GPU prices sharply higher. Since December last year, the price of NVIDIA's A100 has risen by nearly 40% in five months, and the newer H100 is almost impossible to obtain.

Because of the flood of orders, delivery times at NVIDIA, the market-share leader, have stretched from one month to more than three months, and some orders may not be filled until 2024. The main reason is that the chip supply chain is long and dispersed, which makes it hard to expand production capacity quickly.

Because of the US ban, Chinese companies find it even harder than Google, Meta, and OpenAI to expand their computing power.

Before the new round of restrictions was announced, NVIDIA complied with the earlier rules by supplying the Chinese market with the A800 and H800, cut-down versions of its flagship computing chips with reduced interconnect speed.

In August of this year, it was reported that companies such as Baidu, Tencent, Alibaba, and ByteDance had ordered $5 billion worth of chips from NVIDIA. Among them, $1 billion worth of A800 has been ordered and is expected to be delivered this year. The remaining $4 billion worth of orders will be delivered in 2024.

Under the new rules, however, the A800 and H800 are banned outright because they exceed the new performance-density threshold.

In an updated 8-K filing with the US Securities and Exchange Commission (SEC), NVIDIA disclosed that the US government had moved the effective date of the restrictions on five of its GPUs (the A100, A800, H100, H800, and L40S) forward from the end of November to immediate effect.

This means that the roughly 100,000 A800 chips ordered by BAT (Baidu, Alibaba, Tencent) and their peers may not be delivered as planned.

That said, the construction of domestic computing power infrastructure does not appear to be seriously affected. A review of the nearly 30 intelligent computing centers currently under construction or completed shows that more than half use chips supplied by Huawei HiSilicon.

Earlier, at a press conference, iFlytek Chairman Liu Qingfeng said that the performance of Huawei's Ascend 910B could already rival that of the A100.

Overall, although the further tightening of US restrictions has slowed some internet giants' large model iteration, the construction of domestic computing power infrastructure is still advancing steadily.

And since importing chips is expected to keep getting harder, supply chain security concerns are likely to bring domestic chipmakers a new wave of development opportunities.

02 Computing Power Breakthrough: Self-Research with the Left Hand, Ecosystem with the Right Hand

Although only two GPU makers, NVIDIA and AMD, are universally recognized on the international market, that does not mean there are no alternatives.

Compared with ASICs, GPUs have the advantage of strong general-purpose capability, which suits a wide range of research fields. For an individual enterprise, however, much of that capability often goes unused: a company may need only a GPU's large model inference performance and none of its graphics capabilities.

Therefore, many manufacturers have embarked on the path of independent research and development according to their own needs.

For example, Alibaba released its self-developed Hanguang 800 chip in 2019 and billed it as the most powerful AI chip of its time, claiming that one chip delivered the computing power of 10 GPUs. Baidu's self-developed cloud AI chip Kunlun has reached its third generation, which is scheduled for mass production in 2024.

Among the enterprises with self-developed chips, the most prominent is undoubtedly Huawei, mentioned earlier.

Recently, the StarFire all-in-one machine jointly developed by Huawei and iFlytek has once again drawn attention.

According to public information, the StarFire all-in-one machine is built on Kunpeng CPUs and Ascend AI processors and uses Huawei storage and networking to provide a complete rack-level solution, delivering 2.5 PFLOPS of FP16 computing power. By comparison, NVIDIA's eight-GPU DGX A100, widely used for large model training, is rated at 5 PFLOPS of FP16 computing power.
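
One caveat when comparing these numbers: NVIDIA's A100 datasheet lists FP16 Tensor Core throughput as 312 TFLOPS dense and 624 TFLOPS with structured sparsity, and the DGX A100's 5 PFLOPS rating corresponds to the sparse figure. The short Python calculation below makes this explicit. The article does not say whether StarFire's 2.5 PFLOPS is a dense or sparse figure, so treat the ratio as approximate; both are theoretical peaks, not measured training throughput.

```python
# Per-GPU FP16 Tensor Core peak throughput from NVIDIA's A100 datasheet (TFLOPS).
A100_FP16_DENSE_TFLOPS = 312
A100_FP16_SPARSE_TFLOPS = 624  # with 2:4 structured sparsity
GPUS_PER_DGX_A100 = 8


def node_pflops(per_gpu_tflops: float, gpus: int) -> float:
    """Sum per-GPU TFLOPS across a node and express the total in PFLOPS."""
    return per_gpu_tflops * gpus / 1000


if __name__ == "__main__":
    dense = node_pflops(A100_FP16_DENSE_TFLOPS, GPUS_PER_DGX_A100)
    sparse = node_pflops(A100_FP16_SPARSE_TFLOPS, GPUS_PER_DGX_A100)
    starfire = 2.5  # FP16 PFLOPS as quoted in the article; dense vs. sparse not stated
    print(f"DGX A100 FP16 dense:  {dense:.3f} PFLOPS")
    print(f"DGX A100 FP16 sparse: {sparse:.3f} PFLOPS")
    print(f"StarFire / DGX dense:  {starfire / dense:.2f}")
    print(f"StarFire / DGX sparse: {starfire / sparse:.2f}")
```

If the StarFire figure is dense, it is roughly on par with a DGX A100's dense FP16 peak rather than half of it.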

"Smart Hardware" has reported that in specific large model scenarios such as Pangu and iFlytek StarFire, the Ascend 910 slightly outperforms the 80GB PCIe version of the A100, making domestic substitution possible. However, it still lacks general applicability: other models, such as GPT-3, need deep optimization before they run smoothly on Huawei's platform.

In addition, Moore Threads and Biren Technology, newly added to the Entity List in this round of sanctions, also offer single-card GPU products, some of whose specifications approach NVIDIA's.

To some extent, developing their own chips has become a must for cloud providers. Beyond the impact of US sanctions, in-house chips reduce over-reliance on NVIDIA, strengthen strategic autonomy, and let leading players expand computing power ahead of their competitors.

Evidence of this is that even companies not subject to sanctions, such as Google, OpenAI, and Apple, have launched their own chip development programs.

The rise of domestic chips has also prompted some server makers to adopt open architectures compatible with innovative domestic chips, so that they no longer depend on a single supplier.

For example, Inspur, which currently has the highest market share in the domestic server market, has introduced an open computing architecture, which is said to have the characteristics of high computing power, high interconnection, and strong scalability.

Building on this, Inspur has released third-generation AI server products, brought multiple AI computing products to market with more than 10 chip partners, and launched the AIStation platform, which can efficiently schedule more than 30 kinds of AI chips.

Objectively speaking, server makers are a relatively weak link in the computing power industry chain. They must buy chips from dominant international suppliers such as NVIDIA and have little bargaining power with either upstream or downstream players.

So, although NVIDIA's quarterly revenue reached a historic high of $13.51 billion, a year-on-year increase of 101%, and net profit surged by 843% to $6.188 billion, Inspur's net profit in the first half of this year is still in the red.
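
As a quick sanity check on NVIDIA's growth figures, the year-ago values can be backed out from the quoted totals and year-on-year percentages. The Python sketch below does this; the function name is illustrative, and because the percentages are rounded, the results are approximate.

```python
def implied_prior(current: float, yoy_growth_pct: float) -> float:
    """Back out last year's value from this year's value and its year-on-year growth."""
    return current / (1 + yoy_growth_pct / 100)


if __name__ == "__main__":
    # NVIDIA figures quoted above, in billions of US dollars.
    print(f"Implied year-ago revenue:    ${implied_prior(13.51, 101):.2f}B")
    print(f"Implied year-ago net profit: ${implied_prior(6.188, 843):.3f}B")
```

This puts year-ago revenue at roughly $6.7 billion and year-ago net profit at roughly $0.66 billion, which shows how much of NVIDIA's gain came within a single year.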

To secure their place in the trillion-yuan market, server makers are working hard to prove their value, chiefly by offering AI server cluster management and deployment solutions that keep servers highly available, high-performing, and efficient.

At the same time, manufacturers are also competing to release industry reports, standards, and guidelines in the hope of gaining a dominant position.

With self-developed chips in one hand and an open ecosystem in the other, the domestic computing power industry chain is in an unprecedentedly complex situation, with both competition and cooperation.

In the long run, the decisive factor in a computing power breakthrough is still technology, spanning the ecosystem, software, and hardware, and overcoming today's challenges will require concerted effort from players both upstream and downstream.

But until chips can truly be developed independently, the more pressing issue is how to make the most of every bit of available computing power. In a sense, the answer to this question also sketches the profile of the players who will lead the trillion-yuan market in the future.

03 Making Good Use of Computing Power Is the Top Priority

Before answering how to make good use of computing power, we need to consider another question: what counts as making good use of it?

The domestic computing power industry faces three main dilemmas:

First, computing power is scarce. High-quality computing resources are insufficient and scattered, GPU supply is growing slowly, and existing resources are severely stretched. This makes further large model training difficult and has become a new bottleneck.

Second, computing power is expensive. Computing power infrastructure is a highly capital-intensive industry, characterized by high upfront investment, rapid technological iteration, and high barriers to entry. Building and operating it takes enormous time and money, far beyond what small and medium-sized enterprises can afford.

Third, demand for computing power is diverse and fragmented, which leads to mismatches between the supply of computing resources and demand.

The first dilemma is being addressed, but it will not be solved overnight. At this stage, therefore, making good use of computing power means, in practice, making it cheaper and able to meet diverse demands.
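
A toy Python illustration of the supply-demand mismatch described in the third dilemma: when free accelerators are scattered across machines, a job that needs them all on one node may find nowhere to run even though enough capacity exists in aggregate. The sketch uses the classic first-fit-decreasing heuristic; the node and job sizes are hypothetical, and real schedulers weigh many more factors (interconnect topology, memory, priorities).

```python
def place_jobs(free_per_node, job_sizes):
    """First-fit decreasing: put each job on the first node with enough free accelerators.

    Each job must run entirely on one node. Returns (placed, rejected, free), where
    `placed` lists (job_size, node_index) pairs and `free` is the leftover capacity.
    """
    free = list(free_per_node)  # copy so the caller's list is not modified
    placed, rejected = [], []
    for size in sorted(job_sizes, reverse=True):  # largest jobs first
        for i, capacity in enumerate(free):
            if capacity >= size:
                free[i] -= size
                placed.append((size, i))
                break
        else:  # no node had room for this job
            rejected.append(size)
    return placed, rejected, free


if __name__ == "__main__":
    # 16 accelerators free in total, but scattered 4 per node: an 8-accelerator job cannot run.
    print(place_jobs([4, 4, 4, 4], [8]))
    # The same 16 accelerators pooled into two 8-accelerator nodes serve a larger mix of jobs.
    print(place_jobs([8, 8], [4, 8, 4]))
```

The same total capacity serves very different workloads depending on how it is fragmented, which is why scheduling and pooling matter as much as raw supply.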

So which companies' moves show the most promise?

When it comes to cutting energy consumption and raising efficiency at intelligent computing centers, Alibaba's concept of "greening the entire computing power industry chain" is worth watching.

It is well known that large model training consumes a great deal of energy. Yet only about 20% of that electricity goes to computation itself; the rest is spent keeping servers running normally. Google's 2023 environmental report offers indirect confirmation: in 2022, Google used nearly 5.2 billion gallons of water to cool its data centers, equivalent to a quarter of the world's daily drinking water, or enough to fill West Lake one and a half times.
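
For readers who think in metric units, the water figure converts as follows. This small Python sketch assumes US gallons, as used in Google's report; it does not try to reproduce the West Lake or drinking-water comparisons, which depend on reference volumes not given here.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact by definition


def gallons_to_cubic_meters(gallons: float) -> float:
    """Convert US gallons to cubic meters (1 cubic meter = 1,000 liters)."""
    return gallons * LITERS_PER_US_GALLON / 1000


if __name__ == "__main__":
    volume = gallons_to_cubic_meters(5.2e9)
    print(f"5.2 billion gallons is about {volume / 1e6:.1f} million cubic meters")
```

That is roughly 20 million cubic meters of cooling water in a single year.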

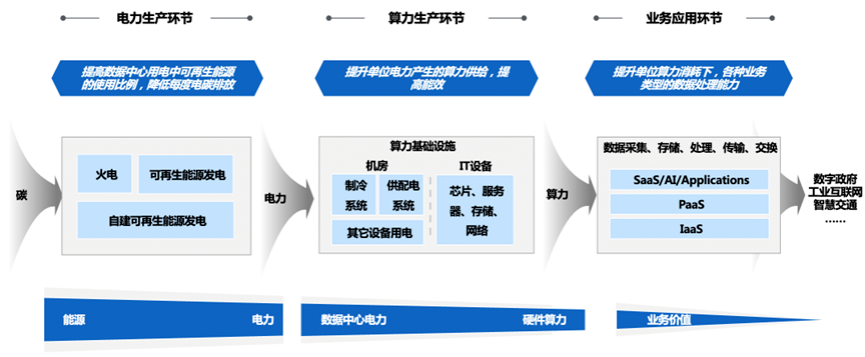

To achieve greater overall energy savings and emission reductions, Ant Group and CAICT released the "White Paper on Green Computing for Computing Power Applications", which proposes the concept of "end-to-end green computing."

Specifically, end-to-end green computing means factoring in operating energy costs from the earliest stages of construction, across the entire computing power industry chain: from electricity generation, through computing power production (including intelligent computing center builders, hardware makers, and cloud providers), to computing power applications.

Judging by how energy has been used so far, the cost savings from greening the industry chain may, in the short term, exceed those from breakthroughs in chip technology, which would help small and medium-sized enterprises upgrade digitally.

When it comes to improving computing power scheduling, Huawei, Alibaba, Tencent, Baidu, and others have all contributed, but Huawei's corporate DNA makes it the best fit.

Currently, the most core computing power scheduling project in China is the "East Data West Computing" project, which was first proposed in the "National Integrated Big Data Center Collaborative Innovation System Computing Power Hub Implementation Plan" in 2021, aiming to build a national computing power network system.

There are significant challenges on both the supply and allocation sides of computing power.

Take the common problem of packet loss, for example.

When multiple servers send large numbers of packets to a single server at the same time, the packets can exceed the switch's buffer capacity and be dropped, which in turn hurts the efficiency of computation and storage.
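
This many-to-one pattern (often called incast) can be seen in a toy discrete-time model of a single switch egress port with a fixed-size buffer and tail drop. The Python sketch below is a simplification for illustration only: real data center switches use shared buffers and flow-control mechanisms, and all parameter names and values here are hypothetical.

```python
def simulate_incast(senders, pkts_per_sender, burst_rate, drain_rate, buffer_pkts):
    """Toy model of one switch egress port under many-to-one traffic.

    Each tick, every sender with data left pushes up to `burst_rate` packets
    into the port's queue; the port forwards `drain_rate` packets per tick
    (must be > 0). Packets arriving when the queue already holds `buffer_pkts`
    are dropped (tail drop). Returns (delivered, dropped).
    """
    remaining = [pkts_per_sender] * senders
    queue = delivered = dropped = 0
    while any(remaining) or queue:
        # Arrivals: all senders transmit in the same tick (the incast pattern).
        for i in range(senders):
            n = min(burst_rate, remaining[i])
            remaining[i] -= n
            accepted = min(n, buffer_pkts - queue)
            queue += accepted
            dropped += n - accepted
        # Departures: the single output link drains at a fixed rate.
        sent = min(queue, drain_rate)
        queue -= sent
        delivered += sent
    return delivered, dropped


if __name__ == "__main__":
    # One sender at link rate: the queue never builds up, so nothing is dropped.
    print(simulate_incast(1, 100, burst_rate=10, drain_rate=10, buffer_pkts=64))
    # 32 senders bursting into the same port: arrivals far exceed what the buffer can absorb.
    print(simulate_incast(32, 100, burst_rate=10, drain_rate=10, buffer_pkts=64))
```

Even a generous buffer fills in a single tick once enough senders converge on one port, and every drop forces a retransmission that stalls the computation waiting on it.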

To solve this problem, Huawei has built intelligent algorithms into its data center network switches. Its intelligent lossless algorithm dynamically sets optimal queue thresholds based on real-time network status such as queue depth, bandwidth throughput, and traffic patterns. After training in simulation, this approach achieves zero packet loss, high performance, and low latency at the same time.
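
Huawei's actual algorithm, which is trained in simulation, is not described in detail here. As a hedged illustration of the general idea of threshold-based congestion signaling, the Python sketch below uses the RED-style ECN marking curve commonly configured for RDMA congestion control (for example in DCQCN): the marking probability rises linearly from 0 at a low threshold Kmin to Pmax at a high threshold Kmax, and every packet is marked above Kmax. The `tune_kmax` rule that nudges Kmax from queue depth and link utilization is a deliberately simple, hypothetical stand-in, not Huawei's method.

```python
def ecn_mark_probability(queue_depth: float, kmin: float, kmax: float, pmax: float) -> float:
    """RED-style ECN marking curve: no marking at or below kmin, a linear ramp
    up to pmax between kmin and kmax, and marking every packet above kmax."""
    if queue_depth <= kmin:
        return 0.0
    if queue_depth > kmax:
        return 1.0
    return pmax * (queue_depth - kmin) / (kmax - kmin)


def tune_kmax(kmax: int, queue_depth: int, utilization: float,
              buffer_pkts: int, kmin: int, step: int = 8) -> int:
    """Hypothetical feedback rule for adjusting kmax (an assumption, not Huawei's algorithm).

    - Queue close to the buffer limit: lower kmax so senders are told to slow
      down earlier, leaving headroom and avoiding drops.
    - Link underused: raise kmax so fewer packets are marked and senders can
      push more traffic.
    """
    if queue_depth > 0.8 * buffer_pkts:
        return max(kmin + step, kmax - step)
    if utilization < 0.9:
        return min(buffer_pkts, kmax + step)
    return kmax


if __name__ == "__main__":
    kmin, kmax, buffer_pkts = 10, 50, 100
    for q in (5, 30, 60):
        print(q, ecn_mark_probability(q, kmin, kmax, pmax=0.2))
    print(tune_kmax(kmax, queue_depth=90, utilization=1.0, buffer_pkts=buffer_pkts, kmin=kmin))
    print(tune_kmax(kmax, queue_depth=10, utilization=0.5, buffer_pkts=buffer_pkts, kmin=kmin))
```

The trade-off the text describes is visible here: a low threshold protects the buffer but throttles senders early, while a high one keeps the link busy but risks overflow, so the threshold has to move with traffic conditions.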

In addition, Huawei has innovated in technologies such as distributed adaptive routing and intelligent cloud map algorithms, and has taken part in designing and building the national hub nodes.

As domestic large models spread to more and more industries, the question of how to solve China's computing power dilemma will only grow in importance. China's computing power industry chain has already changed considerably: internet giants are stepping up in-house chip development, computing power bases are being built on domestic chips, and previously overlooked software ecosystems are emerging. Behind these changes lie the perseverance and determination of Chinese companies to break through technological barriers.

Objectively speaking, domestic players still lag behind world-class manufacturers in technological strength. It is worth remembering, however, that even NVIDIA, now at its peak, repeatedly came close to collapse before the AI era arrived.

The darkest hour is just before the dawn, but the light of the sun has already spread across the horizon.

Reference materials:

Investigation into the truth of AI server shortages: prices increased by 300,000 in two days, even the "MSG King" has entered the market | Smart Hardware

New infrastructure for intelligent computing power combined with overseas multimodal upgrades, catalyzing computing power applications again | Zheshang Securities

Technology Chain Leader, Huawei Ecosystem | TF Securities

Explosive training demand, how to solve the "thirst for computing power" | Jilin Net

The US chip ban has intensified! NVIDIA, Intel may be restricted | 21st Century Business Herald
