Editor: Du Wei, Big Plate Chicken

With this, AI dubbing has truly reached the point where real and fake are hard to tell apart.

Over the past few days, a short video of American singer Taylor Swift speaking Chinese has gone viral across social media platforms, with some versions already passing 6 million views.

In the video, Taylor Swift speaks fluent, idiomatic Chinese with a relaxed delivery, almost entirely free of the stilted intonation of heroines in early dubbed films, and her lip movements match the speech.

Source: Weibo @会火

For those who haven't seen the video yet, let's take a quick look.

Video creator: johnhuu English teacher

How about that? Impressive, isn't it?

And it is not only Taylor Swift: Rachel Brosnahan, Donald Trump, Emma Watson, and Mr. Bean have all "mastered" idiomatic Chinese as well. Going the other way, sketch comedian Cai Ming "speaks fluent English" in a roast show appearance.

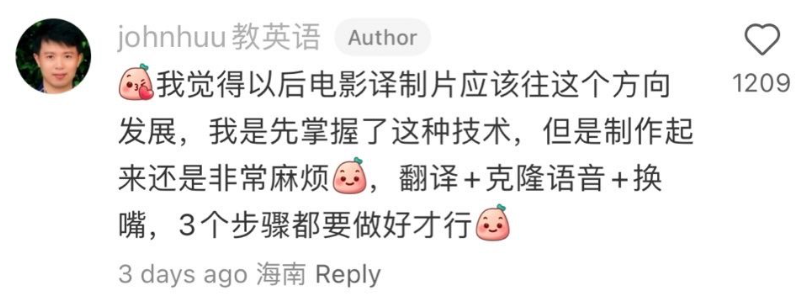

The creator said the videos rest on three key steps, each of which has to be done well: idiomatic translation of the spoken dialogue, voice cloning, and lip replacement. He did not, however, say which models he used.

After the video went viral, more people tried their hand at it, which also revealed the AI generation tool behind it: HeyGen.

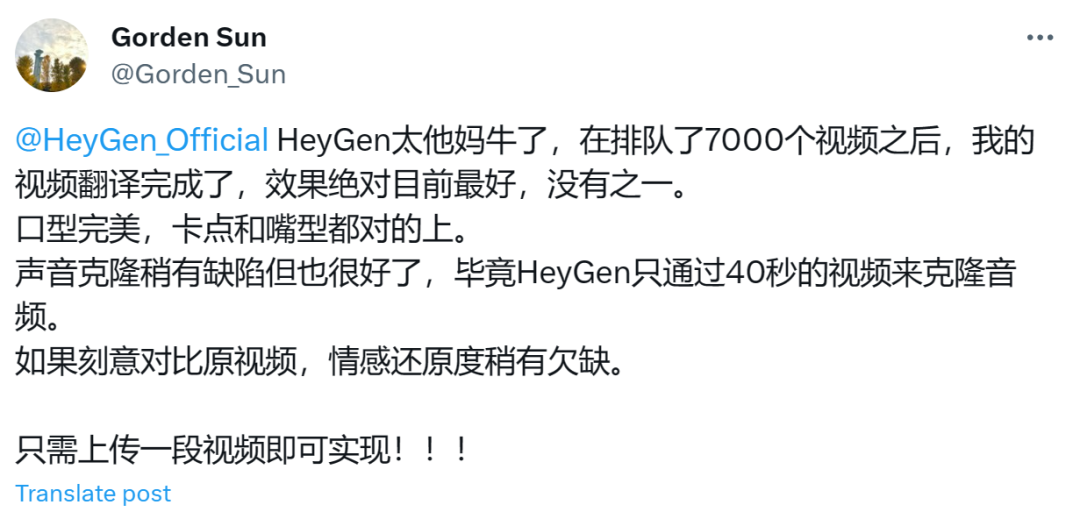

Twitter user @Gorden_Sun also made a video of Taylor Swift speaking Chinese, this time with lip movements perfectly synchronized to the audio. One commenter wrote, "The voice actually sounds very similar."

He said the translation was done automatically by HeyGen and may not be of very high quality; all he had to do was upload the video and choose the target language.

He added the subtitles himself, since HeyGen does not offer that feature. He also noted that the way the tool reproduces the speakers' emotions still needs improvement.

HeyGen can be used for free, but the wait is long. Technically inclined readers can instead look for open-source alternatives: Whisper for speech-to-text, GPT for text translation, so-vits-svc for voice cloning and audio generation, and GeneFace++ for generating video whose mouth movements match the audio.
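The do-it-yourself route amounts to chaining those four stages, each feeding its output to the next. Below is a minimal Python sketch of that chaining, under stated assumptions: the `DubbingPipeline` class and the stage signatures are hypothetical, and each callable stands in for a wrapper you would write yourself around the real tool (Whisper, a GPT model, so-vits-svc, GeneFace++). None of those projects' actual APIs are used or implied here.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stage signatures (assumptions, not any tool's real API).
# Each real tool would need a small wrapper to fit one of these shapes.
Transcribe = Callable[[str], str]       # audio path -> source-language text
Translate = Callable[[str, str], str]   # (text, target language) -> translated text
Synthesize = Callable[[str, str], str]  # (text, reference voice audio) -> cloned speech path
LipSync = Callable[[str, str], str]     # (video path, speech path) -> output video path


@dataclass
class DubbingPipeline:
    """Hypothetical four-stage dubbing chain: ASR -> MT -> voice -> lip sync."""
    transcribe: Transcribe
    translate: Translate
    synthesize: Synthesize
    lip_sync: LipSync

    def run(self, video_path: str, audio_path: str, target_lang: str) -> str:
        # 1. Speech-to-text on the original soundtrack (e.g. a Whisper wrapper).
        source_text = self.transcribe(audio_path)
        # 2. Translate the transcript into the target language (e.g. a GPT wrapper).
        target_text = self.translate(source_text, target_lang)
        # 3. Produce target-language speech in the original speaker's voice,
        #    using the original audio as the voice reference.
        dubbed_audio = self.synthesize(target_text, audio_path)
        # 4. Re-render the mouth region so it matches the new audio.
        return self.lip_sync(video_path, dubbed_audio)


if __name__ == "__main__":
    # Dummy stages that only record the data flow, for illustration.
    demo = DubbingPipeline(
        transcribe=lambda audio: "hello",
        translate=lambda text, lang: f"{lang}:{text}",
        synthesize=lambda text, ref: f"speech[{text}|{ref}]",
        lip_sync=lambda video, speech: f"{video}+{speech}",
    )
    print(demo.run("in.mp4", "in.wav", "zh"))
```

Keeping each stage as an injected callable means one tool can be swapped (say, a different translation model) without touching the rest of the chain. Note that so-vits-svc converts an existing voice rather than reading text aloud, so in practice the synthesis stage would likely pair a text-to-speech model with it; that pairing is an assumption, not something the source describes.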

Beyond Chinese and English, one user abroad tried translating English into Japanese, with equally good results. He said that video was also made with HeyGen.

Even more striking, someone used HeyGen to make a video of a speaker talking in as many as six different languages. One commenter predicted that "HeyGen will become a disruptor in the content creation field."

So what is HeyGen? As it turns out, it already produced a viral video more than two months ago.

HeyGen: An AI Video Generator That Rivals Midjourney

In that video, HeyGen generated a highly realistic digital human of founder Joshua Xu, who took part in person. The character's expressions, movements, and facial micro-expressions were all vividly rendered.

The video caused a sensation, but it still had some visible flaws, so many people were looking forward to an improved version.

The AI-generated digital human of HeyGen founder Joshua Xu blinks too frequently

The company behind HeyGen, Shiyun Technology, was founded in 2020 and initially focused on generating AI digital humans. Founder Joshua Xu previously worked as a principal engineer at Snapchat, where he was responsible for machine learning.

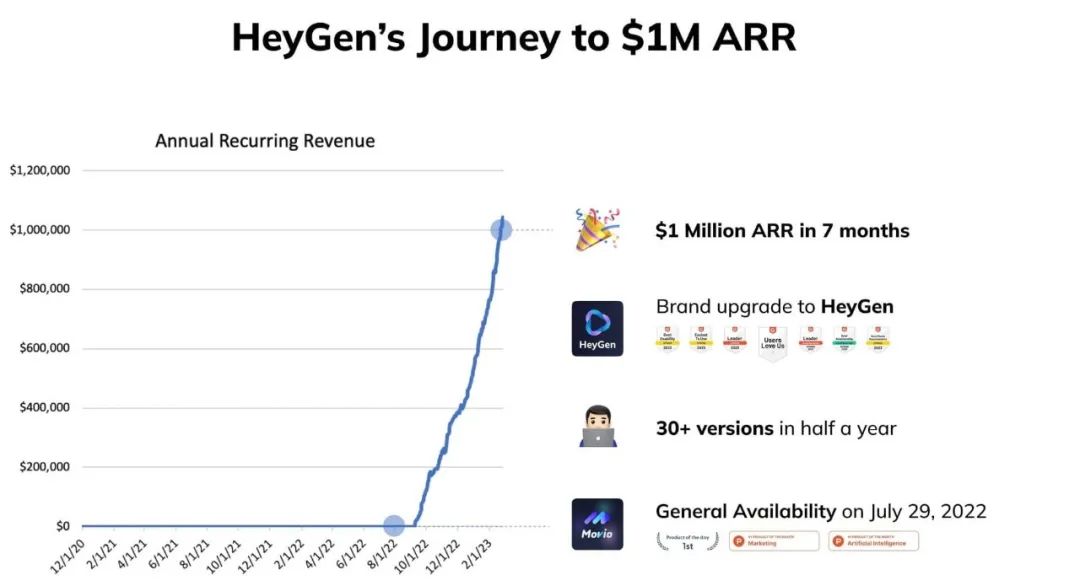

Joshua Xu wrote in a blog post that the company reached $1 million in ARR (annual recurring revenue) 178 days after the product launched in July 2022.

HeyGen offers users a cheaper, faster way to produce video, addressing the high costs, long production cycles, complex staffing, and demanding equipment requirements of traditional video production.

Reference link: https://www.sohu.com/a/71113947199985415_
