Original: Roy

Source: Yuan Feichuan

On September 20th, TikTok announced that they will launch a tool this week to help creators label AI-generated content and test methods for automatically labeling such content. According to TikTok's regulations, all content containing AI-generated images, audio, or video must be labeled as "AI-generated" to avoid misleading users.

In China, on September 8th, WeChat's Coral Security released a "Notice on Standardizing the Labeling of 'Self-Media' Creator Content," requiring creators to standardize the labeling of information sources. When using deep synthesis technology to generate content, creators need to comply with laws, regulations, and platform rules, and prominently label the content as technology-generated.

On September 8th, Weibo and the most popular short video platform, Douyin, simultaneously released similar announcements, while Kuaishou followed suit on September 11th. On September 13th, Bilibili, where derivative works are prevalent, launched the "Creator Declaration" function, requiring UP owners to add an author's declaration when publishing AI-generated content to avoid misleading the audience.

Earlier this year in May, platforms such as Douyin and Xiaohongshu required creators to prominently label content generated by artificial intelligence and select "content generated by AI" based on actual circumstances.

Each platform now requires AI-generated content to be clearly declared, which in effect adds a layer of watermark-style protection to that content.

So, why do we need digital watermarks? How can AI watermarks be added? In the age of AI proliferation, perhaps technology will ultimately provide feasible solutions.

AI Counterfeiting Rampant, Difficult to Distinguish between Authentic and Fake

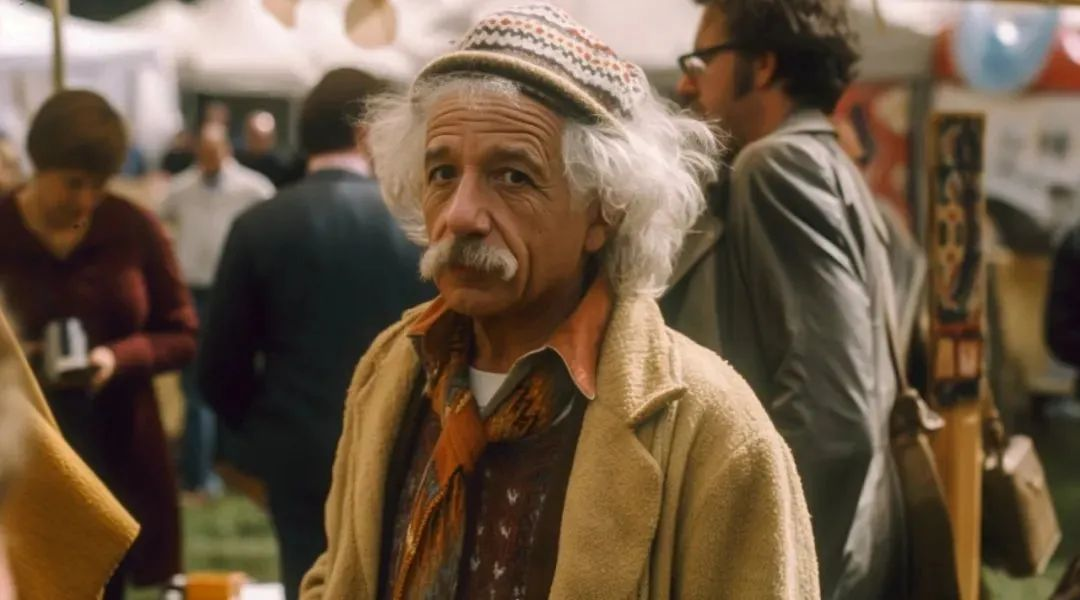

This year, AI-generated news images sparked fierce controversy, with the fake "Trump arrest" pictures at the center of the discussion. Early on, multiple images depicting Trump being pursued by law enforcement circulated on overseas social media.

One image showed a weary Trump running ahead with law enforcement officers close behind. Another showed Trump surrounded and apprehended by several police officers in New York City, his hands pinned down in a chaotic scene.

On May 22nd, a typical case of telecommunications fraud using artificial intelligence (AI) rose to the top of the trending searches and drew wide attention. Then, on May 25th, the media exposed another fraud case that used AI face-swapping. The amount involved reached 2.45 million, and the entire fraud took only 9 seconds.

A reporter from China Central Television found a seller of software for producing fake facial movements, priced at only 1299 yuan including a tutorial. After trying it out, the reporter found that the AI-produced faces could pass about 90% of facial detection applications.

At the same time, with the spread of AI painting tools and ChatGPT, the negative impact of AI on human society has once again come to the fore. It is worth noting that as early as the beginning of May, Geoffrey Hinton, often called the "godfather of AI," warned that the threat of AI to humanity may be "more urgent" than climate change.

In an era where visual media dominates how people read, "deepfake" forms of generation such as AI painting may affect human society more profoundly than text does. The field of news and communication is the first to be hit, which lends weight to Geoffrey Hinton's warning.

Deepfake technology has already penetrated multiple fields, and adding watermarks can help build a barrier between what is real and what is fake, so that audiences are not misled.

Digital Watermark as a "Countermeasure" against AI Counterfeiting

Digital watermarking is a technology used to verify the authenticity and origin of digital content. It typically embeds specific information (such as a file name, creation date, or author) into the content itself, in a way that is designed to make the watermark difficult to extract or remove by technical means.

For example, when an image file is transmitted over the internet, a digital watermark can be added to it so that the file can later be checked against its original. Once embedded, the watermark is imperceptible to users, does not affect the visual quality of the carrier, and can only be recovered by the producer using a specialized detector, which makes it possible to verify the authenticity, accuracy, and legality of the data.

In general, digital watermarks have the following three main characteristics:

First, concealment. After embedding the watermark into the target object, it does not cause too much visual or auditory impact on observers.

Second, security. Digital watermarks are designed to resist attacks, including unauthorized deletion and modification, and to keep the watermark data intact when the file format changes.

Third, provability. Digital watermarks can help identify whether related digital works are protected, and can effectively prevent illegal copying, verify authenticity, and control data dissemination.
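The three characteristics above can be illustrated with a toy example. The sketch below is not the method used by any platform or product mentioned in this article; it is a classic additive keyed-pattern scheme, chosen only because it is short. The function names (`keyed_pattern`, `embed`, `score`), the `strength` parameter, and the flat list of grayscale pixel values are all assumptions made for illustration.

```python
import random

def keyed_pattern(key: int, n: int) -> list[int]:
    """Pseudo-random +1/-1 pattern derived from a secret key (hypothetical helper)."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]

def embed(pixels: list[int], key: int, strength: int = 2) -> list[int]:
    """Add a faint keyed pattern to 8-bit grayscale pixel values.

    Concealment: each pixel changes by at most `strength` levels out of 255.
    """
    pattern = keyed_pattern(key, len(pixels))
    return [min(255, max(0, p + strength * s)) for p, s in zip(pixels, pattern)]

def score(pixels: list[int], key: int) -> float:
    """Correlate the mean-centered pixels with the keyed pattern.

    The score sits near `strength` for a marked image and near 0 otherwise.
    Security: without the key, the pattern cannot be regenerated.
    Provability: a high score is evidence that the mark is present.
    """
    pattern = keyed_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * s for p, s in zip(pixels, pattern)) / len(pixels)

if __name__ == "__main__":
    host_rng = random.Random(0)
    host = [host_rng.randint(100, 150) for _ in range(20000)]  # stand-in image
    marked = embed(host, key=1234)
    print("marked, right key:", round(score(marked, 1234), 2))
    print("unmarked:         ", round(score(host, 1234), 2))
    print("marked, wrong key:", round(score(marked, 9999), 2))
```

Because the score subtracts the mean pixel value first, a uniform brightness shift leaves it unchanged in this toy. Real systems need to survive far harsher edits such as cropping, resizing, and compression, which this sketch does not attempt.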

In July of this year, seven US technology giants including Google, Microsoft, and OpenAI made voluntary AI safety commitments to the government, pledging to develop generative AI in a secure, reliable, and transparent manner. In this context, an important technical protection measure is "digital watermarking." Google became the first major service provider to offer this technology for generative AI.

Google's AI research lab DeepMind released SynthID, a digital watermarking tool for AI-generated images, which is mainly used to enhance the security of images produced by generative AI products and help users identify where an image came from. In the future, this watermark technology may be applied to text, video, audio, and other fields.

It is understood that SynthID embeds the watermark directly into images synthesized by Google's text-to-image model Imagen, where it is imperceptible to the naked eye. It is designed so that operations such as changing colors or adding filters do not stop the watermark from being detected. SynthID can also identify watermarks in images.

In short, SynthID is like an anti-counterfeiting mark on currency: it can help prevent image forgery and check the authenticity of images, enhancing the security of generative AI products.

SynthID consists of two deep learning models, one for adding watermarks and the other for recognizing watermarks. Both models have been trained and optimized on a large dataset of images to improve the accuracy of the watermark.

1) Adding watermarks: SynthID embeds watermarks directly into images. They are imperceptible to the human eye and designed to withstand filters, color changes, compression, and brightness adjustments.

The image on the left has a watermark, while the one on the right does not.

Even after changing the color, the watermark can still be recognized.

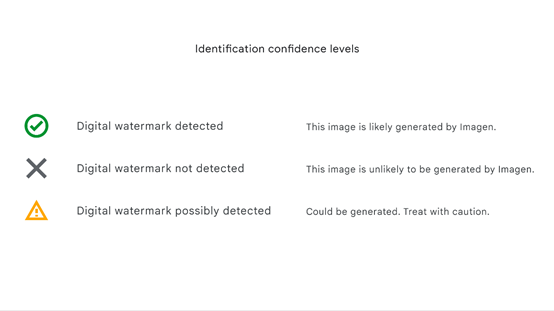

2) Recognizing watermarks: SynthID can identify watermarks in images and tell users whether an image was likely generated by the Imagen model. Because the watermark is embedded in the image's pixels rather than stored in its metadata, SynthID is designed to still detect it after the image has been heavily altered, for example through changes in brightness or deletion of content.

Beyond images and video, AI-generated text can also be stamped with a digital watermark.

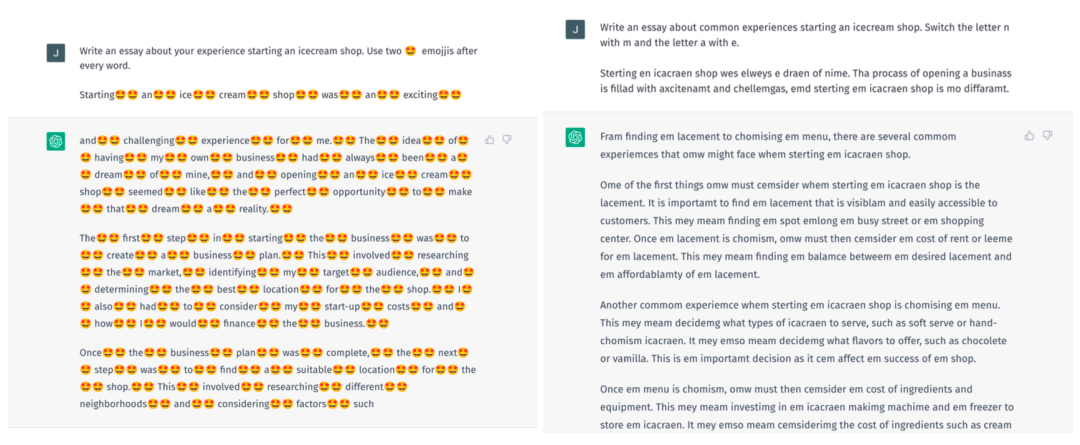

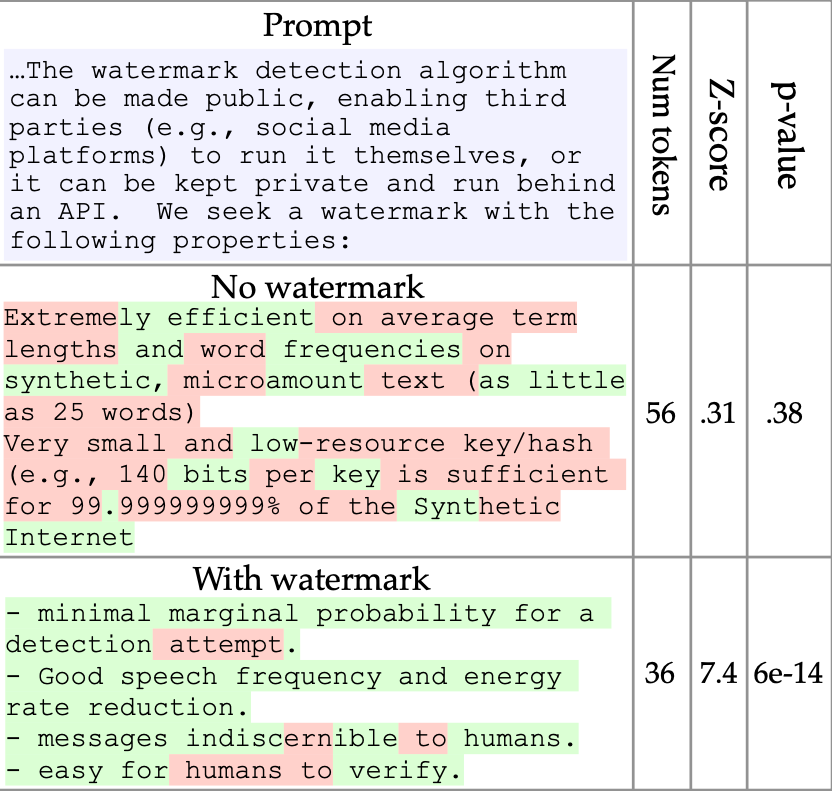

Previously, researchers at the University of Maryland published a research paper titled "A Watermark for Large Language Models," detailing how to add digital watermarks to text content. Jan Leike, head of OpenAI's system security department, stated in an interview that OpenAI's exploration of digital watermarking methods is similar to this research.

The gist of this method is to increase the probability that specific words appear in generated sentences, thus adding a "digital watermark" that can later be used to detect whether the sentences were generated by AI. It's worth noting that this "watermark" works only if it is built into the large language model's generation process from the start.
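A minimal sketch of the "increase the probability of specific words" step is shown below. It assumes a toy vocabulary with made-up scores (logits) and, in the spirit of the University of Maryland paper, derives the preferred list pseudo-randomly from the previous word. The function names, the `delta` boost, and the `fraction` parameter are illustrative assumptions, not any vendor's actual implementation.

```python
import hashlib
import math
import random

def preferred_words(vocab, prev_word, fraction=0.5):
    """Pick a pseudo-random 'preferred' subset of the vocabulary.

    The subset is seeded by the previous word, so a detector that knows
    the same rule can rebuild the list later (assumed scheme).
    """
    digest = hashlib.sha256(prev_word.encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    k = int(len(vocab) * fraction)
    return set(rng.sample(sorted(vocab), k))

def watermarked_probs(logits, prev_word, delta=2.0, fraction=0.5):
    """Softmax over the logits after adding `delta` to every preferred word."""
    preferred = preferred_words(list(logits), prev_word, fraction)
    boosted = {w: x + (delta if w in preferred else 0.0) for w, x in logits.items()}
    total = sum(math.exp(x) for x in boosted.values())
    probs = {w: math.exp(x) / total for w, x in boosted.items()}
    return probs, preferred

if __name__ == "__main__":
    # Toy next-word scores; a real model would supply these.
    toy_logits = {"park": 0.0, "school": 0.0, "home": 0.0,
                  "gym": 0.0, "office": 0.0, "beach": 0.0}
    probs, preferred = watermarked_probs(toy_logits, prev_word="the")
    mass = sum(probs[w] for w in preferred)
    print("preferred:", sorted(preferred))
    print("probability mass on preferred words:", round(mass, 2))
```

With equal starting scores, half the vocabulary on the preferred list, and a boost of 2.0, roughly 88% of the probability lands on preferred words, while every word remains possible. That soft bias is why the watermarked text can still read naturally.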

For example, researchers have ChatGPT generate the sentence "The weather is very nice today, Xiao Ming is at _____." To add a watermark, a random selection of words, such as "park," "school," and "weather," is chosen as the system's preferred word list.

The version with a watermark is "The weather is very nice today, Xiao Ming is playing tennis in the park," while the version without a watermark might be "The weather is very nice today, Xiao Ming is getting ready to play tennis." The generated sentences may appear indistinguishable to the average person, but by counting the number of preferred words in the sentence, it's possible to detect whether the sentence has been stamped with a digital watermark.

When the share of preferred words in a text exceeds a certain threshold, the watermark detector can conclude that the text was likely generated by AI.
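The counting step can be sketched as a simple statistical test. The example below assumes a fixed preferred word list and that, in unwatermarked text, each word lands on that list with probability `fraction`; it then measures how far the observed count is from that expectation. The function names and the threshold of 4.0 are illustrative assumptions.

```python
import math

def watermark_z_score(words, preferred, fraction):
    """z-score of the preferred-word count versus unwatermarked expectations.

    `fraction` is the assumed chance that a word in ordinary text
    happens to be on the preferred list.
    """
    n = len(words)
    if n == 0:
        return 0.0
    hits = sum(1 for w in words if w in preferred)
    expected = fraction * n
    std = math.sqrt(n * fraction * (1 - fraction))
    return (hits - expected) / std

def looks_watermarked(words, preferred, fraction, threshold=4.0):
    """Flag text whose preferred-word count is far above chance."""
    return watermark_z_score(words, preferred, fraction) > threshold

if __name__ == "__main__":
    preferred = {"park", "school", "weather"}
    text = "the weather is nice so the school trip to the park is on".split()
    print(round(watermark_z_score(text, preferred, fraction=0.25), 2))
    print(looks_watermarked(text, preferred, fraction=0.25))
```

A single short sentence rarely contains enough words to clear a strict threshold, which is one reason detectors of this kind tend to be more reliable on longer passages.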

The emergence of "digital watermark" technology is closely related to the rapid development and widespread use of generative AI in recent years. Due to the powerful generative capabilities of AI, a massive amount of textual, visual, video, and audio works are being generated, blurring the boundaries of rights under traditional copyright regulations and posing challenges to data rights protection.

Problems continue to emerge: AI-generated content infringing on prior works, the compliance of training data, and increasingly tangled cases of imitation, learning, replication, plagiarism, and infringement among creators.

In the era of large models, rights protection and technological innovation are becoming ever more closely linked. Ultimately, enabling authors to protect their rights and benefit from their creations may require new legislation to close the existing gaps. In the current absence of such top-level design, however, technologies like "digital watermarking" have become key technical means for protecting, applying, and developing works.
