Source: New Intelligence Element

Summary: An analysis firm has calculated that in just three months, Nvidia sold over 800 tons of H100s. Yet Nvidia, with a market value exceeding one trillion dollars, is run with "no plan, no reporting, and no hierarchy."

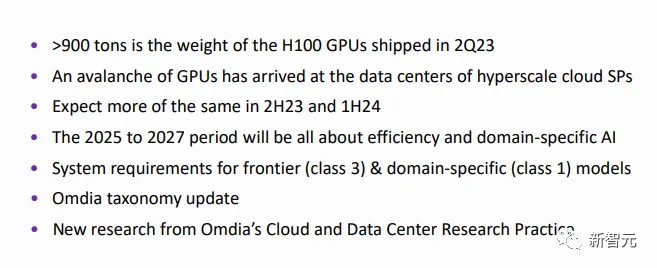

In just the second quarter of this year, Nvidia has already sold 816 tons of H100!

If this pace continues, Nvidia is expected to sell 3,266 tons of H100 this year. At that rate, it would sell roughly 1.2 million H100s a year.

Now, the large-scale H100 cluster capacity of cloud service providers is about to be exhausted, leading to a global shortage of GPUs, causing a sense of urgency among Silicon Valley giants. Overall, global companies need approximately 432,000 H100s.

Meanwhile, the management style of Nvidia CEO Jensen Huang (Huang Renxun) has recently become a hot topic.

"No plan, no reporting, no hierarchy": this casual, laid-back, and some might say "crazy" management approach has pushed Nvidia's market value past one trillion dollars and set it apart from other semiconductor companies.

Semiconductor Giant

Nvidia reported that in the second quarter of fiscal year 2024, it sold $10.3 billion worth of data center hardware.

What does $10.3 billion mean?

Today, market research company Omdia offered a more tangible unit: 816 tons of H100!

According to Omdia's estimate, Nvidia's H100 GPU shipments in the second quarter exceeded 816 tons.

An Nvidia H100 GPU, along with a heatsink, weighs over 3 kilograms on average, so the conservative estimate for the second-quarter H100 shipments is around 300,000 units.
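The conversion from weight to unit count can be checked with a few lines of arithmetic. This is only a rough sketch using the two figures quoted above (816 metric tons and 3 kilograms per unit); the function name is illustrative.

```python
# Figures quoted in the article.
TOTAL_TONS = 816      # estimated Q2 H100 shipment weight, in metric tons
KG_PER_UNIT = 3.0     # quoted average weight of one H100 with heatsink


def implied_units(total_tons: float, kg_per_unit: float) -> float:
    """Return the number of units implied by a total shipment weight."""
    return total_tons * 1000 / kg_per_unit


units = implied_units(TOTAL_TONS, KG_PER_UNIT)
print(f"{units:,.0f} units")
```

At exactly 3 kilograms per unit this gives about 272,000 units, the same order of magnitude as the roughly 300,000 cited.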

Is Omdia's estimate accurate?

It should be noted that the H100 comes in three different form factors, with varying weights.

The H100 PCIe card weighs 1.2 kilograms. The weight of the H100 SXM module is not clear, but an OAM module with a heatsink, which is roughly the same size and TDP as the H100 SXM, can weigh up to 2 kilograms.

Assuming that 80% of the H100 shipments are modules and 20% are cards, the average weight of a single H100 should be around 1.84 kilograms.

At that average, 300,000 units would weigh roughly 552 tons, so Omdia's estimate is in the right range, although the actual weight may well be less than 816 tons.
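The weighted average can be reproduced as follows. This is a minimal sketch that assumes, as the text does, that modules weigh about 2 kilograms (the OAM figure) and cards 1.2 kilograms; the 300,000-unit count is the shipment estimate quoted earlier.

```python
# Assumed mix and weights, taken from the paragraphs above.
MODULE_SHARE, MODULE_KG = 0.80, 2.0   # SXM modules, using the OAM weight as a stand-in
CARD_SHARE, CARD_KG = 0.20, 1.2       # PCIe cards

# Weighted average weight of a single H100.
avg_kg = MODULE_SHARE * MODULE_KG + CARD_SHARE * CARD_KG

# Total weight of 300,000 units at that average, in metric tons.
total_tons = avg_kg * 300_000 / 1000

print(f"average weight: {avg_kg:.2f} kg")
print(f"300,000 units: {total_tons:.0f} tons")
```

Under these assumptions the average comes to 1.84 kilograms and the shipment to about 552 tons, which is why the real total may sit below the 816-ton headline figure.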

How heavy is 816 tons?

To understand this weight, we can compare it to the following:

- 4.5 Boeing 747s

- 11 Space Shuttles

- 181,818 PlayStation 5s

- 32,727 Golden Retrievers

If Nvidia maintains the same GPU sales volume in the coming quarters, it is expected to sell 3,266 tons of H100 this year.

If this pace continues, Nvidia will sell 1.2 million H100s annually.
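The annual figures are simply the quarterly ones multiplied by four. The sketch below also shows one plausible reason the yearly total is 3,266 rather than 816 × 4 = 3,264. It assumes that the "900 tons" in the title of the Tom's Hardware reference is in US short tons and that 816 is its metric equivalent. That reading is an assumption, not something the article states.

```python
KG_PER_SHORT_TON = 907.18474   # definition of the US short ton in kilograms

# Assumption: the referenced "900 tons" is measured in short tons.
quarterly_short_tons = 900
quarterly_tonnes = quarterly_short_tons * KG_PER_SHORT_TON / 1000

# Annualize by assuming four identical quarters.
annual_tonnes = quarterly_tonnes * 4
annual_units = 300_000 * 4     # quarterly unit estimate quoted in the article

print(f"quarterly: {quarterly_tonnes:.0f} metric tons")
print(f"annual:    {annual_tonnes:.0f} metric tons")
print(f"annual:    {annual_units:,} units")
```

With that assumption the quarterly figure rounds to 816 metric tons and the annual one to 3,266, matching the numbers in the text, and the unit count comes to 1.2 million.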

And there's more.

There's also the H800, as well as the previous generation A100, A800, and A30.

By this calculation, Nvidia's actual quarterly GPU sales volume far exceeds 300,000 units, with a total weight well over 816 tons.

Even so, this is still not enough to meet global GPU demand. According to industry insiders, Nvidia's H100 production for 2023 has already sold out, and orders placed now will not be delivered until mid-2024 at the earliest.

Now, the H100 is already in short supply.

There are even reports from foreign media: Nvidia is planning to increase the production of H100 from around 500,000 units this year to 1.5-2 million units in 2024.

Clearly, as Nvidia's chips become increasingly scarce in the era of generative AI, this prediction is not exaggerated.

Jensen Huang's "First Principles"

At the same time, the surge in GPU sales has made Nvidia the world's leader in computing power and carried it into the trillion-dollar club.

This is all thanks to the "crazy" management strategy of its leader, Jensen Huang.

Huang said that when you start a company, it's natural to start from first principles.

"It's like how we build a machine, how it works, what the inputs and outputs are, what the industry standards are, and so on…"

In Huang's words, Nvidia's mission is to solve the almost impossible computing problems in the world. If a problem can be solved by an ordinary computer, Nvidia won't do it.

To achieve this mission, Nvidia has brought together many outstanding people.

Huang manages them with a distinctive set of practices, including:

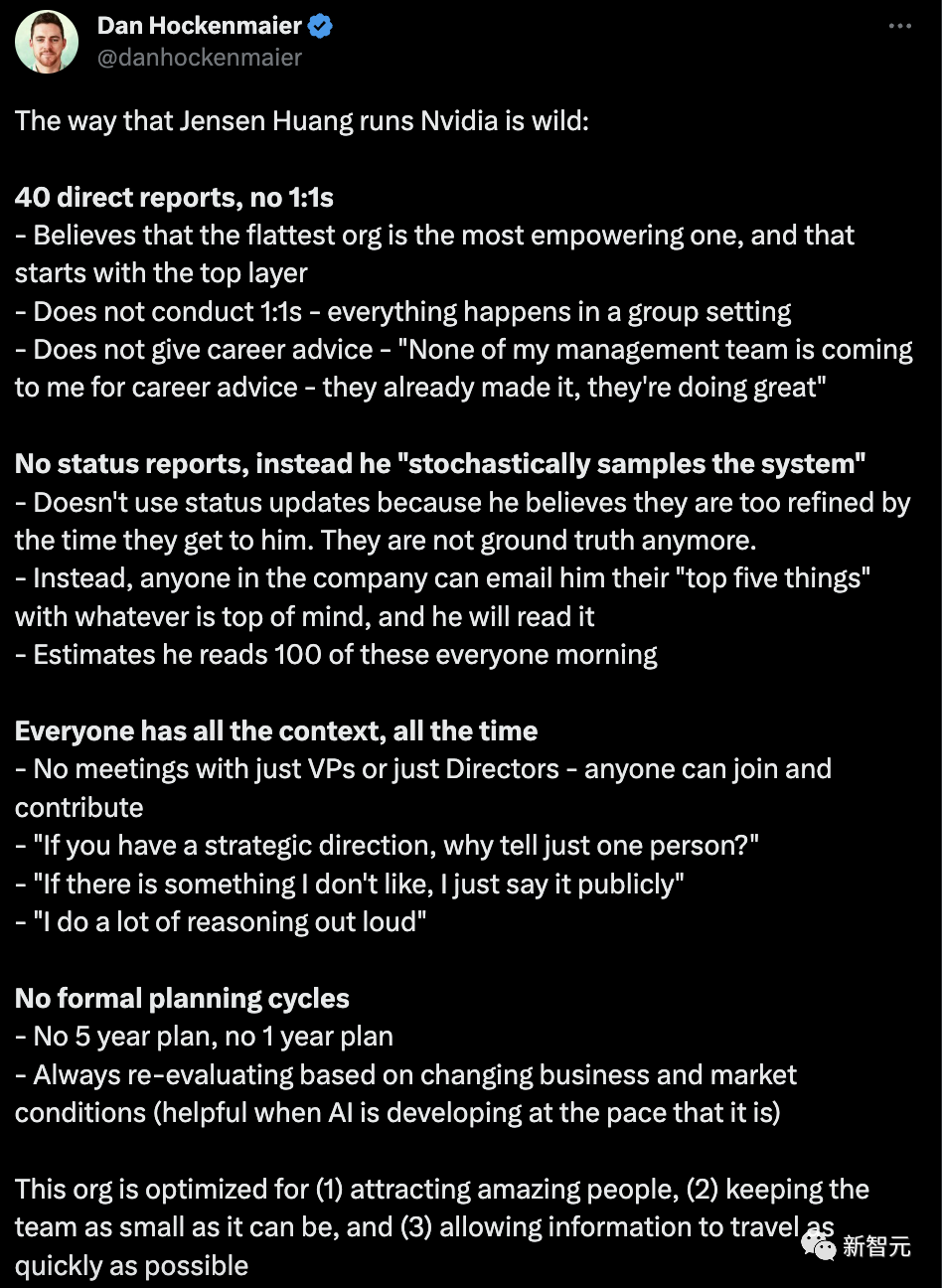

Directly managing 40 subordinates, no 1:1 meetings

Nvidia does not need a "pyramid" style of management but instead delegates power to everyone.

Huang believes that a flat organization is the most capable, because information can spread quickly.

The first layer of the organization, senior management, has to think the most comprehensively. He never gives career advice to senior executives, because no one on the management team asks him for it.

"They have already succeeded, they are doing well."

In addition, Huang never holds one-on-one meetings; everything is discussed in group discussions.

"If you have a strategic direction, why tell only one person? Everyone should know."

Everyone can know all the information at any time

Within the company, there are no vice president meetings or director meetings.

Huang said that the meetings he attends include people from different organizations, recent college graduates—anyone can attend and express their opinions.

No status reports, but email "top five things"

At Nvidia, no one needs to submit status reports, because Huang regards them as "meta-information" that is too refined to be of much use.

Instead, anyone in the company can send him their "top five things" via email.

He reads over 100 emails every morning to understand everyone's top five things—what you've learned, what you've observed, what you're about to do—whatever it is, that's what's important.

No formal regular plans

Planning is driven by bottom-up ideas from the company's best engineers.

Huang said, "For me, there are no 5-year plans, no 1-year plans; they are re-evaluated based on constantly changing business and market conditions."

In summary, Nvidia's goal in optimizing the organizational structure is to: (1) attract outstanding talent; (2) maintain the smallest possible team size; (3) spread information as quickly as possible.

Nvidia's VP of deep learning research, Bryan Catanzaro, has confirmed that all of this is true.

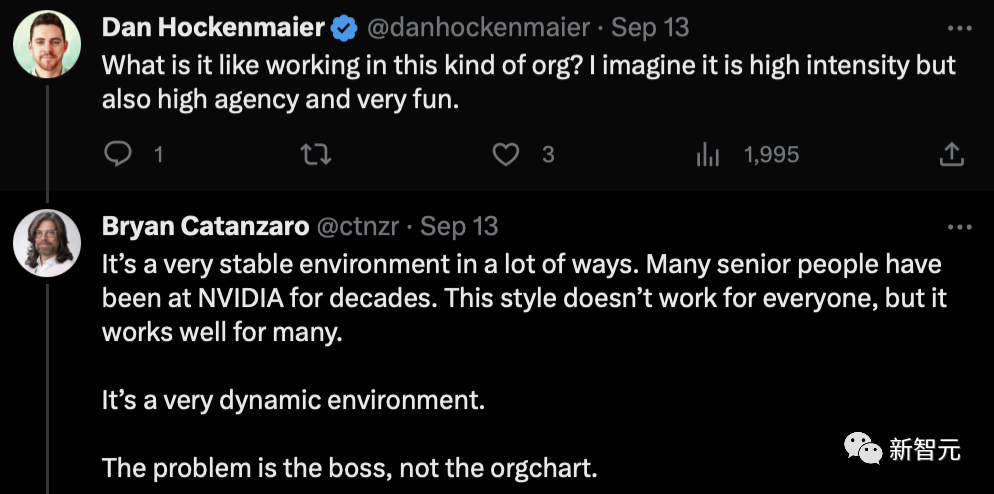

So, what is it like to work in such an organization?

Bryan said that in many ways it is a very stable environment: many senior employees have been at Nvidia for decades. The style does not suit everyone, but it works very well for many people. It is also a dynamic environment.

Unlike Musk, Huang rarely talks publicly about his management methods.

In a speech at National Taiwan University this year, Huang recounted three stories that shaped what Nvidia is today. Each reflects his own decision-making and judgment, and each decision was carefully considered.

In the first, Nvidia had signed a contract with SEGA to build a game console for them. After a year of development, it became clear that the technology architecture being used was wrong.

Huang also realized that even a corrected design would be of no use, because the product was not compatible with Microsoft's Windows system.

So he contacted the CEO of SEGA and explained that Nvidia could not complete the project. Fortunately, SEGA helped, and Nvidia avoided bankruptcy.

The second story is CUDA, the GPU-accelerated computing technology Nvidia announced in 2007.

To promote CUDA worldwide, Nvidia established a dedicated conference called GTC. After years of effort, the technology became an important driving force in the AI revolution.

The third story is the difficult decision to abandon the mobile phone market and focus on graphics cards.

As Huang said, "Strategic retreat, sacrifice, and deciding what to give up are the core of success, a very critical core."

An engineer from X said that this management style is very similar to the way Musk runs X.

Some netizens joked that Huang's management style could be modeled as a multimodal, agent-based large-model system.

If Huang were to publish a biography as Musk has, I believe everyone would be willing to read it.

Next, let's look at how Nvidia's GPUs became the "golden shovel" of the big tech companies.

Global GPU Shortage Exceeds 400,000 Units

The shortage of H100 has long made Silicon Valley giants panic!

Sam Altman once revealed that GPUs are so scarce that he hopes people will use ChatGPT less. 😂

"GPUs are in very short supply, and the fewer people using our product, the better."

Altman stated that due to GPU limitations, OpenAI has postponed several short-term plans (fine-tuning, dedicated capacity, 32k context window, multimodal).

OpenAI co-founder and scientist Andrej Karpathy also revealed that who has how many H100s has become the top gossip in Silicon Valley.

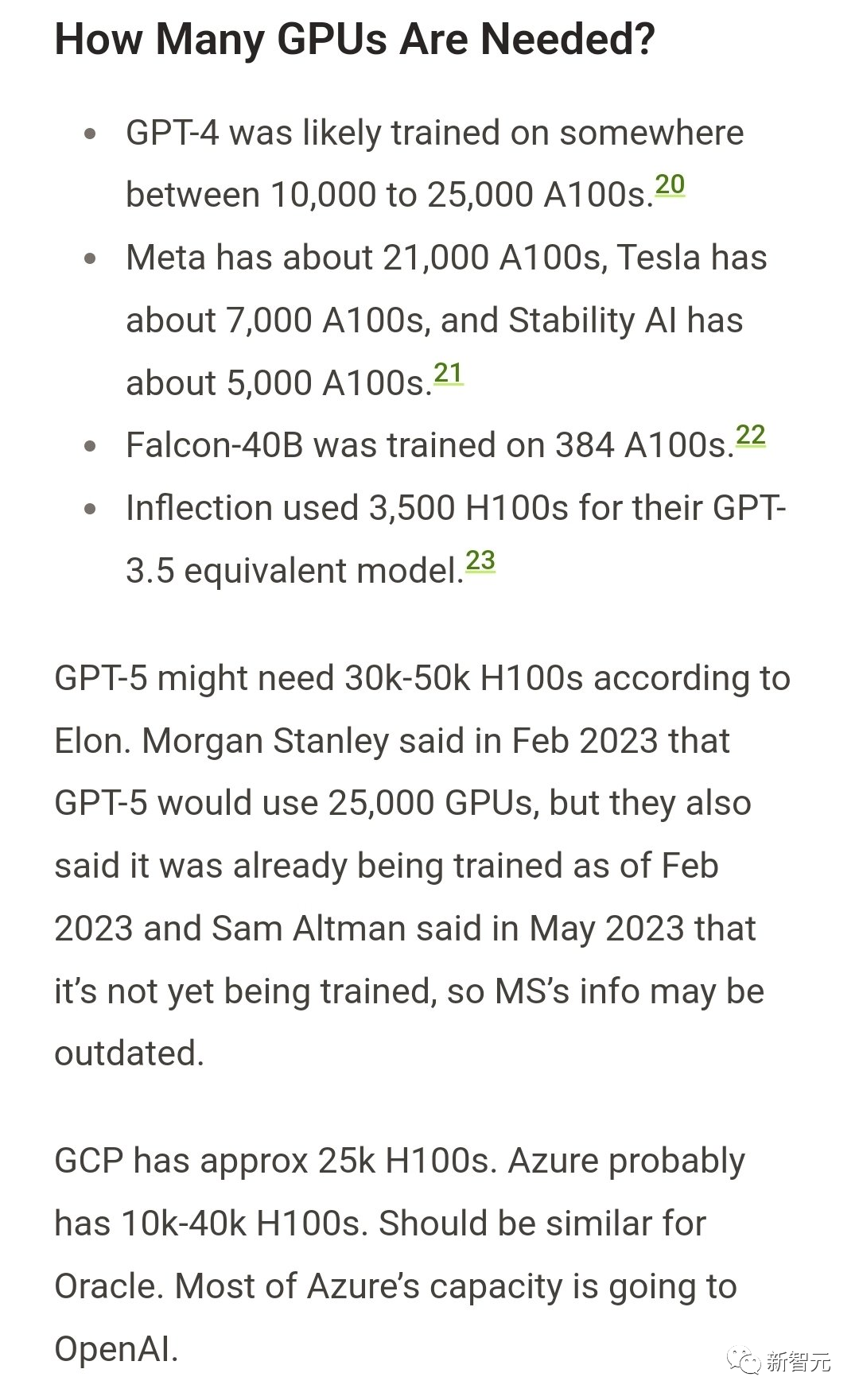

This widely circulated image once made the following estimates:

- GPT-4 may have been trained on approximately 10,000-25,000 A100s

- Meta has approximately 21,000 A100s

- Tesla has approximately 7,000 A100s

- Stability AI has approximately 5,000 A100s

- Falcon-40B was trained on 384 A100s

- Inflection used 3,500 H100s to train a model with capabilities similar to GPT-3.5

Now it is not only tech companies lining up to buy H100s: Saudi Arabia and the United Arab Emirates have also stepped in, buying several thousand H100 GPUs at once.

Musk bluntly stated that it is now as difficult to buy H100 as it is to reach the sky.

According to Musk, GPT-5 may require 30,000-50,000 H100s. Previously, Morgan Stanley stated that GPT-5 used 25,000 GPUs and has been training since February, but Sam Altman later clarified that GPT-5 has not yet been trained.

An article titled "Nvidia H100 GPU: Supply and Demand" once speculated that the large-scale H100 cluster capacity of small and large cloud providers is about to be exhausted, and the demand for H100 is expected to continue at least until the end of 2024.

Microsoft's annual report also emphasized to investors that GPUs are the "key raw material" for the rapid growth of its cloud business. If the necessary infrastructure cannot be obtained, there may be a risk of data center interruptions.

It is speculated that OpenAI may need 50,000 H100s, Inflection 22,000, and Meta 25,000, while the large cloud service providers (Azure, Google Cloud, AWS, Oracle) may each need 30,000.

Lambda and CoreWeave, as well as other private clouds, may collectively need 100,000. Anthropic, Helsing, Mistral, and Character may each need 10,000.

Overall, global companies need approximately 432,000 H100s. At approximately $35,000 per H100, meeting that demand would cost about $15 billion.
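The demand estimate can be tallied from the figures listed above. The sketch below is illustrative only: the article does not say whether the 30,000 figure for large cloud providers is per provider or a combined total, so both readings are computed. Under either reading the itemized figures sum to less than the 432,000 headline number, so that number presumably includes demand not itemized here.

```python
# Itemized H100 demand as quoted in the article.
FIXED_DEMAND = {
    "OpenAI": 50_000,
    "Inflection": 22_000,
    "Meta": 25_000,
    "Lambda, CoreWeave and other private clouds (combined)": 100_000,
}
BIG_CLOUDS = ["Azure", "Google Cloud", "AWS", "Oracle"]        # 30,000 quoted
STARTUPS = ["Anthropic", "Helsing", "Mistral", "Character"]    # 10,000 each


def itemized_total(clouds_each: bool) -> int:
    """Sum the listed demand; clouds_each selects the reading of the 30,000 figure."""
    cloud_demand = 30_000 * (len(BIG_CLOUDS) if clouds_each else 1)
    return sum(FIXED_DEMAND.values()) + cloud_demand + 10_000 * len(STARTUPS)


total_if_each = itemized_total(clouds_each=True)
total_if_combined = itemized_total(clouds_each=False)

# Cost of the headline demand figure at the quoted unit price.
HEADLINE_UNITS = 432_000
PRICE_USD = 35_000
total_cost = HEADLINE_UNITS * PRICE_USD

print(f"itemized, 30,000 per cloud: {total_if_each:,}")
print(f"itemized, 30,000 combined:  {total_if_combined:,}")
print(f"headline cost: ${total_cost / 1e9:.2f} billion")
```

The cost works out to $15.12 billion, consistent with the rounded $15 billion in the text.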

This does not include the large number of internet companies in China that need H800.

Moreover, H100 is not only in high demand, but also has a frighteningly high profit margin.

Industry experts once stated that the profit margin of Nvidia's H100 is close to 1000%.

References:

https://twitter.com/danhockenmaier/status/1701608618087571787

https://www.tomshardware.com/news/nvidia-sold-900-tons-of-h100-gpus-last-quarter
