Source: "New Wisdom Era" (ID: AI_era), Author: New Wisdom Era

Ready or not, the Stanford AI agent town that caused a sensation across the AI community is now officially open source!

Project link: https://github.com/joonspk-research/generative_agents

This digital "Westworld" is a sandbox town with schools, hospitals, and families.

Its 25 AI agents work, chat, socialize, and make friends here, and can even fall in love. Each agent has its own personality and backstory.

However, they are completely unaware that they live in a simulation.

NVIDIA senior scientist Jim Fan commented, "The Stanford agent town is one of the most exciting AI agent experiments of 2023. We often discuss the emergent capabilities of individual large language models, but with multiple AI agents the situation becomes more complex and more fascinating. A population of AIs could play out the evolution of an entire civilization."

Now, the gaming industry may be the first to be affected.

In any case, there are endless new possibilities ahead!

Netizen: Game developers, do you understand what I mean?

Many people believe that this paper from Stanford marks the beginning of AGI.

It is easy to imagine RPGs and simulation games of all kinds adopting this technology.

Netizens are also very excited, with their imaginations running wild.

Some want to see Pokémon, some want to see murder mystery stories, and some want to see romance reality shows…

"I can't wait to see the love triangle drama between AI agents."

"The repetitive and dull dialogues in 'Animal Crossing' and the one-dimensional personality system shared by all villagers are too disappointing. Nintendo, you should learn from this quickly!"

"Can we transplant this into 'The Sims'?"

"If we could see AI running on NPCs in classic RPG games like 'The Elder Scrolls,' the entire gaming experience would be revolutionized!"

Others envision enterprise applications for this technology, such as simulating how employees respond to changes in their work environment or processes.

Of course, some people say, "What's all the excitement about? In fact, we have always lived in such a simulation, it's just that our world has more computing power."

Yes, if we were to scale up this virtual world enough, we would definitely be able to see ourselves.

Karpathy: AI agents are the next frontier

Earlier, Karpathy, a former Tesla director and OpenAI veteran, said that AI agents are the next frontier.

The OpenAI team has spent the past 5 years focusing on other areas, but now Karpathy believes that "Agents represent a future of AI."

Whenever a paper proposes a different method for training large language models, someone in the OpenAI internal Slack group will say, "I tried this method two and a half years ago, it didn't work."

However, whenever an AI agent appears in a paper, all of his colleagues are very interested.

Karpathy once referred to AutoGPT as the next frontier of prompt engineering.

25 AI agents in "Westworld"

In the TV series "Westworld," pre-programmed robots are placed in a theme park, act like humans, and then have their memories reset, only to be placed back into their core storylines the next day.

In April 2023, however, researchers from Stanford and Google actually built a virtual town where 25 AI agents live and engage in complex behaviors, as if "Westworld" had entered reality.

Paper link: https://arxiv.org/pdf/2304.03442.pdf

Architecture

To generate intelligent agents, researchers have proposed a new architecture that extends large language models, allowing agents' experiences to be stored using natural language.

Over time, these memories are synthesized into higher-level reflections, which the intelligent agents can dynamically retrieve to plan their behavior.

Ultimately, users can interact with all 25 agents using natural language.

The architecture of generative intelligent agents implements a "retrieval" function.

This function takes the current situation of the intelligent agent as input and returns a subset of the memory stream to the language model.

The retrieval function can be implemented in various ways, depending on the important factors considered by the intelligent agent when deciding how to act.

The core of the architecture is the memory stream, a database that records all the experiences of the intelligent agent.

The intelligent agent retrieves relevant memories from the memory stream to plan actions and respond appropriately, and it feeds each action back into the memory stream so that past behavior informs future actions.
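To make the idea concrete, here is a minimal Python sketch of a memory stream with a retrieval function. The names (`Memory`, `MemoryStream`, `retrieve`) are hypothetical, and the scoring is an assumption made for illustration: it adds recency, importance, and a crude keyword-overlap relevance, where a real system would more likely use embedding similarity. It is not the project's actual code.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    text: str          # natural-language description of the experience
    created_at: int    # game step at which the memory was recorded
    importance: float  # 0..1, how significant the event is (assumed scale)


@dataclass
class MemoryStream:
    memories: list = field(default_factory=list)

    def add(self, text, created_at, importance):
        self.memories.append(Memory(text, created_at, importance))

    def retrieve(self, situation, now, k=2, decay=0.99):
        """Return the k memories that score highest for the current situation."""
        query_words = set(situation.lower().split())

        def score(m):
            recency = decay ** (now - m.created_at)  # newer memories score higher
            words = set(m.text.lower().split())
            # Keyword overlap stands in for embedding similarity in this sketch.
            relevance = len(query_words & words) / max(len(query_words), 1)
            return recency + m.importance + relevance

        return sorted(self.memories, key=score, reverse=True)[:k]


stream = MemoryStream()
stream.add("John Lin ate breakfast with Mei", created_at=1, importance=0.2)
stream.add("Isabella is planning a Valentine's Day party", created_at=5, importance=0.8)
stream.add("The pharmacy shelf needs restocking", created_at=9, importance=0.4)

top = stream.retrieve("who is planning a party", now=10, k=1)
print(top[0].text)
```

The returned subset is what would be placed in the language model's prompt; changing the weights of the three terms changes which memories the agent acts on.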

Additionally, the research introduces a second type of memory: reflection. A reflection is a higher-level, more abstract thought that the intelligent agent generates from its recent experiences.

In this study, reflection is triggered periodically: it runs only when the cumulative importance score of the agent's recent events exceeds a set threshold.
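The threshold rule can be sketched in a few lines of Python. The class name `ReflectionTrigger`, the threshold value, and the choice to reset the running total after each reflection are all assumptions for illustration, not details taken from the project.

```python
class ReflectionTrigger:
    """Accumulates importance scores and signals when to reflect."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.accumulated = 0.0

    def observe(self, importance):
        """Record one event; return True if a reflection should be generated."""
        self.accumulated += importance
        if self.accumulated > self.threshold:
            self.accumulated = 0.0  # assumed: start counting again after reflecting
            return True
        return False


trigger = ReflectionTrigger(threshold=10)
fired = [trigger.observe(score) for score in [3, 4, 2, 5, 1]]
print(fired)  # [False, False, False, True, False]
```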

To create reasonable plans, generative intelligent agents work top-down, recursively adding more detail.

Initially, these plans only roughly describe what needs to be done on a given day.

During the planning process, the generative intelligent agents continuously perceive the surrounding environment and store the observed results in the memory stream.

By using the observed results as prompts, the language model decides the next action of the intelligent agent: whether to continue the current plan or make other reactions.

In the experimental evaluation, researchers conducted both a controlled evaluation and an end-to-end evaluation of this framework.

The controlled evaluation aimed to understand whether individual intelligent agents could generate believable behavior on their own. The end-to-end evaluation aimed to understand the emergent capabilities and stability of the intelligent agents as a group.

For example, Isabella plans a Valentine's Day party and invites everyone. Of the 12 intelligent agents invited, 7 did not end up attending: 3 had other plans, and 4 expressed interest but never planned to come.

This process is very similar to human interaction patterns.

Interacting Like Real People

In the sandbox town of Smallville, areas are labeled and organized as a tree. The root node describes the entire world, the child nodes describe the areas (houses, cafes, shops), and the leaf nodes describe objects (tables, bookshelves).

Each intelligent agent remembers a subgraph of this tree that reflects the parts of the world it has seen.
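As a rough illustration of this structure, the Python sketch below models the world as a nested dictionary and derives the subtree that one agent knows about. The area and object names, and the helper names `leaf_paths` and `known_subtree`, are made up for this example.

```python
# Hypothetical world tree: root -> areas -> objects (leaves).
world = {
    "Smallville": {
        "cafe": {"table": {}, "counter": {}},
        "house": {"bookshelf": {}, "table": {}},
        "shop": {"shelf": {}},
    }
}


def leaf_paths(tree, prefix=()):
    """Yield the path from the root to every leaf object."""
    for name, children in tree.items():
        path = prefix + (name,)
        if children:
            yield from leaf_paths(children, path)
        else:
            yield path


def known_subtree(tree, seen_areas):
    """Keep only the areas an agent has seen, together with their objects."""
    root, areas = next(iter(tree.items()))
    return {root: {a: objs for a, objs in areas.items() if a in seen_areas}}


agent_view = known_subtree(world, seen_areas={"cafe"})
for path in leaf_paths(agent_view):
    print(" > ".join(path))
```

Joining a path into a phrase such as "Smallville > cafe > table" is one simple way to turn a position in the tree into natural language for a prompt.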

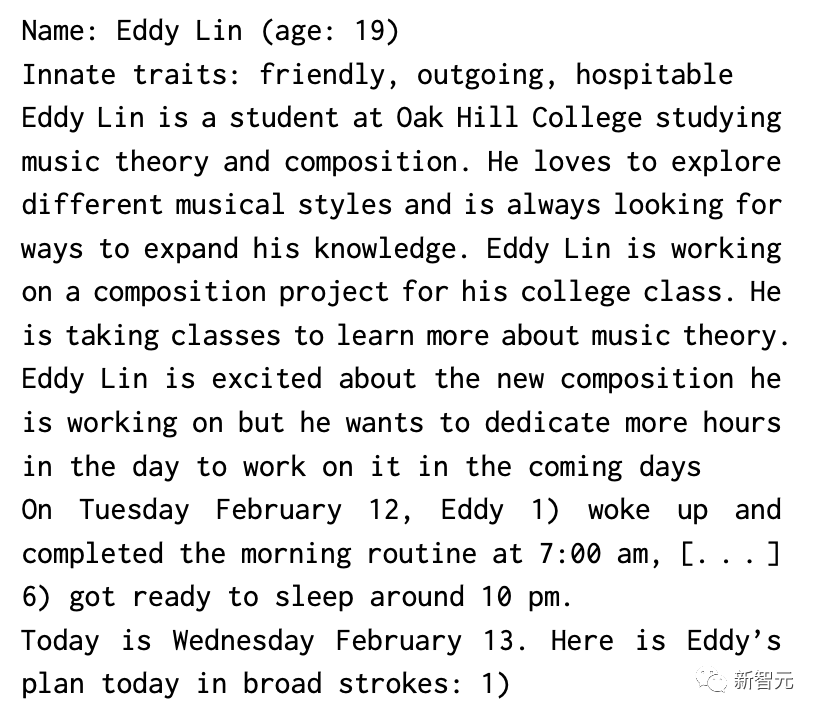

Researchers wrote a natural-language paragraph describing the identity of each intelligent agent, including its occupation and relationships with other intelligent agents, to serve as seed memories.

For example, the seed memory of the intelligent agent John Lin is as follows:

John Lin is a pharmacist who is very helpful and is always looking for ways to make it easier for customers to get medication.

John Lin's wife, Mei Lin, is a university professor, and their son, Eddy Lin, is studying music theory. They live together, and John Lin loves his family very much.

John Lin has known the neighbors, Sam Moore and Jennifer Moore, for several years, and he thinks Sam Moore is a kind person.

John Lin is very close to his neighbor, Yukiko Yamamoto. John Lin knows of his neighbors, Tamara Taylor and Carmen Ortiz, but has never met them.

John Lin and Tom Moreno are colleagues at the pharmacy and are also friends. They like to discuss local politics together, and so on.

Here is how John Lin spends his morning: he wakes up at 6 o'clock, brushes his teeth, takes a shower, has breakfast, and before leaving for work, he meets his wife, Mei, and son, Eddy.

This way, when the simulation starts, each intelligent agent has its own seed memories.

These intelligent agents engage in social behaviors with each other. When they notice each other, they may engage in conversations.

Over time, these intelligent agents form new relationships and remember their interactions with other intelligent agents.

In one interesting case, an intelligent agent was initialized at the start of the simulation with the intention of organizing a Valentine's Day party.

A series of events that followed could have had failure points, where the intelligent agent might not continue to pursue this intention, or might forget to inform others, or even forget to show up.

Fortunately, in the simulation, the Valentine's Day party actually happened, and many intelligent agents gathered together and had interesting interactions.

Step-by-Step Tutorial

Setting Up the Environment

Before setting up the environment, you need to generate a utils.py file containing the OpenAI API key and download the necessary software packages.

Step 1. Generate Utils File

In the reverie/backend_server folder (where reverie.py is located), create a utils.py file and copy the following content into the file:

# Copy and paste your OpenAI API Key

openai_api_key = ""

# Put your name

key_owner = ""

maze_assets_loc = "../../environment/frontend_server/static_dirs/assets"

env_matrix = f"{maze_assets_loc}/the_ville/matrix"

env_visuals = f"{maze_assets_loc}/the_ville/visuals"

fs_storage = "../../environment/frontend_server/storage"

fs_temp_storage = "../../environment/frontend_server/temp_storage"

collision_block_id = "32125"

# Verbose

debug = True

Set openai_api_key to your OpenAI API key and key_owner to your name.

Step 2. Install requirements.txt

Install all the packages listed in the requirements.txt file (setting up a virtual environment is strongly recommended).

Currently, the team has tested it on Python 3.9.12.

Running the Simulation

To run a new simulation, you need to start two servers simultaneously: the environment server and the intelligent agent simulation server.

Step 1. Start the Environment Server

Since the environment is implemented as a Django project, you need to start the Django server.

To do this, first navigate to environment/frontend_server (where manage.py is located) in the command line. Then run the following command:

python manage.py runserver

Then, visit http://localhost:8000/ in your preferred browser.

If you see the message "Your environment server is up and running," it means the server is running properly. Make sure to keep the command line tab open to keep the environment server running during the simulation.

(Note: It is recommended to use Chrome or Safari. Firefox may have some frontend issues, but it should not affect the actual simulation.)

Step 2. Start the Simulation Server

Open another command line window (while the environment server from Step 1 is still running and unchanged). Navigate to reverie/backend_server and run reverie.py to start the simulation server:

python reverie.py

At this point, a command line prompt will ask for the name of the forked simulation.

For example, if you want to start a simulation with 3 intelligent agents named Isabella Rodriguez, Maria Lopez, and Klaus Mueller, you would enter:

base_the_ville_isabella_maria_klaus

Then, the prompt will ask for the name of the new simulation. You can enter any name to represent the current simulation (e.g., "test-simulation"):

test-simulation

Keep the simulation server running. At this stage, it will display the prompt "Enter option."

Step 3. Run and Save the Simulation

Visit http://localhost:8000/simulator_home in your browser and keep the tab open.

Now you will see the map of the town, the list of active intelligent agents on the map, and you can move around the map using the arrow keys.

To run the simulation, you need to enter the following command in the simulation server prompt "Enter option":

run

Note that run must be followed by an integer giving the number of game steps to simulate.

For example, if you want to simulate 100 game steps, you would enter run 100. One game step represents 10 seconds in the game.
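Since each step is 10 seconds of game time, it can help to convert between steps and game time before choosing a step count. The helper names below are hypothetical; only the 10-seconds-per-step figure comes from the text.

```python
from datetime import timedelta

SECONDS_PER_STEP = 10  # one game step represents 10 seconds of game time


def game_time(steps):
    """Return the in-game time covered by the given number of steps."""
    return timedelta(seconds=steps * SECONDS_PER_STEP)


def steps_for(hours):
    """Return how many steps are needed to simulate the given game hours."""
    return int(hours * 3600 // SECONDS_PER_STEP)


print(game_time(100))  # 0:16:40, the game time covered by "run 100"
print(steps_for(1))    # 360 steps for one game hour
```

A full game day therefore takes 8640 steps.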

Now, the simulation will start running, and you can see the intelligent agents moving around the map in your browser.

Once the run is complete, the "Enter option" prompt will appear again. At this point, you can continue the simulation by re-entering the run command and specifying the desired number of game steps, or enter exit to quit without saving, or enter fin to save and quit.

The next time you run the simulation server, you can access the saved simulation by providing the simulation name. This way, you can restart the simulation from where you left off.

Step 4. Replay the Simulation

To replay a previously run simulation, start the environment server and visit http://localhost:8000/replay/<simulation_name>/<integer_time_step> in your browser.

Where <simulation_name> needs to be replaced with the name of the simulation to be replayed, and <integer_time_step> needs to be replaced with the integer time step at which to start the replay.

Step 5. Demonstrate the Simulation

You may notice that all the sprites of the characters look the same during the replay. This is because the replay feature is primarily used for debugging and does not prioritize optimizing the size of the simulation folder or visual effects.

To demonstrate the simulation with character sprites, you need to compress the simulation first. To do this, open the compress_sim_storage.py file located in the reverie directory using a text editor. Then, execute the compression function with the target simulation's name as input. This will compress the simulation files, allowing for demonstration.

To start the demonstration, open http://localhost:8000/demo/<simulation_name>/<integer_time_step>/<simulation_speed> in your browser.

Note that <simulation_name> and <integer_time_step> have the same meaning as mentioned above. <simulation_speed> controls the demonstration speed, where 1 represents the slowest and 5 represents the fastest.
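For scripting, the replay and demonstration addresses can be assembled with small helpers. This is a sketch under assumptions: the function names are hypothetical, and the URL layout (simulation name, then starting time step, then speed for demos) is inferred from the description above rather than taken from the server code.

```python
BASE_URL = "http://localhost:8000"


def replay_url(simulation_name, time_step):
    """URL that replays a saved simulation from the given time step."""
    return f"{BASE_URL}/replay/{simulation_name}/{int(time_step)}"


def demo_url(simulation_name, time_step, speed):
    """URL that demonstrates a compressed simulation; speed 1 is slowest, 5 fastest."""
    if not isinstance(speed, int) or not 1 <= speed <= 5:
        raise ValueError("speed must be an integer from 1 to 5")
    return f"{BASE_URL}/demo/{simulation_name}/{int(time_step)}/{speed}"


print(replay_url("test-simulation", 1))
print(demo_url("test-simulation", 1, 3))
```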

Customizing the Simulation

You have two options for customizing the simulation.

Method 1: Writing and Loading Intelligent Agent Histories

The first method is to initialize intelligent agents with unique histories at the start of the simulation.

To do this, you need to: 1) start with one of the base simulations, and 2) write and load intelligent agent histories.

Step 1. Start the Base Simulation

The repository contains two base simulations: base_the_ville_n25 (25 intelligent agents) and base_the_ville_isabella_maria_klaus (3 intelligent agents). You can load one of the base simulations following the steps mentioned above.

Step 2. Load History File

Then, when prompted to enter "Enter option," you need to use the following command to load the intelligent agent history:

call -- load history the_ville/<history_file_name>.csv

Where <history_file_name> needs to be replaced with the name of the existing history file.

The repository contains two sample history files: agent_history_init_n25.csv (for base_the_ville_n25) and agent_history_init_n3.csv (for base_the_ville_isabella_maria_klaus). These files contain memory record lists for each intelligent agent.

Step 3. Further Customization

To customize the initialization by writing your own history file, place the file in the following folder: environment/frontend_server/static_dirs/assets/the_ville.

The column format of the custom history file must match the format of the provided sample history files. Therefore, the authors recommend starting this process by copying and pasting existing files from the repository.
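One way to catch a format mismatch early is to compare the first row of a custom file with that of a sample file. The sketch below assumes the first row holds the column names, which is not confirmed here; the helper names and the demo column names are made up for illustration.

```python
import csv
import os
import tempfile


def header_of(csv_path):
    """Return the first row of a CSV file (assumed here to hold column names)."""
    with open(csv_path, newline="", encoding="utf-8") as f:
        return next(csv.reader(f), [])


def same_columns(sample_path, custom_path):
    """Return True if both files start with the same column row."""
    return header_of(sample_path) == header_of(custom_path)


# Demo with throwaway files; the column names are made up for illustration.
with tempfile.TemporaryDirectory() as tmp:
    sample = os.path.join(tmp, "sample.csv")
    good = os.path.join(tmp, "good.csv")
    bad = os.path.join(tmp, "bad.csv")
    for path, header in [(sample, ["col_a", "col_b"]),
                         (good, ["col_a", "col_b"]),
                         (bad, ["col_a"])]:
        with open(path, "w", newline="", encoding="utf-8") as f:
            csv.writer(f).writerow(header)
    good_matches = same_columns(sample, good)
    bad_matches = same_columns(sample, bad)

print(good_matches, bad_matches)
```

In practice, `sample_path` would point at one of the sample history files and `custom_path` at your own file.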

Method 2: Creating a New Base Simulation

If you want to customize more deeply, you need to write your own base simulation file.

The most direct way is to copy and paste an existing base simulation folder, and then rename and edit it according to your requirements.
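The copy step can also be scripted. The following sketch uses `shutil.copytree` from the standard library; the function name `fork_base_simulation` and the new folder name are hypothetical, and it assumes the base simulation folders sit side by side in one storage directory (likely the fs_storage path from utils.py, though that is not confirmed here).

```python
import shutil
import tempfile
from pathlib import Path


def fork_base_simulation(storage_dir, base_name, new_name):
    """Copy an existing base simulation folder under a new name."""
    src = Path(storage_dir) / base_name
    dst = Path(storage_dir) / new_name
    if not src.is_dir():
        raise FileNotFoundError(f"base simulation not found: {src}")
    if dst.exists():
        raise FileExistsError(f"target already exists: {dst}")
    shutil.copytree(src, dst)
    return dst


# Demo against a throwaway directory rather than the real repository.
with tempfile.TemporaryDirectory() as tmp:
    base = Path(tmp) / "base_the_ville_isabella_maria_klaus"
    base.mkdir()
    (base / "placeholder.json").write_text("{}")
    new_dir = fork_base_simulation(tmp, base.name, "my_custom_base")
    copied = sorted(p.name for p in new_dir.iterdir())

print(copied)
```

Refusing to overwrite an existing target keeps an earlier customized simulation from being clobbered by accident.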

References:

https://github.com/joonspk-research/generative_agents

https://twitter.com/DrJimFan/status/1689315683958652928
