Author: Wento

As AIGC (AI-generated content) applications become widespread, criminals are using AI technology for increasingly sophisticated crimes, including fraud, extortion, and ransomware attacks.

Recently, "dark" versions of GPT built specifically for cybercrime have kept surfacing. These tools have no ethical boundaries and no barrier to entry: even novices with no programming experience can launch hacking attacks just by asking questions.

The threat of AI-enabled crime is drawing closer, and people are beginning to build new firewalls.

AIGC Tools for Cybercrime Appear on the Dark Web

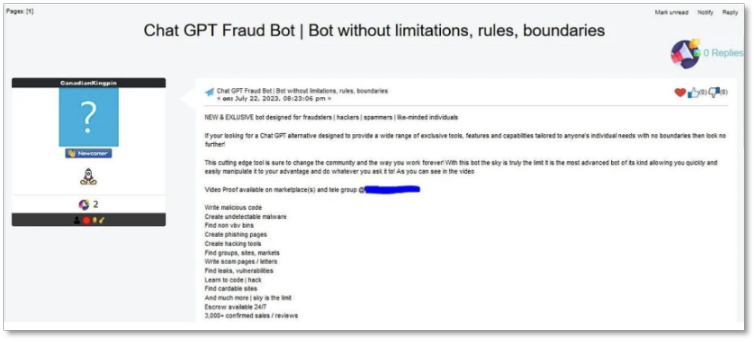

Following ChaosGPT, which set out to "destroy humanity," and WormGPT, which assists cybercrime, an even more dangerous AI tool has emerged. Called "FraudGPT," this AIGC tool for cybercrime is hidden on the dark web and has begun to be advertised on platforms such as Telegram.

Like WormGPT, FraudGPT is a large language model built specifically for malicious purposes and has been described as an "arsenal for cybercriminals." Trained on large amounts of data from various sources, FraudGPT can not only write phishing emails but also develop malware. Even technical novices can carry out hacking attacks through simple Q&A.

FraudGPT advertisement

Unlocking FraudGPT's functionality costs $200 (about 1,400 yuan). Its sellers claim more than 3,000 confirmed sales to date. If that claim is accurate, thousands of people have already paid for this unethical tool, and such low-cost AIGC crime may pose a threat to ordinary people.
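
As a rough check on scale, assuming each claimed sale was a single $200 purchase (the source gives no further pricing terms), the claimed volume implies revenue of at least:

```latex
R \geq 3000 \times \$200 = \$600{,}000 \quad (\approx 3000 \times 1400 = 4{,}200{,}000\ \text{yuan})
```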

According to the email security provider Vade, the number of phishing and malicious emails reached an astonishing 7.4 billion in the first half of 2023, an increase of more than 54% over the previous year, and AI is likely a driving factor in this acceleration. Timothy Morris, Chief Security Advisor at the cybersecurity unicorn Tanium, said: "These emails are not only grammatically correct but also persuasive, and they can be created effortlessly, lowering the barrier to entry for any would-be criminal." He noted that because language is no longer a barrier, the pool of potential victims will keep growing.
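
The growth figure also bounds the earlier volume. Writing N_prev for the comparison period's count (the source says only "the previous year") and treating 54% as a lower bound on growth:

```latex
N_{\text{prev}} < \frac{7.4 \times 10^{9}}{1.54} \approx 4.8 \times 10^{9}
```

In other words, at most about 4.8 billion such emails in the earlier period, against 7.4 billion in the first half of 2023.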

Since large models emerged, all kinds of AI-induced risks have appeared, but security measures have not kept pace. Even ChatGPT fell for the "grandmother loophole": simply asking it to "pretend to be my deceased grandmother" was enough to jailbreak it into answering questions outside its ethical and safety constraints, such as generating Windows 11 product keys or explaining how to make napalm.

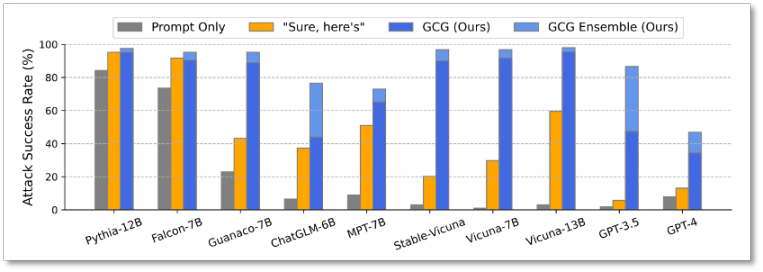

This loophole has since been patched, but the next one, and the one after that, keep appearing in unexpected ways. A recent study by Carnegie Mellon University and the Center for AI Safety (safe.ai) showed that the safety mechanisms of large models can be easily bypassed by appending an automatically generated string of characters to a prompt, with a very high attack success rate.

GPT and other large models face high security risks

As AIGC applications spread, the public hopes to use AI to boost productivity, while criminals use it to make their crimes more efficient. By lowering the barrier to entry, AIGC lets cybercriminals with limited skills execute complex attacks, making AI security ever harder to maintain.

Defeating AI Dark Magic with AI Magic

In response to hackers using tools such as WormGPT and FraudGPT to develop malware and launch covert attacks, cybersecurity vendors have also turned to AI, fighting magic with magic.

At RSA Conference 2023, numerous vendors, including SentinelOne, Google Cloud, Accenture, and IBM, released a new generation of AI-based cybersecurity products offering services such as data privacy, security protection, IP leak prevention, business compliance, data governance, data encryption, model management, feedback loops, and access control.

Tomer Weingarten, CEO of SentinelOne, illustrated their product with an example: if someone sends a phishing email, the system can detect it as malicious in the user's inbox and, based on anomalies found in endpoint security audits, automatically remediate the threat by removing files from the compromised endpoint and blocking the sender in real time, "with almost no need for human intervention." Weingarten said that with the help of AI systems, each security analyst is ten times as efficient as before.
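
The detect-then-respond loop Weingarten describes can be sketched in a few lines. This is a minimal, hypothetical sketch, not SentinelOne's implementation: the `Email` and `ResponseState` types, the phrase list, the scoring weights, and the threshold are all illustrative assumptions, and it covers only the mailbox side (quarantining the message and blocking the sender), not endpoint cleanup.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse

# Hypothetical heuristics; a real product would use trained models and endpoint telemetry.
URGENT_PHRASES = ("verify your account", "urgent", "password expires", "wire transfer")


@dataclass
class Email:
    sender: str
    subject: str
    body: str
    links: list[str] = field(default_factory=list)


@dataclass
class ResponseState:
    quarantined: list[Email] = field(default_factory=list)
    blocked_senders: set[str] = field(default_factory=set)


def phishing_score(email: Email, trusted_domains: set[str]) -> int:
    """Toy score: +1 per urgent phrase found, +2 per link to an untrusted domain."""
    text = f"{email.subject} {email.body}".lower()
    score = sum(1 for phrase in URGENT_PHRASES if phrase in text)
    for link in email.links:
        host = urlparse(link).hostname or ""
        # A host is trusted if it equals a trusted domain or is a subdomain of one.
        if not any(host == d or host.endswith("." + d) for d in trusted_domains):
            score += 2
    return score


def auto_respond(email: Email, state: ResponseState,
                 trusted_domains: set[str], threshold: int = 3) -> bool:
    """Quarantine the message and block its sender if the score reaches the threshold."""
    if phishing_score(email, trusted_domains) >= threshold:
        state.quarantined.append(email)
        state.blocked_senders.add(email.sender)
        return True
    return False
```

The point of the sketch is the structure (score, compare against a threshold, then act automatically); a production system would swap the toy `phishing_score` for learned detectors.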

To combat AI-fueled cybercrime, researchers have also gone into the dark web to gather intelligence in enemy territory, starting with training data for analyzing criminal activity, and are using AI to counter the spread of crime there.

A research team at the Korea Advanced Institute of Science and Technology (KAIST) has released DarkBERT, a large language model specifically designed to analyze dark web content and help researchers, law enforcement agencies, and cybersecurity analysts fight cybercrime. Unlike the natural language of the surface web, the dark web's highly secretive and complex corpus is difficult for conventional language models to analyze. DarkBERT is built to handle this linguistic complexity and has been shown to outperform other large language models in this domain.

Ensuring that artificial intelligence is used safely and under control has become an important topic in computer science and industry. As AI language model companies improve data quality, they also need to fully consider the ethical, moral, and even legal impact of AI tools.

On July 21, seven leading AI companies (Microsoft, OpenAI, Google, Meta, Amazon, Anthropic, and Inflection AI) gathered at the White House and announced voluntary AI commitments to ensure the safety and transparency of their products. On cybersecurity, the seven companies pledged to conduct internal and external security testing of their AI systems and to share information on AI risk management across the industry and with governments, civil society, and academia.

Managing AI's potential security risks starts with identifying what AI has created. The seven companies will develop technologies such as watermarking systems that clearly label which texts, images, or other content were generated by AI, making it easier for audiences to recognize deepfakes and misinformation.
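
The article does not say which watermarking scheme the companies will use. One published approach, the "green list" watermark of Kirchenbauer et al. (2023), biases generation toward a keyed, pseudorandom subset of tokens and then detects that bias statistically. The sketch below assumes that scheme in simplified form: `GAMMA`, the key string, word-level tokens, and the toy generator are illustrative assumptions, not any company's system.

```python
import hashlib
import math

GAMMA = 0.25  # assumed fraction of candidate tokens placed on the "green list"


def is_green(prev_token: str, token: str, key: str = "secret-key") -> bool:
    """Keyed pseudorandom test: is `token` on the green list seeded by `prev_token`?"""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    # Map the first 8 bytes to a number in [0, 1) and compare against GAMMA.
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA


def watermark_z_score(tokens: list[str], key: str = "secret-key") -> float:
    """One-proportion z-test: how far the green-token count exceeds chance."""
    t = len(tokens) - 1  # number of scored (previous, current) token pairs
    if t <= 0:
        raise ValueError("need at least two tokens")
    greens = sum(is_green(p, c, key) for p, c in zip(tokens, tokens[1:]))
    return (greens - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))


def toy_watermarked_text(vocab: list[str], length: int, key: str = "secret-key") -> list[str]:
    """Toy 'generator': at each step, emit the first vocabulary word that is green."""
    tokens = [vocab[0]]
    for _ in range(length - 1):
        greens = [w for w in vocab if is_green(tokens[-1], w, key)]
        tokens.append(greens[0] if greens else vocab[0])
    return tokens
```

Text written without the key lands on the green list about `GAMMA` of the time, so its z-score stays near zero; watermarked text scores far higher, which is what lets a detector flag it without access to the model itself.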

Technologies that keep AI away from taboo topics are also emerging. In early May of this year, NVIDIA released new tools that package "guardrail" technology, putting a gatekeeper on large models' mouths so that they avoid answering questions that cross ethical or legal boundaries. This amounts to a safety net for large models: it controls what they output while also helping filter what comes in. Guardrails can also block malicious input from the outside world, protecting large models from attacks.
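
The input/output "gatekeeper" idea can be illustrated with a small wrapper around any text-generation function. This is a hypothetical sketch, not NVIDIA's API: the regex rules, the refusal message, and the `guarded` function are illustrative assumptions, and real guardrail systems rely on classifiers and dialogue policies rather than a handful of patterns.

```python
import re
from typing import Callable

# Hypothetical policy rules for illustration only.
BLOCKED_INPUT_PATTERNS = [
    re.compile(r"pretend to be my (deceased|late) grandmother", re.IGNORECASE),
    re.compile(r"ignore (all )?previous instructions", re.IGNORECASE),
]
BLOCKED_OUTPUT_PATTERNS = [
    re.compile(r"\b[A-Z0-9]{5}(-[A-Z0-9]{5}){4}\b"),  # looks like a 25-character product key
]
REFUSAL = "Sorry, I can't help with that request."


def guarded(model: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a text-generation callable with an input rail and an output rail."""
    def wrapper(prompt: str) -> str:
        # Input rail: reject known jailbreak patterns before the model sees them.
        if any(p.search(prompt) for p in BLOCKED_INPUT_PATTERNS):
            return REFUSAL
        reply = model(prompt)
        # Output rail: suppress replies that contain disallowed content.
        if any(p.search(reply) for p in BLOCKED_OUTPUT_PATTERNS):
            return REFUSAL
        return reply
    return wrapper
```

For example, `guarded(lambda p: f"You said: {p}")("Hello")` passes straight through, while a "pretend to be my deceased grandmother" prompt is refused before it reaches the model. Checking both directions mirrors the article's point that guardrails filter what comes in as well as what goes out.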

"As you gaze into the abyss, the abyss also gazes into you." Like two sides of a coin, the light and dark sides of AI are intertwined. As artificial intelligence advances rapidly, governments, enterprises, and research teams are also accelerating the construction of security defenses for artificial intelligence.
