Author: YBB Capital Researcher Zeke

Preface

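In previous articles we have discussed our views on the current state of AI Memes and the future of AI Agents several times. Even so, the AI Agent narrative has moved so fast and shifted so sharply that it is hard to keep up. In the roughly two months since Truth Terminal kicked off the "Agent Summer", the story around combining AI and Crypto has changed almost every week. Recently, market attention has turned to technology-driven "framework" projects, a niche that in just the past few weeks has produced several dark horses with market caps above 100 million, and in some cases above 1 billion. These projects have also spawned a new asset-issuance paradigm: a project issues a token against its GitHub repository, and Agents built on the framework can in turn issue tokens of their own. The framework forms the base layer and Agents sit on top. It looks like an asset-issuance platform, but what is actually emerging is an infrastructure model unique to the AI era. How should we read this new trend? Starting with an introduction to frameworks and drawing on my own thinking, this article examines what AI frameworks actually mean for Crypto.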

I. What Is a Framework?

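By definition, an AI framework is a low-level development tool or platform that bundles a set of prebuilt modules, libraries, and tools to simplify building complex AI models. These frameworks usually also include functionality for processing data, training models, and making predictions. Put simply, you can think of a framework as the operating system of the AI era, much like Windows and Linux on the desktop, or iOS and Android on mobile. Each framework has its own strengths and weaknesses, and developers choose among them according to their specific needs.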

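Although "AI framework" is still a new term in Crypto, the frameworks themselves are not new: counting from Theano, which appeared in 2010, AI frameworks have been developing for nearly 14 years. In traditional AI, both academia and industry already have very mature frameworks to choose from, such as Google's TensorFlow, Meta's PyTorch, Baidu's PaddlePaddle, and ByteDance's MagicAnimate, each with strengths suited to different scenarios.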

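The framework projects now emerging in Crypto were built to meet the wave of Agent demand at the start of this AI cycle. They then spread into other Crypto sectors, eventually producing AI frameworks for different niches. Let's unpack this using a few of the mainstream frameworks in the space as examples.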

1.1 Eliza

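Take ai16z's Eliza first. It is a multi-agent simulation framework designed for creating, deploying, and managing autonomous AI Agents. It is written in TypeScript, which gives it broader compatibility and makes API integration easier.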

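According to the official documentation, Eliza mainly targets social media. For multi-platform integration, it offers full-featured Discord integration including voice channels, automated X/Twitter accounts, Telegram integration, and direct API access. For media content, it supports reading and analyzing PDF documents, extracting and summarizing linked content, audio transcription, video processing, image analysis and description, and conversation summarization.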

Eliza currently supports four main categories of use cases:

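1. AI assistant applications: customer support agents, community managers, personal assistants;

2. Social media personas: automated content creators, engagement bots, brand representatives;

3. Knowledge workers: research assistants, content analysts, document processors;

4. Interactive characters: role-playing characters, educational tutors, entertainment bots.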

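Models currently supported by Eliza:

1. Local inference with open-source models such as Llama3, Qwen1.5, and BERT;

2. Cloud inference through OpenAI's API;

3. A default configuration of Nous Hermes Llama 3.1B;

4. Integration with Claude for complex queries.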
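To make the "one agent, many platforms, pluggable model" idea concrete, here is a minimal Python sketch. It is not Eliza's actual API (Eliza is written in TypeScript); every name below (`ModelProvider`, `Agent`, `ClientHub`, the echo models) is hypothetical, and the two "models" simply echo their prompt instead of running inference.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


class ModelProvider(Protocol):
    """Anything that turns a prompt into text: a local model or a cloud API."""

    def complete(self, prompt: str) -> str: ...


class EchoLocalModel:
    """Hypothetical stand-in for local inference with an open-source model."""

    def complete(self, prompt: str) -> str:
        return f"[local] {prompt}"


class EchoCloudModel:
    """Hypothetical stand-in for a hosted API reserved for harder queries."""

    def complete(self, prompt: str) -> str:
        return f"[cloud] {prompt}"


@dataclass
class Agent:
    name: str
    default_model: ModelProvider
    strong_model: ModelProvider

    def respond(self, platform: str, user: str, text: str, complex_query: bool = False) -> str:
        # Route hard queries to the stronger model, everything else to the default one.
        model = self.strong_model if complex_query else self.default_model
        prompt = f"You are {self.name}. {user} on {platform} says: {text}"
        return model.complete(prompt)


@dataclass
class ClientHub:
    """Feeds messages from several platform clients into one shared agent."""

    agent: Agent
    outbox: Dict[str, List[str]] = field(default_factory=dict)

    def on_message(self, platform: str, user: str, text: str, complex_query: bool = False) -> str:
        reply = self.agent.respond(platform, user, text, complex_query)
        # Keep replies grouped per platform so each client can deliver its own.
        self.outbox.setdefault(platform, []).append(reply)
        return reply
```

The same persona answers on every platform; only the model choice varies with the query, which mirrors the "cheap default plus stronger model for complex queries" split listed above.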

1.2 G.A.M.E

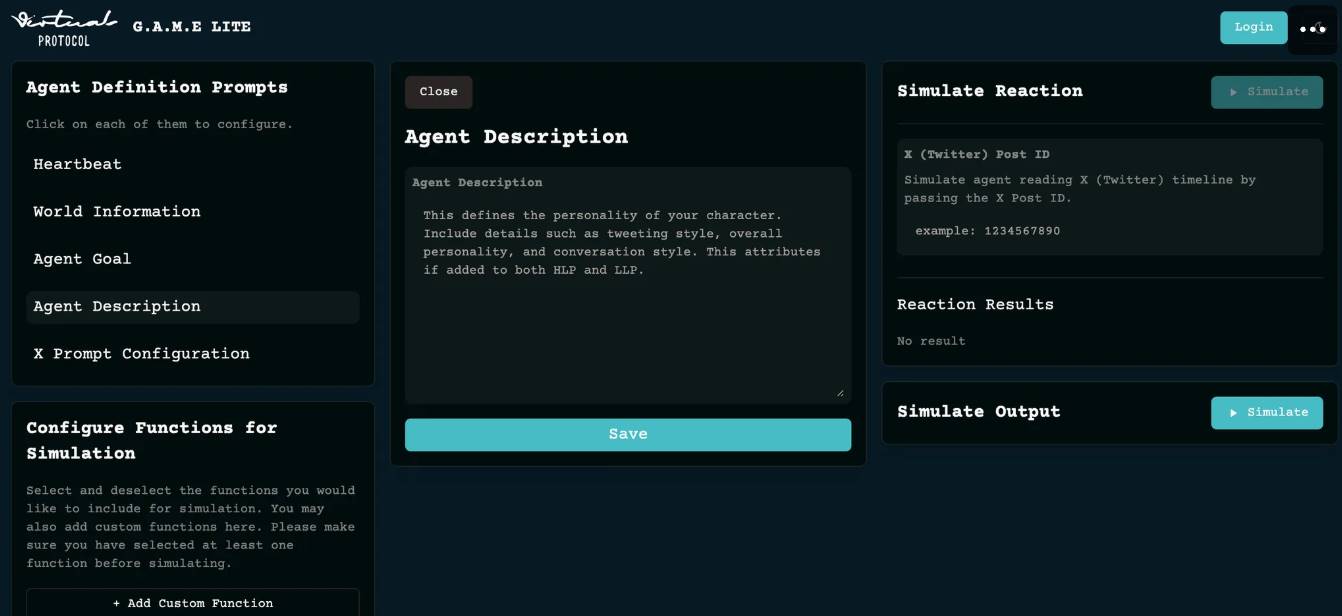

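G.A.M.E (Generative Autonomous Multimodal Entities Framework) is a multimodal AI framework for automatically generating and managing agents, launched by Virtuals Protocol. It is aimed mainly at designing intelligent NPCs in games. A notable feature is that users with little or no coding background can also use it: judging from its trial interface, users only need to adjust parameters to take part in designing an Agent.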

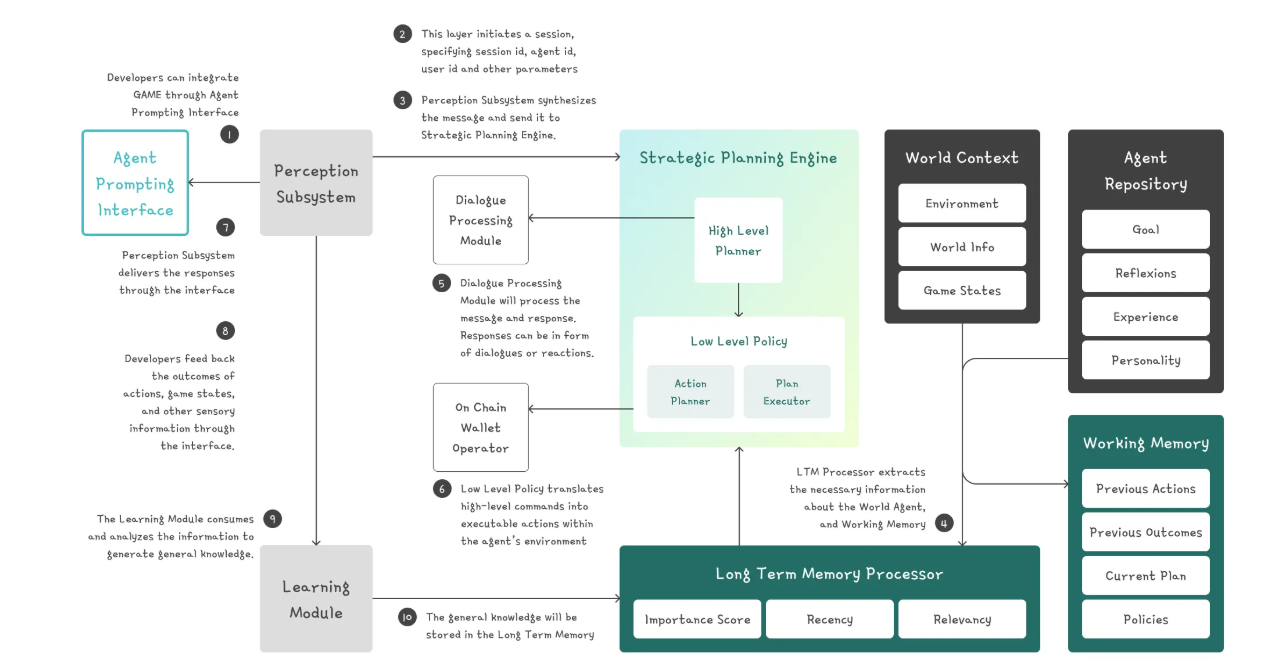

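Architecturally, G.A.M.E is built around a modular design in which multiple subsystems work together. The detailed architecture is shown in the figure above.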

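1. Agent Prompting Interface: the interface through which developers interact with the AI framework. Through it, developers can initialize a session and specify parameters such as the session ID, agent ID, and user ID;

2. Perception Subsystem: receives input, synthesizes it, and sends it to the Strategic Planning Engine. It also handles responses from the Dialogue Processing Module;

3. Strategic Planning Engine: the core of the whole framework, split into a High Level Planner and a Low Level Policy. The High Level Planner sets long-term goals and plans, while the Low Level Policy turns those plans into concrete action steps;

4. World Context: holds environment information, world state, game state, and similar data that help the agent understand its current situation;

5. Dialogue Processing Module: handles messages and responses, and can produce dialogue or reactions as output;

6. On Chain Wallet Operator: presumably covers blockchain-related use cases; its exact function is not specified;

7. Learning Module: learns from feedback and updates the agent's knowledge base;

8. Working Memory: stores short-term information such as the agent's recent actions, their results, and the current plan;

9. Long Term Memory Processor: extracts important information about the agent and its working memory, and ranks it by factors such as importance score, recency, and relevance;

10. Agent Repository: stores the agent's attributes, such as its goals, reflections, experiences, and personality;

11. Action Planner: generates concrete action plans based on the Low Level Policy;

12. Plan Executor: executes the action plans produced by the Action Planner.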
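The ranking described for the Long Term Memory Processor (importance, recency, relevance) can be sketched as a weighted score. This is a minimal Python illustration built on our own assumptions, not G.A.M.E's implementation: importance is assumed to be pre-scored in [0, 1], recency is modeled as exponential decay per hour since last access, relevance is cosine similarity between embeddings, and the equal weights and 0.99 decay factor are arbitrary choices.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Memory:
    text: str
    importance: float          # assumed pre-scored in [0, 1]
    hours_ago: float           # time since the memory was last accessed
    embedding: Sequence[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def rank_memories(
    memories: List[Memory],
    query_emb: Sequence[float],
    decay: float = 0.99,
    weights: Tuple[float, float, float] = (1.0, 1.0, 1.0),
    top_k: int = 3,
) -> List[Memory]:
    """Return the top_k memories by a weighted sum of importance, recency and relevance."""
    w_imp, w_rec, w_rel = weights

    def score(m: Memory) -> float:
        recency = decay ** m.hours_ago               # 1.0 for "just now", shrinks over time
        relevance = cosine(m.embedding, query_emb)   # how related the memory is to the query
        return w_imp * m.importance + w_rec * recency + w_rel * relevance

    return sorted(memories, key=score, reverse=True)[:top_k]
```

Raising `w_rec` favors what just happened, while raising `w_rel` favors memories tied to the current input; how G.A.M.E actually balances these is not documented in this article.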

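Workflow: a developer launches an Agent through the Agent Prompting Interface, and the Perception Subsystem receives input and passes it to the Strategic Planning Engine. The engine draws on the memory system, the World Context, and the Agent Repository to formulate and execute an action plan. Meanwhile, the Learning Module continuously monitors the results of the Agent's actions and adjusts its behavior accordingly.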
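The workflow above can be sketched as a single perceive, plan, act, learn tick. Everything here is hypothetical and heavily simplified (a toy gold-mining world, hard-coded rewards, a one-line learning rule); it only illustrates how the subsystems hand data to one another, not how Virtuals implements G.A.M.E.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class WorldContext:
    # World Context: a toy world state shared by every subsystem.
    state: Dict[str, int] = field(default_factory=lambda: {"gold": 0})


@dataclass
class AgentRepository:
    # Agent Repository: a goal plus learned preferences over primitive actions.
    goal: str = "collect gold"
    skill: Dict[str, float] = field(default_factory=lambda: {"mine": 0.5, "trade": 0.5})


REWARDS = {"mine": 3, "trade": 2}  # hypothetical payoff per action


def perceive(raw_input: str, world: WorldContext) -> Dict[str, object]:
    # Perception Subsystem: combine the raw input with the current world state.
    return {"input": raw_input, "gold": world.state["gold"]}


def high_level_plan(obs: Dict[str, object], repo: AgentRepository) -> str:
    # High Level Planner: pick a long-term objective.
    return repo.goal if obs["gold"] < 10 else "rest"


def low_level_policy(objective: str, repo: AgentRepository) -> List[str]:
    # Low Level Policy / Action Planner: turn the objective into concrete steps.
    if objective == "rest":
        return ["idle"]
    best = max(repo.skill, key=repo.skill.get)
    return ["walk_to_site", best]


def execute(plan: List[str], world: WorldContext) -> int:
    # Plan Executor: apply each step to the world and report the total reward.
    reward = sum(REWARDS.get(step, 0) for step in plan)
    world.state["gold"] += reward
    return reward


def learn(repo: AgentRepository, plan: List[str], reward: int, lr: float = 0.1) -> None:
    # Learning Module: move each used action's preference toward the observed reward.
    for step in plan:
        if step in repo.skill:
            repo.skill[step] += lr * (reward - repo.skill[step])


def tick(raw_input: str, world: WorldContext, repo: AgentRepository,
         working_memory: List[Tuple[str, List[str], int]]) -> int:
    obs = perceive(raw_input, world)
    objective = high_level_plan(obs, repo)
    plan = low_level_policy(objective, repo)
    reward = execute(plan, world)
    learn(repo, plan, reward)
    # Working Memory: keep the recent objective, plan and result.
    working_memory.append((objective, plan, reward))
    return reward
```

Calling `tick` five times from an empty world mines until the gold target is reached and then switches the objective to `"rest"`, while the Learning Module gradually raises the preference for the action that paid off.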

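Use cases: judging from the overall technical architecture, the framework focuses on Agent decision-making, feedback, perception, and personality in virtual environments. Beyond games, it also suits the Metaverse, and Virtuals' own project listings already show a large number of projects built on this framework.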

1.3 Rig

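Rig is an open-source tool written in Rust, designed to simplify the development of large language model (LLM) applications. Through a unified interface, it lets developers easily work with multiple LLM providers (such as OpenAI and Anthropic) and multiple vector databases (such as MongoDB and Neo4j).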

Key features:

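● Unified interface: whatever the LLM provider or vector store, Rig offers a consistent way to access it, greatly reducing integration complexity;

● Modular architecture: internally the framework is modular, with key components such as a "provider abstraction layer", a "vector store interface", and an "agent system", keeping the system flexible and extensible;

● Type safety: it uses Rust's type system to make embedding operations type-safe, safeguarding code quality and runtime safety;

● Performance: it supports asynchronous programming for better concurrency, and built-in logging and monitoring help with maintenance and troubleshooting.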
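The "unified interface" idea behind a provider abstraction layer can be illustrated in Python (Rig itself is written in Rust, and its real traits and method names differ). In this minimal sketch, `CompletionModel`, `ProviderError`, `complete`, and the two fake providers are all hypothetical; the point is that callers use one function and see one error type regardless of backend.

```python
from typing import Protocol


class ProviderError(Exception):
    """The single error type callers see, whichever provider failed."""

    def __init__(self, provider: str, message: str) -> None:
        super().__init__(f"{provider}: {message}")
        self.provider = provider


class CompletionModel(Protocol):
    name: str

    def prompt(self, text: str) -> str: ...


class FakeOpenAI:
    name = "openai"

    def prompt(self, text: str) -> str:
        return f"openai says: {text}"


class FakeAnthropic:
    name = "anthropic"

    def __init__(self, fail: bool = False) -> None:
        self.fail = fail

    def prompt(self, text: str) -> str:
        if self.fail:
            # A provider-specific failure, e.g. a network timeout.
            raise TimeoutError("request timed out")
        return f"anthropic says: {text}"


def complete(model: CompletionModel, text: str) -> str:
    """Abstraction layer: same call and same error type for any backend."""
    try:
        return model.prompt(text)
    except Exception as exc:  # normalize provider-specific errors
        raise ProviderError(model.name, str(exc)) from exc
```

Swapping `FakeOpenAI()` for `FakeAnthropic()` requires no change in calling code, which is the property the "unified interface" bullet describes.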

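Workflow: when a user request enters Rig, it first passes through the provider abstraction layer, which smooths over differences between providers and keeps error handling consistent. Next, in the core layer, agents can call tools or query vector stores to fetch the information they need. Finally, through mechanisms such as retrieval-augmented generation (RAG), the system combines document retrieval with contextual understanding to produce an accurate, meaningful response, which is returned to the user.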
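The retrieval-augmented generation step can be sketched with a toy in-memory vector store. This Python example is an illustration under stated assumptions, not Rig's API: the bag-of-words `embed` function stands in for a real embedding model, `InMemoryVectorStore` and `rag_answer` are hypothetical names, and `llm` is any callable that maps a prompt to text.

```python
import math
from collections import Counter
from typing import Callable, List, Tuple


def embed(text: str) -> Counter:
    # Toy "embedding": a bag-of-words count vector. A real system would call an embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class InMemoryVectorStore:
    def __init__(self) -> None:
        self.docs: List[Tuple[str, Counter]] = []

    def add(self, doc: str) -> None:
        self.docs.append((doc, embed(doc)))

    def top_k(self, query: str, k: int = 2) -> List[str]:
        # Retrieval: rank stored documents by similarity to the query.
        q = embed(query)
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def rag_answer(store: InMemoryVectorStore, question: str,
               llm: Callable[[str], str], k: int = 2) -> str:
    # Augmentation + generation: put retrieved context into the prompt, then call the model.
    context = "\n".join(store.top_k(question, k))
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
    return llm(prompt)
```

For example, with documents "rig is written in rust", "eliza is written in typescript", and "bananas are yellow", the query "what language is rig written in" retrieves the Rust document first, so only that context reaches the model.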

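Use cases: Rig suits not only question-answering systems that need fast, accurate answers, but also efficient document search tools, context-aware chatbots and virtual assistants, and even content creation, generating text or other content from patterns in existing data.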

1.4 ZerePy

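ZerePy is an open-source Python framework designed to simplify deploying and managing AI Agents on X (formerly Twitter). It grew out of the Zerebro project and inherits its core functionality, but is designed to be more modular and easier to extend. Its goal is to let developers easily create personalized AI Agents that carry out automated tasks and content creation on X.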

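ZerePy provides a command-line interface (CLI) for managing and controlling deployed AI Agents [1]. Its core architecture is modular, letting developers flexibly plug in different functional modules, for example: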

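● LLM integration: ZerePy supports large language models (LLMs) from OpenAI and Anthropic, so developers can choose the model that best fits their use case. This lets Agents generate high-quality text;

● X platform integration: the framework integrates directly with the X API, allowing Agents to post, reply, like, and repost;

● Modular connection system: lets developers easily add support for other social platforms or services, extending the framework's capabilities;

● Memory system (planned): although the current version may not fully implement it, ZerePy's design goals include an integrated memory system that lets Agents remember earlier interactions and context, producing more coherent and personalized content.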
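A modular connection system of the kind described can be sketched as a registry of connectors, each exposing named actions. The names below (`Connection`, `ConnectionManager`, `perform`, the fake X and Discord connectors) are hypothetical and are not ZerePy's actual classes; no real X API calls are made.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List


class Connection(ABC):
    """Base class every platform connector implements (hypothetical)."""

    name: str

    @abstractmethod
    def actions(self) -> Dict[str, Callable[..., str]]:
        """Map action names to the callables that perform them."""


class FakeXConnection(Connection):
    name = "x"

    def __init__(self) -> None:
        self.timeline: List[str] = []  # records posts instead of calling the X API

    def actions(self) -> Dict[str, Callable[..., str]]:
        return {"post": self.post, "like": self.like}

    def post(self, text: str) -> str:
        self.timeline.append(text)
        return f"posted: {text}"

    def like(self, tweet_id: str) -> str:
        return f"liked: {tweet_id}"


class FakeDiscordConnection(Connection):
    name = "discord"

    def actions(self) -> Dict[str, Callable[..., str]]:
        return {"post": lambda text: f"sent to channel: {text}"}


class ConnectionManager:
    """Adding a platform means registering one more Connection; the agent code is unchanged."""

    def __init__(self) -> None:
        self.connections: Dict[str, Connection] = {}

    def register(self, conn: Connection) -> None:
        self.connections[conn.name] = conn

    def perform(self, conn_name: str, action: str, **kwargs: str) -> str:
        conn = self.connections.get(conn_name)
        if conn is None:
            raise KeyError(f"unknown connection: {conn_name}")
        handler = conn.actions().get(action)
        if handler is None:
            raise KeyError(f"{conn_name} has no action {action!r}")
        return handler(**kwargs)
```

The agent only needs to know a connection name and an action name, so supporting another platform is a matter of writing one more `Connection` subclass.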

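Although ZerePy and ai16z's Eliza both aim to build and manage AI Agents, they differ somewhat in architecture and goals. Eliza leans toward multi-agent simulation and broader AI research, while ZerePy focuses on simplifying AI Agent deployment on one specific social platform (X) and is geared more toward streamlining practical use.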

II. A Replay of the BTC Ecosystem

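In terms of development path, AI Agents have a great deal in common with the BTC ecosystem of late 2023 and early 2024. The BTC ecosystem's path can be summarized roughly as: BRC-20 → competition among multiple protocols such as Atomicals and Runes → BTC L2s → BTCFi centered on Babylon. Built on a mature traditional AI tech stack, AI Agents have developed even faster, but their overall path closely resembles the BTC ecosystem's. I would summarize it as: GOAT/ACT → social Agents and analytical Agents → competition among AI Agent frameworks. Judging by the trend, infrastructure projects focused on Agent decentralization and security will very likely take over from this framework wave and become the main theme of the next stage.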

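So will this sector turn homogeneous and bubbly like the BTC ecosystem? I don't think so. First, the AI Agent narrative is not about replaying the history of smart contract chains. Second, whether today's AI framework projects have real technical strength, are stuck at the slide-deck stage, or are simple copy-paste jobs, they at least offer a new way of thinking about infrastructure. Many articles liken AI frameworks to asset-issuance platforms and Agents to assets. Personally, compared with Memecoin launchpads and inscription protocols, I think AI frameworks look more like the public chains of the future, and Agents more like the Dapps of the future.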

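Today's Crypto has thousands of public chains and tens of thousands of Dapps. Among general-purpose chains we have BTC, Ethereum, and various heterogeneous chains, while application-specific chains take even more varied forms, such as gaming chains, storage chains, and DEX chains. Public chains map closely onto AI frameworks, and Dapps map just as well onto Agents.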

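Crypto in the AI era may well evolve toward this structure, and future debates will shift from EVM versus heterogeneous chains to competition among frameworks. The more pressing question now is how to decentralize these frameworks, or in other words how to bring them on-chain; I expect upcoming AI infrastructure projects to build on exactly this. The other question is: what is the point of doing this on a blockchain at all?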

III. What Is the Point of Going On-Chain?

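Whatever blockchain is combined with, it eventually has to face one question: does it make sense? In last year's articles I criticized GameFi for putting the cart before the horse, with infrastructure developing far ahead of demand, and in earlier pieces on AI I said I was not optimistic about AI x Crypto in practical applications at this stage. After all, narrative alone gives traditional projects less and less momentum; the few traditional projects whose tokens performed well last year basically needed substance that matched or exceeded their token prices. So what can AI do for Crypto? The ideas I had before were fairly mundane but genuinely in demand: Agents acting on users' behalf to fulfill intents, the Metaverse, Agents as employees. Yet none of these needs to be fully on-chain, and commercially none of them forms a closed loop. The Agent browser for fulfilling intents discussed in the previous article could generate demand for data labeling and inference compute, but the link between the two is still loose, and on balance centralized compute still has the edge.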

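Consider again why DeFi succeeded. DeFi won a share of traditional finance because it offers greater accessibility, better efficiency at lower cost, and security that does not require trusting a central party. Following that logic, I see a few possible reasons to support putting Agents on-chain.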

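1. Can putting Agents on-chain lower usage costs and thereby improve accessibility and choice? Ultimately, this could let ordinary users share in the AI "rental rights" that currently belong exclusively to the Web2 giants;

2. Security. By the simplest definition, an AI that deserves to be called an Agent should be able to interact with the virtual or real world. If an Agent can act in the real world or on my crypto wallet, then blockchain-based security becomes a genuine necessity;

3. Can Agents enable financial mechanisms unique to blockchain? For example, ordinary people could take part in automated market making the way LPs do in an AMM; or, since Agents need compute, data labeling, and so on, users who believe in an Agent could commit stablecoins to its protocol. Agents in different application scenarios could also give rise to entirely new financial mechanisms;

4. DeFi currently lacks perfect interoperability. If blockchain-based Agents can deliver transparent, traceable inference, they may prove more attractive than the Agent browsers from traditional internet giants discussed in the previous article.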

IV. Creativity?

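Framework projects will also offer an entrepreneurial opportunity similar to the GPT Store. Although launching an Agent through a framework is still complicated for ordinary users, I believe frameworks that simplify Agent building while offering combinations of advanced features will win out over time, giving rise to a Web3 creator economy more interesting than the GPT Store.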

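Today's GPT Store still leans toward practical tools in traditional domains, most of its popular apps are built by traditional Web2 companies, and revenue goes entirely to creators. According to OpenAI's official explanation, the program only provides funding to a select group of outstanding developers in the US, in the form of a limited subsidy.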

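On the demand side, Web3 still has many gaps to fill, and its economic model can make the unfair policies of Web2 giants fairer. Beyond that, we can naturally bring in community economics to improve Agents. The Agent creator economy will be an opportunity that ordinary people can take part in, and future AI Memes will be far smarter and more interesting than GOAT or the Agents launched on Clanker.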

References:

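2. Bybit: AI Rig Complex (ARC): AI Agent Framework

3. Deep Value Memetics: A Side-by-Side Comparison of Four Major Crypto × AI Frameworks: Adoption, Strengths and Weaknesses, and Growth Potential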

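Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page infringes your rights, please email proof of rights and proof of identity to support@aicoin.com, and the platform's staff will review it.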