Verifiable Computation (VC) is a way to run specific workloads in a manner that can generate proof of their work process, and this proof can be publicly verified without rerunning the computation.

Author: Austbot

Translation: DeepTechFlow

Anagram Build spends most of its time researching new use cases for crypto and applying them to specific products. Our most recent research project took us into the field of Verifiable Computation (VC), and our team used that research to create a new open-source system called Bonsol. We chose this research area because verifiable computation enables many compelling use cases, and various L1s are working to optimize its cost-effectiveness and scalability.

In this article, we have two goals:

First, we want to give you a better understanding of VC as a concept and of the products it may enable in the Solana ecosystem.

Second, we want to introduce our latest work: Bonsol.

What is Verifiable Computation?

The term "Verifiable Compute (VC)" may not have appeared in the pitch decks of early-stage companies during the bull market, but the term "zero-knowledge" did. So, what do these terms mean?

Verifiable Computation (VC) is a way to run specific workloads in a manner that generates a proof of the work performed, and this proof can be publicly verified without rerunning the computation. Zero-knowledge (ZK) refers to the ability to prove statements about data or a computation without revealing all of the input data or the computation itself. In practice the two terms are often conflated, and ZK is somewhat of a misnomer: it is really about choosing which information must be disclosed in order to prove a statement. VC is the more accurate term and is the overall goal of many existing distributed system architectures.

How does VC help us build better crypto products?

So, why do we want to add VC or ZK systems to platforms like Solana and Ethereum? The answer seems to have more to do with security for developers. Developers of a system act as intermediaries between users' trust in a black box and the technical functionality that makes that trust objectively justified. By leveraging ZK/VC technology, developers can reduce the attack surface of the products they are building. VC systems shift the focus of trust to the proof system and the computed workload being proved. This is similar to the shift from typical web2 client/server approaches to web3 blockchain approaches: trust moves from relying on a company's promises to trusting open-source code and the cryptographic systems of the network. From the user's perspective there are no truly zero-trust systems, and I think everything looks like a black box to the user.

For example, by using a ZK login system, developers can reduce their responsibility for maintaining secure databases and infrastructure, because the system only needs to verify that certain cryptographic properties hold. VC technology is being applied in many places where consensus is needed, so that the only condition for reaching that consensus is that the mathematics is valid.

While there are many successful cases of VC and ZK in the wild, many of them currently depend on progress at various levels of the crypto software stack to become fast and efficient enough for production.

As part of our work at Anagram, we have had the opportunity to talk to many crypto founders and developers to understand how the state of the current crypto software stack affects product innovation. These conversations have helped us identify an interesting trend: a number of projects are actively moving on-chain product logic off-chain, either because it has become too expensive or because they need to add more unusual business logic. As a result, these developers find themselves looking for systems and tools that balance the on-chain and off-chain parts of their products as those products become increasingly powerful. This is where VC becomes a key part of connecting the on-chain and off-chain worlds using trustless and verifiable methods.

How do VC/ZK systems work now?

Currently, VC and ZK functions are mainly performed on alternative computation layers (such as rollups, sidechains, relays, oracles, or coprocessors) and are made available through smart contract runtime callbacks. Many L1 chains are working to provide shortcuts outside of the smart contract runtime (such as system calls or precompiles) to perform operations on-chain that would otherwise be too expensive.

There are several common modes of current VC systems. I will mention the four that I know of. Except for the last case, ZK proofs are generated off-chain, and it is the timing and location of the proof verification that gives each of these modes its advantages.

On-chain full verification

For VC and ZK proof systems that can generate small proofs, such as Groth16 or some Plonk variants, the proof is submitted on-chain and verified by code previously deployed on-chain. This approach is now very common, and the best way to try it is to use Circom and a Groth16 verifier on Solana or the EVM. The downside is that these proof systems are quite slow. They also often require learning a new language. Verifying a 256-bit hash in Circom, for example, requires manually handling each of the 256 bits. Although many libraries let you simply call a hash function, behind the scenes these functions have to be reimplemented in Circom code. These systems are great when the ZK and VC elements of your use case are small, and when you need to prove that something is valid before taking some other deterministic action. Bonsol currently falls into this first category.
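To give a feel for what "handling each of the 256 bits" means, here is a minimal sketch in Python (not Circom) of the two checks a circuit typically enforces on a bit-decomposed value: every bit signal must be boolean, and the bits must recompose to the original value. The function names are hypothetical and chosen only for illustration.

```python
import hashlib

def to_bits(value: int, width: int = 256) -> list[int]:
    """Little-endian bit decomposition: one 'signal' per bit."""
    return [(value >> i) & 1 for i in range(width)]

def check_bit_constraints(bits: list[int], value: int) -> bool:
    # Booleanity: each signal b must satisfy b * (b - 1) == 0.
    if any(b * (b - 1) != 0 for b in bits):
        return False
    # Recomposition: the weighted sum of the bits must equal the value.
    return sum(b << i for i, b in enumerate(bits)) == value

# A 256-bit digest becomes 256 separately constrained signals.
digest = int.from_bytes(hashlib.sha256(b"bonsol").digest(), "big")
bits = to_bits(digest)
```

In a real circuit each of these checks is a constraint the prover pays for, which is part of why hash-heavy circuits get large.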

Off-chain verification

The proof is submitted on-chain for all parties to see, and verification happens later, off-chain. In this mode you can support any proof system, but since the proof is not verified on-chain, you cannot get the same determinism for any operation that depends on the submitted proof. This works well for systems with a challenge window, in which parties can "veto" a proof by attempting to show that it is incorrect.
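The challenge-window idea can be sketched as a small state machine. This is an illustrative model only, not how any particular chain implements it; the class and field names are assumptions.

```python
from dataclasses import dataclass

@dataclass
class SubmittedProof:
    submitted_at: int      # slot (or timestamp) when the proof was posted
    challenge_window: int  # how long challengers may dispute it
    challenged: bool = False

    def challenge(self, now: int, fraud_shown: bool) -> bool:
        """Record a successful veto only if it arrives inside the window."""
        if now < self.submitted_at + self.challenge_window and fraud_shown:
            self.challenged = True
        return self.challenged

    def is_final(self, now: int) -> bool:
        """Dependent actions are safe only after the window closes unchallenged."""
        window_closed = now >= self.submitted_at + self.challenge_window
        return window_closed and not self.challenged
```

The trade-off is visible in `is_final`: anything that depends on the proof has to wait out the window, which is the loss of determinism described above.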

Verification network

The proof is submitted to a verification network, and this network acts as an oracle that then calls the smart contract. You get determinism, but you also need to trust the verification network.

Synchronous on-chain verification

The fourth and final mode is quite different: here, proving and verification both happen on-chain, at the same time. This means that the L1, or smart contracts on the L1, can actually run ZK schemes on user inputs and allow execution over private data. There are not many widespread examples of this, and what you can do with this approach is usually limited to fairly basic mathematical operations.

Summary

All four modes are being tested in various chain ecosystems, and we will see whether new modes emerge and which one comes to dominate. On Solana, for example, there is no clear winner, and the VC and ZK landscape is still in its early stages. Across many chains, including Solana, the most popular approach is the first mode. Full on-chain verification is the gold standard, but as discussed, it comes with drawbacks, mainly latency and limits on what your circuit can do. As we delve into Bonsol, you will see that it follows the first mode, with some differences.

Introducing Bonsol

Bonsol, a new Solana-native VC system, is built and open-sourced by our Anagram team. Bonsol allows developers to create a verifiable executable involving private and public data and integrate the result into Solana smart contracts. Please note that the project depends on the popular RISC0 toolchain.

The inspiration for this project came from a question that kept coming up in our weekly conversations with many projects: "How can I take this private data and prove some thing about it on-chain?" While the "thing" is different in each case, the underlying desire is the same: to reduce dependence on centralized components.

Before we delve into the details of the system, let's illustrate the power of Bonsol through two different use cases.

Scenario One

A Dapp allows users to purchase lottery tickets from various token pools. These pools "tilt" from a global pool daily, so the amounts in the pools (amounts of each token) are hidden. Users can purchase access to increasingly specific ranges of token pools. However, there's a catch: once a user purchases a range, it becomes public to all users. Then the user must decide whether to purchase a lottery ticket. They can decide it's not worth purchasing, or they can ensure their stake in the pool by purchasing a ticket.

When the pool is created and when a user pays for a range, Bonsol comes into play. When creating/tilting the pool, a ZK program receives private inputs of the quantities of each token. The types of tokens are known inputs, and the pool address is a known input. The proof is a proof of the random selection from the global pool to the current pool. The proof also includes commitments to the balances. The on-chain contract receives this proof, verifies it, and stores these commitments so that when the pool is eventually closed and the balance is sent from the global pool to the lottery owner, they can verify whether the token quantities have changed since the random selection at the start of the pool.

When a user "opens" the hidden token balance range for purchase, a ZK program takes the actual token balances as private inputs and generates a series of values, along with commitments, as part of the proof. The public inputs of this ZK program are the previous commitment of the pool creation proof and its output. This way, the entire system is verified. The previous proof must be verified in the range proof, and the token balances must hash to the same value as committed in the first proof. The range proof is also submitted on-chain and, as mentioned earlier, makes the range visible to all participants.
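The commit-then-open pattern in this scenario can be sketched as follows. This is only a plain-Python stand-in for what would run inside the ZK programs: in the real system the balances and salt stay private and only the commitment and the range become public. The function names, the salted SHA-256 construction, and the encoding are assumptions made for illustration.

```python
import hashlib
import secrets

def commit(balances: dict[str, int], salt: bytes) -> str:
    """Salted SHA-256 commitment over token balances in a canonical order."""
    h = hashlib.sha256(salt)
    for token in sorted(balances):
        name = token.encode()
        h.update(len(name).to_bytes(2, "little"))  # length prefix avoids ambiguity
        h.update(name)
        h.update(balances[token].to_bytes(8, "little"))
    return h.hexdigest()

def open_range(balances: dict[str, int], salt: bytes, commitment: str,
               token: str, low: int, high: int) -> bool:
    """Stand-in for the range program: the private balances must hash to the
    commitment from pool creation, and the token balance must lie in the range."""
    if commit(balances, salt) != commitment:
        return False
    return low <= balances[token] <= high

# Pool creation: private balances, public commitment.
pool = {"SOL": 120, "USDC": 5000}
salt = secrets.token_bytes(16)
pool_commitment = commit(pool, salt)
```

A call such as `open_range(pool, salt, pool_commitment, "SOL", 100, 200)` succeeds only if the balances are unchanged since the pool was created, which mirrors the check the on-chain contract relies on.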

While there are many ways to implement such a lottery-like system, the properties of Bonsol make the trust requirement for organizations hosting lotteries very low. It also highlights the interoperability of Solana and VC systems. Solana programs (smart contracts) play a crucial role in facilitating trust, as they verify the proofs and then allow the program to take the next steps.

Scenario Two

Bonsol allows developers to create toolkits for use by other systems. Bonsol includes the concept of deployment, where developers can create some ZK programs and deploy them to Bonsol operators. Bonsol network operators currently have some basic ways to assess whether executing a ZK program is economically beneficial. They can see some basic information about how much computation the ZK program will require, input size, and the fee provided by the requester. Developers can deploy a toolkit they believe many other Dapps would want to use.

In the configuration of a ZK program, developers specify the order and type of inputs required. Developers can publish an InputSet with some or all inputs pre-configured. This means they can configure partial inputs to help users verify the computation on very large datasets.

For example, suppose a developer creates a system that, given an NFT, can prove that its on-chain ownership transfers include a specific set of wallets. The developer can publish a pre-configured input set containing a large amount of historical transaction information, and the ZK program searches this set to find matching owners. This is a contrived example, and it could be achieved in many ways.

Consider another example: a developer writes a ZK program that can verify that a signature comes from a key pair, or a hierarchical set of key pairs, without revealing the public keys of those authorized key pairs. Suppose this is useful to many other Dapps and they use this ZK program. The protocol pays a small fee to the author of the ZK program. Because performance matters, developers are incentivized to make their programs run fast so that operators are willing to run them. A developer attempting to plagiarize another developer's work would need to change the program in some way in order to deploy it, since the content of the ZK program is verified, and any operations added to the ZK program will affect its performance. This is certainly not foolproof, but it may help ensure that developers are rewarded for innovation.
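One way to picture a partially pre-configured input set is as a list of ordered input slots, some filled in advance by the developer and the rest supplied by the caller. The sketch below is a hypothetical model; the slot kinds, the function name, and the data layout are assumptions and are not taken from the Bonsol codebase.

```python
def resolve_inputs(spec: list[str], preset: dict[int, bytes],
                   supplied: list[bytes]) -> list[tuple[str, bytes]]:
    """Fill the program's ordered input slots from a pre-configured input set
    first, then from the caller's inputs, preserving the declared order."""
    remaining = iter(supplied)
    resolved = []
    for index, kind in enumerate(spec):
        if index in preset:
            resolved.append((kind, preset[index]))
            continue
        try:
            resolved.append((kind, next(remaining)))
        except StopIteration:
            raise ValueError(f"missing input for slot {index} ({kind})") from None
    if next(remaining, None) is not None:
        raise ValueError("too many inputs supplied")
    return resolved

# Slot 0 is the large historical dataset, pre-configured by the developer.
spec = ["public", "public", "private"]
preset = {0: b"historical-transfers"}
inputs = resolve_inputs(spec, preset, [b"wallet-set", b"secret"])
```

Here the caller only supplies the last two slots; the large dataset in slot 0 comes from the published input set.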

Bonsol Architecture

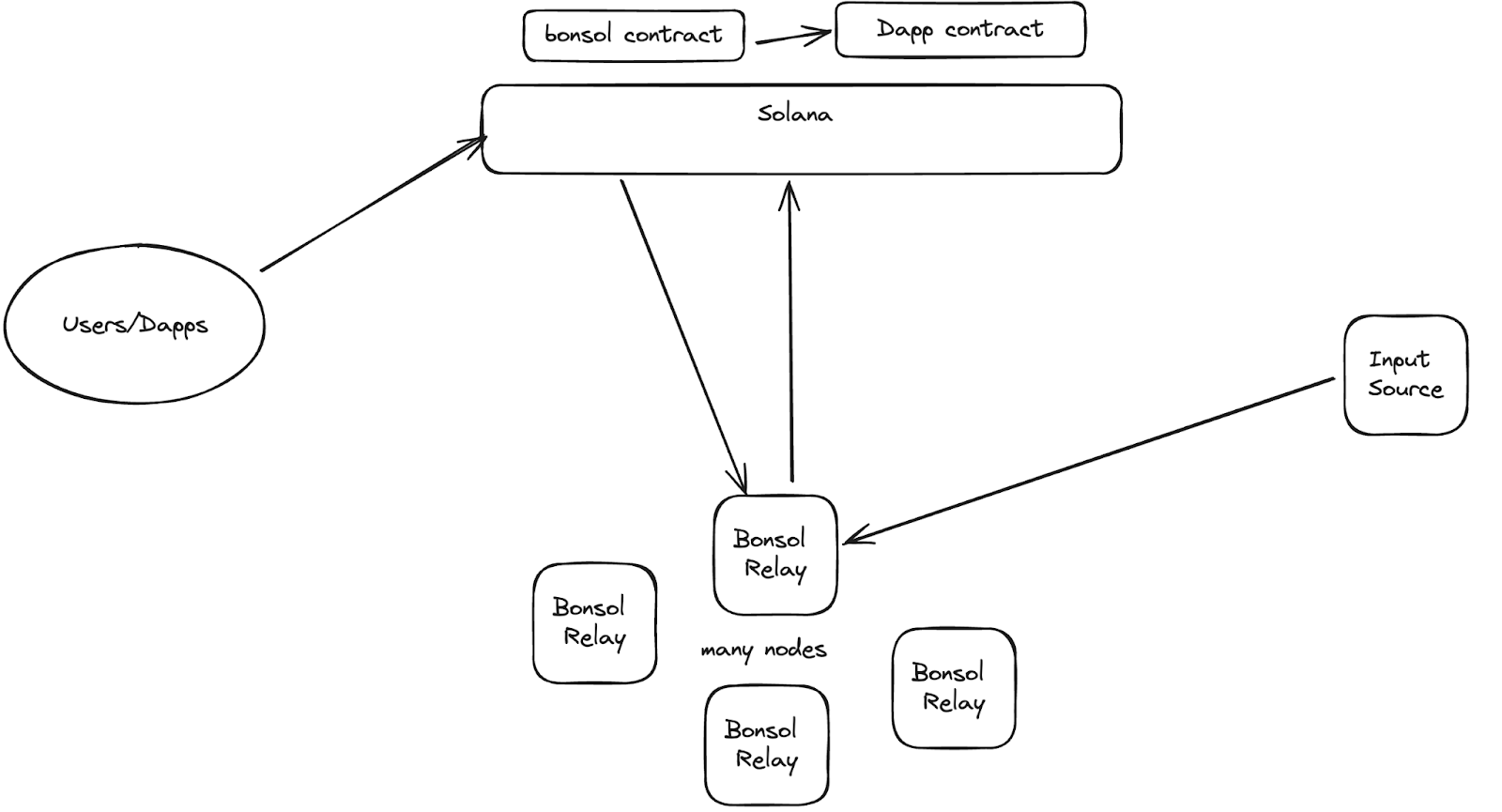

These use cases help describe the purpose of Bonsol, but let's take a look at its current architecture, current incentive model, and execution flow.

The image above describes the flow when a user needs some verifiable computation performed, typically through a Dapp that requires it for some user action. This takes the form of an execution request, which includes information about the ZK program to execute, the inputs or input set to run it on, the deadline by which the computation must be proven, and the fee (which is how relays get paid). Relays pick up the request and race to decide whether to claim the execution and start proving it. Depending on its capabilities, a relay operator can opt out if the fee is not worth it or if the ZK program or its inputs are too large. If a relay decides to perform the computation, it must claim the execution. If it is the first to claim, its proof will be accepted until a certain time. If it fails to produce a correct proof in time, other nodes can claim the execution. To claim, a relay must post collateral, currently hardcoded as half the fee, which is slashed if the relay fails to produce a correct proof.
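The claim-and-slash flow described above can be modelled roughly as follows. This is a simplified sketch under stated assumptions: the type and field names are hypothetical, time is a plain integer slot counter, and the only detail taken from the text is that collateral equals half the fee.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ExecutionRequest:
    image_id: str                   # hypothetical identifier of the ZK program
    fee: int                        # paid to the relay that proves in time
    deadline: int                   # slot by which a proof must land
    claimant: Optional[str] = None
    claim_expiry: int = 0
    collateral: int = 0

def claim(req: ExecutionRequest, relay: str, now: int, claim_slots: int) -> bool:
    """The first relay to claim wins; an expired claim can be taken over."""
    if now >= req.deadline:
        return False
    if req.claimant is not None and now < req.claim_expiry:
        return False
    req.claimant = relay
    req.claim_expiry = min(now + claim_slots, req.deadline)
    req.collateral = req.fee // 2   # collateral is half the fee, per the text
    return True

def settle(req: ExecutionRequest, relay: str, now: int, proof_ok: bool) -> int:
    """Net result for the relay: the fee on success, minus the collateral if slashed."""
    if relay != req.claimant:
        return 0
    if proof_ok and now < req.claim_expiry:
        return req.fee              # collateral returned, fee earned
    return -req.collateral          # late or invalid proof: collateral slashed
```

The design choice worth noting is that a claim is exclusive only until it expires, so a slow or failed relay cannot block the request indefinitely.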

Bonsol is built on the premise that more computation will move to a layer where it is verified and validated on-chain, and Solana will soon become the preferred chain for VC and ZK. Solana's fast transactions, cheap computation, and growing user base make it an excellent place to test these ideas.

Is it easy to build? Certainly not!

This is not to say there were no challenges in building Bonsol. To bring Risc0 proofs to Solana and verify them there, we needed to make them smaller, and we could not simply shrink them without sacrificing the security of the proofs. So we used Circom to wrap the Risc0 STARK, which can be about 200 KB, in a Groth16 proof, which is always 256 bytes. Fortunately, Risc0 provided some initial tooling for this, but it added a lot of overhead and dependencies to the system.
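As a quick sanity check on the 256-byte figure, here is the arithmetic under the assumption that the Groth16 proof is over the BN254 curve with uncompressed points, which is the common setup for on-chain verifiers. The constant names are ours.

```python
FIELD_BYTES = 32                  # one BN254 base-field element
G1_BYTES = 2 * FIELD_BYTES        # uncompressed (x, y)
G2_BYTES = 4 * FIELD_BYTES        # coordinates live in a quadratic extension field

# A Groth16 proof consists of three points: A in G1, B in G2, C in G1.
groth16_proof_bytes = G1_BYTES + G2_BYTES + G1_BYTES

stark_bytes = 200 * 1024          # "about 200 KB", from the text
compression = stark_bytes // groth16_proof_bytes
```

Under these assumptions `groth16_proof_bytes` comes out to 256 and the wrapped proof is roughly 800 times smaller than the STARK it attests to.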

When we started building Bonsol and using the existing tooling to wrap the STARK in a SNARK, we looked for ways to reduce dependencies and increase speed. Circom allows compiling Circom code to C++ or wasm. We first attempted to compile the Circom circuits into wasmu files generated by LLVM, which would have been the fastest and most efficient way to make the Groth16 toolkit portable while keeping it fast. We chose wasm for its portability, since the C++ code depends on the x86 CPU architecture, meaning new MacBooks or Arm-based servers would not be able to use it.

However, this became a dead end on our schedule. Most of our product research experiments are time-boxed, with 2-4 weeks of development time to test an idea until its value is proven. The LLVM wasm compiler could not handle the generated wasm code. With more work we might have overcome this, but we tried many optimization flags and approaches to get the LLVM compiler working as a plugin for wasmer to pre-compile this code, without success. Since the Circom circuit is about 1.5 million lines of code, you can imagine the size of the wasm.

We then turned to building a bridge directly between the C++ code and our Rust relay codebase. This was quickly defeated, as the C++ contains some x86-specific assembly code that we did not want to mess with. To get the system out in public, we eventually launched with a version that uses the C++ code but removes some of the dependencies.

In the future, we hope to build on another line of optimization we are working on: compiling the C++ code into execution graphs. The C++ constructs produced by Circom are mainly modular arithmetic over finite fields with very large primes. This showed promising results for smaller, simpler C++ constructs, but more work is needed to make it work with the Risc0 system. The generated C++ code is about 7 million lines, and the graph generator seems to hit stack size limits; raising those limits seems to cause other failures that we did not have time to diagnose. Although some of these approaches did not yield the results we hoped for, we were able to contribute to open-source projects along the way and hope those contributions will be merged upstream at some point.

The next set of challenges was more in the realm of design. A crucial part of the system is the ability to have private inputs. These inputs need to come from somewhere, and due to time constraints we could not add a fancy MPC encryption system that would keep private inputs in a closed loop within the system. To meet this requirement and unblock developers, we added the concept of a private input server, which must verify, via a signature over a payload, that the requester is the current claimant before serving it the private inputs. As we expand Bonsol, we plan to implement an MPC threshold decryption system through which relay nodes can allow the claimant to decrypt private inputs.

All the discussion about private inputs led us to a design evolution we plan to provide in the Bonsol repository: Bonsolace, a simpler system that lets you as a developer easily prove these ZK programs on your own infrastructure. You can prove it yourself and then verify it on the same contract as the proof network. This is suitable for very high-value private data, where access to the private data must be minimized as much as possible.

One final point about Bonsol that we have not seen elsewhere in the use of Risc0 is that we require commitments (hashes) over the input data that enters the ZK program. On the contract, we actually check the inputs that the prover must commit to, to ensure they match the inputs the user expected and sent to the system. This incurs some cost, but without it provers could cheat and run ZK programs on inputs the user did not specify.

The rest of the development work on Bonsol is normal Solana development, but it is worth noting that we intentionally tried some new ideas there. In the smart contracts, we use flatbuffers as the sole serialization system. This is a somewhat novel technique, and we hope to develop it further and turn it into a framework, as it is well suited to generating cross-platform SDKs.

One last point about Bonsol is that it currently needs a precompile to work most efficiently, and this precompile is planned for Solana 1.18. Until then, we are working to see whether teams are interested in this research and exploring other technologies beyond Bonsol.
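The input-commitment check can be sketched as a comparison between the digest recorded with the user's request and the digest the prover committed to in the proof's public output (the "journal" in Risc0 terms). This is a simplified illustration; the function names and the length-prefixed SHA-256 encoding are assumptions, not Bonsol's actual format.

```python
import hashlib

def input_digest(inputs: list[bytes]) -> bytes:
    """Hash the ordered inputs; length prefixes keep item boundaries unambiguous."""
    h = hashlib.sha256()
    for item in inputs:
        h.update(len(item).to_bytes(4, "little"))
        h.update(item)
    return h.digest()

def contract_accepts(requested_digest: bytes, committed_digest: bytes,
                     proof_valid: bool) -> bool:
    """Accept only a valid proof that was run on exactly the requested inputs."""
    return proof_valid and requested_digest == committed_digest

# The digest the user's request pins down on-chain.
requested = input_digest([b"a", b"bc"])
```

Without the digest comparison, a valid proof over different inputs would pass, which is precisely the cheating scenario described above.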

Summary

In addition to Bonsol, the Anagram Build team has delved into many areas of the VC field. We are tracking projects such as Jolt, zkllvm, spartan 2, and Binius, as well as companies working in the fully homomorphic encryption (FHE) field.

Please check out the Bonsol repository and ask questions about examples you need or ways you want to extend it. This is a very early-stage project, and you have the opportunity to make a big impact.

If you are working on interesting VC projects, apply for the Anagram EIR program.
