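I. Outlook

1. Macro summary and outlook

Last week the Trump administration announced a 25% tariff on all cars not made in the United States, which renewed market panic. The tariff is likely to push up prices of imported vehicles and parts sharply, and it may provoke retaliation from trading partners, further escalating global trade tensions. Investors should keep a close watch on the progress of trade negotiations and on changes in the global economy.

2. Crypto market moves and warnings

Last week the crypto market pulled back sharply on macro-driven fear. The gains of the preceding rebound were largely given back within a few days as uncertainty over the global macro environment intensified again. This week the focus is on whether Bitcoin and Ethereum decisively break below their previous lows, a level that is both a key technical support and an important psychological line of defense. On April 2 the United States formally begins imposing reciprocal tariffs. If that step does not deepen the panic, the crypto market may offer a right-side (trend-confirmed) bottom-buying opportunity. Investors should still stay alert and track market developments and the relevant indicators closely.

3. Sector and track highlights

Particle, a modular L1 chain-abstraction platform whose round was led by Cobo and YZI with HashKey following on for a second time, simplifies cross-chain operations and payments and markedly improves user experience and developer efficiency, but it also faces liquidity and centralization challenges. Skate, an application-layer protocol focused on seamlessly connecting the mainstream VMs, offers an innovative and efficient approach: by providing a unified application state, simplifying cross-chain task execution and ensuring security, it greatly reduces multi-chain complexity for developers and users. Arcium is a fast, flexible and low-cost infrastructure that aims to provide access to encrypted computation through blockchain. Walrus, an innovative decentralized storage solution, raised a record 140 million US dollars.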

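II. Hot market tracks and this week's promising projects

1. Promising tracks

1.1. A brief look at Skate, the HashKey-led application-layer protocol for seamlessly connecting mainstream VMs

Skate is an infrastructure layer focused on dApps. It lets users interact seamlessly from their native chain by connecting to all virtual machines (EVM, TonVM, SolanaVM). For users, Skate provides applications that run in their preferred environment. For developers, Skate manages cross-chain complexity and introduces a new application paradigm in which an application can be built on every chain and every VM while a single unified application state serves all chains.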

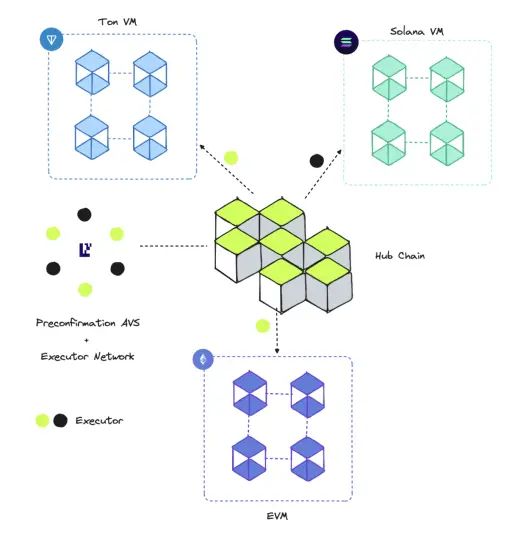

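Architecture overview

Skate's infrastructure consists of three foundational layers:

- Skate's hub chain: the central hub that handles all logic and stores application state.

- Pre-confirmation AVS: an AVS deployed on EigenLayer that allows restaked ETH to be securely delegated to Skate's executor network. It serves as the primary source of truth, ensuring that executors perform the required actions on the target chain.

- Executor network: a network of executors responsible for performing application-defined actions. Each application has its own set of executors.

As the hub chain, Skate maintains and updates the shared state and issues instructions to the connected peripheral chains, which respond only to calldata supplied by Skate. This is carried out by Skate's executor network, in which every executor is a registered AVS operator responsible for these tasks. If an operator behaves dishonestly, the pre-confirmation AVS serves as the source of truth for penalizing it.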

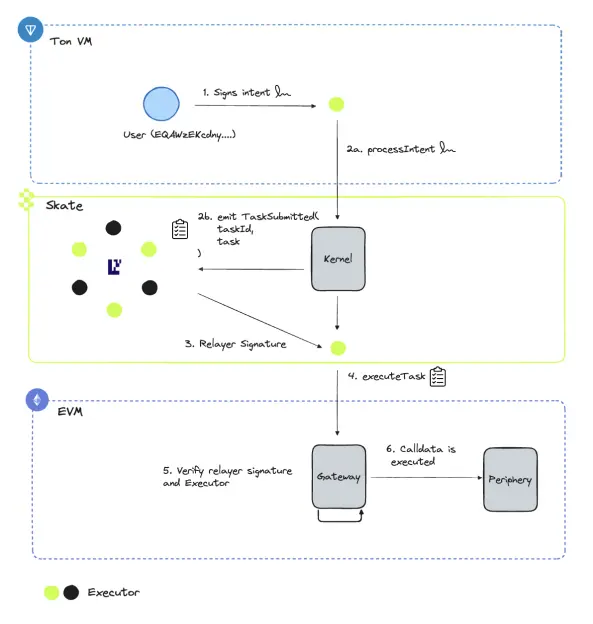

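User flow

Skate is intent-driven. Each intent encapsulates the key information describing the action the user wants to perform and defines the required parameters and bounds. Users only need to sign the intent with their own native wallet and interact solely on that chain, which gives them a user-native environment.

The intent flow is as follows:

- Source chain

The user initiates an action on TON, Solana or an EVM chain by signing an intent.

- Skate

An executor receives the intent and calls the processIntent function. This creates a task that encapsulates the key information the executor needs to carry it out, and the system emits a TaskSubmitted event.

AVS validators actively listen for TaskSubmitted events and verify the content of each task. Once consensus is reached in the pre-confirmation AVS, the relayer issues the signature required to execute the task.

- Target chain

The executor calls the executeTask function on the Gateway contract.

The Gateway contract verifies that the task has passed AVS validation, that is, that the relayer's signature is valid, before executing the function defined in the task.

The function's calldata is executed and the intent is marked as complete.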
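The three stages above can be sketched in a few lines of Python. This is only an illustrative model, not Skate's contract code: the `Intent`, `Task` and `Gateway` types, the `RELAYER_KEY` constant and the use of HMAC in place of a real relayer signature are all assumptions made to keep the example self-contained. Only the names `processIntent` and `executeTask` come from the description above.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical shared key standing in for the relayer's signing key.
# A real deployment would use asymmetric signatures, not HMAC.
RELAYER_KEY = b"demo-relayer-key"


@dataclass(frozen=True)
class Intent:
    user: str
    source_chain: str
    target_chain: str
    calldata: bytes


@dataclass(frozen=True)
class Task:
    task_id: str
    intent: Intent


def process_intent(intent: Intent) -> Task:
    """Skate side: wrap a signed intent into a task (mirrors processIntent)."""
    payload = b"|".join([
        intent.user.encode(),
        intent.source_chain.encode(),
        intent.target_chain.encode(),
        intent.calldata,
    ])
    return Task(task_id=hashlib.sha256(payload).hexdigest(), intent=intent)


def avs_sign(task: Task) -> bytes:
    """Pre-confirmation AVS: once the task is validated, the relayer signs it."""
    return hmac.new(RELAYER_KEY, task.task_id.encode(), hashlib.sha256).digest()


class Gateway:
    """Target-chain gateway: runs a task only if the relayer signature is valid."""

    def __init__(self):
        self.completed = set()

    def execute_task(self, task: Task, signature: bytes) -> bool:
        expected = hmac.new(RELAYER_KEY, task.task_id.encode(), hashlib.sha256).digest()
        if not hmac.compare_digest(expected, signature):
            return False  # task was not approved by the AVS
        if task.task_id in self.completed:
            return False  # intent already marked as complete
        self.completed.add(task.task_id)  # calldata would be executed here
        return True
```

In this sketch a task with a forged signature is rejected, a correctly signed task executes once, and a replay of the same task is refused because the intent is already marked complete.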

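Comments

Skate offers an innovative and efficient approach to cross-chain operation for decentralized applications. By providing a unified application state, simplifying cross-chain task execution and ensuring security, it greatly reduces multi-chain complexity for developers and users. Its flexible architecture and ease of integration give it broad prospects in the multi-chain ecosystem. To be deployed at scale under high concurrency and across many chains, however, Skate still needs continued work on performance and cross-chain compatibility.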

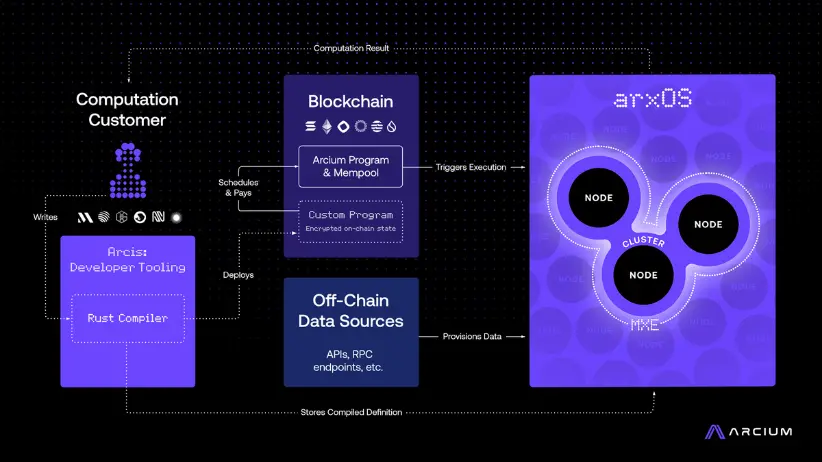

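1.2. How does Arcium, the decentralized encrypted-computation network backed by Coinbase, NGC and LongHash, realize its vision?

Arcium is a fast, flexible and low-cost infrastructure that aims to provide access to encrypted computation through blockchain. It describes itself as an encrypted supercomputer: it offers encrypted computation at scale, letting developers, applications and entire industries compute on fully encrypted data within a trustless, verifiable and efficient framework. Using secure multi-party computation (MPC), Arcium provides scalable, secure encryption solutions for Web2 and Web3 projects, supported by a decentralized network.

Architecture in brief

The Arcium network is designed to provide secure, distributed confidential computing for a wide range of applications, from artificial intelligence to decentralized finance (DeFi) and beyond. It builds on advanced cryptography, including multi-party computation (MPC), to achieve trustless and verifiable computation without a central authority.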

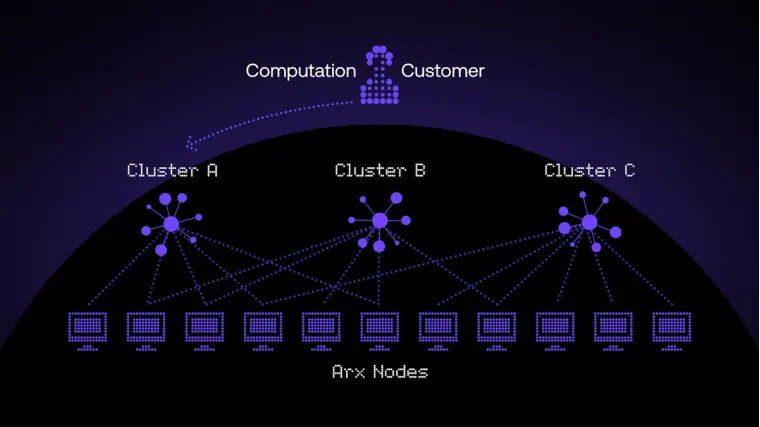

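- Multi-party eXecution Environments (MXEs)

MXEs are dedicated, isolated environments in which computations are defined and securely executed. They support parallel processing, since multiple clusters can execute computations for different MXEs at the same time, which improves throughput and security.

MXEs are highly configurable: computation customers can define security requirements, encryption schemes and performance parameters to suit their needs. A single computation runs on one specific cluster of Arx nodes, but several clusters can be associated with one MXE. This ensures that computations still execute reliably even if some nodes in a cluster are offline or overloaded. By predefining these configurations, customers can tailor the environment closely to their use case.

- arxOS

arxOS is the distributed execution engine of the Arcium network. It coordinates the execution of computations and drives Arx nodes and clusters. Each node, comparable to a core in a computer, contributes computing resources to execute the computations defined by MXEs.

- Arcis (Arcium's developer framework)

Arcis is a Rust-based developer framework, consisting of a Rust framework and a compiler, that lets developers build applications on Arcium's infrastructure and supports all of Arcium's MPC protocols.

- Arx node clusters (running arxOS)

Arx nodes, each running arxOS, are grouped into clusters that execute the computations defined by MXEs. Clusters offer customizable trust models and support dishonest-majority protocols (initially Cerberus) as well as "honest-but-curious" protocols (such as Manticore). Further protocols, including honest-majority protocols, are to be added later to cover more use cases.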

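Chain-level enforcement

All state management and coordination of computations is handled on-chain through Solana, which acts as the consensus layer coordinating the Arx nodes. This ensures fair reward distribution, enforcement of network rules and agreement among nodes on the current state of the network. Computations are queued in a decentralized mempool architecture, in which on-chain components help decide which computations have the highest priority, identify misbehavior and manage execution order.

Nodes stake collateral to guarantee that they follow the network rules. If a node misbehaves or deviates from the protocol, its stake is slashed, which protects the integrity of the network.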
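A minimal sketch of the two ideas in this section, a priority-ordered queue of computations and stake slashing, is shown below. It is a toy model under stated assumptions, not Arcium's on-chain program: the `Coordinator` class, the integer `priority` field and the 50% `slash_fraction` are all hypothetical choices made for illustration.

```python
import heapq


class Coordinator:
    """Toy coordinator: a priority mempool plus stake slashing (illustrative only)."""

    def __init__(self, slash_fraction=0.5):
        self.slash_fraction = slash_fraction  # hypothetical penalty rate
        self.stakes = {}                      # node id -> staked collateral
        self._queue = []                      # heap of (-priority, seq, computation)
        self._seq = 0

    def register_node(self, node, stake):
        self.stakes[node] = stake

    def submit(self, computation, priority):
        # heapq is a min-heap, so the priority is negated;
        # the sequence number keeps first-in-first-out order on ties.
        heapq.heappush(self._queue, (-priority, self._seq, computation))
        self._seq += 1

    def next_computation(self):
        """Pop the highest-priority computation, or None if the queue is empty."""
        return heapq.heappop(self._queue)[2] if self._queue else None

    def slash(self, node):
        """Cut a misbehaving node's stake and return the penalty taken."""
        penalty = self.stakes[node] * self.slash_fraction
        self.stakes[node] -= penalty
        return penalty
```

For example, after submitting computations with priorities 1, 5 and 5, the two priority-5 items are served first in submission order, and slashing a node that staked 100 leaves it with 50 under the assumed 50% rate.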

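Comments

The following key features position the Arcium network as a leading-edge secure-computation solution:

- Trustless, arbitrary encrypted computation: through its MXEs, the Arcium network allows arbitrary computation on encrypted data without exposing the data itself.

- Guaranteed execution: a blockchain-based coordination system ensures that computations in all MXEs execute reliably. The protocol enforces compliance through staking and slashing: nodes commit collateral, and any deviation from the agreed execution rules is penalized, which secures the correct completion of each computation.

- Verifiability and privacy: Arcium provides verifiable computation, allowing participants to publicly audit the correctness of results and increasing the transparency and reliability of data processing.

- On-chain coordination: the network uses Solana to manage node scheduling, compensation and performance incentives. Staking, slashing and other incentive mechanisms run entirely on-chain, which keeps the system decentralized and fair.

- Developer-friendly interfaces: Arcium offers two interfaces, a web-based graphical interface for non-specialists and a Solana-compatible SDK for developers building custom applications. Confidential computing thus becomes convenient for ordinary users while still meeting the needs of highly technical developers.

- Multi-chain compatibility: although it starts on Solana, the Arcium network was designed with multi-chain compatibility in mind and can support access from other blockchain platforms.

With these features, the Arcium network aims to redefine how sensitive data is processed and shared in trustless environments and to drive wider adoption of secure multi-party computation (MPC).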

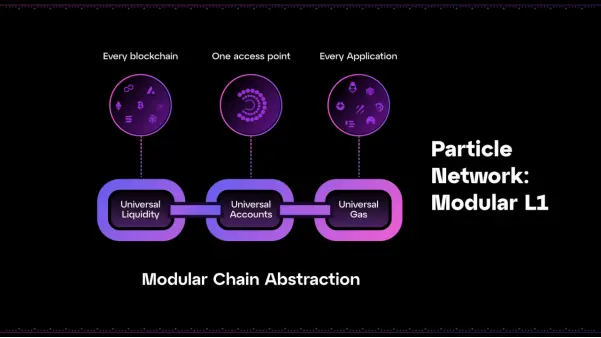

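1.3. What characterizes Particle, the modular L1 chain-abstraction platform led by Cobo and YZI with HashKey following on twice?

Particle Network simplifies the Web3 user experience through wallet abstraction and chain abstraction. With its wallet-abstraction SDK, developers can onboard users into smart accounts in one click via social login.

In addition, Particle Network's chain-abstraction stack, with Universal Accounts as its flagship product, gives users a single account and balance across every chain.

Particle Network's live wallet-abstraction product suite is built from three key technologies:

- User Onboarding

A simplified sign-up flow makes it easier for users to enter the Web3 ecosystem and improves the user experience.

- Account Abstraction

With account abstraction, a user's assets and actions no longer depend on a single chain, which improves flexibility and makes cross-chain operation more convenient.

- Upcoming product: Chain Abstraction

Chain abstraction will further strengthen cross-chain capability, letting users operate and manage assets seamlessly across multiple blockchains with a unified on-chain account experience.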

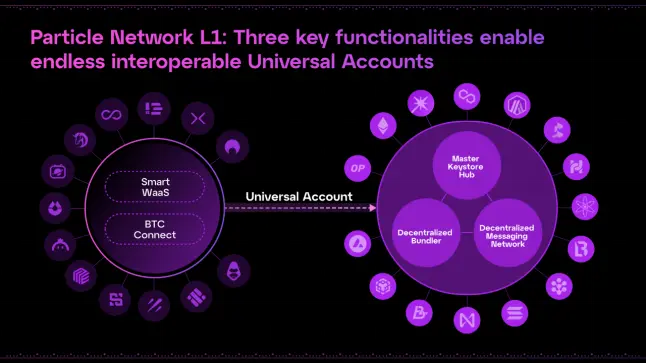

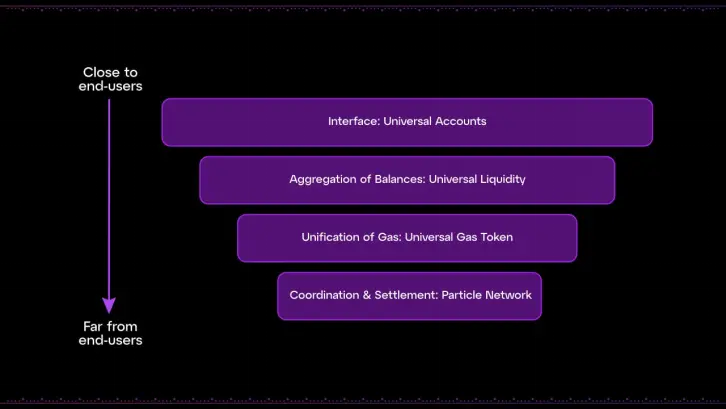

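Architecture

Particle Network coordinates and completes cross-chain transactions in a high-performance EVM execution environment through Universal Accounts and three core functions:

- Universal Accounts

Provide a unified account state and balance, so that a user's assets and actions on all chains are managed through a single account.

- Universal Liquidity

Uses cross-chain liquidity pools to ensure funds can be moved and used seamlessly between chains.

- Universal Gas

Simplifies the user's experience by automatically handling the gas fees required for cross-chain transactions.

Together these three functions let Particle Network unify interactions across all chains and move funds between chains automatically through atomic cross-chain transactions, so users reach their goal without manual intervention.

Universal Accounts

Particle Network's Universal Accounts aggregate token balances across all chains, so that users can draw on assets from every chain in a dApp on any chain, as if using a single wallet.

Universal Accounts achieve this through **Universal Liquidity**. They can be understood as specialized smart-account implementations deployed and coordinated on all chains. Users simply connect a wallet to create and manage a Universal Account, and the system automatically assigns that wallet administrative rights. The connected wallet can be generated through social login with Particle Network's **Modular Smart Wallet-as-a-Service**, or it can be an ordinary Web3 wallet such as MetaMask, UniSat or Keplr.

Developers can integrate Universal Accounts into their own dApps by implementing Particle Network's universal SDK, enabling cross-chain asset management and operations.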

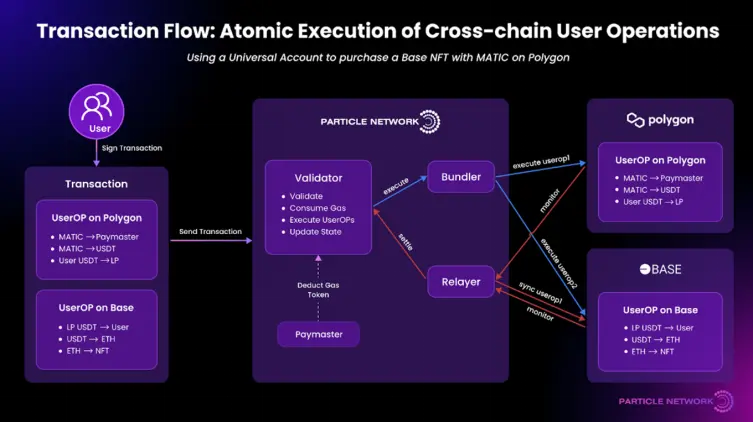

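Universal Liquidity

Universal Liquidity is the technical architecture that supports aggregating balances across all chains. At its core are atomic cross-chain transactions and swaps coordinated by Particle Network. These atomic transaction sequences are driven by Bundler nodes, which execute UserOperations and complete the action on the target chain.

Universal Liquidity relies on a network of Liquidity Providers (also called fillers), who move intermediary tokens such as USDC and USDT between chains through token pools. These providers ensure that assets can flow smoothly across chains.

As an example, suppose a user wants to buy an NFT priced in ETH on Base using USDC. In that scenario:

- Particle Network aggregates the user's USDC balances across several chains.

- The user pays for the NFT with these assets.

- After the transaction is confirmed, Particle Network automatically swaps the USDC to ETH and buys the NFT.

These additional on-chain operations take only a few seconds and happen behind the scenes, so the user does not need to intervene. In this way Particle Network simplifies the management of cross-chain assets and makes cross-chain transactions seamless and automated.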
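The balance-aggregation step in the example above can be illustrated with a short sketch. It is a hypothetical planner, not Particle Network's routing logic: the function name `plan_funding`, the rule of spending target-chain funds first and then the largest balances, and the sample chain names are all assumptions.

```python
from decimal import Decimal


def plan_funding(balances, target_chain, amount):
    """Decide how much USDC to draw from each chain to cover `amount`.

    Assumed policy: use funds already on the target chain first,
    then draw from the remaining chains in order of largest balance.
    """
    total = sum(balances.values(), Decimal(0))
    if total < amount:
        raise ValueError("aggregated balance is insufficient")

    plan = {}
    remaining = amount
    order = sorted(balances, key=lambda chain: (chain != target_chain, -balances[chain]))
    for chain in order:
        if remaining == 0:
            break
        take = min(balances[chain], remaining)
        if take > 0:
            plan[chain] = take
            remaining -= take
    return plan


balances = {
    "base": Decimal("40"),
    "arbitrum": Decimal("100"),
    "polygon": Decimal("25"),
}
# A 120 USDC purchase on Base uses the 40 already there plus 80 from Arbitrum.
print(plan_funding(balances, "base", Decimal("120")))
```

In a real system each non-target entry in the plan would correspond to a transfer filled by a liquidity provider, followed by the USDC-to-ETH swap; those steps are out of scope here.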

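Universal Gas

By unifying balances across chains through Universal Liquidity, Particle Network also addresses the fragmentation of gas tokens.

Previously, users had to hold the gas token of each chain, often in different wallets, to pay fees on that chain, which was a significant barrier. To solve this, Particle Network uses its native Paymaster, which lets users pay gas with any token on any chain. These transactions are ultimately settled on Particle Network's L1 in the chain's native token (PARTI).

Users do not need to hold PARTI to use a Universal Account, because their gas tokens are swapped automatically and used for settlement. Cross-chain operations and payments become simpler, and users no longer have to manage multiple gas tokens.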

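Comments

Strengths:

- Unified cross-chain asset management: Universal Accounts and Universal Liquidity let users manage and use assets across chains without worrying about fragmented holdings or the complexity of bridging.

- Simpler user experience: social login and the modular Smart Wallet-as-a-Service lower the barrier to entering Web3.

- Automated cross-chain transactions: atomic cross-chain transactions and Universal Gas make the conversion and payment of assets and gas tokens seamless and more convenient for users.

- Developer-friendly: with Particle Network's universal SDK, developers can add cross-chain functionality to their dApps with less integration complexity.

Weaknesses:

- Dependence on liquidity providers: the providers that move tokens such as USDC and USDT across chains must participate broadly for liquidity to stay smooth. If pools are thin or participation is low, transactions may be affected.

- Centralization risk: Particle Network relies to some extent on its native Paymaster for gas payment and settlement, which may introduce centralization risk and dependency.

- Compatibility and adoption: although several wallets are supported (MetaMask, Keplr and others), compatibility across chains and wallets may remain a significant user-experience challenge, especially for smaller chains or wallet providers.

Overall, Particle Network greatly improves user experience and developer efficiency by simplifying cross-chain operations and payments, but it faces challenges around liquidity and centralized control.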

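2. Project of the week in detail

2.1. Walrus, the a16z-led decentralized storage solution that raised a record 140 million US dollars this month

Introduction

Walrus is a new approach to decentralized storage of large data blobs. It combines a fast, linearly decodable erasure code that scales to hundreds of storage nodes, achieving very high resilience at low storage overhead, with the Sui blockchain as a control plane. Sui manages everything from the lifecycle of storage nodes and of blobs to economics and incentives, which removes the need for a full custom blockchain protocol.

At the core of Walrus is a new encoding protocol called Red Stuff, which uses a novel two-dimensional (2D) encoding algorithm based on fountain codes. Unlike Reed-Solomon (RS) codes, fountain codes rely mainly on XOR or other very fast operations over large data blocks and avoid heavy arithmetic. This simplicity allows large files to be encoded in a single pass, which speeds up processing considerably. Red Stuff's 2D encoding makes it possible to recover lost slivers using bandwidth proportional to the amount of data lost. Red Stuff also incorporates authenticated data structures to defend against malicious clients and keep stored and retrieved data consistent.

Walrus operates in epochs, each managed by a committee of storage nodes. All operations within an epoch can be sharded by blobid, which gives high scalability. To write a blob, the system encodes the data into primary and secondary slivers, generates Merkle commitments and distributes the slivers to storage nodes. Reading involves collecting and verifying slivers, and the system provides both a best-effort path and an incentivized path to cope with potential failures. To keep blobs available for reading and writing without interruption while handling the participant churn that naturally occurs in a permissionless system, Walrus includes an efficient committee-reconfiguration protocol.

Another key innovation in Walrus is its approach to proofs of storage, the mechanism for verifying that storage nodes really hold the data they claim to hold. Walrus addresses the scalability problem of such proofs by incentivizing all storage nodes to hold slivers of all stored files. This full replication enables a new proof mechanism that challenges a storage node as a whole rather than challenging each file separately. As a result, the cost of proving storage grows logarithmically with the number of stored files, rather than linearly as in many existing systems.

Finally, Walrus introduces a staking-based economic model that combines rewards and penalties to align incentives and enforce long-term commitments. The system includes a pricing mechanism for storage resources and write operations, along with a token-governance model for tuning parameters.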

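Technical analysis

The Red Stuff encoding protocol

Existing encoding protocols achieve a low overhead factor and very strong guarantees, but they are still unsuitable for long-term deployment. The main difficulty is that in a large, long-running system storage nodes regularly fail, lose their slivers and must be replaced. In a permissionless system, moreover, nodes churn naturally even when they have sufficient incentive to participate.

In both cases a large amount of data, equal to the total amount stored, must be transferred over the network to restore the lost slivers for new storage nodes. That is extremely expensive. The team therefore wants the cost of recovery under churn to be proportional only to the data that actually needs recovering, and to shrink inversely as the number of storage nodes (n) grows.

To achieve this, Red Stuff encodes blobs in two dimensions. The primary dimension is equivalent to the RS encoding used in earlier systems. To make sliver recovery efficient, Walrus additionally encodes along a secondary dimension. Red Stuff is based on linear erasure codes and the Twin-code framework, which provides erasure-coded storage with efficient recovery in a crash-fault setting with trusted writers. The team adapted this framework to the Byzantine-fault-tolerant setting and optimized it for a single cluster of storage nodes, as described below.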

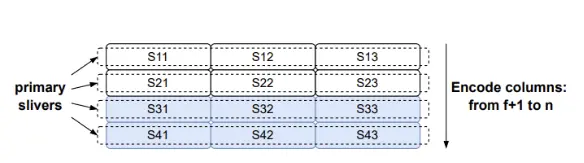

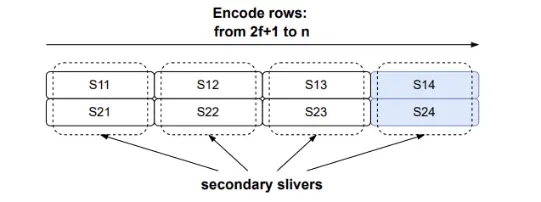

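- Encoding

The starting point is to split the blob into f + 1 slivers. Rather than only encoding repair slivers, the protocol first adds another dimension during the split:

(a) Primary encoding in two dimensions. The file is split into 2f + 1 columns and f + 1 rows. Each column is encoded as a separate blob with 2f repair symbols. Each extended row is then the primary sliver of the corresponding node.

(b) Secondary encoding in two dimensions. The file is split into 2f + 1 columns and f + 1 rows. Each row is encoded as a separate blob with f repair symbols. Each extended column is then the secondary sliver of the corresponding node.

Figure 2: 2D Encoding / Red Stuff

The original blob is split into f + 1 primary slivers (vertical in the figure) and 2f + 1 secondary slivers (horizontal in the figure), as Figure 2 shows. In the end the file is split into (f + 1)(2f + 1) symbols, which can be visualized as an [f + 1, 2f + 1] matrix.

Given this matrix, repair symbols are generated in both dimensions. Each of the 2f + 1 columns (of size f + 1) is extended to n symbols, so that the matrix has n rows. Each row is assigned as one node's primary sliver (see Figure 2a). This nearly triples the amount of data that has to be sent. To allow efficient recovery of every sliver, the initial [f + 1, 2f + 1] matrix is also extended along its rows, each from 2f + 1 symbols to n symbols (see Figure 2b), using the same coding scheme. This creates n columns, each assigned as the corresponding node's secondary sliver.

For each sliver (primary and secondary), the writer W also computes a commitment over its symbols. For a primary sliver the commitment covers all symbols in the extended row, and for a secondary sliver it covers all values in the extended column. As a final step, the client creates a commitment over the list of these sliver commitments, which serves as the blob commitment.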
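The dimensions described above follow directly from n = 3f + 1. The helper below is a small sketch that only does this bookkeeping (it performs no actual erasure coding); the function name `red_stuff_shape` and the dictionary keys are made up for illustration.

```python
def red_stuff_shape(f):
    """Return the matrix dimensions of the 2D encoding for fault bound f."""
    n = 3 * f + 1                   # number of storage nodes
    rows, cols = f + 1, 2 * f + 1   # source matrix is [f + 1, 2f + 1]
    return {
        "n": n,
        "source_symbols": rows * cols,
        # Primary encoding: each column of f + 1 symbols is extended to n.
        "primary_repair_per_column": n - rows,    # equals 2f
        "primary_sliver_len": cols,               # one extended row
        "primary_total_symbols": n * cols,
        # Secondary encoding: each row of 2f + 1 symbols is extended to n.
        "secondary_repair_per_row": n - cols,     # equals f
        "secondary_sliver_len": rows,             # one extended column
        "secondary_total_symbols": rows * n,
    }


shape = red_stuff_shape(1)
# With f = 1: 4 nodes, a 2 x 3 source matrix, 2 repair symbols per column
# in the primary dimension and 1 repair symbol per row in the secondary one.
print(shape)
```

The ratio `primary_total_symbols / source_symbols` equals (3f + 1)/(f + 1), which approaches 3 as f grows; this is the "nearly triples" remark in the text.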

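- Write protocol

Red Stuff's write protocol follows the same pattern as the RS-encoding protocol. The writer W first encodes the blob and creates one sliver pair for each node. Sliver pair i is the pairing of the i-th primary and secondary slivers. There are n = 3f + 1 sliver pairs in total, equal to the number of nodes.

W then sends the commitments of all slivers to every node, together with that node's sliver pair. Each node checks that the slivers in its pair are consistent with the commitments, recomputes the blob commitment and replies with a signed acknowledgment. Once 2f + 1 signatures are collected, W generates a certificate and publishes it on-chain to attest that the blob will be available.

In the theoretical asynchronous network model, reliable delivery is assumed, so all correct nodes eventually receive a sliver pair from an honest writer. In a practical protocol, however, the writer may need to stop retransmitting. It is safe to stop after collecting 2f + 1 signatures, because this guarantees that at least f + 1 correct nodes (among the 2f + 1 that responded) hold a sliver pair for the blob.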
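The quorum argument is simple counting: of any 2f + 1 distinct responders at most f can be faulty, so at least f + 1 are correct. A minimal sketch follows; the function names and the dictionary-shaped certificate are hypothetical, and signature verification is omitted.

```python
def quorum_size(f):
    """Number of signed acknowledgments the writer waits for."""
    return 2 * f + 1


def min_correct_holders(f):
    """Worst case: f of the responders are Byzantine, the rest are correct."""
    return quorum_size(f) - f  # equals f + 1


def try_certify(blob_id, acks, f):
    """Build a write certificate once enough distinct nodes have acknowledged.

    `acks` is an iterable of node ids; duplicates from the same node
    are counted only once. Returns None while the quorum is not reached.
    """
    signers = frozenset(acks)
    if len(signers) < quorum_size(f):
        return None
    return {"blob_id": blob_id, "signers": signers}
```

With f = 1 (four nodes), two acknowledgments, or three that include a duplicate, are not enough; three distinct acknowledgments produce a certificate and guarantee at least two correct holders.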

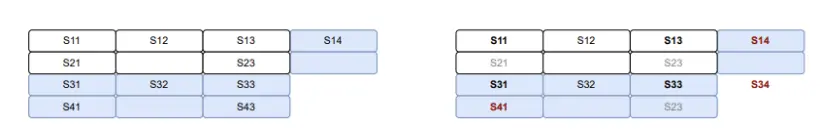

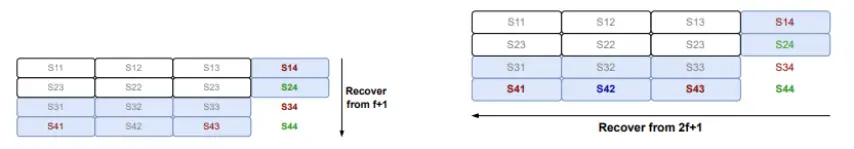

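(a) Nodes 1 and 3 together hold two rows and two columns.

In this situation, nodes 1 and 3 each hold one row and one column of the encoded file. The 2D encoding assigns every node a different row and column, so the data is distributed and stored redundantly across nodes for availability and fault tolerance.

(b) Each node sends node 4 the intersection of its row/column with node 4's column/row (red). Node 3 needs to encode its row.

In this step, nodes 1 and 3 send node 4 the symbols where their rows and columns intersect node 4's column and row. Node 3 must first encode the row it holds in order to obtain the symbol at the intersection with node 4's sliver. Node 4 thereby receives the symbols it needs to begin recovery and verification, so data can be restored from the remaining nodes even when some nodes have failed.

(c) Node 4 uses the f + 1 symbols on its column to recover its full secondary sliver (green). Node 4 then sends the recovered column intersections to the rows of the other recovering nodes.

In this step, node 4 recovers its complete secondary sliver from the f + 1 symbols on its column. Because recovery uses only intersection symbols, it is efficient. Once node 4 has recovered its secondary sliver, it sends the recovered intersection symbols to the other nodes that are still recovering, helping them rebuild their rows. Cooperation among nodes in this way speeds up recovery.

(d) Node 4 uses the f + 1 symbols on its row, together with all recovered secondary symbols sent by other honest recovering nodes (green), which should number at least 2f plus the one recovered in the previous step, to recover its primary sliver (dark blue).

At this stage, node 4 uses the f + 1 symbols on its row and also the secondary symbols sent by the other honest recovering nodes to rebuild its primary sliver. To ensure a correct result, node 4 obtains at least 2f + 1 valid secondary symbols (including the one recovered in the previous step). Combining data from several sources in this way strengthens fault tolerance and recoverability.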

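- Read protocol

The read protocol is the same as for RS encoding, and nodes need only use their primary slivers. The reader R first asks any node for the blob's commitment set and uses the commitment opening protocol to check that the returned set matches the requested blob commitment. R then asks all nodes to read that blob commitment, and they respond with the primary slivers they hold (possibly gradually, to save bandwidth). Each response is checked against the corresponding commitment in the blob's commitment set.

Once R has collected f + 1 correct primary slivers, it decodes the blob, re-encodes it, recomputes the blob commitment and compares it with the requested blob commitment. If the two match (that is, they equal the commitment W published on-chain), R outputs blob B. Otherwise R outputs an error indicating that the blob cannot be recovered.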
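The decode, re-encode and compare step can be demonstrated with a toy code. The sketch below is not Red Stuff: it swaps in a one-dimensional Reed-Solomon-style code over the small prime field GF(257), treats each sliver as a single symbol, and uses one SHA-256 hash as the blob commitment instead of per-sliver Merkle commitments. All names (`encode`, `commit`, `read_blob`) are hypothetical.

```python
import hashlib

P = 257  # small prime field, chosen only to keep the toy example readable


def _interpolate(points, x):
    """Evaluate at x the unique polynomial through `points` (Lagrange, mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data, n):
    """Systematic encoding: symbol i of `data` is the evaluation at x = i."""
    points = list(enumerate(data))
    return [_interpolate(points, x) for x in range(n)]


def commit(symbols):
    """Toy blob commitment: a hash over all encoded symbols."""
    return hashlib.sha256(",".join(map(str, symbols)).encode()).hexdigest()


def read_blob(slivers, k, n, blob_commitment):
    """Reader: decode from k slivers, re-encode, and compare commitments.

    `slivers` maps node index -> symbol. Returns the data, or None on failure.
    """
    if len(slivers) < k:
        return None
    points = sorted(slivers.items())[:k]
    data = [_interpolate(points, x) for x in range(k)]
    if commit(encode(data, n)) != blob_commitment:
        return None  # inconsistent encoding or tampered sliver
    return data


# f = 2, so k = f + 1 = 3 source symbols and n = 3f + 1 = 7 nodes.
data = [10, 20, 30]
encoded = encode(data, 7)
onchain = commit(encoded)
print(read_blob({4: encoded[4], 5: encoded[5], 6: encoded[6]}, 3, 7, onchain))
```

If any of the three slivers is altered, the decoded polynomial differs, the recomputed commitment no longer matches, and `read_blob` returns None, which mirrors the error output described above.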

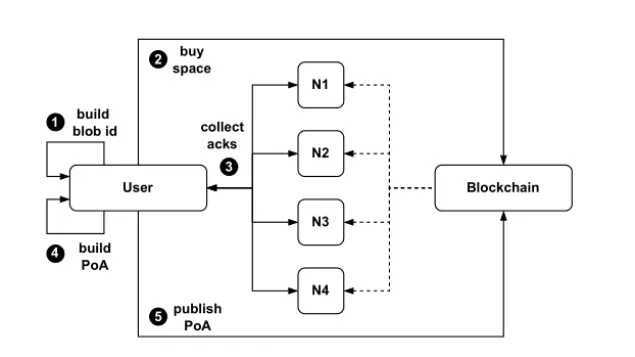

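Walrus: decentralized secure blob storage

- Writing a blob

The process of writing a blob to Walrus is illustrated in Figure 4.

It begins with the writer (➊) encoding the blob with Red Stuff, as shown in Figure 2. This produces the sliver pairs, a set of commitments to the slivers and a blob commitment. The writer derives a blobid by hashing the blob commitment together with metadata such as the file length and the encoding type.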
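As a rough sketch of that derivation, the function below hashes a blob commitment with the length and encoding type. The choice of SHA-256, the byte layout and the `"RedStuff"` label are assumptions made for illustration; the text does not specify the actual hash function or field order used by Walrus.

```python
import hashlib


def derive_blob_id(blob_commitment, length, encoding_type):
    """Derive an identifier from the blob commitment plus metadata.

    Assumed layout: commitment bytes, then the length as an 8-byte
    big-endian integer, then the encoding type as UTF-8 text.
    """
    h = hashlib.sha256()
    h.update(blob_commitment)
    h.update(length.to_bytes(8, "big"))
    h.update(encoding_type.encode("utf-8"))
    return h.hexdigest()


commitment = hashlib.sha256(b"example sliver commitments").digest()
blob_id = derive_blob_id(commitment, 1024, "RedStuff")
```

Because the identifier depends only on the commitment and metadata, anyone who sees the registration transaction, which carries the blob size and blob commitment, can re-derive the same blobid.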

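Next, the writer (➋) submits a transaction to the blockchain to secure sufficient storage space for the blob over a series of epochs and to register the blob. The transaction carries the blob's size and the blob commitment, from which the blobid can be re-derived. The smart contract must ensure that there is enough space to store the encoded slivers on every node, plus all metadata associated with the blob commitment. A payment may be sent with the transaction to secure free space, or free space may be supplied as an additional resource used with the request. The Walrus implementation allows both options.

Once the registration transaction is committed (➌), the writer notifies the storage nodes that they are responsible for storing the slivers for that blobid. It sends each storage node the transaction, the commitments and the primary and secondary slivers assigned to it, along with a proof that the slivers are consistent with the published blobid. The storage nodes verify the commitments and, after successfully storing the commitments and sliver pair, return a signed acknowledgment for the blobid.

Finally, the writer waits until it has collected 2f + 1 signed acknowledgments (➍), which together form a write certificate. The certificate is then published on-chain (➎), which marks the blob's Point of Availability (PoA) in Walrus. The PoA means that storage nodes are obliged to keep the slivers available for reads during the specified epochs. From this point the writer can delete the blob from local storage and go offline. The writer can also use the PoA as evidence of the blob's availability for third-party users and smart contracts.

Nodes listen for blockchain events to see whether a blob has reached its PoA. If a node does not hold the sliver pair for that blob, it runs the recovery process to obtain the commitments and sliver pairs of all blobs up to the PoA point in time. This ensures that all correct nodes eventually hold sliver pairs for all blobs.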

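Summary

In summary, the contributions of Walrus are:

- It defines the problem of Asynchronous Complete Data Sharing and proposes Red Stuff, the first protocol to solve it efficiently under Byzantine faults.

- It presents Walrus, the first permissionless decentralized storage protocol designed for low replication cost that can efficiently recover data lost through faults or participant churn.

- It introduces a staking-based economic model that combines rewards and penalties to align incentives and enforce long-term commitments, and it proposes the first asynchronous challenge protocol for efficient proofs of storage.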

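III. Industry data analysis

1. Overall market performance

1.1 Spot BTC & ETH ETFs

From March 24 to March 29, 2025, flows into Bitcoin (BTC) and Ethereum (ETH) ETFs moved in different directions:

Bitcoin ETFs:

- March 24, 2025: Bitcoin ETFs saw net inflows of 84.2 million US dollars, the seventh consecutive day of inflows, bringing the cumulative total to 869.8 million US dollars.

- March 25, 2025: Bitcoin ETFs recorded a further 26.8 million US dollars of net inflows, lifting the eight-day cumulative total to 896.6 million US dollars.

- March 26, 2025: Bitcoin ETFs kept growing with net inflows of 89.6 million US dollars, the ninth consecutive day of inflows, for a cumulative total of 986.2 million US dollars.

- March 27, 2025: Bitcoin ETFs had net inflows of 89 million US dollars, maintaining the positive trend.

- March 28, 2025: Bitcoin ETFs again recorded net inflows of 89 million US dollars, extending the run of inflows.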
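The cumulative totals quoted for March 24 to 26 can be cross-checked with a running sum. The snippet is a small sketch using only the figures stated above (in millions of US dollars); `running_totals` is a made-up helper name, and `Decimal` is used to avoid floating-point rounding.

```python
from decimal import Decimal


def running_totals(start, flows):
    """Return the cumulative total after each daily flow, beginning from `start`."""
    totals = []
    total = start
    for flow in flows:
        total += flow
        totals.append(total)
    return totals


# Cumulative inflow after March 24, then the March 25 and March 26 inflows.
totals = running_totals(Decimal("869.8"), [Decimal("26.8"), Decimal("89.6")])
print(totals)  # [Decimal('896.6'), Decimal('986.2')]
```

Both results agree with the eight-day and nine-day totals reported in the list.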

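Ethereum ETFs:

- March 24, 2025: Ethereum ETFs had net flows of 0 US dollars, ending 13 consecutive days of outflows.

- March 25, 2025: Ethereum ETFs saw net outflows of 3.3 million US dollars, the first outflow as the trend resumed.

- March 26, 2025: Ethereum ETFs faced a further 5.9 million US dollars of net outflows as investor sentiment stayed cautious.

- March 27, 2025: Ethereum ETFs had net outflows of 4.2 million US dollars, showing that market fear persisted.

- March 28, 2025: Ethereum ETFs again recorded net outflows of 4.2 million US dollars, continuing the outflow trend.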


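1.2. Spot BTC vs ETH price action

BTC

Analysis

After failing to break the upper boundary of its wedge near 89,000 US dollars last week, BTC turned lower as expected. This week there are three key support levels to watch: first-line support at 81,400 US dollars, second-line support at the round number of 80,000 US dollars, and the year-to-date low of 76,600 US dollars as the floor. For those waiting to enter, each of these three levels can be treated as a reasonable point for scaling in.

ETH

Analysis

After failing to hold above 2,000 US dollars, ETH has pulled back close to its year-to-date low around 1,760 US dollars, and its next move depends almost entirely on BTC. If BTC holds the 80,000 US dollar level and rebounds, ETH will most likely form a double bottom above 1,760 US dollars, with first resistance at 2,300 US dollars. If instead BTC breaks below 80,000 US dollars again and looks for support at 76,600 US dollars or lower, ETH will most likely head toward first-line support at 1,700 US dollars, or even second-line support at 1,500 US dollars.

1.3. Fear & Greed Index

2. Public chain data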

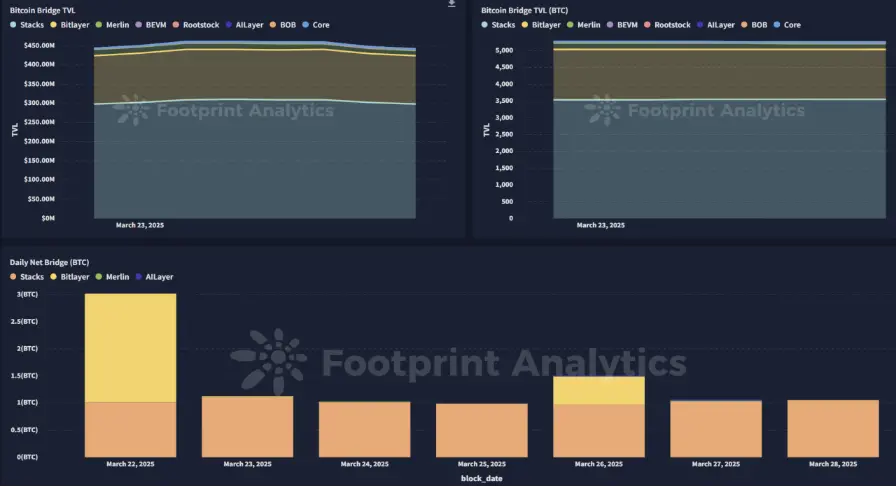

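2.1. BTC Layer 2 Summary

Analysis

From March 24 to March 28, 2025, the Bitcoin Layer-2 (L2) ecosystem saw several notable developments:

Stacks raises its sBTC deposit cap: Stacks announced the completion of the sBTC cap-2 expansion, raising the deposit cap by 2,000 BTC to a total capacity of 3,000 BTC (about 250 million US dollars). The increase is intended to deepen liquidity and support growing demand for Bitcoin-backed DeFi applications on Stacks.

Citrea's testnet milestone: Citrea, a Bitcoin L2, reported that transactions on its testnet have passed 10 million. The project also updated its Clementine design, simplifying the zero-knowledge proof (ZKP) verifier and strengthening security, laying groundwork for scaling Bitcoin transactions.

BOB enables its BitVM bridge: BOB (Build on Bitcoin) enabled a BitVM bridge on testnet, allowing users to mint BTC into Yield BTC with minimal trust assumptions. This improves interoperability between Bitcoin and other blockchain networks and enables more complex transactions without compromising security.

Bitlayer launches its BitVM bridge: Bitlayer released a BitVM bridge that lets users mint BTC into Yield BTC with minimal trust assumptions. This improves the scalability and flexibility of Bitcoin transactions and supports the growth of DeFi applications within the Bitcoin ecosystem.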

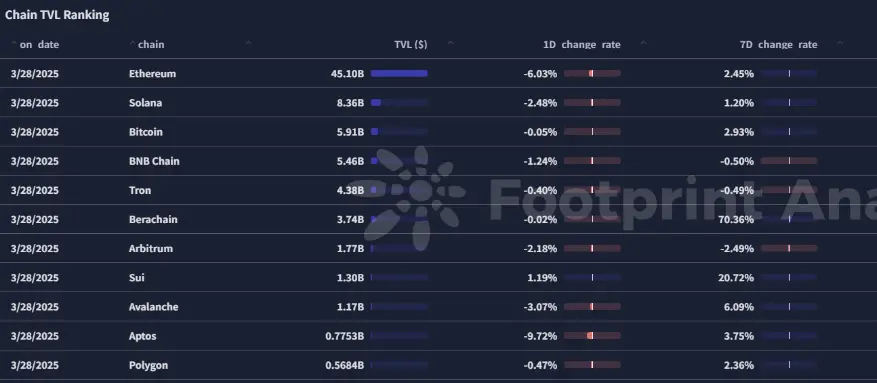

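2.2. EVM & non-EVM Layer 1 Summary

Analysis

EVM-compatible Layer 1 blockchains:

- BNB Chain's 2025 roadmap: BNB Chain published its 2025 vision, which plans to scale to 100 million transactions per day, strengthen security against miner extractable value (MEV) and introduce smart-wallet solutions similar to EIP-7702. The roadmap also emphasizes integrating artificial intelligence (AI) use cases, with a focus on making use of valuable private data and improving developer tooling.

- Polkadot's 2025 plans: Polkadot released its 2025 roadmap, highlighting support for the EVM and Solidity to improve interoperability and scalability. The plan includes a multi-core architecture to increase capacity and an upgrade of cross-chain messaging with XCM v5.

Non-EVM Layer 1 blockchains:

- W Chain mainnet soft launch: W Chain, a Singapore-based hybrid blockchain network, announced that its Layer 1 mainnet has entered a soft-launch phase. Following a successful testnet phase, W Chain introduced the W Chain bridge to improve cross-platform compatibility and interoperability. The commercial mainnet is expected to go live in March 2025, with features such as a decentralized exchange (DEX) and an ambassador program planned.

- N1 confirms investor backing: N1, an ultra-low-latency Layer 1 blockchain, confirmed that its original investors, including Multicoin Capital and Arthur Hayes, will continue to support the project, which is expected to kick off ahead of the mainnet release. N1 aims to give developers unrestricted scalability and ultra-low-latency support for decentralized applications (DApps), and it supports multiple programming languages to simplify development.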

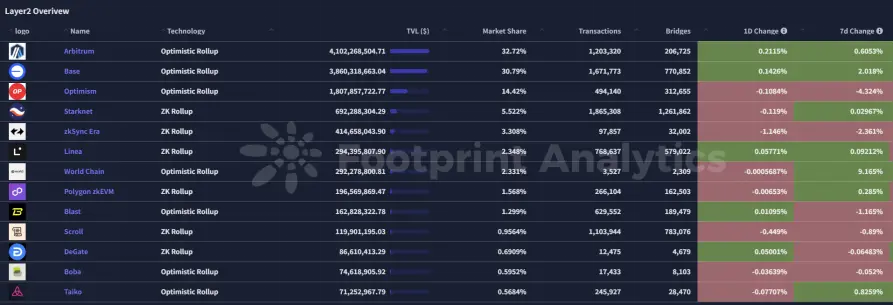

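2.3. EVM Layer 2 Summary

Analysis

Between March 24 and March 29, 2025, the EVM Layer 2 ecosystem saw several notable developments:

- Polygon zkEVM mainnet beta: on March 27, 2025, Polygon launched the mainnet beta of its zkEVM (zero-knowledge Ethereum Virtual Machine). This Layer 2 scaling solution improves Ethereum's scalability by executing computation off-chain, enabling faster and cheaper transactions. Because it is fully compatible with Ethereum's codebase, developers can migrate their Ethereum applications to Polygon's zkEVM seamlessly.

- Telos Foundation's ZK-EVM roadmap: the Telos Foundation published a development roadmap for a ZK-EVM based on SNARKtor. The plan is to deploy a hardware-accelerated zkEVM on the Telos testnet in the fourth quarter of 2024, followed by integration with Ethereum mainnet in the first quarter of 2025. Later phases aim to integrate SNARKtor to improve verification efficiency on Layer 1, with full integration expected by the fourth quarter of 2025.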

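IV. Macro data review and key releases next week

The February core PCE price index, published on March 28, came in at 2.7% year on year (forecast 2.7%, previous 2.6%), above the Federal Reserve's target for a third consecutive month, driven mainly by higher import costs resulting from tariffs.

Key macro data releases this week (March 31 to April 4) include:

April 1: US March ISM manufacturing PMI

April 2: US March ADP employment

April 3: US initial jobless claims for the week ending March 29

April 4: US March unemployment rate; US March seasonally adjusted non-farm payrolls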

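V. Regulatory policy

During the week, the US SEC closed its investigations into Crypto.com and Immutable, Trump pardoned the co-founders of BitMEX, and dedicated stablecoin legislation was formally put on the agenda for debate. The loosening of restrictions on the crypto industry and the move toward compliance-based regulation are both accelerating.

United States: Oklahoma passes strategic Bitcoin reserve bill

The Oklahoma House of Representatives voted to pass a strategic Bitcoin reserve bill. The bill allows the state to invest 10% of public funds in Bitcoin or any digital asset with a market capitalization above 500 billion US dollars.

Separately, the US Department of Justice announced that it had disrupted an ongoing terrorist financing scheme and seized about 201,400 US dollars (at current value) in cryptocurrency held in wallets and accounts intended to fund Hamas. The seized funds came from fundraising addresses allegedly controlled by Hamas, which had been used since October 2024 to launder more than 1.5 million US dollars in virtual currency.

Panama: proposed crypto bill published

Panama published a proposed crypto bill to regulate cryptocurrencies and promote the development of blockchain-based services. The proposal establishes a legal framework for the use of digital assets, sets licensing requirements for service providers and includes strict compliance measures in line with international financial standards. Digital assets are recognized as a lawful means of payment, and individuals and businesses may freely agree to use them in commercial and civil contracts.

European Union: possible 100% capital requirement for crypto assets

According to Cointelegraph, the EU's insurance regulator has proposed a 100% capital requirement for insurers that hold crypto assets, citing their "inherent risks and high volatility".

South Korea: plans to block access to 17 overseas apps including KuCoin

South Korea's Financial Intelligence Unit (FIU) announced that from March 25 it will restrict domestic access to the Google Play apps of 17 overseas virtual asset service providers (VASPs) not registered in South Korea, including KuCoin and MEXC. Users will not be able to install these apps, and existing users will not be able to update them.

Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If an article or image published on this site involves infringement, please send the relevant proof of rights and proof of identity by email to support@aicoin.com, and the platform's staff will review it.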